About the Project

Lately, I’ve seen some horror stories (1, 2) about side projects gone awry, resulting in HUGE cloud bills. As a homelab enthusiast and cloud user, I wanted to write up some notes on how I stay on top of billing to avoid surprise costs. This blog will outline an alerting pipeline I’ve built in my homelab.

Building Notification Pipelines

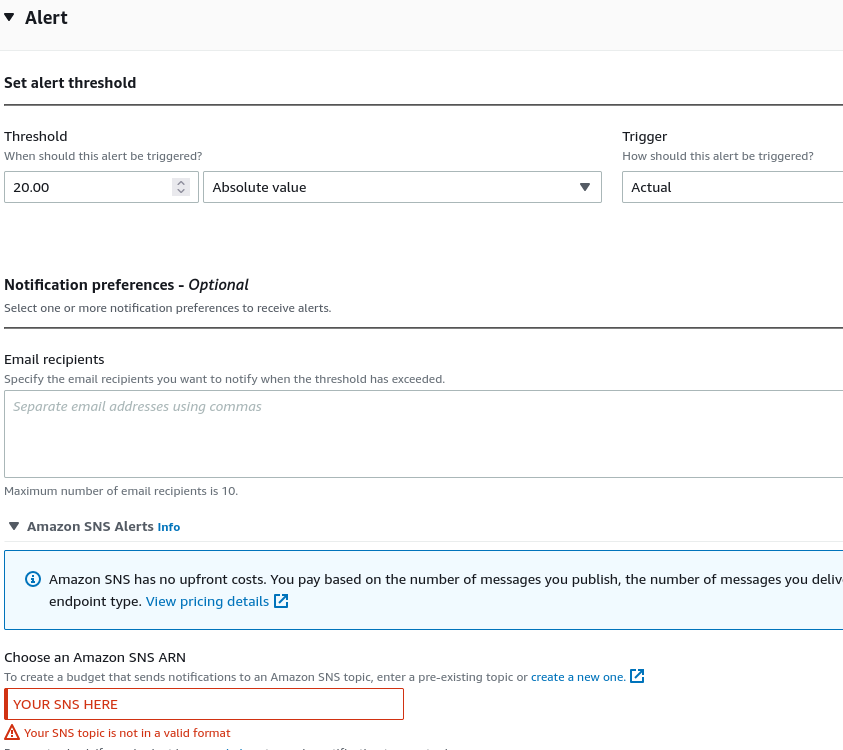

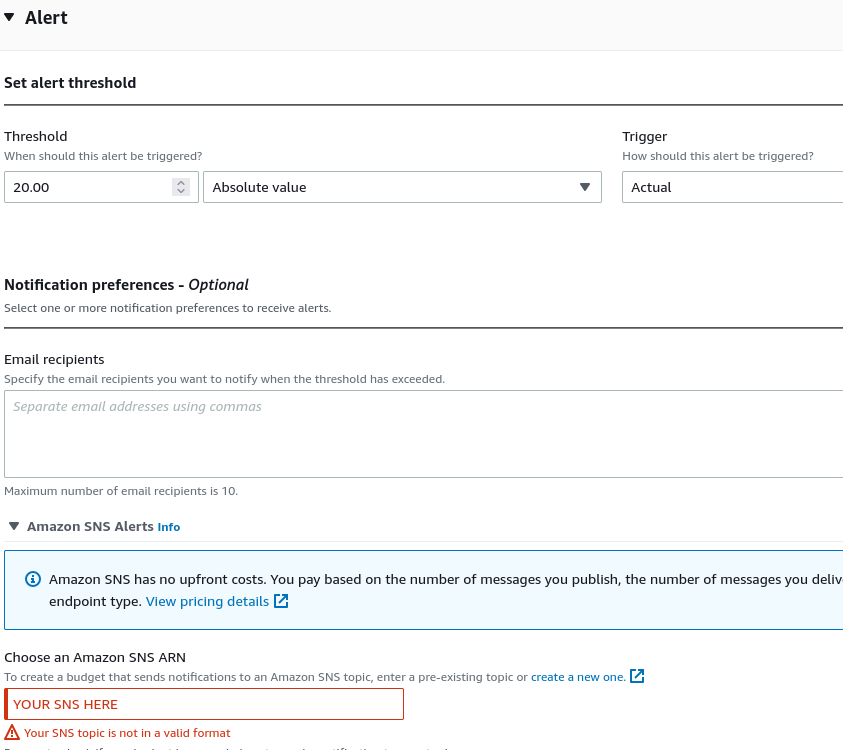

AWS Billing can send e-mail notifications when you’re approaching a self-defined billing threshold. You can specify whether these alarms trigger on forecasted spending or on actual dollar amounts spent. Regardless of the threshold you put in place, you can then list the e-mail addresses to be notified. Beyond simple e-mail notifications, you can also send alerts to an AWS Simple Notification Service (SNS) topic, which can forward them to a Simple Queue Service (SQS) queue that a custom application then consumes.
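If you would rather script the budget than click through the console, here is a minimal sketch of what I believe the equivalent boto3 `create_budget` request looks like. The budget name, the 80% threshold, and the helper name `build_budget_request` are my own placeholders, not anything AWS prescribes.

```python
def build_budget_request(account_id, topic_arn, limit_usd,
                         threshold_pct=80.0, notification_type="ACTUAL"):
    """Build keyword arguments for the AWS Budgets CreateBudget call.

    notification_type is "ACTUAL" (money already spent) or "FORECASTED"
    (projected spend for the period).
    """
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": "homelab-monthly",  # hypothetical name
            "BudgetLimit": {"Amount": str(limit_usd), "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [{
            "Notification": {
                "NotificationType": notification_type,
                "ComparisonOperator": "GREATER_THAN",
                "Threshold": threshold_pct,        # percent of the limit
                "ThresholdType": "PERCENTAGE",
            },
            # SubscriptionType may also be "EMAIL" with an address.
            "Subscribers": [{"SubscriptionType": "SNS", "Address": topic_arn}],
        }],
    }


def create_budget(request):
    """Send the request to AWS. Requires boto3 and valid credentials."""
    import boto3  # imported lazily so the builder has no AWS dependency
    return boto3.client("budgets").create_budget(**request)
```

Calling `create_budget(build_budget_request("<ACCOUNT_ID>", "<TOPIC_ARN>", 20))` would, under these assumptions, alert the topic once actual spend passes 80% of a $20 monthly limit.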

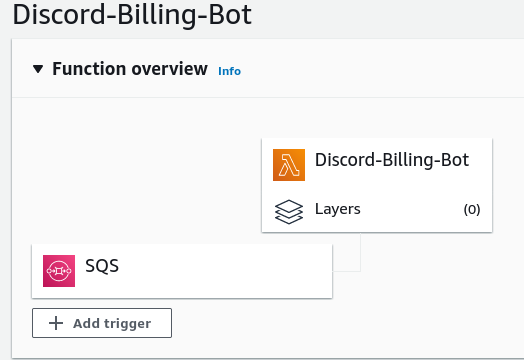

At this point the world’s your oyster for notification management, as long as the service consuming messages from the SQS queue has an appropriate IAM policy to read from it. The easiest implementation of this is a Lambda function. The topology described thus far looks as follows.

You may be thinking, “wait, I’m building services that will cost money to monitor my projects that already cost money?”. Great point. The services I’m describing in this blog have free tiers that are quite generous for the average homelab user. For example, SQS, SNS, and Lambda each include a million free requests in their free tier. Beyond the free tier, the cost of these services is low, but as always, leverage AWS Cost Explorer to understand what these services will do to your total bill.

Building The Pipeline: SNS, SQS and Lambda Permissions

Your SNS topic needs an access policy that allows the AWS Budgets service to publish to it. This is covered by the following AWS article, but it will look something like the content below:

    {
      "Sid": "E.g., AWSBudgetsSNSPublishingPermissions",
      "Effect": "Allow",
      "Principal": {
        "Service": "budgets.amazonaws.com"
      },
      "Action": "SNS:Publish",
      "Resource": "your topic ARN"
    },
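The statement above is only a fragment; a full policy document wraps it in `Version` and `Statement` keys. As a rough sketch, assuming you manage the topic with boto3, the document could be built and applied like this. The helper names are hypothetical, and note that `set_topic_attributes` replaces the whole existing policy, so merge in any statements you want to keep.

```python
import json


def budgets_publish_policy(topic_arn):
    """Return a complete SNS access policy letting AWS Budgets publish."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AWSBudgetsSNSPublishingPermissions",
            "Effect": "Allow",
            "Principal": {"Service": "budgets.amazonaws.com"},
            "Action": "SNS:Publish",
            "Resource": topic_arn,
        }],
    }


def apply_policy(sns_client, topic_arn):
    """Overwrite the topic's access policy.

    sns_client is expected to be a boto3 SNS client,
    e.g. boto3.client("sns").
    """
    sns_client.set_topic_attributes(
        TopicArn=topic_arn,
        AttributeName="Policy",
        AttributeValue=json.dumps(budgets_publish_policy(topic_arn)),
    )
```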

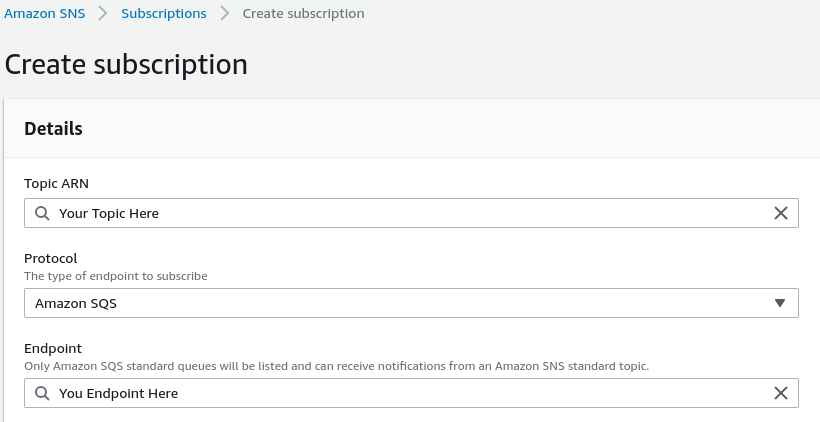

Next, create a subscription within the SNS service to an SQS queue. This creates a pub/sub relationship in which SNS pushes each message to SQS, where Lambda can read it.

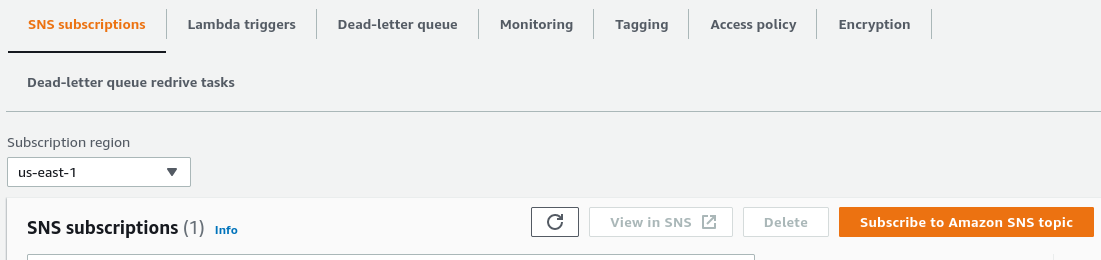

Then, navigate to SQS and subscribe to the billing queue.
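The console sets up permissions for you when you subscribe this way. If you script it instead, the queue needs its own policy allowing the topic to send messages. Below is a minimal sketch of that, assuming boto3 clients are passed in; the function names are placeholders of mine.

```python
import json


def sns_to_sqs_policy(queue_arn, topic_arn):
    """Queue policy that lets one specific SNS topic deliver messages."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "sns.amazonaws.com"},
            "Action": "sqs:SendMessage",
            "Resource": queue_arn,
            # Only accept messages that originate from our billing topic.
            "Condition": {"ArnEquals": {"aws:SourceArn": topic_arn}},
        }],
    }


def subscribe_queue(sns_client, sqs_client, queue_url, queue_arn, topic_arn):
    """Attach the policy to the queue, then subscribe it to the topic."""
    sqs_client.set_queue_attributes(
        QueueUrl=queue_url,
        Attributes={"Policy": json.dumps(sns_to_sqs_policy(queue_arn, topic_arn))},
    )
    return sns_client.subscribe(
        TopicArn=topic_arn, Protocol="sqs", Endpoint=queue_arn
    )
```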

Now that a queue has been subscribed, the final element is to link a Lambda function to the queue. The code for the Lambda function we’ll be using is shown in the next section with the Discord hook integration. For now, just create a Lambda function “from scratch” with a Python runtime. Additionally, create a policy for the role this Lambda function uses that allows reading from an SQS queue. The AWS documentation has a tutorial that says to use the AWSLambdaSQSQueueExecutionRole, but this applies to ALL SQS queues. Consider creating a new policy based on this service role, but limited to the specific SQS resources you need.
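A scoped-down policy might look like the sketch below. The action list reflects my understanding of what the managed AWSLambdaSQSQueueExecutionRole grants, so verify it against the current managed policy before relying on it; `scoped_sqs_policy` is a hypothetical helper name.

```python
def scoped_sqs_policy(queue_arn):
    """IAM policy for a Lambda role, limited to a single SQS queue."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # What the Lambda poller needs to consume the queue.
                "Effect": "Allow",
                "Action": [
                    "sqs:ReceiveMessage",
                    "sqs:DeleteMessage",
                    "sqs:GetQueueAttributes",
                ],
                "Resource": queue_arn,  # one queue instead of "*"
            },
            {
                # CloudWatch Logs access so the function can write logs.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "*",
            },
        ],
    }
```

Passing the result through `json.dumps(..., indent=2)` gives a document you can paste into the IAM policy editor.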

Discord Webhook Notification

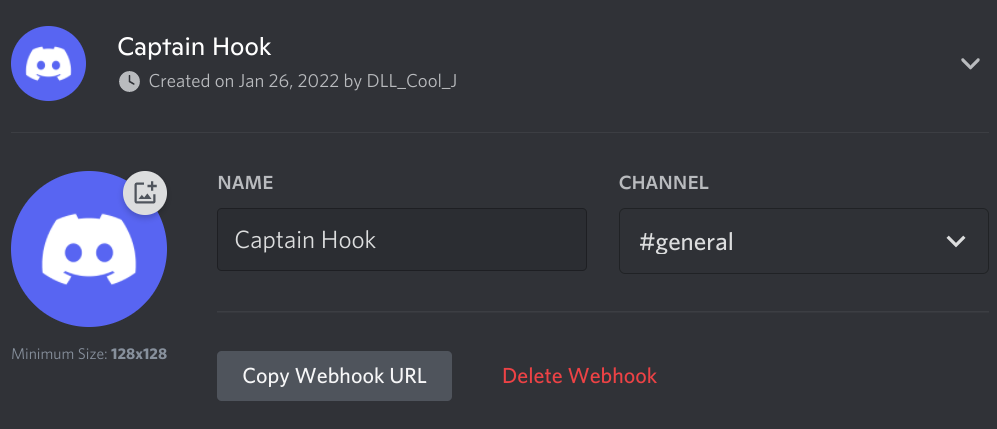

Extending the previous section, we’ll integrate alerting via webhooks and a Discord channel. The best thing about this is that you can specify the message you want to send to the channel. For example, what better way to be alerted that you’re about to spend too much money than a good ol' “@here” in a large Discord channel? Clearly I joke, but it’s also effective. To manage webhooks for your Discord server, perform the following:

- Go to Discord Server Settings

- Integrations

- Webhooks

- Create a new webhook

At this point, we can copy the webhook URL and use this to send arbitrary messages to a channel of your choice.

Test your webhook via the following curl command, substituting the <UID> and <WEBHOOK_TOKEN> values as appropriate.

curl -H "Content-Type: application/json" https://discord.com/api/webhooks/<UID>/<WEBHOOK_TOKEN> -d '{"username": "AWS_BOT", "content": "Test"}'

It’s easy to integrate this URL into Lambda via the following code, but be aware that the token would be stored in plaintext in your Lambda function.

import requests

import json

def lambda_handler(event, context):

webhook_url = "https://discordapp.com/api/webhooks/<UID>/<TOKEN>"

msg= {

'username': AWS BOT',

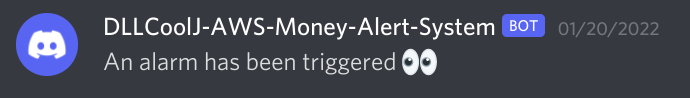

"content": "An alarm has been triggered :eyes:"

}

headers = {'content-type': 'application/json'}

requests.post(webhook_url, data=json.dumps(msg), headers=headers)
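Since `requests` is a third-party package, it has to be shipped with the function. As an alternative sketch, the same POST can be made with only the standard library. The explicit `User-Agent` value is an assumption on my part (some services reject the default urllib agent), and the function names are placeholders.

```python
import json
import urllib.request


def build_webhook_request(webhook_url, content, username="AWS BOT"):
    """Build a POST request carrying a Discord webhook message."""
    body = json.dumps({"username": username, "content": content}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Hypothetical agent string; pick anything descriptive.
            "User-Agent": "homelab-billing-alert/1.0",
        },
        method="POST",
    )


def send(request, timeout=10):
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Inside a handler this would be called as `send(build_webhook_request(webhook_url, "An alarm has been triggered :eyes:"))`.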

A glaring issue with the above example is that the webhook token is stored insecurely. You’ll likely want to give your Lambda function’s IAM role permission to use AWS Secrets Manager so it can fetch and decrypt the token at runtime. Be aware that leveraging yet another service for these lookups does incur costs, since AWS Secrets Manager does not have a free tier. An awesome feature of AWS Secrets Manager is that after you complete the form describing how you’d like to store the secret, sample code for Java, Python, Ruby, Go, and C# is auto-generated so you can easily integrate it into your project.
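For reference, the lookup can be quite small. This is a sketch rather than the code AWS generates: it assumes the secret was stored as key/value JSON with a key named `webhook_url` under the hypothetical secret ID `homelab/discord-webhook`, and it caches the value so warm invocations do not pay for repeated lookups.

```python
import json

# Cache survives between invocations while the Lambda container stays warm.
_cache = {}


def get_webhook_url(secrets_client, secret_id="homelab/discord-webhook"):
    """Fetch the Discord webhook URL from AWS Secrets Manager.

    secrets_client is expected to be boto3.client("secretsmanager").
    """
    if secret_id not in _cache:
        response = secrets_client.get_secret_value(SecretId=secret_id)
        # Key/value secrets come back as a JSON string in "SecretString".
        _cache[secret_id] = json.loads(response["SecretString"])["webhook_url"]
    return _cache[secret_id]
```

The function’s role needs `secretsmanager:GetSecretValue` on that one secret for this to work.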

The complete workflow of the notification pipeline for a Discord webhook is as follows.

To test this functionality, head back to the SQS page and select “send and receive messages”. This lets us trigger our Lambda function and confirm that it actually fires our Discord webhook. The result should look something like the below.
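The handler shown earlier ignores the incoming event and always posts the same text. If you want the Discord message to carry the actual alert, here is a sketch of pulling the text out of the SQS event. It assumes the default (non-raw) SNS delivery, where each SQS body is a JSON envelope with a `Message` field, and falls back to the raw body for messages typed directly into the SQS console. `extract_messages` is a name I made up.

```python
import json


def extract_messages(event):
    """Return the alert text from each record in a Lambda SQS event."""
    messages = []
    for record in event.get("Records", []):
        body = record.get("body", "")
        try:
            envelope = json.loads(body)
        except json.JSONDecodeError:
            envelope = None  # plain-text body, e.g. a manual test message
        if isinstance(envelope, dict) and "Message" in envelope:
            # Delivered via SNS: the real payload sits under "Message".
            messages.append(envelope["Message"])
        else:
            messages.append(body)
    return messages
```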

SMS Notifications

Alright, so you have your Discord notification, but what if that’s not enough? Let’s go ahead and expand into SMS notifications. SNS supports SMS and push notifications. With this, we can send notifications to our phones, giving us three separate avenues of alerting. However, this requires setting up an origination identity (a phone number) via yet another service, Pinpoint. You could also consider using Twilio for SMS notifications, as they have a free $15 trial package, and deploying a container from ECR as a Lambda function that in turn leverages the Twilio APIs. This is a bit out of scope for the current blog post, but may appear in a later one.
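Once an origination identity is in place, the SNS side is a single call. A minimal sketch, assuming a boto3 SNS client and a phone number in E.164 format; the `send_sms` wrapper and its validation are my own additions.

```python
def send_sms(sns_client, phone_number, text):
    """Publish a text message directly to a phone number via SNS.

    sns_client is expected to be boto3.client("sns").
    """
    if not phone_number.startswith("+"):
        # SNS expects E.164 numbers: "+", country code, subscriber number.
        raise ValueError("phone_number must be in E.164 format")
    return sns_client.publish(PhoneNumber=phone_number, Message=text)
```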

Beyond The Blog

While the techniques discussed here can proactively monitor excess billing, also consider moving deployment infrastructure to Terraform, CloudFormation, Pulumi, etc. so you can tear down resources you’re not using during non-homelab hours. If you’re feeling creative, consider leveraging EventBridge and Lambda functions as cron jobs to automatically shut off EC2 instances with a given tag on a particular schedule.
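As a sketch of that last idea, the function below stops every running instance carrying a given tag. The `AutoStop=true` tag is a hypothetical convention, the EventBridge schedule itself is configured separately, and pagination is skipped for brevity, which should be fine for a homelab-sized account.

```python
def stop_tagged_instances(ec2_client, tag_key="AutoStop", tag_value="true"):
    """Stop running EC2 instances that carry the given tag.

    ec2_client is expected to be boto3.client("ec2").
    Returns the list of instance IDs that were asked to stop.
    """
    response = ec2_client.describe_instances(
        Filters=[
            {"Name": f"tag:{tag_key}", "Values": [tag_value]},
            {"Name": "instance-state-name", "Values": ["running"]},
        ]
    )
    instance_ids = [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]
    if instance_ids:  # stop_instances rejects an empty list
        ec2_client.stop_instances(InstanceIds=instance_ids)
    return instance_ids
```

A Lambda handler would simply call `stop_tagged_instances(boto3.client("ec2"))`, with a role allowing `ec2:DescribeInstances` and `ec2:StopInstances`.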

Additionally, consider reviewing your monthly bill to see how you’re charged per service and where you may be able to consolidate costs. For example, beyond just leveraging IaC tools, perhaps spot instances make sense in your environment. While reviewing the monthly budget, rotate those API keys and credentials, and double-check that MFA is enabled on all accounts. The last thing you want is someone else driving up the bill.
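The per-service view can also be pulled programmatically. Here is a rough sketch using the Cost Explorer `get_cost_and_usage` call, assuming a boto3 `ce` client is passed in. Keep in mind that Cost Explorer API requests are themselves billed, so don’t call this in a tight loop. `cost_by_service` is a hypothetical helper.

```python
def cost_by_service(ce_client, start, end):
    """Return (service, cost) pairs for a date range, highest cost first.

    start and end are "YYYY-MM-DD" strings; end is exclusive.
    ce_client is expected to be boto3.client("ce").
    """
    response = ce_client.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},
        Granularity="MONTHLY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )
    totals = {}
    for period in response["ResultsByTime"]:
        for group in period["Groups"]:
            service = group["Keys"][0]
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[service] = totals.get(service, 0.0) + amount
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```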

Finally, always be sure to understand the underlying service and HOW it bills. It’s easy to misunderstand something like AWS Glue or SageMaker and end up with a surprise bill, which, with these excessive notification strategies, you hopefully won’t!

Thank you for reading, and happy hacking!