2022-7-10 08:12:29 Author: gynvael.coldwind.pl

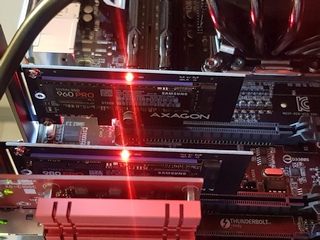

The back story of this debugging session is that I'm reworking my home server a bit. One of the things I'm doing is putting more HDDs in there and sharing them over the network with my other computers. But since HDDs are a bit slow, I decided to add two M.2 NVMe SSDs I had lying around for caching (with bcache). Now, this is a pretty old home server: I built it in 2016 using what even then was considered previous-gen technology. This means it had only one M.2 slot, which was already used by the OS SSD, so the new disks had to go into the PCI Express slots. For the disks themselves this isn't really a problem, as M.2 NVMe is basically PCIe in a different form factor, so a simple (electrically speaking) adapter was enough. And while one of the SSDs worked well, the other was suspiciously slow.

Here's my debug log from this session.

Issue: I put an NVMe SSD into the PCIe 2.0 x16_2 slot via an adapter; however, the max read speed I was getting was ~380MB/s, which is waay less than it should be.

nvme2n1 259:0 0 931.5G 0 disk

# hdparm -t /dev/nvme2n1

/dev/nvme2n1:

Timing buffered disk reads: 1122 MB in 3.00 seconds = 373.60 MB/sec
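For readers who want a comparable number without hdparm, here is a rough Python sketch of a timed sequential read. It is an approximation under stated assumptions, not hdparm's actual method: it reads from the start of the device (or any file) in 2 MiB chunks for a fixed time, first asks the kernel via `os.posix_fadvise` to drop cached pages (Linux only), and reports decimal MB/s, which may not match hdparm's units exactly. The function name `sequential_read_speed` is hypothetical.

```python
import os
import time


def sequential_read_speed(path, seconds=3.0, chunk_size=2 * 1024 * 1024):
    """Rough sequential-read benchmark (hypothetical helper, not hdparm).

    Reads `path` from offset 0 in `chunk_size` pieces for up to `seconds`
    and returns the throughput in MB/s (10**6 bytes per second).
    """
    fd = os.open(path, os.O_RDONLY)
    try:
        # Hint the kernel to drop cached pages so we time the device, not RAM.
        # posix_fadvise is only available on some platforms (e.g. Linux).
        if hasattr(os, "posix_fadvise"):
            os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
        total = 0
        start = time.monotonic()
        while time.monotonic() - start < seconds:
            data = os.read(fd, chunk_size)
            if not data:  # end of file/device reached before time ran out
                break
            total += len(data)
        elapsed = time.monotonic() - start
    finally:
        os.close(fd)
    return total / elapsed / 1e6 if elapsed > 0 else 0.0
```

Reading a block device such as `/dev/nvme2n1` needs root, e.g. `print(f"{sequential_read_speed('/dev/nvme2n1'):.2f} MB/s")`.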

At first I thought it was an issue with my CPU (i7-5820K) having only 28 PCIe lanes. But doing the lane math, I came to the obvious conclusion that all the NVMe SSDs and the NIC take up only 16 lanes, so that was not it.
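The lane math can be written out as a quick tally. The per-device widths below are assumptions chosen to match the 16-lane total mentioned above (three x4 NVMe SSDs, the OS one included, plus a NIC assumed to be x4); real cards may differ, and on many boards some slots are wired to the chipset rather than the CPU.

```python
# Hypothetical per-device lane widths, picked to match the 16-lane total.
CPU_LANES = 28  # i7-5820K
devices = {
    "OS NVMe SSD (M.2 slot)": 4,
    "cache NVMe SSD #1": 4,
    "cache NVMe SSD #2": 4,
    "10GbE NIC (assumed x4)": 4,
}

used = sum(devices.values())
print(f"{used} of {CPU_LANES} lanes used, {CPU_LANES - used} spare")
assert used <= CPU_LANES, "not enough CPU lanes for all devices"
```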

One thing to note is that the slot which I used is a PCIe 2.0 slot. Perhaps that fact alone would account for the slow speed? Let's do the math.

In theory, PCIe 2.0 runs at 5GT/s (gigatransfers per second) per lane, which after 8b/10b encoding gives each lane a theoretical throughput of 500MB/s per direction. So PCIe 2.0 x4 should give a theoretical capacity of 2GB/s (x4 since M.2 provides up to four lanes). Even accounting for protocol and system overhead, that's a lot more than the 0.37GB/s I was getting. So the slot being only PCIe 2.0 wasn't the issue.
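As a worked version of this arithmetic, the sketch below derives per-lane throughput from the transfer rate and the line encoding (8b/10b for PCIe 2.0, 128b/130b for PCIe 3.0). The results are theoretical one-direction maxima before packet-level protocol overhead; the helper name `pcie_bandwidth_mb_s` is only illustrative.

```python
# Raw transfer rate per lane and the fraction of bits that carry payload.
GT_PER_S = {"2.0": 5.0, "3.0": 8.0}
ENCODING = {"2.0": 8 / 10, "3.0": 128 / 130}  # 8b/10b vs 128b/130b


def pcie_bandwidth_mb_s(gen: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s (10**6 bytes/s),
    before TLP/DLLP protocol overhead."""
    payload_bits_per_s = GT_PER_S[gen] * 1e9 * ENCODING[gen] * lanes
    return payload_bits_per_s / 8 / 1e6


for lanes in (1, 4):
    print(f"PCIe 2.0 x{lanes}: {pcie_bandwidth_mb_s('2.0', lanes):.0f} MB/s")
```

The same helper shows why the slot generation alone could not explain ~0.37GB/s: even a single PCIe 2.0 lane is rated above that.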

The big question now was whether this was an adapter problem (folks on StackOverflow pointed out that a common issue might simply be an SSD inserted incorrectly into the adapter), an SSD problem, or some other kind of PCIe slot problem.

To test this, I swapped the M.2 adapter card with my 10GbE network card and ran some tests. The results were as follows:

- The SSD got read speeds of a bit over 2GB/s – this in itself proved it was neither an adapter nor an SSD issue.

- The NIC's transfer rate, however, fell to around 3.1Gbit/s, which translates to ~390MB/s – in the same ballpark as the SSD's read speed when it was in this slot.

So it's a slot issue.

After thinking about it for a few more seconds, I realized that ~390MB/s is also what I would expect from a PCIe 2.0 x1 slot. But wasn't this supposed to be an x16 slot? At least that's what the manual said.
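The unit conversion behind this observation, as a small sketch: 1 Gbit/s is 125 MB/s in decimal units, and a single PCIe 2.0 lane is rated at 500MB/s in theory, so both measurements sit below the ceiling of one lane, as expected once protocol overhead is included. The numbers are the ones reported above; the hdparm figure is used as printed.

```python
nic_gbit_s = 3.1        # observed NIC throughput in this slot
ssd_mb_s = 373.60       # hdparm result for the SSD in this slot (as printed)
pcie2_x1_mb_s = 500.0   # theoretical PCIe 2.0 x1, after 8b/10b encoding

nic_mb_s = nic_gbit_s * 1000 / 8  # Gbit/s -> MB/s (1 Gbit/s = 125 MB/s)

for name, mb_s in (("NIC", nic_mb_s), ("SSD", ssd_mb_s)):
    share = mb_s / pcie2_x1_mb_s
    print(f"{name}: {mb_s:.1f} MB/s = {share:.0%} of a PCIe 2.0 x1 link")
```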

I removed the card again and inspected the slot. The mechanical part itself was of course x16, as the motherboard manual advertised. However, the electrical connections (the actual visible pins) were only x8. OK, that's weird?

But given that an M.2 NVMe SSD is only x4 anyway, this wouldn't explain the issue.

The next step was to inspect the slots from the software side, i.e. check what Linux sees.

# lspci -vv | grep Width

LnkCap: Port #8, Speed 5GT/s, Width x1, ASPM not supported

LnkSta: Speed 5GT/s (ok), Width x1 (ok)
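When checking this on more than one machine, the `LnkCap`/`LnkSta` lines are easy to parse. Below is a minimal Python sketch that assumes the line format shown above (it may differ between pciutils versions); it turns each line into a (GT/s, width) pair and estimates theoretical bandwidth from per-lane figures after encoding. `parse_links` and `PER_LANE_MB_S` are hypothetical names, not part of any library.

```python
import re

# Matches e.g. "LnkSta: Speed 5GT/s (ok), Width x1 (ok)"
LINK_RE = re.compile(r"(LnkCap|LnkSta):.*?Speed ([\d.]+)GT/s.*?Width x(\d+)")

# Theoretical per-lane MB/s after line encoding
# (8b/10b for 2.5 and 5 GT/s, 128b/130b for 8 GT/s).
PER_LANE_MB_S = {2.5: 250.0, 5.0: 500.0, 8.0: 8000 * 128 / 130 / 8}


def parse_links(lspci_output: str) -> dict:
    """Return {"LnkCap": (gt_s, width), "LnkSta": (gt_s, width)} for one device."""
    links = {}
    for line in lspci_output.splitlines():
        m = LINK_RE.search(line)
        if m:
            links[m.group(1)] = (float(m.group(2)), int(m.group(3)))
    return links


sample = """\
LnkCap: Port #8, Speed 5GT/s, Width x1, ASPM not supported
LnkSta: Speed 5GT/s (ok), Width x1 (ok)
"""

for name, (speed, width) in parse_links(sample).items():
    print(f"{name}: {speed} GT/s x{width}, ~{PER_LANE_MB_S[speed] * width:.0f} MB/s")
```

Comparing the two entries is a quick way to spot a link that negotiated a narrower width or lower speed than its capability; here both report x1.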

Aha! Linux sees the slot as x1. Or is it the device in that slot that's configured as x1? Well, there's always dmidecode to check this:

# dmidecode | grep PCI

Designation: PCIEX16_1

Type: x16 PCI Express

Designation: PCIEX1_1

Type: x1 PCI Express

Designation: PCIEX16_2

Type: x1 PCI Express 3

Designation: PCIEX16_3

Type: x16 PCI Express 3

Designation: PCIEX1_2

Type: x1 PCI Express 3

Designation: PCIEX16_4

Type: x16 PCI Express 3
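To compare the SMBIOS view with the manual systematically, the `Designation:`/`Type:` pairs can be collected into a dictionary. This is a sketch that assumes the paired-line layout shown above (as produced by the `grep` above, or by `dmidecode -t slot`); the `MANUAL_WIDTH` table is hypothetical and only encodes the one slot discussed here.

```python
import re


def parse_slots(text: str) -> dict:
    """Map each slot Designation to the Type line that follows it."""
    slots, designation = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("Designation:"):
            designation = line.split(":", 1)[1].strip()
        elif line.startswith("Type:") and designation is not None:
            slots[designation] = line.split(":", 1)[1].strip()
            designation = None
    return slots


# Width expected from the motherboard manual (hypothetical, single entry).
MANUAL_WIDTH = {"PCIEX16_2": 16}

sample = """\
Designation: PCIEX16_1
Type: x16 PCI Express
Designation: PCIEX1_1
Type: x1 PCI Express
Designation: PCIEX16_2
Type: x1 PCI Express 3
"""

for slot, slot_type in parse_slots(sample).items():
    m = re.match(r"x(\d+)", slot_type)
    dmi_width = int(m.group(1)) if m else None
    expected = MANUAL_WIDTH.get(slot)
    if expected is not None and dmi_width != expected:
        print(f"{slot}: DMI reports '{slot_type}', manual says x{expected}")
```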

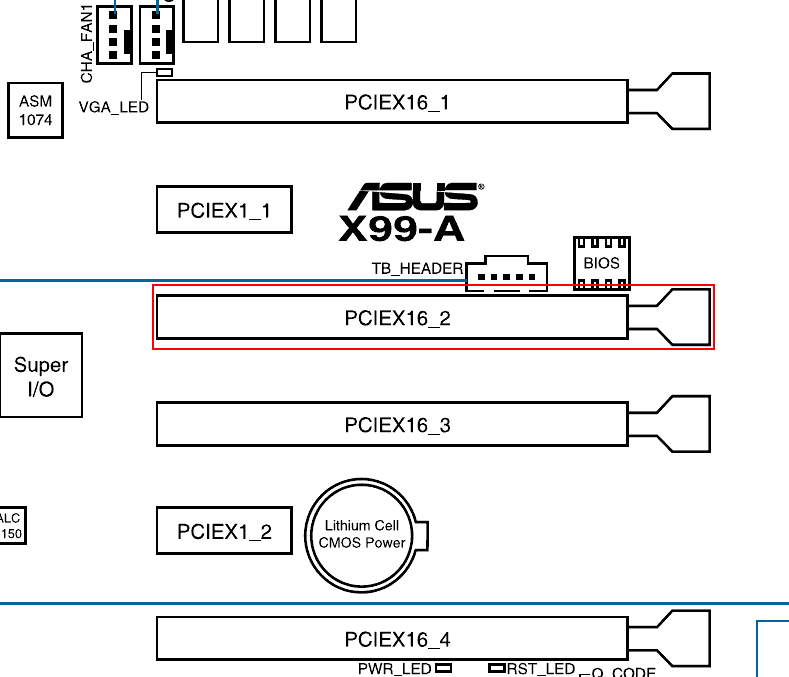

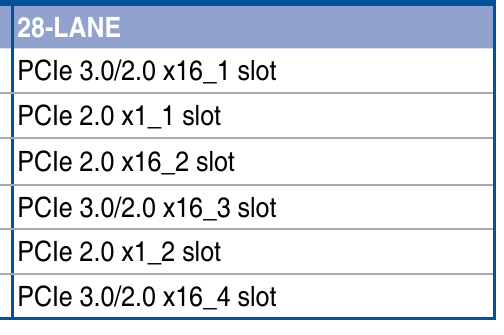

I've compared this with the manual (the slot in question is PCIe 2.0 x16_2):

The first two slots make sense, but PCIEX16_2 is listed as x1 PCI Express 3 instead of x16 PCI Express 2 (or even x8, given what I already knew about the pins). And why PCIe 3.0 and not 2.0? The lspci output from earlier did show 5GT/s, i.e. PCIe 2.0.

This is where I decided I probably should RTFM and check whether there was some option in the BIOS to switch this slot to something more than x1. And obviously, since I knew exactly what I was looking for, I found it immediately:

PCIe x16_2 is set to x1 mode by default. When the bandwidth is manually switched to x4 mode, the IRQ assignment will be changed to A.

Reading further into the manual, I found out that PCIe 2.0 x16_2 shares bandwidth with PCIe 2.0 x1_1 (which was unpopulated) and USB3_E56 (a pair of USB 3.0 ports on the rear panel of the motherboard, both unused).

And of course, the BIOS has an option to switch the slot to x4 mode, but that disables the mentioned PCIe 2.0 x1_1 slot and USB3_E56 (which is not an issue for me):

Advanced\Onboard Devices Configuration:

PCI-EX16_2 Slot (Black) Bandwidth [Auto]

[Auto]

Runs at AUTO with USB3_E56 port and PCIEx1_1 slot enabled.

[X1 Mode]

Runs at x1 mode with USB3_E56 port and PCIEx1_1 slot enabled.

[X4 Mode]

Runs at x4 mode for a high performance support with USB3_E56 port and PCIEx1_1 slot disabled.

After changing the BIOS setting and rebooting, I got much better results:

# hdparm -t /dev/nvme2n1

/dev/nvme2n1:

Timing buffered disk reads: 3730 MB in 3.00 seconds = 1243.19 MB/sec

This was still almost 2x slower than what this SSD could do, but it was over 3 times better than before. Furthermore, it was not really a problem for me since, as mentioned, this SSD was to be used for caching a network disk. Given that my network is 10GbE, which – taking into account wire encoding and Ethernet/IP/TCP overhead – allows transfer speeds of around 1000–1250MB/s, the bottlenecks line up neatly!
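The 10GbE side of that comparison can be estimated with a few lines of arithmetic. This sketch assumes IPv4 and TCP headers without options (Linux usually adds 12 bytes of TCP timestamps, which lowers the result slightly) and no VLAN tags, and it counts preamble/SFD, Ethernet header, FCS and inter-frame gap as per-frame overhead on top of the 10 Gbit/s MAC data rate. `tcp_goodput_mb_s` is a hypothetical helper.

```python
# 10GbE MAC data rate in MB/s (PHY line coding sits below this rate).
LINE_RATE_MB_S = 10e9 / 8 / 1e6  # 1250 MB/s

# Per-frame bytes on the wire outside the IP packet:
# preamble+SFD (8), Ethernet header (14), FCS (4), inter-frame gap (12).
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12
IP_TCP_HEADERS = 20 + 20  # IPv4 + TCP, assuming no options


def tcp_goodput_mb_s(mtu: int) -> float:
    """Theoretical TCP payload throughput for a given MTU, in MB/s."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_WIRE_OVERHEAD
    return LINE_RATE_MB_S * payload / on_wire


for mtu in (1500, 9000):
    print(f"MTU {mtu}: ~{tcp_goodput_mb_s(mtu):.0f} MB/s")
```

Under these assumptions, a standard 1500-byte MTU gives roughly 1187 MB/s and 9000-byte jumbo frames roughly 1239 MB/s, both inside the 1000–1250MB/s range above.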

What's strange is that, for whatever reason, dmidecode still shows that slot as x1 PCI Express 3. However, lspci -vv and lspci -t -v correctly show the device as PCI Express 2.0 x4, so all is good:

LnkCap: Port #5, Speed 5GT/s, Width x4, ASPM not supported

LnkSta: Speed 5GT/s (ok), Width x4 (ok)

I also decided to upgrade the BIOS at the same time (I was prepared to do it as a fallback plan, even though it turned out not to be needed), but even after the upgrade this slot still shows up as x1 PCI Express 3 in dmidecode.

I'll sum up this debug log with a tweet I posted that day:

PCI-e is always an adventure.

— Gynvael Coldwind (@gynvael) July 2, 2022

On one of my motherboards there is a PCI-e slot, which:

- is described in the manual as PCIe 2.0 x16

- has x8 electrical connections

- has the speed of x1

- ...unless some USB ports are disabled in BIOS, then it becomes x4

🤷
