2022-06-29 07:30:09 Author: unit42.paloaltonetworks.com


Executive Summary

Unit 42 researchers identified FabricScape (CVE-2022-30137), a vulnerability of important severity in Microsoft’s Service Fabric – commonly used with Azure – that allows Linux containers to escalate their privileges in order to gain root privileges on the node, and then compromise all of the nodes in the cluster. The vulnerability could be exploited on containers that are configured to have runtime access, which is granted by default to every container.

Service Fabric hosts more than 1 million applications and runs millions of cores daily, according to Microsoft. It powers many Azure offerings, including Azure Service Fabric, Azure SQL Database and Azure CosmosDB, as well as other Microsoft products including Cortana and Microsoft Power BI.

Using a container under our control to simulate a compromised workload, we were able to exploit the vulnerability on Azure Service Fabric, an Azure offering that deploys private Service Fabric clusters in the cloud. A few other exploitation attempts against Azure offerings that are powered by managed multitenant Service Fabric clusters failed, because Microsoft disables runtime access on the containers of those offerings.

We worked closely with Microsoft (MSRC) to remediate the issue, which was fully fixed on June 14, 2022. Microsoft released a patch to Azure Service Fabric that has already mitigated the issue in Linux clusters, and also updated internal production environments of offerings and products that are powered by Service Fabric.

We advise customers running Azure Service Fabric without automatic updates enabled to upgrade their Linux clusters to the most recent Service Fabric release. Customers whose Linux clusters are automatically updated do not need to take further action.

Both Microsoft and Palo Alto Networks recommend avoiding execution of untrusted applications in Service Fabric. See Service Fabric documentation for more information.

While we're not aware of any attacks in the wild that have successfully exploited this vulnerability, we want to urge organizations to take immediate action to identify whether their environments are vulnerable and quickly implement patches if they are.

Table of Contents

Service Fabric Overview

Service Fabric Architecture

The Vulnerability

Exploitation of CVE-2022-30137

Gaining Code Execution on the Node

Cluster Takeover

Broader Impact of FabricScape

Limitations

Disclosure and Mitigations

Conclusion

Service Fabric Overview

Service Fabric is an application hosting platform that supports different forms of packaging and managing services, including, but not limited to containers.

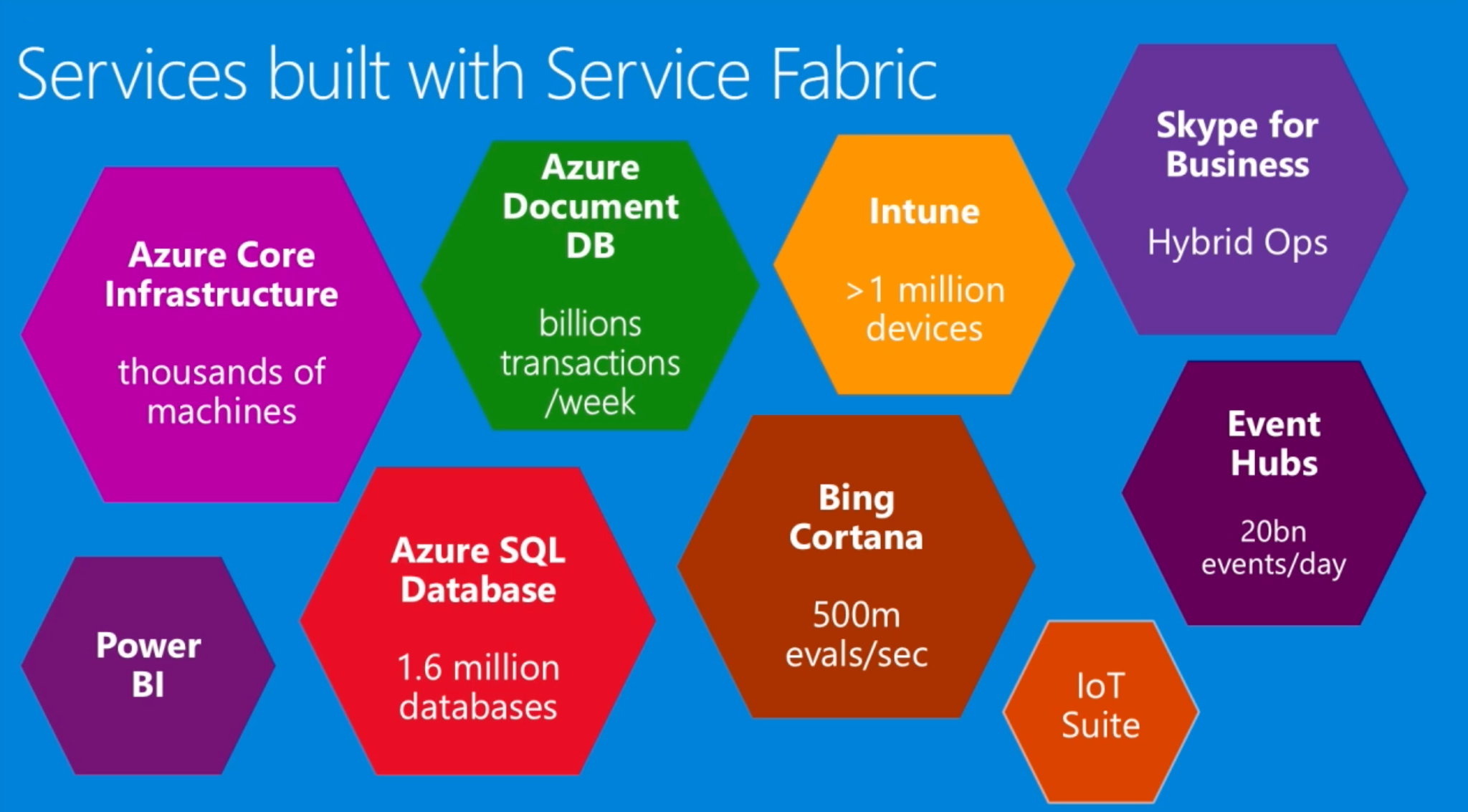

Microsoft has previously published documentation stating that Service Fabric is used in many core Azure services.

In 2016, in light of Service Fabric's success in internal environments, Microsoft released Azure Service Fabric as a platform as a service, allowing customers to create and manage their own dedicated Service Fabric clusters in Azure Cloud. It’s widely used by enterprises across a variety of sectors including government, media, automotive, fashion, transportation and multinational conglomerates.

Service Fabric Architecture

A general understanding of the basic architecture of Service Fabric is required in order to understand the full impact of FabricScape, so we’ll start with a quick overview of Service Fabric architecture.

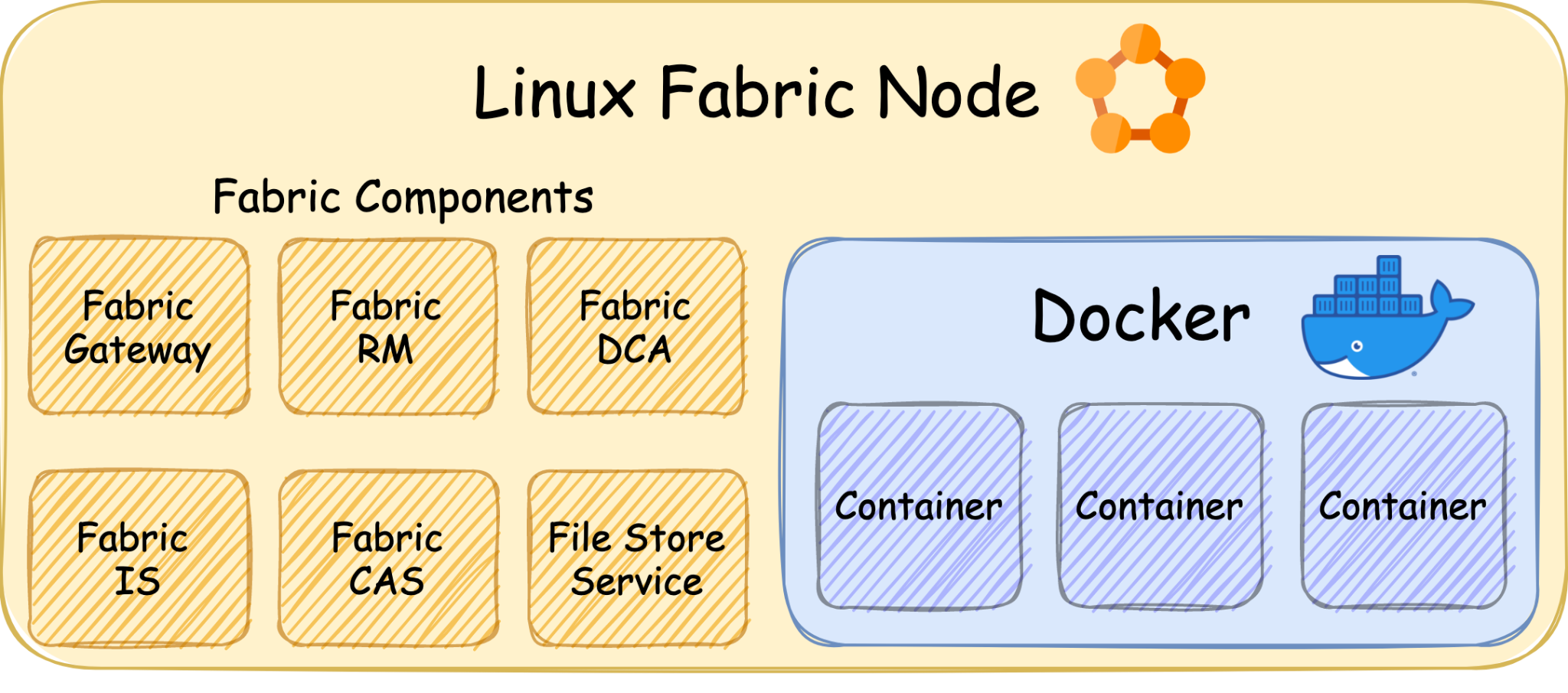

A Service Fabric cluster consists of many nodes, each of which runs a container engine that executes the desired containerized applications, much like Kubernetes. It supports Linux and Windows nodes and uses Docker on both, while also supporting Hyper-V isolation mode on Windows nodes for maximum isolation. When an application is deployed into a Service Fabric cluster, Service Fabric deploys it as containers according to the application manifest.

Under the hood every node runs multiple components, allowing multiple nodes to work in synergy and form a reliable and distributed cluster. There is almost no public documentation about these components, but Microsoft released Service Fabric version 6.4 source code in 2018, and that allowed the public to read the code and understand the components' purposes and operations.

The Vulnerability

Service Fabric supports deploying applications as containers. During each container's initialization, a new log directory is created and mounted into the container with read and write permissions. All of those directories are centralized under one path on every node. For example, in the Azure Service Fabric offering, those directories are at /mnt/sfroot/log/Containers.

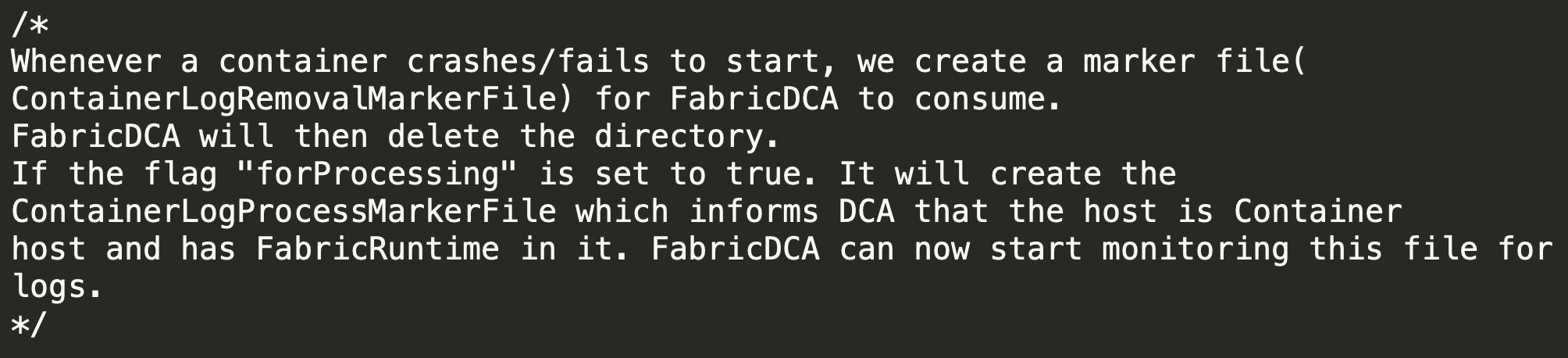

One of Service Fabric's components is the Data Collection Agent (DCA). Among other things, it is responsible for collecting the logs from those directories to be processed later. In order to access the directories, it needs high privileges and therefore runs as root on every node.

At the same time, it handles files that could be modified by containers. Thus, exploiting a vulnerability in the agent's mechanism that handles these files could result in a container escape and gaining root on the node. This could happen, for example, if the user runs a malicious container or package, or if a container is taken over by an attacker.

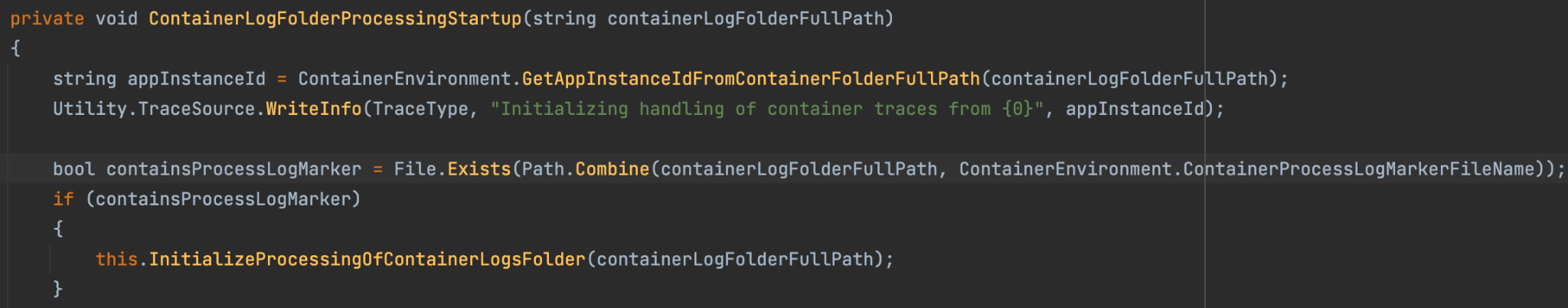

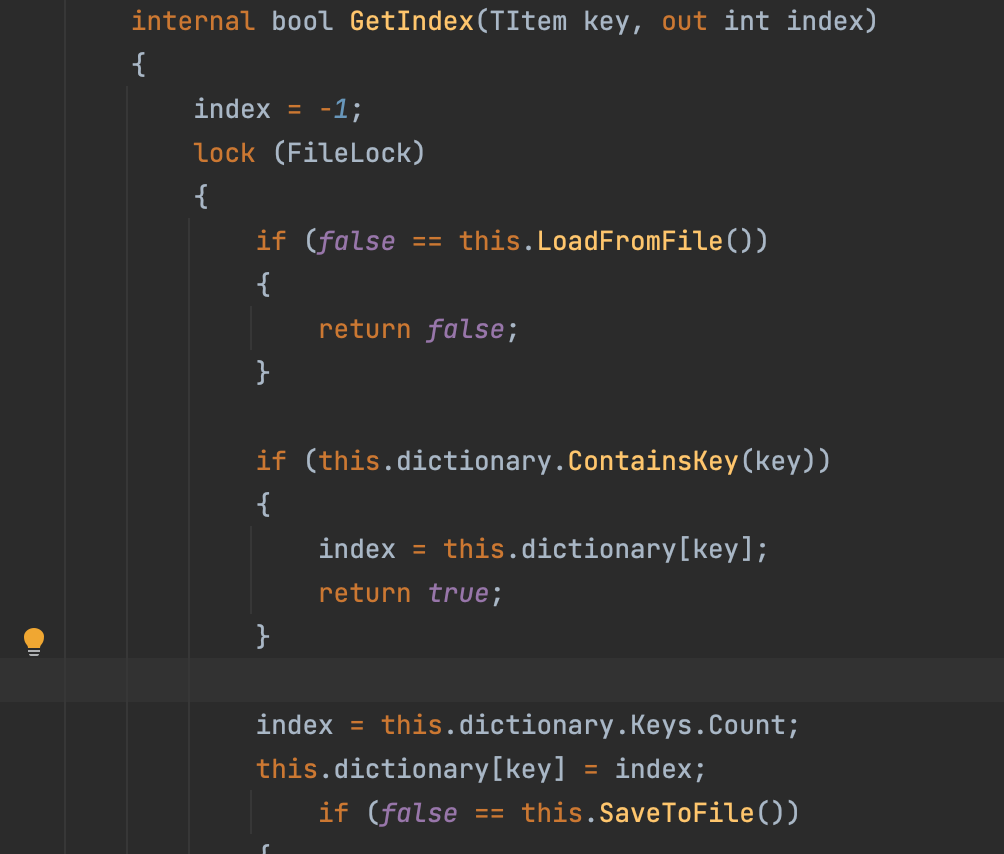

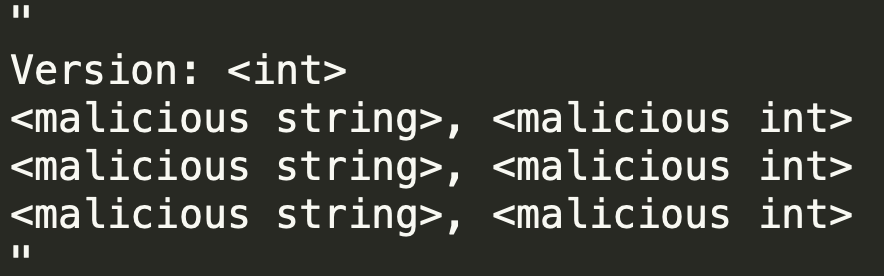

By digging into DCA's old source code, we noticed a potential arbitrary write caused by a race condition in the function GetIndex (PersistedIndex.cs:48).

This function reads a file, checks that the content is in the expected format, modifies some of the content and overwrites the file with the new content.

In order to do so, it uses two sub-functions:

- LoadFromFile – reads the file.

- SaveToFile – writes the new data to the file.

This functionality results in a symlink race. An attacker in a compromised container could place malicious content in the file that LoadFromFile reads. While DCA is still parsing the file, the attacker could replace the file with a symlink to a path of their choosing, so that SaveToFile later follows the symlink and writes the malicious content to that path.

As DCA runs as root on the node file system, it will follow the symlink and overwrite files in the node file system.
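The race can be illustrated from the defensive side. The following is a minimal Python sketch, not DCA's code (which is written in C#) and not the fix Microsoft shipped. It assumes a POSIX system where `os.O_NOFOLLOW` is available, and the names `save_without_following_symlinks` and `demo` are hypothetical. It shows that a writer which refuses to follow a symlink at the final path component fails safely when the file is swapped for a symlink between the read and the write.

```python
import os
import tempfile
from typing import Tuple


def save_without_following_symlinks(path: str, data: bytes) -> None:
    """Write data to path, failing if the final path component is a symlink."""
    # O_NOFOLLOW makes open() fail with an OSError instead of following the link.
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | os.O_NOFOLLOW
    fd = os.open(path, flags, 0o600)
    with os.fdopen(fd, "wb") as handle:
        handle.write(data)


def demo() -> Tuple[bool, bytes]:
    """Simulate the swap in a temporary directory and report what happened."""
    with tempfile.TemporaryDirectory() as root:
        node_file = os.path.join(root, "node_file")    # stands in for a file on the node
        index_file = os.path.join(root, "index_file")  # stands in for the container-writable file
        with open(node_file, "wb") as handle:
            handle.write(b"original")
        with open(index_file, "wb") as handle:
            handle.write(b"payload")

        # The "attacker" replaces the file with a symlink after it has been read.
        os.remove(index_file)
        os.symlink(node_file, index_file)

        try:
            save_without_following_symlinks(index_file, b"payload")
            blocked = False
        except OSError:
            blocked = True

        with open(node_file, "rb") as handle:
            return blocked, handle.read()
```

Note that `O_NOFOLLOW` only protects the last path component. A symlink planted in a parent directory would need a different defense, such as opening the directory first and working relative to its file descriptor.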

Exploitation of CVE-2022-30137

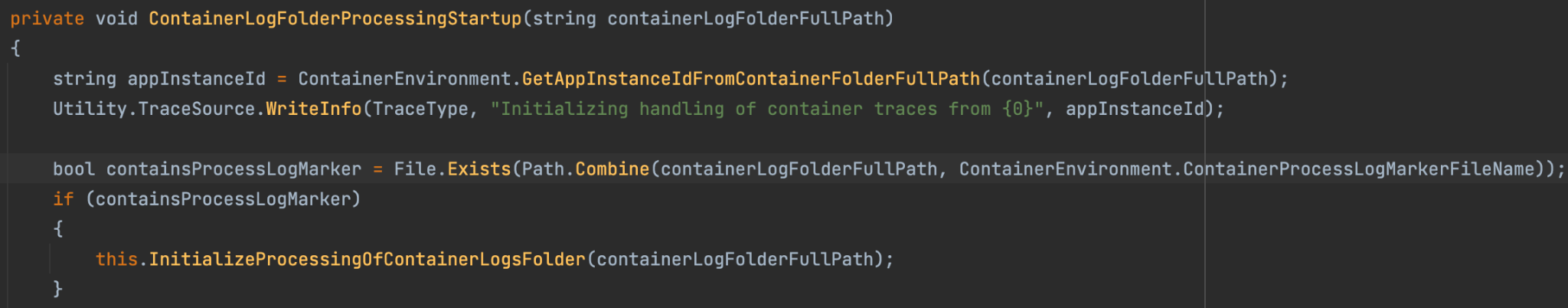

In order to exploit the issue, an attacker needs to trigger DCA to run the vulnerable function on a file that it controls. DCA monitors the creation of specific filenames in the log directories we mentioned above, and executes different functionality for each file. One of those files is ProcessContainerLog.txt.

When DCA identifies that this file was created, it executes a function that eventually runs GetIndex numerous times on paths inside the log directory, which the container can modify.

This means that a malicious container could trigger the execution of GetIndex on a file that it controls and try to beat the race condition in order to overwrite any path on the node filesystem.
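Because the attack relies on a symlink inside a container-writable log directory that points to a path on the node, defenders may want to look for such links. The sketch below is an illustrative assumption rather than a vendor-provided check: `find_escaping_symlinks` and `demo` are hypothetical names, and the function simply reports symlinks under a directory whose resolved target lies outside that directory.

```python
import os
import tempfile
from typing import List


def find_escaping_symlinks(log_root: str) -> List[str]:
    """Return symlinks under log_root whose resolved target is outside log_root."""
    root_real = os.path.realpath(log_root)
    findings = []
    # followlinks=False: symlinked directories are listed but never descended into.
    for dirpath, dirnames, filenames in os.walk(log_root, followlinks=False):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                continue
            target_real = os.path.realpath(path)
            if os.path.commonpath([root_real, target_real]) != root_real:
                findings.append(path)
    return sorted(findings)


def demo() -> List[str]:
    """Build a throwaway directory tree with one harmless and one escaping link."""
    with tempfile.TemporaryDirectory() as root:
        container_dir = os.path.join(root, "container-1")
        os.mkdir(container_dir)
        open(os.path.join(container_dir, "app.log"), "w").close()
        # Points at a sibling file, so it stays inside the log root.
        os.symlink("app.log", os.path.join(container_dir, "inner-link"))
        # Points at a path on the host, so it escapes the log root.
        os.symlink("/etc/environment", os.path.join(container_dir, "outer-link"))
        return [os.path.basename(p) for p in find_escaping_symlinks(root)]
```

A scan like this is inherently racy and only catches links that exist at the moment it runs, so it complements patching rather than replacing it.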

In order to win the race consistently, we padded the malicious file to 10 MB so that LoadFromFile takes a considerable amount of time to parse it. This gave us sufficient time to replace the file with a symlink and win the race every single time.

At this point, we were able to exploit GetIndex from the context of the container and overwrite any file on the node.

While this behavior can be observed in both Linux and Windows containers, it is only exploitable in Linux containers, because unprivileged actors cannot create symlinks in Windows containers.

From now on, we will focus on the exploitation of a Linux node (Ubuntu 18.04) in order to gain code execution on the node.

Gaining Code Execution on the Node

Gaining code execution on a machine using a privileged arbitrary write vulnerability is usually a trivial task that can be accomplished using many techniques, such as adding malicious SSH keys, adding a malicious user or installing a backdoor by overwriting benign binaries.

None of these techniques is applicable in this case, since the write primitive to the node filesystem is weak for two reasons:

- GetIndex modifies internal Service Fabric files and therefore verifies that the file (payload content) is in the right internal format before continuing to SaveToFile.

- The overwritten file on the node file system doesn't have execution permissions.

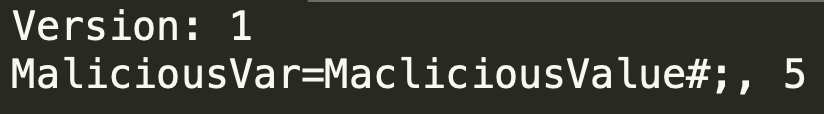

After some digging, we figured out that the format is very similar to the format of files that contain environment variables.

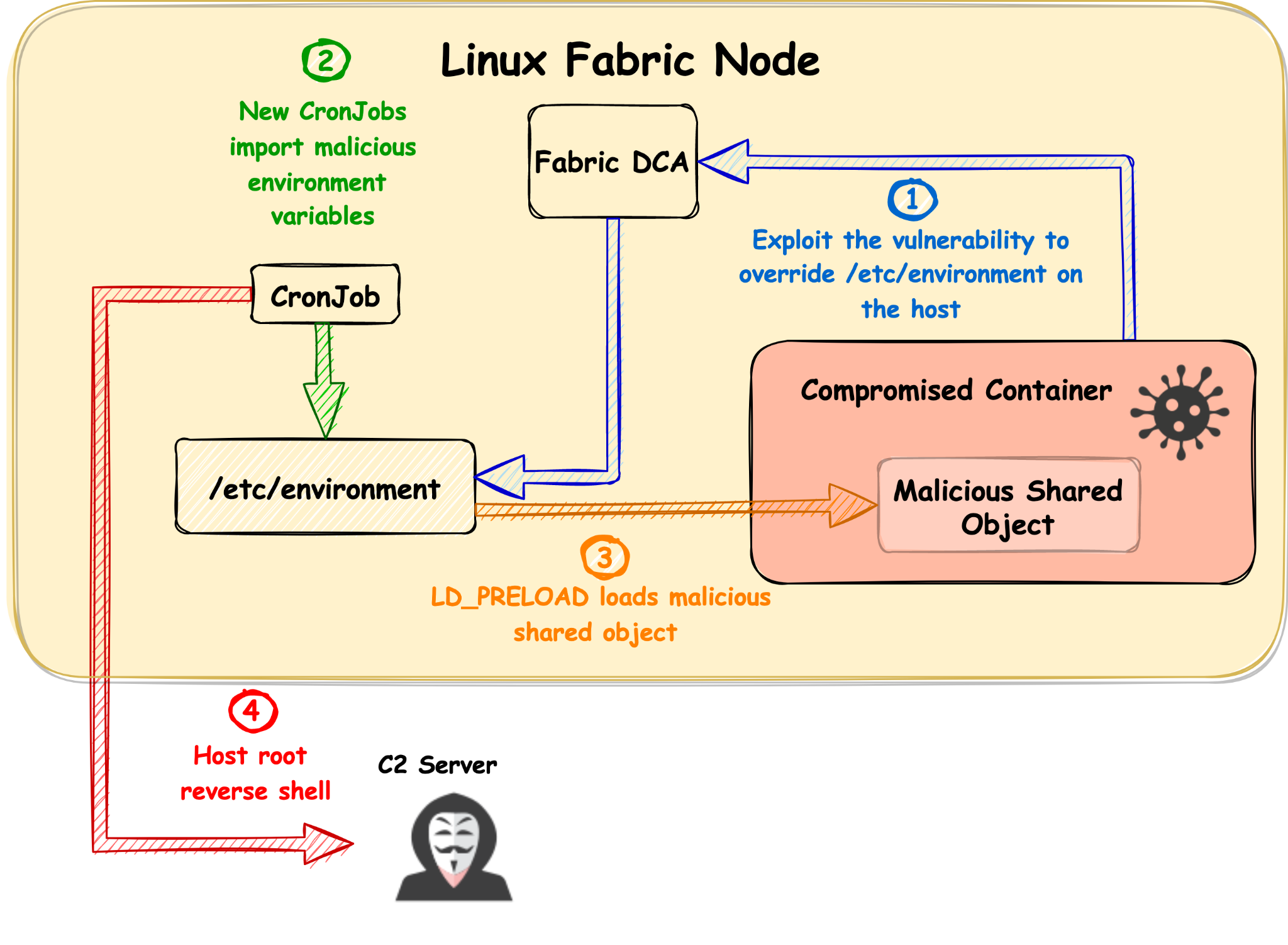

We chose /etc/environment for the exploitation. This file defines the basic environment variables for new shells, but other programs can read it as well. After some research, we found that every job executed by the Linux task scheduler (cron) imports this file. Luckily, there is a job that root executes every minute on every node, meaning we could inject malicious environment variables into new processes that run as root on the node.

After digging deeper, we found that having jobs on the scheduler is not necessary for exploitation since cron executes an internal hourly job as root, which could be exploited.

In order to gain code execution, we used a technique called dynamic linker hijacking. We abused the LD_PRELOAD environment variable. During the initialization of a new process, the linker loads the shared object that this variable points to, and with that, we inject shared objects to the privileged cron jobs on the node.
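Since the technique depends on a dynamic linker variable appearing in an environment file such as /etc/environment, a simple audit can flag it. The following Python sketch is an assumption-laden illustration, not a complete detection: the helper names and the `SUSPICIOUS_VARIABLES` set are hypothetical choices, and the parser handles only the plain `KEY=VALUE` form with optional quotes, comments and an `export ` prefix.

```python
from typing import Dict, List

# Illustrative list of dynamic linker variables worth flagging; not exhaustive.
SUSPICIOUS_VARIABLES = {"LD_PRELOAD", "LD_LIBRARY_PATH", "LD_AUDIT"}


def parse_environment_file(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    variables = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("export "):
            line = line[len("export "):]
        key, separator, value = line.partition("=")
        if not separator:
            continue  # not an assignment, ignore it
        variables[key.strip()] = value.strip().strip('"').strip("'")
    return variables


def find_linker_overrides(text: str) -> List[str]:
    """Return the names of any suspicious linker variables set in the file."""
    parsed = parse_environment_file(text)
    return sorted(name for name in parsed if name in SUSPICIOUS_VARIABLES)


SAMPLE = 'PATH="/usr/bin:/bin"\n# a comment\nLD_PRELOAD=/tmp/example.so\n'
```

On a real node, the text would come from reading the environment file itself. Any hit on a system that does not intentionally set these variables deserves investigation.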

We wrote a dummy shared object with a function that initiates a reverse shell and marked that function with the constructor attribute, so that the reverse shell starts automatically as soon as the shared object is loaded. We compiled the shared object and copied it to the log directory in the container so that we could point LD_PRELOAD to the object path.

One minute after exploitation, we got a reverse shell in the context of root on the node.

We tested this exploitation successfully on the Azure Service Fabric offering using the latest version available at that time (8.2.1124.1), on both Ubuntu 16.04 and 18.04. We were able to beat the race condition consistently and successfully break out and execute code on the node every single time.

Cluster Takeover

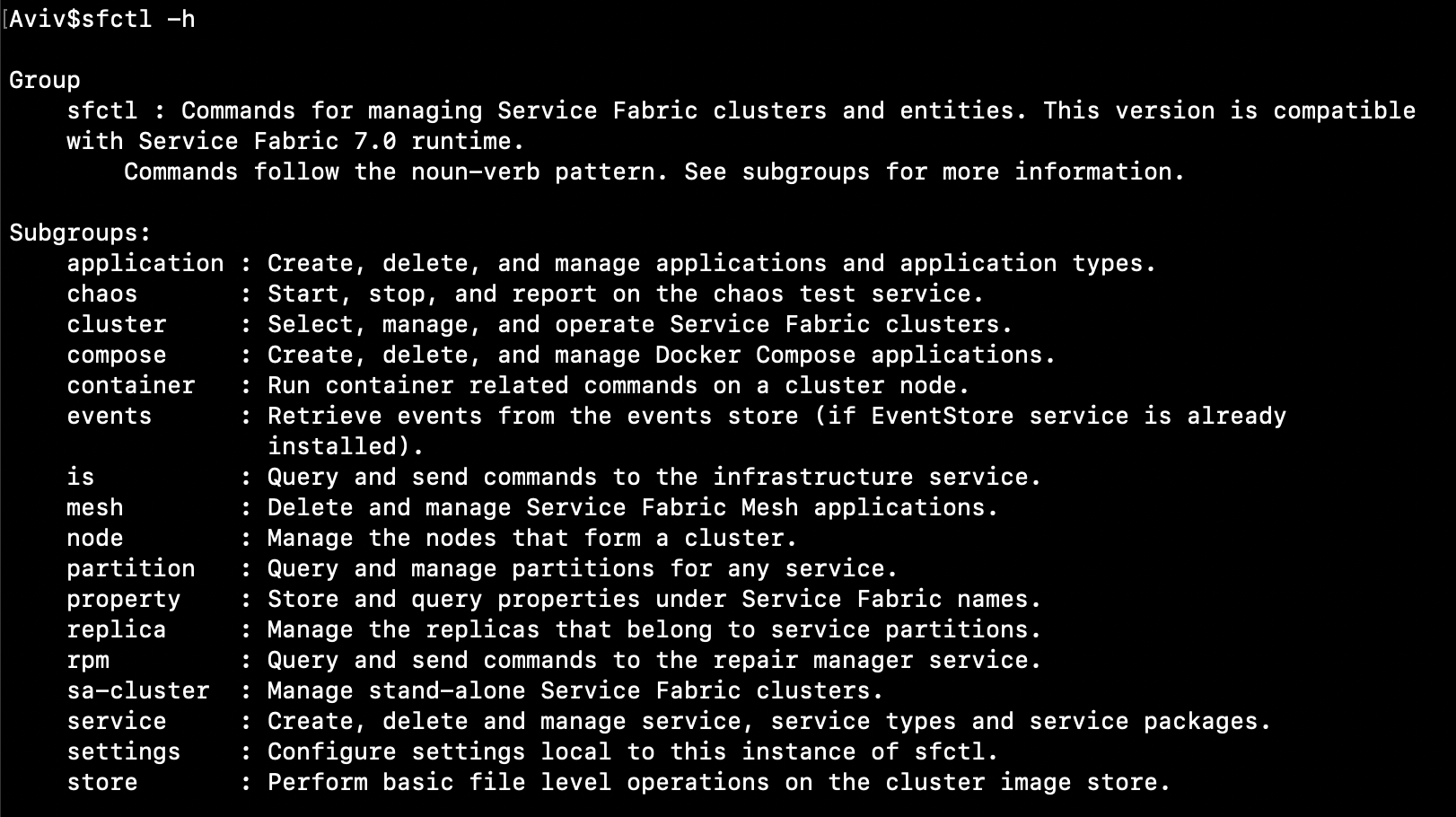

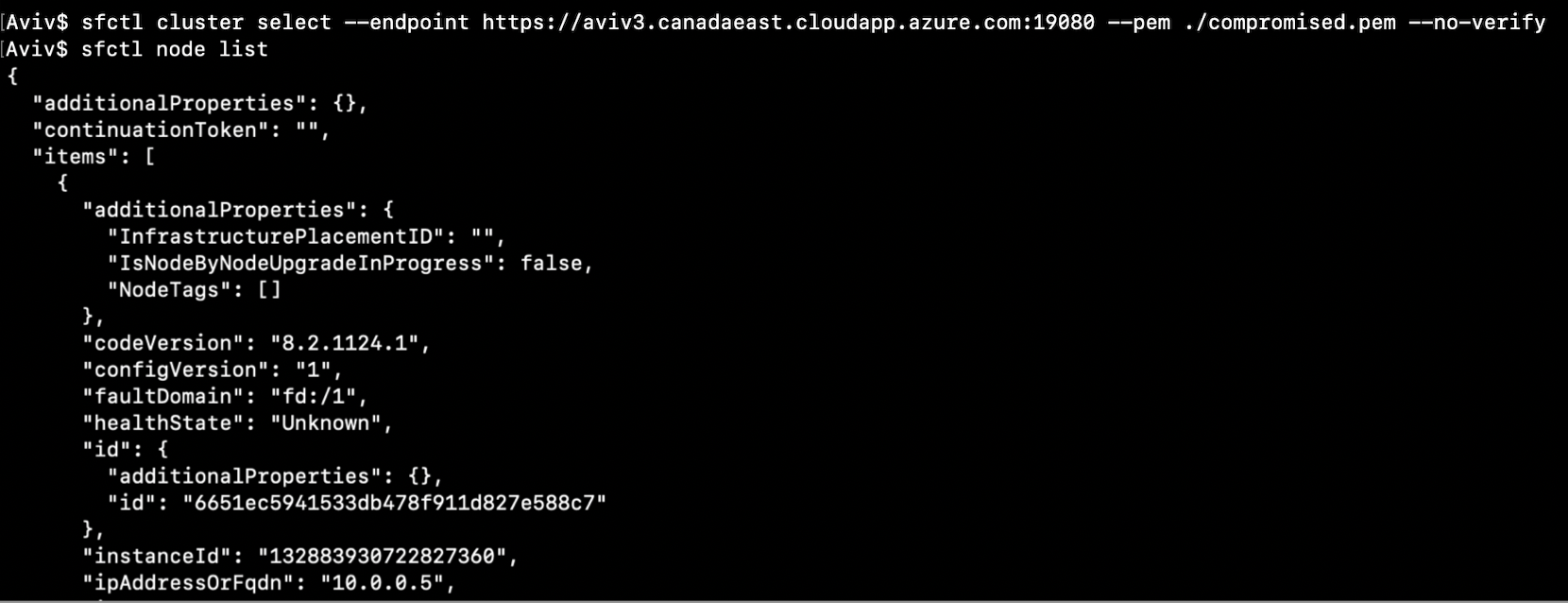

Microsoft provides users an easy way to manage and interact with their Service Fabric clusters by using the sfctl CLI tool.

In order to interact with and manage a cluster, users need to provide sfctl with two arguments:

- The cluster endpoint address.

- A private certificate.

When executing a command, sfctl sends requests to a REST API in the cluster and uses the certificate for authentication. This API exposes many operations and provides a way to manage the cluster remotely. In the Azure Service Fabric offering, it listens on port 19080 by default and is open to the internet so that users can access it.

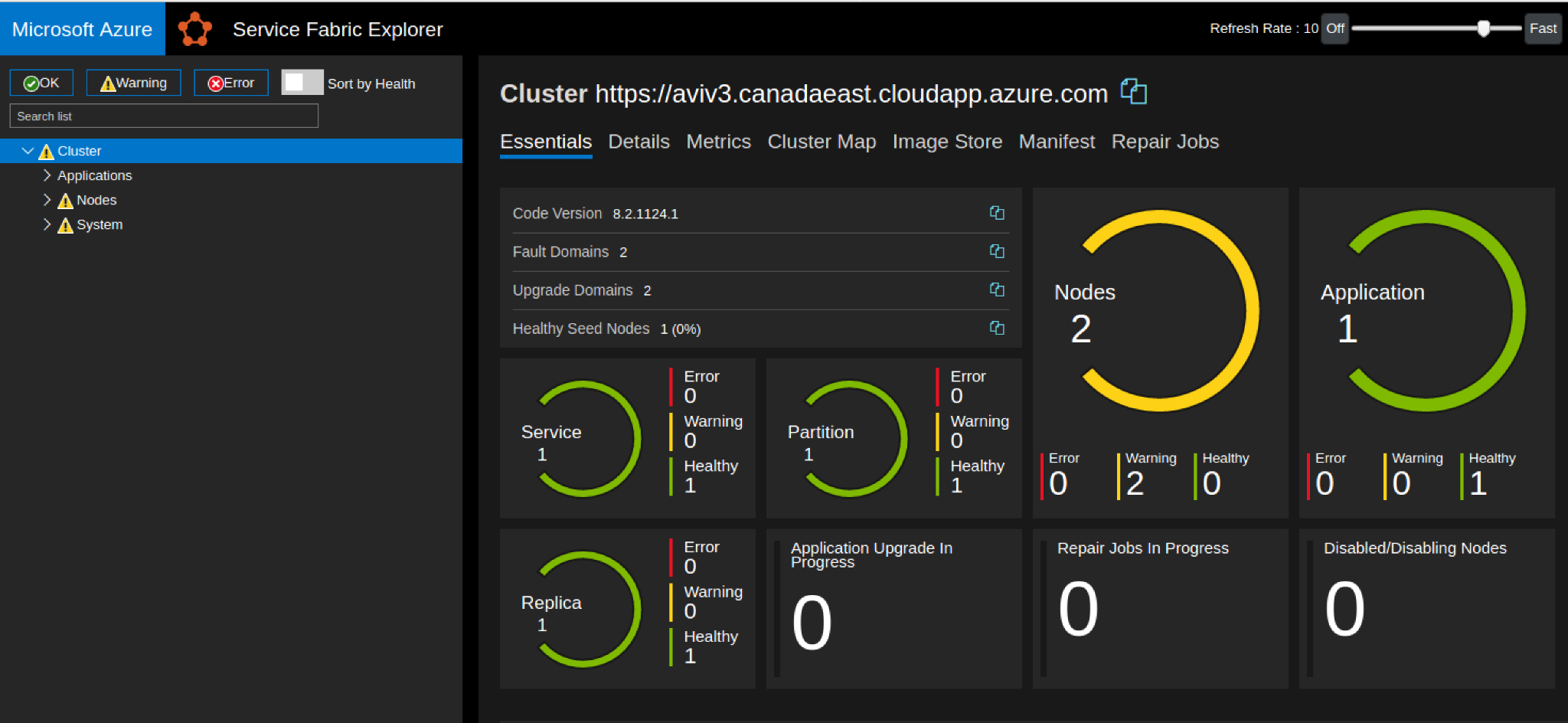

Besides the API, this endpoint has a graphical interface that can be accessed by browsers if the private certificate is applied. This interface is called Service Fabric Explorer, and it is used as a graphical way to manage and analyze the cluster.

After gaining root privileges on the node by exploiting CVE-2022-30137, we explored the file system and found the directory /var/lib/waagent/ contains sensitive files, including the certificate that controls the whole cluster.

By applying the compromised certificate, we were able to authenticate to any of the REST API endpoints (and the load balancer) and send requests to trigger functionalities in the cluster.

We were able to use the certificate to run sfctl and manage the cluster, or even browse to the Service Fabric Explorer.

Broader Impact of FabricScape

Microsoft does not publicly disclose what offerings are powered by Service Fabric but does provide a partial list as shown in Figure 1.

This means that if a malicious actor gains control over a container in Service Fabric, it would be possible to compromise the whole cluster as demonstrated above.

Limitations

A Service Fabric cluster is single-tenant by design, and hosted applications are considered trusted. They are therefore able to access the Service Fabric runtime data by default. This access allows the applications to read data regarding their Service Fabric environment and write logs to specific locations. In order to exploit FabricScape, the compromised container must have runtime access because that is necessary for the logs directory to be accessible. If developers consider their applications as untrusted or if the cluster is multitenant, this access can be disabled for each application on the cluster separately by modifying each application manifest and setting RemoveServiceFabricRuntimeAccess to true.
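To check whether an application manifest applies this setting, a short script can help. The Python sketch below rests on several assumptions that should be verified against the Service Fabric documentation: it treats the setting as an XML attribute named `RemoveServiceFabricRuntimeAccess` placed somewhere inside each `ServiceManifestImport` element, and the policy element name used in `SAMPLE` is our recollection of the documented schema, not something stated in this post. The function name and the sample package names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List

ATTRIBUTE = "RemoveServiceFabricRuntimeAccess"


def _local_name(tag: str) -> str:
    """Strip an XML namespace such as '{uri}Name' down to 'Name'."""
    return tag.rsplit("}", 1)[-1]


def imports_with_runtime_access(manifest_xml: str) -> List[str]:
    """List service manifest imports that have NOT removed runtime access."""
    root = ET.fromstring(manifest_xml.strip())
    exposed = []
    for element in root.iter():
        if _local_name(element.tag) != "ServiceManifestImport":
            continue
        removed = any(
            child.attrib.get(ATTRIBUTE, "").strip().lower() == "true"
            for child in element.iter()
        )
        if removed:
            continue
        # Use the referenced service manifest name as a label when present.
        name = "<unnamed>"
        for child in element.iter():
            if _local_name(child.tag) == "ServiceManifestRef":
                name = child.attrib.get("ServiceManifestName", name)
        exposed.append(name)
    return exposed


# Hypothetical manifest; element layout is an assumption to verify.
SAMPLE = """
<ApplicationManifest xmlns="http://schemas.microsoft.com/2011/01/fabric">
  <ServiceManifestImport>
    <ServiceManifestRef ServiceManifestName="TrustedPkg" ServiceManifestVersion="1.0.0" />
  </ServiceManifestImport>
  <ServiceManifestImport>
    <ServiceManifestRef ServiceManifestName="UntrustedPkg" ServiceManifestVersion="1.0.0" />
    <Policies>
      <ServiceFabricRuntimeAccessPolicy RemoveServiceFabricRuntimeAccess="true" />
    </Policies>
  </ServiceManifestImport>
</ApplicationManifest>
"""
```

For the sample above, the function reports only `TrustedPkg`, the import that still has default runtime access.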

Other than our successful exploitation in Azure Service Fabric, we tested Azure Container Instances, Azure PostgreSQL and Azure Functions. All of these services can be deployed in a serverless plan and are powered by multitenant Service Fabric clusters.

We could not exploit FabricScape on those services because Azure disables runtime access on them.

Disclosure and Mitigations

We disclosed the vulnerability, including a full operational exploit, to Microsoft on Jan. 30, 2022.

Microsoft released a fix for the issue on June 14, 2022.

We advise that customers running Azure Service Fabric without automatic updates enabled should upgrade their Linux clusters to the most recent Service Fabric release. Customers whose Linux clusters are automatically updated do not need to take further action.

Customers that use other Azure offerings that are based on managed Service Fabric clusters are safe as Microsoft has updated its software.

Conclusion

As the trend of migrating to the cloud grows exponentially, the cloud ecosystem adapts and reinvents itself constantly to keep up with demand by developing new technologies.

As part of the Palo Alto Networks commitment to improving public cloud security, we actively invest in researching such technologies and report issues to the vendors in order to keep customers and users safe.
