2022-09-08 23:08:16 Author: lab.wallarm.com

Article by Jiju Jacob, Director of Engineering at Revenera

[This is an update of Mr. Jacob’s 05/23 post on his Medium blog. He is a Director of Engineering at Revenera. Revenera, born as InstallShield and now a Flexera company, helps software and technology companies use open source solutions more effectively, and provides software development, consulting, training and revenue recovery services. We are grateful to Jiju for updating his post for our blog and sharing it with our audience. You can connect with him via LinkedIn.]

We have already moved into a world of APIs that provide monetizable functions for our customers. This requires us to front our APIs with an API Gateway that provides cross-cutting functionality such as logging, access control, routing, and monitoring. But what about protecting them against the real dangers out there? Protecting against API-specific threats requires a solution that is specifically built to protect APIs.

In this post, we will integrate a Wallarm solution into our API Gateway. We have selected the Wallarm API Security platform, which provides protection for both APIs and legacy web apps. And we are integrating this into a pre-existing API Gateway laid out with Kong on our Kubernetes Cluster.

Though it is much easier to integrate a WAF like AWS WAF into the load balancer itself, Wallarm has a lot of benefits over it. It is built to support all API protocols (REST, GraphQL, gRPC, etc.), it requires near-zero tuning and configuration, and it can work across multiple cloud and K8s environments. This is what led me to pick Wallarm over AWS WAF. With most security solutions (such as old-fashioned WAFs), we need to add rules ourselves to protect against newer attacks, whereas the Wallarm signature-less detection engine detects and protects against new attacks without you having to write new rules. Isn’t that great?

What are we building today?

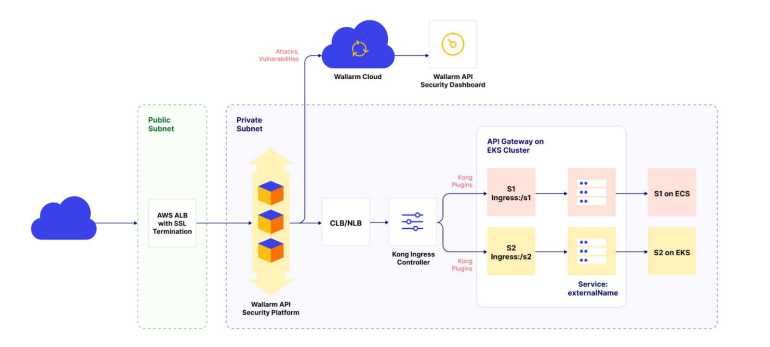

They say words are clueless about what diagrams have to say!

The Wallarm API Security platform sits between the ALB (Application Load Balancer) and the API Gateway (Kong on Kubernetes). The Wallarm API Security platform is deployed as an ECS Cluster (AWS Fargate). The ALB does the SSL termination. The Wallarm ECS nodes are capable of either blocking or just monitoring and reporting all kinds of attacks that are directed towards your infrastructure.

We will automate our infrastructure build using Terraform. I am only going to show the most important parts of the code here, not everything.

Building out

Wallarm offers a free trial that you can use to create an account. Using this account, we can configure our ECS cluster and visualize the attacks on our infrastructure.

Older versions of Wallarm needed a username and password to be configured on the scanning nodes, but the newer version needs a token instead. We can easily retrieve this token from the Wallarm UI. After signup and sign-in, create a new node in the UI as shown.

When the new node is created, a token is generated. Save this token securely.

In the snippet below, we create a secret in AWS Secrets Manager that contains WALLARM_API_TOKEN. Its value is passed to Terraform through the environment as the variable “WAF_NODE_API_TOKEN”, which holds the token that we just generated.

resource "aws_secretsmanager_secret" "waf_deploy_secretx" {

name = format("waf_deploy_secret_%s", var.ENV)

recovery_window_in_days = 0

}

resource "aws_secretsmanager_secret_version" "waf_deploy_secret_version" {

secret_id = aws_secretsmanager_secret.waf_deploy_secretx.id

secret_string = <<EOF

{

"WALLARM_API_TOKEN": "${var.WAF_NODE_API_TOKEN}"

}

EOF

}
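Terraform does not read arbitrary environment variables. It maps input variables from environment variables named TF_VAR_ followed by the variable name. A minimal sketch of the matching declaration, assuming the token is exported as TF_VAR_WAF_NODE_API_TOKEN before Terraform runs (the description and the sensitive flag are my assumptions, not part of the original code):

```hcl
# Assumed declaration for the token variable used in the secret above.
# Export the value before running Terraform, for example:
#   export TF_VAR_WAF_NODE_API_TOKEN="<token copied from the Wallarm UI>"
variable "WAF_NODE_API_TOKEN" {
  type        = string
  description = "Wallarm node token generated in the Wallarm UI"
  sensitive   = true # hides the value in plan and apply output
}
```

Note that marking a variable as sensitive only hides it from console output. The value is still written to the Terraform state, so the state backend should be protected accordingly.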

As mentioned earlier, we are hosting Wallarm on an ECS cluster, and we must create a security group for it. Here is the Terraform code. I inject ENV and AWS_REGION into the Terraform code as environment variables, so that the same infra code serves all environments and regions.

resource "aws_security_group" "waf_alb_sg" {

name = "waf-alb-sg-${var.ENV}-${var.AWS_REGION}"

vpc_id = var.VPC_ID

ingress {

protocol = "tcp"

from_port = 80

to_port = 80

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

egress {

protocol = "-1"

from_port = 0

to_port = 0

cidr_blocks = ["0.0.0.0/0"]

ipv6_cidr_blocks = ["::/0"]

}

}
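The group above opens only port 80, and later in this post the same group is attached to both the ALB and the ECS service, while the ALB also listens on port 443. One possible arrangement, sketched here on the assumption that you prefer separate groups, gives the ALB its own group for ports 80 and 443 and lets the Wallarm tasks accept traffic only from the ALB. The resource names waf_public_alb_sg and waf_tasks_sg are hypothetical.

```hcl
# Hypothetical group for the public ALB: HTTP (for the redirect) and HTTPS.
resource "aws_security_group" "waf_public_alb_sg" {
  name   = "waf-public-alb-sg-${var.ENV}-${var.AWS_REGION}"
  vpc_id = var.VPC_ID

  ingress {
    protocol    = "tcp"
    from_port   = 80
    to_port     = 80
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    protocol    = "tcp"
    from_port   = 443
    to_port     = 443
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    protocol    = "-1"
    from_port   = 0
    to_port     = 0
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# Hypothetical group for the Wallarm tasks: port 80 from the ALB only.
resource "aws_security_group" "waf_tasks_sg" {
  name   = "waf-tasks-sg-${var.ENV}-${var.AWS_REGION}"
  vpc_id = var.VPC_ID

  ingress {
    protocol        = "tcp"
    from_port       = 80
    to_port         = 80
    security_groups = [aws_security_group.waf_public_alb_sg.id]
  }

  # Outbound access is needed to reach the Wallarm Cloud and the backend.
  egress {
    protocol    = "-1"
    from_port   = 0
    to_port     = 0
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

With this split, the ALB would reference waf_public_alb_sg and the ECS service would reference waf_tasks_sg.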

The next bit of work is to build the IAM roles and role policies for our ECS cluster.

resource "aws_iam_role" "ecs_task_role" {

name = "waf-ecs-TaskRole-${var.ENV}-${var.AWS_REGION}"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "ecs-tasks.amazonaws.com"

},

"Effect": "Allow",

"Sid": ""

}

]

}

EOF

}

resource "aws_iam_role" "ecs_task_execution_role" {

name = "waf-ecs-TaskExecRole-${var.ENV}-${var.AWS_REGION}"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "ecs-tasks.amazonaws.com"

},

"Effect": "Allow",

"Sid": ""

}

]

}

EOF

}

resource "aws_iam_policy" "extraspolicy" {

name = "waf-ecs-extraspolicy-${var.ENV}-${var.AWS_REGION}"

policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = [

"ec2:*",

"secretsmanager:*",

"logs:*"

]

Effect = "Allow"

Resource = "*"

},

]

})

}

resource "aws_iam_role_policy_attachment" "ecs-extras-policy-attachment1" {

role = aws_iam_role.ecs_task_execution_role.name

policy_arn = aws_iam_policy.extraspolicy.arn

}

resource "aws_iam_role_policy_attachment" "ecs-extras-policy-attachment2" {

role = aws_iam_role.ecs_task_role.name

policy_arn = aws_iam_policy.extraspolicy.arn

}

resource "aws_iam_role_policy_attachment" "ecs-task-execution-role-policy-attachment" {

role = aws_iam_role.ecs_task_execution_role.name

policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"

}

resource "aws_iam_role_policy_attachment" "ecs-task-role-policy-attachment" {

role = aws_iam_role.ecs_task_role.name

policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role"

}

resource "aws_iam_role_policy_attachment" "ecs_ec2_cloudwatch_policy" {

role = aws_iam_role.ecs_task_role.name

policy_arn = "arn:aws:iam::aws:policy/CloudWatchFullAccess"

}

The permissions above are wider than you would want for production. You can tighten them once you have the setup working. In the above code snippet, we are creating a task execution role and a task role for the ECS cluster.
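As a starting point for tightening, here is a minimal sketch of a narrower policy for the task execution role. It assumes the role only needs to read the Wallarm token secret and write container logs; the names waf_exec_minimal and waf-ecs-exec-minimal are hypothetical, and your setup may need more than this.

```hcl
# Sketch of a narrower policy for the task execution role (assumed scope).
data "aws_iam_policy_document" "waf_exec_minimal" {
  # Lets ECS inject WALLARM_API_TOKEN from Secrets Manager into the container.
  statement {
    sid       = "ReadWallarmToken"
    effect    = "Allow"
    actions   = ["secretsmanager:GetSecretValue"]
    resources = [aws_secretsmanager_secret.waf_deploy_secretx.arn]
  }

  # Lets the awslogs driver write to the log group created for the cluster.
  # Assumes an AWS provider version whose log group ARN has no ":*" suffix.
  statement {
    sid       = "WriteContainerLogs"
    effect    = "Allow"
    actions   = ["logs:CreateLogStream", "logs:PutLogEvents"]
    resources = ["${aws_cloudwatch_log_group.waf_ecs_logs.arn}:*"]
  }
}

resource "aws_iam_policy" "waf_exec_minimal" {
  name   = "waf-ecs-exec-minimal-${var.ENV}-${var.AWS_REGION}"
  policy = data.aws_iam_policy_document.waf_exec_minimal.json
}
```

This policy would replace extraspolicy in the attachment to ecs_task_execution_role. The managed AmazonECSTaskExecutionRolePolicy already covers image pulls and basic log writes, so the second statement is mostly a safeguard.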

The next step is to create a CloudWatch log group that will collect all our ECS cluster’s logs.

resource "aws_cloudwatch_log_group" "waf_ecs_logs" {

name = "waf-ecs-loggroup-${var.ENV}-${var.AWS_REGION}"

retention_in_days = 30

}

The next step in this journey is to define the containers that will run in the ECS cluster (a.k.a. the task definition in ECS).

[

{

"cpu":1096,

"memory": 2048,

"portMappings": [

{

"hostPort": 80,

"containerPort": 80,

"protocol": "tcp"

}

],

"essential": true,

"environment": [

{

"name": "WALLARM_API_HOST",

"value": "${wallarm_host}"

},

{

"name": "NGINX_BACKEND",

"value": "${protected_service}"

},

{

"name": "WALLARM_MODE",

"value": "${wallarm_mode}"

},

{

"name": "WALLARM_APPLICATION",

"value": "${wallarm_application}"

},

{

"name": "TARANTOOL_MEMORY_GB",

"value": "1.2"

}

],

"secrets": [

{

"name": "WALLARM_API_TOKEN",

"valueFrom": "${wallarm_api_token}"

}

],

"name": "wallarm-container-${environment}-${region}",

"image": "registry-1.docker.io/wallarm/node:3.6.0-1",

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "waf-ecs-loggroup-${environment}-${region}",

"awslogs-region": "${region}",

"awslogs-stream-prefix":"waf-stream-${environment}-${region}"

}

}

}

]

Wallarm nodes are memory hungry. The JSON file above shows that you should give at least 1.2 GB of memory to the Tarantool component. The JSON file is a template that the Terraform code uses to create the actual container definition.

WALLARM_API_HOST needs to be set to api.wallarm.com for the Wallarm EU Cloud and to us1.api.wallarm.com for the Wallarm US Cloud. In my case, I used the US Cloud.
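If the same code has to serve both clouds, the host can be derived from a single switch. This is only a sketch: the variable WALLARM_CLOUD and the local names are hypothetical and do not appear in the original code.

```hcl
# Hypothetical switch between the two Wallarm Clouds named above.
variable "WALLARM_CLOUD" {
  type        = string
  description = "Which Wallarm Cloud to use: eu or us"
  default     = "us"
}

locals {
  # Host names as given in the text above.
  wallarm_api_hosts = {
    eu = "api.wallarm.com"
    us = "us1.api.wallarm.com"
  }

  # Fails at plan time if WALLARM_CLOUD is not a key of the map.
  wallarm_api_host = local.wallarm_api_hosts[var.WALLARM_CLOUD]
}
```

The resulting local.wallarm_api_host could then be passed to the template in place of a hand-set host variable.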

The NGINX_BACKEND is essentially the protected resource that the Wallarm API Security platform is fronting. In our case, it is the load balancer address for the NLB / CLB that is integrated with our Kong API Gateway. Maybe you can build a layer of abstraction using an internal DNS name!

The WALLARM_MODE setting controls how strict Wallarm is when dealing with traffic. A value of “block” blocks all detected attacks. A value of “safe_blocking” blocks only attacks that originate from greylisted IP addresses. A value of “monitoring” just monitors and reports without blocking, while a value of “off” disables analysis altogether (in which case, why are we building this?).

Usually you go with “monitoring” first and move on to “safe_blocking” and “block” modes as you harden.
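Because a typo in the mode would only surface at deploy time, it can help to validate the value in Terraform. A minimal sketch, assuming the mode is supplied through a variable named WAF_MODE; the default of monitoring is my assumption, chosen to match the advice above.

```hcl
# Assumed declaration for the mode variable, restricted to the four modes
# described above.
variable "WAF_MODE" {
  type        = string
  description = "Wallarm filtration mode"
  default     = "monitoring" # start by observing, then harden

  validation {
    condition     = contains(["off", "monitoring", "safe_blocking", "block"], var.WAF_MODE)
    error_message = "WAF_MODE must be one of: off, monitoring, safe_blocking, block."
  }
}
```

With this in place, terraform plan rejects any other value before anything is deployed.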

Finally, you can configure the Wallarm Cloud to monitor multiple applications. You can register an application in the Wallarm UI.

Give the application a name you can recognize and note down the Application ID. This will need to be fed into the WALLARM_APPLICATION environment variable.

Here is the ECS cluster definition:

data "local_file" "input_template" {

filename = "wallarm-container-definition.json"

}

data "template_file" "input" {

template = data.local_file.input_template.content

vars = {

environment = var.ENV

region = var.AWS_REGION

wallarm_host = var.WAF_HOST

protected_service = var.WAF_PROTECTED_SERVICE_HOST

wallarm_api_token = format("%s:WALLARM_API_TOKEN::", aws_secretsmanager_secret.waf_deploy_secretx.arn)

wallarm_mode = var.WAF_MODE

wallarm_application = var.WAF_APPLICATION_ID

}

}

resource "aws_ecs_task_definition" "waf_ecs_task" {

family = "waf_ecs_task"

requires_compatibilities = ["FARGATE"]

# 1 vCPU = 1024 units

cpu = 4096

# Memory is in MiB

memory = 8192

# Refer to https://docs.wallarm.com/admin-en/configuration-guides/allocate-resources-for-waf-node/#tarantool for sizing

network_mode = "awsvpc"

container_definitions = data.template_file.input.rendered

task_role_arn = aws_iam_role.ecs_task_role.arn

execution_role_arn = aws_iam_role.ecs_task_execution_role.arn

runtime_platform {

operating_system_family = "LINUX"

cpu_architecture = "X86_64"

}

}

resource "aws_ecs_cluster" "waf_ecs_cluster" {

name = "waf-ecs-cluster-${var.ENV}-${var.AWS_REGION}"

configuration {

execute_command_configuration {

logging = "OVERRIDE"

log_configuration {

cloud_watch_log_group_name = aws_cloudwatch_log_group.waf_ecs_logs.name

}

}

}

setting {

name = "containerInsights"

value = "enabled"

}

}

resource "aws_ecs_service" "waf_service" {

name = "waf-service-${var.ENV}-${var.AWS_REGION}"

cluster = aws_ecs_cluster.waf_ecs_cluster.id

task_definition = aws_ecs_task_definition.waf_ecs_task.arn

desired_count = 3

launch_type = "FARGATE"

platform_version = "LATEST"

scheduling_strategy = "REPLICA"

load_balancer {

target_group_arn = aws_lb_target_group.waf_lb_target_group.arn

container_name = "wallarm-container-${var.ENV}-${var.AWS_REGION}"

container_port = 80

}

network_configuration {

subnets = var.PRIVATE_SUBNET

security_groups = [aws_security_group.waf_alb_sg.id]

assign_public_ip = true

}

}

In the above Terraform code, we are creating the ECS cluster, the ECS service, and the task definition for the tasks that run as part of the ECS service.
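The template_file data source used above comes from the template provider, which is deprecated. Terraform 0.12 and later offer the built-in templatefile function as a replacement. A sketch of the equivalent rendering, assuming the JSON template sits next to the Terraform files (the local name wallarm_container_definitions is hypothetical):

```hcl
locals {
  # Renders the same JSON template with the same variables, without the
  # template provider. path.module is the directory of the current module.
  wallarm_container_definitions = templatefile(
    "${path.module}/wallarm-container-definition.json",
    {
      environment         = var.ENV
      region              = var.AWS_REGION
      wallarm_host        = var.WAF_HOST
      protected_service   = var.WAF_PROTECTED_SERVICE_HOST
      wallarm_api_token   = "${aws_secretsmanager_secret.waf_deploy_secretx.arn}:WALLARM_API_TOKEN::"
      wallarm_mode        = var.WAF_MODE
      wallarm_application = var.WAF_APPLICATION_ID
    }
  )
}

# In aws_ecs_task_definition.waf_ecs_task, the argument would then become:
#   container_definitions = local.wallarm_container_definitions
```

The two data blocks (local_file and template_file) are no longer needed with this approach.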

And finally, here is the ALB setup that fronts the Wallarm ECS cluster:

resource "aws_lb" "waf_lb" {

name = "waf-alb-${var.ENV}-${var.AWS_REGION}"

internal = false

load_balancer_type = "application"

security_groups = [aws_security_group.waf_alb_sg.id]

subnets = var.PUBLIC_SUBNET

enable_deletion_protection = false

}

resource "aws_lb_target_group" "waf_lb_target_group" {

name = "waf-tg-${var.ENV}-${var.AWS_REGION}"

port = 80

protocol = "HTTP"

vpc_id = var.VPC_ID

target_type = "ip"

health_check {

enabled = true

path = "/def-healthz"

interval = 30

}

}

resource "aws_alb_listener" "waf_lb_http_listener" {

load_balancer_arn = aws_lb.waf_lb.id

port = 80

protocol = "HTTP"

default_action {

type = "redirect"

redirect {

port = "443"

protocol = "HTTPS"

status_code = "HTTP_301"

}

}

}

resource "aws_alb_listener" "waf_lb_https_listener" {

load_balancer_arn = aws_lb.waf_lb.id

port = 443

protocol = "HTTPS"

certificate_arn = "https_cert_arn" # placeholder for the ARN of your certificate, not explained here

default_action {

target_group_arn = aws_lb_target_group.waf_lb_target_group.id

type = "forward"

}

}

We set up the ALB to terminate SSL with a certificate. We also set up an HTTP to HTTPS redirect and point the target group of the Application Load Balancer to the ECS cluster running the Wallarm API Security platform.

One thing that I got stuck on was that the AWS load balancer was doing health checks, and I did not have a proper health endpoint. The failing checks kept tripping the ECS tasks over. It is important to define a proper health-check endpoint that returns a 200 status code when everything is good.
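To make the health check behavior explicit rather than relying on defaults, the target group can spell out the expected status code and the thresholds. The following is a variant of the target group from the listing, not an additional resource, and the timeout and threshold values are illustrative assumptions.

```hcl
# Variant of the target group above with explicit health check settings.
resource "aws_lb_target_group" "waf_lb_target_group" {
  name        = "waf-tg-${var.ENV}-${var.AWS_REGION}"
  port        = 80
  protocol    = "HTTP"
  vpc_id      = var.VPC_ID
  target_type = "ip"

  health_check {
    enabled             = true
    path                = "/def-healthz" # must return 200 when the node is healthy
    matcher             = "200"          # only a 200 counts as healthy
    interval            = 30             # seconds between checks
    timeout             = 5              # assumed value
    healthy_threshold   = 2              # assumed value
    unhealthy_threshold = 3              # assumed value
  }
}

# On aws_ecs_service, health_check_grace_period_seconds can additionally give
# new tasks time to start before failed checks cause them to be replaced.
```

Whatever path you choose, it helps to request it by hand from inside the VPC first and confirm the 200 response before wiring it into the load balancer.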

In this post, I have left out the setup of the VPC and of the certificates in AWS Certificate Manager for brevity.
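For completeness, here is one way the certificate ARN could be looked up if the certificate already exists in AWS Certificate Manager. This is a sketch under that assumption: the domain api.example.com and the name waf_cert are hypothetical.

```hcl
# Looks up an already issued ACM certificate by domain name.
data "aws_acm_certificate" "waf_cert" {
  domain      = "api.example.com" # hypothetical domain, replace with your own
  statuses    = ["ISSUED"]
  most_recent = true
}

# The HTTPS listener could then use:
#   certificate_arn = data.aws_acm_certificate.waf_cert.arn
```

The certificate must live in the same region as the ALB for the listener to accept it.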

And voila, we have all the Terraform for the entire infrastructure. Terraform weaves its magic, and after a few test requests to this infrastructure, Wallarm should start showing beautiful graphs along with some statistics on how many attacks it foiled…

Here is a graph from my test environment.
