This article is a featured post from the Kanxue (看雪) forum.

Kanxue forum author ID: CanineTooth

1 Preface

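Over the past few days I studied the core execution flow of QEMU and KVM while a virtual machine is created and run. This is only a rough outline; I did not analyze every function along the way.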

I really like a line from Hou Jie: "Before the source code, there are no secrets." The sources I read are qemu-6.2.0 and linux-5.15.39.

2 Core flow of user-space QEMU

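Building and installing QEMU is straightforward; just follow the official documentation.

You can debug QEMU with gdb on the command line or with VS Code plus gdb. Debugging is a basic skill, so I won't dwell on it.

Below, I debug QEMU dynamically and analyze the flow alongside the QEMU source.

The launch command is: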

```shell
$ ./qemu-system-x86_64 \
    --enable-kvm \
    -machine q35 \
    -cpu host,+vmx \
    -smp 1 \
    -m 2048 \
    -name ubuntu \
    -hda /opt/vms/ubuntu.qcow2 \
    -cdrom /opt/vms/ubuntu.iso
```

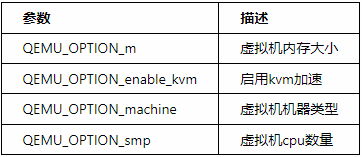

2.1 Argument parsing

qemu-6.2.0/softmmu/vl.c, line 2765

The entry point is at line 2765 of vl.c, where the arguments passed to the program are parsed.

```c
void qemu_init(int argc, char **argv, char **envp)
{
    //...
    // Parse the command-line arguments
    for (;;) {
        if (optind >= argc)
            break;
        if (argv[optind][0] != '-') {
            loc_set_cmdline(argv, optind, 1);
            drive_add(IF_DEFAULT, 0, argv[optind++], HD_OPTS);
        } else {
            const QEMUOption *popt;

            popt = lookup_opt(argc, argv, &optarg, &optind);
            if (!(popt->arch_mask & arch_type)) {
                error_report("Option not supported for this target");
                exit(1);
            }
            switch (popt->index) {
            case QEMU_OPTION_cpu:
                /* hw initialization will check this */
                cpu_option = optarg;
                break;
            //...
            // The options of interest here
            case QEMU_OPTION_m:
                opts = qemu_opts_parse_noisily(qemu_find_opts("memory"),
                                               optarg, true);
                if (!opts) {
                    exit(EXIT_FAILURE);
                }
                break;
            case QEMU_OPTION_enable_kvm:
                qdict_put_str(machine_opts_dict, "accel", "kvm");
                break;
            case QEMU_OPTION_M:
            case QEMU_OPTION_machine:
                {
                    bool help;

                    keyval_parse_into(machine_opts_dict, optarg, "type",
                                      &help, &error_fatal);
                    if (help) {
                        machine_help_func(machine_opts_dict);
                        exit(EXIT_SUCCESS);
                    }
                    break;
                }
            case QEMU_OPTION_smp:
                machine_parse_property_opt(qemu_find_opts("smp-opts"),
                                           "smp", optarg);
                break;
            }
        }
    }
    //...
    // Set accelerators = kvm according to "accel"
    qemu_apply_legacy_machine_options(machine_opts_dict);
    qemu_apply_machine_options(machine_opts_dict);
    // The usable accelerator type may also be inferred from the program name
    configure_accelerators(argv[0]);
    // Internally calls do_configure_accelerator --> accel_init_machine
    // accel_init_machine --> kvm_init
    // This initializes the concrete accel class (kvm here).
    // In qemu-6.2.0/accel/kvm/kvm-all.c line 3629,
    // kvm_accel_class_init points at the real init function:
    // ac->init_machine = kvm_init;
    //...
    // qmp_x_exit_preconfig is where the virtual CPUs get created
    if (!preconfig_requested) {
        qmp_x_exit_preconfig(&error_fatal);
    }
    qemu_init_displays();
    // Post-setup of the accelerator
    accel_setup_post(current_machine);
    os_setup_post();
    resume_mux_open();
}
```
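lookup_opt and the switch on popt->index form a table-driven parser: each entry in QEMU's option table carries a name, whether it takes an argument, and an index. The following is a minimal, hypothetical sketch of that pattern covering only the options discussed above. The demo_* names, the struct layout and the direct string-to-number conversions are illustrative assumptions, not QEMU's actual QEMUOption definition or QemuOpts handling.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical option indices, loosely modeled on QEMU_OPTION_* */
enum demo_opt_index { DEMO_OPT_ENABLE_KVM, DEMO_OPT_MACHINE, DEMO_OPT_SMP, DEMO_OPT_M };

struct demo_option {
    const char *name;
    int has_arg;                  /* 1 if the option consumes the next argv entry */
    enum demo_opt_index index;
};

static const struct demo_option demo_options[] = {
    { "enable-kvm", 0, DEMO_OPT_ENABLE_KVM },
    { "machine",    1, DEMO_OPT_MACHINE },
    { "smp",        1, DEMO_OPT_SMP },
    { "m",          1, DEMO_OPT_M },
};

struct demo_config {
    const char *accel;
    const char *machine;
    int smp;
    long mem_mb;
};

/* Look an argument up in the table; "--name" is treated like "-name". */
static const struct demo_option *demo_lookup_opt(const char *arg)
{
    if (arg[0] != '-')
        return NULL;            /* QEMU would treat this as a disk image */
    arg++;
    if (arg[0] == '-')
        arg++;
    for (size_t i = 0; i < sizeof(demo_options) / sizeof(demo_options[0]); i++) {
        if (strcmp(demo_options[i].name, arg) == 0)
            return &demo_options[i];
    }
    return NULL;
}

/* Returns 0 on success, -1 on an unknown option or a missing argument. */
static int demo_parse(int argc, char **argv, struct demo_config *cfg)
{
    int idx = 1;

    while (idx < argc) {
        const struct demo_option *popt = demo_lookup_opt(argv[idx++]);
        const char *value = NULL;

        if (!popt)
            return -1;
        if (popt->has_arg) {
            if (idx >= argc)
                return -1;
            value = argv[idx++];
        }
        switch (popt->index) {
        case DEMO_OPT_ENABLE_KVM:
            cfg->accel = "kvm";   /* cf. qdict_put_str(machine_opts_dict, "accel", "kvm") */
            break;
        case DEMO_OPT_MACHINE:
            cfg->machine = value;
            break;
        case DEMO_OPT_SMP:
            cfg->smp = atoi(value);
            break;
        case DEMO_OPT_M:
            cfg->mem_mb = strtol(value, NULL, 10);
            break;
        }
    }
    return 0;
}
```

With the relevant part of this article's command line (--enable-kvm -machine q35 -smp 1 -m 2048), demo_parse fills in accel = "kvm", machine = "q35", smp = 1 and mem_mb = 2048. Real QEMU additionally checks arch_mask and hands -m and -smp to its option machinery rather than converting them on the spot.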

2.2 Creating the virtual machine

qemu-6.2.0/accel/kvm/kvm-all.c line 2306

kvm_init is at line 2306 of kvm-all.c.

Call stack:

```
kvm_init(MachineState * ms) (qemu-6.2.0\accel\kvm\kvm-all.c:2308)
accel_init_machine(AccelState * accel, MachineState * ms) (qemu-6.2.0\accel\accel-softmmu.c:39)
do_configure_accelerator(void * opaque, QemuOpts * opts, Error ** errp) (qemu-6.2.0\softmmu\vl.c:2348)
qemu_opts_foreach(QemuOptsList * list, qemu_opts_loopfunc func, void * opaque, Error ** errp) (qemu-6.2.0\util\qemu-option.c:1135)
configure_accelerators(const char * progname) (qemu-6.2.0\softmmu\vl.c:2414)
qemu_init(int argc, char ** argv, char ** envp) (qemu-6.2.0\softmmu\vl.c:3724)
main(int argc, char ** argv, char ** envp) (qemu-6.2.0\softmmu\main.c:49)
```

The main function, kvm_init:

```c
static int kvm_init(MachineState *ms)
{
    MachineClass *mc = MACHINE_GET_CLASS(ms);
    static const char upgrade_note[] =
        "Please upgrade to at least kernel 2.6.29 or recent kvm-kmod\n"
        "(see http://sourceforge.net/projects/kvm).\n";
    //...
    QLIST_INIT(&s->kvm_parked_vcpus);
    // Standard steps before using KVM:
    // open the device /dev/kvm and check the KVM API version.
    // The KVM device descriptor is saved in s->fd
    s->fd = qemu_open_old("/dev/kvm", O_RDWR);
    if (s->fd == -1) {
        fprintf(stderr, "Could not access KVM kernel module: %m\n");
        ret = -errno;
        goto err;
    }

    ret = kvm_ioctl(s, KVM_GET_API_VERSION, 0);
    if (ret < KVM_API_VERSION) {
        if (ret >= 0) {
            ret = -EINVAL;
        }
        fprintf(stderr, "kvm version too old\n");
        goto err;
    }

    if (ret > KVM_API_VERSION) {
        ret = -EINVAL;
        fprintf(stderr, "kvm version not supported\n");
        goto err;
    }
    //...
    // Create the VM and save the VM descriptor in s->vmfd
    do {
        ret = kvm_ioctl(s, KVM_CREATE_VM, type);
    } while (ret == -EINTR);
    //...
    s->vmfd = ret;
    //...
}
```

Its main job is to save the KVM device descriptor s->fd and the descriptor of the newly created VM, s->vmfd.
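The descriptor setup in kvm_init boils down to three KVM calls: open /dev/kvm, KVM_GET_API_VERSION and KVM_CREATE_VM. Below is a standalone sketch of that sequence using plain open() and ioctl() instead of QEMU's qemu_open_old()/kvm_ioctl() wrappers. The demo_* names are hypothetical, and passing machine type 0 to KVM_CREATE_VM is an assumption (it is the default type on x86, while QEMU passes a type derived from the machine class).

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/kvm.h>

/*
 * Same decision rule as the version check in kvm_init():
 * negative values are errors from the ioctl and are passed through,
 * any other value must equal KVM_API_VERSION exactly.
 */
static int demo_check_api_version(int ret)
{
    if (ret < 0)
        return ret;
    if (ret != KVM_API_VERSION)
        return -EINVAL;
    return 0;
}

/*
 * Open /dev/kvm, check the API version, then create a VM.
 * On success *kvm_fd and *vm_fd hold the two descriptors (s->fd and
 * s->vmfd in QEMU); on failure a negative errno is returned.
 */
static int demo_kvm_create_vm(int *kvm_fd, int *vm_fd)
{
    int fd, ret;

    fd = open("/dev/kvm", O_RDWR);
    if (fd < 0)
        return -errno;

    ret = ioctl(fd, KVM_GET_API_VERSION, 0);
    ret = demo_check_api_version(ret < 0 ? -errno : ret);
    if (ret < 0)
        goto fail;

    /* Retry when interrupted by a signal, as QEMU does. */
    do {
        ret = ioctl(fd, KVM_CREATE_VM, 0UL);
    } while (ret < 0 && errno == EINTR);
    if (ret < 0) {
        ret = -errno;
        goto fail;
    }

    *kvm_fd = fd;
    *vm_fd = ret;
    return 0;

fail:
    close(fd);
    return ret;
}
```

On a host without KVM access, demo_kvm_create_vm simply returns the negative errno from open() (for example -ENOENT or -EACCES), which mirrors the "Could not access KVM kernel module" path in kvm_init.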

2.3 Creating the virtual CPUs

As mentioned in 2.1, qmp_x_exit_preconfig is involved in creating the virtual CPUs.

Tracing it with the debugger:

qmp_x_exit_preconfig qemu-6.2.0\softmmu\vl.c:2740

--> qemu_init_board qemu-6.2.0\softmmu\vl.c:2652

--> machine_run_board_init qemu-6.2.0\hw\core\machine.c:1181

--> pc_q35_init qemu-6.2.0\hw\i386\pc_q35.c:182

--> x86_cpus_init qemu-6.2.0\hw\i386\x86.c:141

--> x86_cpu_new qemu-6.2.0\hw\i386\x86.c:114

machine_run_board_init calls a different board init function depending on the machine type given on the command line; for -machine q35 that is:

machine_class->init(machine) --> pc_q35_init

```c
void x86_cpus_init(X86MachineState *x86ms, int default_cpu_version)
{
    //...
    // Create as many virtual CPUs as the -smp value specifies
    for (i = 0; i < ms->smp.cpus; i++) {
        x86_cpu_new(x86ms, possible_cpus->cpus[i].arch_id, &error_fatal);
    }
}
```
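In QEMU, x86_cpus_init only builds the CPU objects; the matching KVM_CREATE_VCPU ioctl is issued later from each vCPU thread in kvm_init_vcpu (see 2.4). The sketch below is a simplification that collapses the per-CPU loop and that ioctl into one function. It assumes a VM fd obtained from KVM_CREATE_VM and uses the loop index as the vCPU id, whereas QEMU uses the CPU's APIC id; demo_create_vcpus is a hypothetical name.

```c
#include <errno.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/kvm.h>

/*
 * Create n vCPUs on an existing VM fd, storing each returned vCPU fd in
 * vcpu_fds[i]. Uses i as the vCPU id (QEMU uses the APIC id instead).
 * On failure, closes the vCPU fds created so far and returns -errno.
 */
static int demo_create_vcpus(int vm_fd, int n, int *vcpu_fds)
{
    for (int i = 0; i < n; i++) {
        int fd = ioctl(vm_fd, KVM_CREATE_VCPU, (unsigned long)i);

        if (fd < 0) {
            int err = -errno;

            while (--i >= 0)
                close(vcpu_fds[i]);
            return err;
        }
        vcpu_fds[i] = fd;
    }
    return 0;
}
```

Each returned fd plays the role of the vcpufd that QEMU keeps in cpu->kvm_fd; with -smp 1 the loop runs once.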

Virtual CPU creation continues in x86_cpu_new:

x86_cpu_new

--> qdev_realize qemu-6.2.0\hw\core\qdev.c:333

--> device_set_realized qemu-6.2.0\hw\core\qdev.c:531

--> x86_cpu_realizefn qemu-6.2.0\target\i386\cpu.c:6447

--> qemu_init_vcpu qemu-6.2.0\softmmu\cpus.c:613

x86_cpu_realizefn calls qemu_init_vcpu to initialize the newly created virtual CPU.

2.4 Running the virtual machine

qemu-6.2.0/softmmu/cpus.c line 611

qemu_init_vcpu, at line 611 of cpus.c, initializes the virtual CPU and creates its execution thread.

```c
void qemu_init_vcpu(CPUState *cpu)
{
    //...
    // With KVM this calls kvm_start_vcpu_thread to create the vCPU execution thread
    cpus_accel->create_vcpu_thread(cpu);
    //...
}

static void kvm_start_vcpu_thread(CPUState *cpu)
{
    //...
    // The thread function is kvm_vcpu_thread_fn
    qemu_thread_create(cpu->thread, thread_name, kvm_vcpu_thread_fn,
                       cpu, QEMU_THREAD_JOINABLE);
    //...
}

static void *kvm_vcpu_thread_fn(void *arg)
{
    //...
    // kvm_init_vcpu obtains the vCPU descriptor through
    // kvm_vm_ioctl(s, KVM_CREATE_VCPU, (void *)vcpu_id): cpu->kvm_fd = ret;
    r = kvm_init_vcpu(cpu, &error_fatal);
    kvm_init_cpu_signals(cpu);

    /* signal CPU creation */
    cpu_thread_signal_created(cpu);
    qemu_guest_random_seed_thread_part2(cpu->random_seed);

    // do-while loop that keeps calling kvm_cpu_exec
    do {
        if (cpu_can_run(cpu)) {
            r = kvm_cpu_exec(cpu);
            if (r == EXCP_DEBUG) {
                cpu_handle_guest_debug(cpu);
            }
        }
        qemu_wait_io_event(cpu);
    } while (!cpu->unplug || cpu_can_run(cpu));

    kvm_destroy_vcpu(cpu);
    cpu_thread_signal_destroyed(cpu);
    qemu_mutex_unlock_iothread();
    rcu_unregister_thread();
    return NULL;
}

int kvm_cpu_exec(CPUState *cpu)
{
    //...
    do {
        // kvm_vcpu_ioctl(cpu, KVM_RUN, 0):
        // this is where control enters the KVM kernel module and the guest starts running
        run_ret = kvm_vcpu_ioctl(cpu, KVM_RUN, 0);
        //...
        // Dispatch on the exit reason
        switch (run->exit_reason) {
        case KVM_EXIT_IO:
            DPRINTF("handle_io\n");
            /* Called outside BQL */
            kvm_handle_io(run->io.port, attrs,
                          (uint8_t *)run + run->io.data_offset,
                          run->io.direction,
                          run->io.size,
                          run->io.count);
            ret = 0;
            break;
        default:
            DPRINTF("kvm_arch_handle_exit\n");
            ret = kvm_arch_handle_exit(cpu, run);
            break;
        }
    } while (ret == 0);

    cpu_exec_end(cpu);
    //...
    qatomic_set(&cpu->exit_request, 0);
    return ret;
}
```

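Running the VM is essentially the do { ... } while (ret == 0) loop in kvm_cpu_exec. Each iteration issues KVM_RUN, which enters KVM's kernel-side handling and runs the guest, and then waits for the ioctl to return.

When the guest exits, the exit is handled according to the reason KVM reports, and the result is returned: ret == 0 re-enters the guest, any other value leaves kvm_cpu_exec.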
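The KVM_RUN loop and the exit dispatch can be reduced to a small standalone function over a vCPU fd. This is a simplified sketch, not QEMU code: it maps the shared struct kvm_run area (its size comes from KVM_GET_VCPU_MMAP_SIZE on the /dev/kvm fd), calls KVM_RUN, and only treats OUT instructions to a single port as console output. The port number 0x3f8 (COM1), the 256-byte console buffer, the demo_* names and the retry-on-EINTR policy are illustrative assumptions. KVM_EXIT_HLT only reaches user space like this when the VM has no in-kernel irqchip.

```c
#include <errno.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <linux/kvm.h>

#define DEMO_SERIAL_PORT 0x3f8      /* assumption: the guest prints to COM1 */

static char demo_console[256];      /* bytes the guest wrote to DEMO_SERIAL_PORT */
static size_t demo_console_len;

/*
 * Handle one exit reported in the shared kvm_run area.
 * Return 0 to re-enter the guest, non-zero to leave the run loop
 * (the same "while (ret == 0)" convention as kvm_cpu_exec()).
 */
static int demo_handle_exit(struct kvm_run *run)
{
    switch (run->exit_reason) {
    case KVM_EXIT_IO:
        if (run->io.direction == KVM_EXIT_IO_OUT &&
            run->io.port == DEMO_SERIAL_PORT) {
            /* OUT data lives inside the kvm_run mapping at data_offset. */
            const uint8_t *data = (const uint8_t *)run + run->io.data_offset;
            size_t n = (size_t)run->io.size * run->io.count;

            for (size_t i = 0; i < n && demo_console_len + 1 < sizeof(demo_console); i++)
                demo_console[demo_console_len++] = (char)data[i];
            demo_console[demo_console_len] = '\0';
        }
        return 0;                   /* handled: run the guest again */
    case KVM_EXIT_HLT:
        return 1;                   /* guest halted: stop this toy loop */
    default:
        return -1;                  /* anything else is unexpected here */
    }
}

/* Map the vCPU's kvm_run area and loop on KVM_RUN until the handler says stop. */
static int demo_run_vcpu(int kvm_fd, int vcpu_fd)
{
    int size = ioctl(kvm_fd, KVM_GET_VCPU_MMAP_SIZE, 0);
    if (size < 0)
        return -errno;

    struct kvm_run *run = mmap(NULL, (size_t)size, PROT_READ | PROT_WRITE,
                               MAP_SHARED, vcpu_fd, 0);
    if (run == MAP_FAILED)
        return -errno;

    int ret = 0;
    do {
        if (ioctl(vcpu_fd, KVM_RUN, 0) < 0) {
            if (errno == EINTR) {
                ret = 0;            /* interrupted by a signal: simply retry */
                continue;
            }
            ret = -errno;
            break;
        }
        ret = demo_handle_exit(run);
    } while (ret == 0);

    munmap(run, (size_t)size);
    return ret;
}
```

Keeping the handler separate from the ioctl loop mirrors how kvm_cpu_exec hands KVM_EXIT_IO to kvm_handle_io and everything else to kvm_arch_handle_exit, and it lets the dispatch be exercised with a hand-built kvm_run structure.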

kvm_cpu_exec itself sits inside the loop of the vCPU thread function kvm_vcpu_thread_fn, so running the VM amounts to these two nested loops going round and round.
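The two nested loops and the one-thread-per-vCPU layout can be modeled without /dev/kvm at all. In this toy, a fake KVM_RUN reports "needs the outer loop" on every third exit and when its exit budget runs out; everything else QEMU does between passes (qemu_wait_io_event, the BQL, signal handling) is omitted, and all demo_* names are hypothetical.

```c
#include <pthread.h>
#include <stdbool.h>

struct demo_vcpu {
    pthread_t thread;
    int exits_left;     /* fake exits left before the vCPU "unplugs" */
    int runs;           /* fake KVM_RUN calls made so far */
    int execs;          /* calls to the inner loop (demo_cpu_exec) */
    bool unplug;
};

/* Fake KVM_RUN: every third exit, and the last one, need the outer loop (1); others are handled (0). */
static int demo_fake_run(struct demo_vcpu *cpu)
{
    cpu->runs++;
    cpu->exits_left--;
    return (cpu->runs % 3 == 0 || cpu->exits_left <= 0) ? 1 : 0;
}

/* Inner loop, shaped like kvm_cpu_exec(): keep running while ret == 0. */
static int demo_cpu_exec(struct demo_vcpu *cpu)
{
    int ret;

    do {
        ret = demo_fake_run(cpu);
    } while (ret == 0);
    return ret;
}

/* Outer loop, shaped like kvm_vcpu_thread_fn(). */
static void *demo_vcpu_thread_fn(void *arg)
{
    struct demo_vcpu *cpu = arg;

    while (!cpu->unplug) {
        demo_cpu_exec(cpu);
        cpu->execs++;
        if (cpu->exits_left <= 0)
            cpu->unplug = true;     /* stand-in for shutdown / hot-unplug */
    }
    return NULL;
}

/* Start one thread per vCPU and wait for all of them; returns 0 on success. */
static int demo_start_vcpus(struct demo_vcpu *cpus, int n, int exits_each)
{
    int started = 0, rc = 0;

    for (; started < n; started++) {
        cpus[started] = (struct demo_vcpu){ .exits_left = exits_each };
        if (pthread_create(&cpus[started].thread, NULL,
                           demo_vcpu_thread_fn, &cpus[started]) != 0) {
            rc = -1;
            break;
        }
    }
    for (int i = 0; i < started; i++)
        pthread_join(cpus[i].thread, NULL);
    return rc;
}
```

For example, demo_start_vcpus(cpus, 2, 10) runs two threads; each makes 10 fake KVM_RUN calls spread over 4 passes of the outer loop, because the inner loop returns after runs 3, 6, 9 and 10.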

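2.5 Summary of the user-space QEMU flow

Parse the arguments, create the VM and the virtual CPUs, and obtain the three key descriptors: kvmfd, vmfd and vcpufd.

Create one execution thread per vCPU.

In each thread, start the guest with KVM_RUN, which enters the in-kernel KVM flow.

Repeat the KVM_RUN step in a loop.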

3 Core flow of in-kernel KVM

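As mentioned in the user-space QEMU part, kvm_vcpu_ioctl(cpu, KVM_RUN, 0) is where execution enters the in-kernel KVM handling.

3.1 Preparing to run the guest

linux-5.15.39/virt/kvm/kvm_main.c, line 3764

The actual in-kernel kvm_vcpu_ioctl function is at line 3764 of kvm_main.c.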

```c
static long kvm_vcpu_ioctl(struct file *filp,
                           unsigned int ioctl, unsigned long arg)
{
    //...
    switch (ioctl) {
    case KVM_RUN: {
        //...
        // For KVM_RUN, call kvm_arch_vcpu_ioctl_run
        r = kvm_arch_vcpu_ioctl_run(vcpu);
        trace_kvm_userspace_exit(vcpu->run->exit_reason, r);
        break;
    }
    //...
    }
out:
    mutex_unlock(&vcpu->mutex);
    kfree(fpu);
    kfree(kvm_sregs);
    return r;
}

// arch/x86/kvm/x86.c line 10103
int kvm_arch_vcpu_ioctl_run(struct kvm_vcpu *vcpu)
{
    //...
    if (kvm_run->immediate_exit)
        r = -EINTR;
    else
        r = vcpu_run(vcpu);
    //...
    return r;
}

// arch/x86/kvm/x86.c line 9923
static int vcpu_run(struct kvm_vcpu *vcpu)
{
    int r;
    struct kvm *kvm = vcpu->kvm;
    //...
    for (;;) {
        if (kvm_vcpu_running(vcpu)) {
            // Entry point into guest mode
            r = vcpu_enter_guest(vcpu);
        } else {
            r = vcpu_block(kvm, vcpu);
        }
        // r <= 0: leave the loop and return step by step to user-space QEMU
        // r > 0: keep looping and run the guest again
        if (r <= 0)
            break;
    }
    //...
    return r;
}

// arch/x86/kvm/x86.c line 9532
static int vcpu_enter_guest(struct kvm_vcpu *vcpu)
{
    int r;
    // A series of kvm_check_request() calls
    // that process requests pending for this vCPU
    //...
    // Make sure the guest MMU is loaded
    r = kvm_mmu_reload(vcpu);
    //...
    // Disable kernel preemption
    preempt_disable();
    //...
    vcpu->mode = IN_GUEST_MODE;
    // exit_fastpath = static_call(kvm_x86_run)(vcpu);
    // calls the architecture-specific run function to enter guest mode
    for (;;) {
        exit_fastpath = static_call(kvm_x86_run)(vcpu);
        //...
        break;
    }
    // Reaching this point means the CPU has left guest mode
    vcpu->mode = OUTSIDE_GUEST_MODE;
    //...
    // Re-enable kernel preemption
    preempt_enable();
    // Call the architecture-specific kvm_x86_handle_exit,
    // which handles the exit according to its reason
    r = static_call(kvm_x86_handle_exit)(vcpu, exit_fastpath);
    return r;
}
```

So the call chain is:

kvm_vcpu_ioctl --> kvm_arch_vcpu_ioctl_run

--> vcpu_run --> vcpu_enter_guest

--> static_call(kvm_x86_run)(vcpu)

3.2 Entering the guest

A table of architecture-specific operation functions is defined at arch/x86/kvm/vmx/vmx.c line 7584.

The entry related to running the guest is:

.run = vmx_vcpu_run,

```c
// arch/x86/kvm/vmx/vmx.c line 6628
static fastpath_t vmx_vcpu_run(struct kvm_vcpu *vcpu)
{
    // Checks and preparation
    //...
    vmx_vcpu_enter_exit(vcpu, vmx);
    //...
}

// arch/x86/kvm/vmx/vmx.c line 6606
static noinstr void vmx_vcpu_enter_exit(struct kvm_vcpu *vcpu,
                                        struct vcpu_vmx *vmx)
{
    //...
    // Implemented in assembly in arch/x86/kvm/vmx/vmenter.S
    vmx->fail = __vmx_vcpu_run(vmx, (unsigned long *)&vcpu->arch.regs,
                               vmx->loaded_vmcs->launched);
    //...
}
```

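arch/x86/kvm/vmx/vmenter.S:

1. Save the host state.
2. Load the guest state.
3. Enter guest mode with call vmx_vmenter: the CPU switches from VMX root mode to non-root mode and starts running in the guest's world.
4. On a VM exit, save the guest state and load the host state: the CPU switches from non-root mode back to root mode and returns to the host's world.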

3.3 Handling guest exits

Among the architecture-specific operations defined at arch/x86/kvm/vmx/vmx.c line 7584, the one related to exit handling is:

.handle_exit = vmx_handle_exit,

```c
static int vmx_handle_exit(struct kvm_vcpu *vcpu, fastpath_t exit_fastpath)
{
    int ret = __vmx_handle_exit(vcpu, exit_fastpath);
    //...
    return ret;
}

static int __vmx_handle_exit(struct kvm_vcpu *vcpu, fastpath_t exit_fastpath)
{
    //...
    exit_handler_index = array_index_nospec((u16)exit_reason.basic,
                                            kvm_vmx_max_exit_handlers);
    return kvm_vmx_exit_handlers[exit_handler_index](vcpu);
}

// Handler returns <= 0: go back to user space (0 = QEMU handles the exit
// described in vcpu->run, < 0 = the KVM_RUN ioctl fails with that error)
// Handler returns > 0: the kernel has fully handled the exit and the guest can continue
static int (*kvm_vmx_exit_handlers[])(struct kvm_vcpu *vcpu) = {
    [EXIT_REASON_EXCEPTION_NMI] = handle_exception_nmi,
    [EXIT_REASON_EXTERNAL_INTERRUPT] = handle_external_interrupt,
    [EXIT_REASON_TRIPLE_FAULT] = handle_triple_fault,
    [EXIT_REASON_NMI_WINDOW] = handle_nmi_window,
    [EXIT_REASON_IO_INSTRUCTION] = handle_io,
    [EXIT_REASON_CR_ACCESS] = handle_cr,
    [EXIT_REASON_DR_ACCESS] = handle_dr,
    [EXIT_REASON_CPUID] = kvm_emulate_cpuid,
    [EXIT_REASON_MSR_READ] = kvm_emulate_rdmsr,
    [EXIT_REASON_MSR_WRITE] = kvm_emulate_wrmsr,
    [EXIT_REASON_INTERRUPT_WINDOW] = handle_interrupt_window,
    [EXIT_REASON_HLT] = kvm_emulate_halt,
    [EXIT_REASON_INVD] = kvm_emulate_invd,
    [EXIT_REASON_INVLPG] = handle_invlpg,
    [EXIT_REASON_RDPMC] = kvm_emulate_rdpmc,
    [EXIT_REASON_VMCALL] = kvm_emulate_hypercall,
    [EXIT_REASON_VMCLEAR] = handle_vmx_instruction,
    [EXIT_REASON_VMLAUNCH] = handle_vmx_instruction,
    [EXIT_REASON_VMPTRLD] = handle_vmx_instruction,
    [EXIT_REASON_VMPTRST] = handle_vmx_instruction,
    [EXIT_REASON_VMREAD] = handle_vmx_instruction,
    [EXIT_REASON_VMRESUME] = handle_vmx_instruction,
    [EXIT_REASON_VMWRITE] = handle_vmx_instruction,
    [EXIT_REASON_VMOFF] = handle_vmx_instruction,
    [EXIT_REASON_VMON] = handle_vmx_instruction,
    [EXIT_REASON_TPR_BELOW_THRESHOLD] = handle_tpr_below_threshold,
    [EXIT_REASON_APIC_ACCESS] = handle_apic_access,
    [EXIT_REASON_APIC_WRITE] = handle_apic_write,
    [EXIT_REASON_EOI_INDUCED] = handle_apic_eoi_induced,
    [EXIT_REASON_WBINVD] = kvm_emulate_wbinvd,
    [EXIT_REASON_XSETBV] = kvm_emulate_xsetbv,
    [EXIT_REASON_TASK_SWITCH] = handle_task_switch,
    [EXIT_REASON_MCE_DURING_VMENTRY] = handle_machine_check,
    [EXIT_REASON_GDTR_IDTR] = handle_desc,
    [EXIT_REASON_LDTR_TR] = handle_desc,
    [EXIT_REASON_EPT_VIOLATION] = handle_ept_violation,
    [EXIT_REASON_EPT_MISCONFIG] = handle_ept_misconfig,
    [EXIT_REASON_PAUSE_INSTRUCTION] = handle_pause,
    [EXIT_REASON_MWAIT_INSTRUCTION] = kvm_emulate_mwait,
    [EXIT_REASON_MONITOR_TRAP_FLAG] = handle_monitor_trap,
    [EXIT_REASON_MONITOR_INSTRUCTION] = kvm_emulate_monitor,
    [EXIT_REASON_INVEPT] = handle_vmx_instruction,
    [EXIT_REASON_INVVPID] = handle_vmx_instruction,
    [EXIT_REASON_RDRAND] = kvm_handle_invalid_op,
    [EXIT_REASON_RDSEED] = kvm_handle_invalid_op,
    [EXIT_REASON_PML_FULL] = handle_pml_full,
    [EXIT_REASON_INVPCID] = handle_invpcid,
    [EXIT_REASON_VMFUNC] = handle_vmx_instruction,
    [EXIT_REASON_PREEMPTION_TIMER] = handle_preemption_timer,
    [EXIT_REASON_ENCLS] = handle_encls,
    [EXIT_REASON_BUS_LOCK] = handle_bus_lock_vmexit,
};
```
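To see how the handler table and the ">0 continue / <=0 return to user space" convention of vcpu_run fit together, here is a self-contained user-space toy, not kernel code. Three hypothetical handlers stand in for the real ones, the "guest" is a scripted list of exit reasons, and the unknown-exit path only approximates what __vmx_handle_exit does. The exit reason numbers 1, 10 and 30 follow the Intel SDM basic exit reasons for external interrupt, CPUID and I/O instruction.

```c
#include <stddef.h>

/* Basic exit reason numbers as in the Intel SDM (and EXIT_REASON_* in the kernel). */
enum {
    DEMO_EXIT_EXTERNAL_INTERRUPT = 1,
    DEMO_EXIT_CPUID = 10,
    DEMO_EXIT_IO_INSTRUCTION = 30,
};

struct demo_vmx_vcpu {
    unsigned int exit_reason;   /* basic exit reason of the last (simulated) VM exit */
    int userspace_exit;         /* 1 once an exit has been handed to user space */
};

/* Handled entirely "in the kernel": return > 0 so the guest is re-entered. */
static int demo_handle_external_interrupt(struct demo_vmx_vcpu *v) { (void)v; return 1; }
static int demo_handle_cpuid(struct demo_vmx_vcpu *v) { (void)v; return 1; }

/* Needs the device model in user space: return 0. */
static int demo_handle_io(struct demo_vmx_vcpu *v) { v->userspace_exit = 1; return 0; }

static int (*const demo_exit_handlers[])(struct demo_vmx_vcpu *) = {
    [DEMO_EXIT_EXTERNAL_INTERRUPT] = demo_handle_external_interrupt,
    [DEMO_EXIT_CPUID]              = demo_handle_cpuid,
    [DEMO_EXIT_IO_INSTRUCTION]     = demo_handle_io,
};

#define DEMO_MAX_EXIT_HANDLERS (sizeof(demo_exit_handlers) / sizeof(demo_exit_handlers[0]))

static int demo_handle_exit(struct demo_vmx_vcpu *v)
{
    unsigned int reason = v->exit_reason;

    /* Plain bounds and NULL check; the kernel also clamps the index with
     * array_index_nospec() to block speculative out-of-bounds reads. */
    if (reason >= DEMO_MAX_EXIT_HANDLERS || !demo_exit_handlers[reason]) {
        v->userspace_exit = 1;  /* roughly like reporting KVM_EXIT_INTERNAL_ERROR */
        return 0;
    }
    return demo_exit_handlers[reason](v);
}

/* Loop in the shape of vcpu_run(): re-enter while the handler returns > 0. */
static int demo_vcpu_run(struct demo_vmx_vcpu *v, const unsigned int *exits,
                         size_t n, size_t *entries)
{
    int r = 0;

    *entries = 0;
    for (size_t i = 0; i < n; i++) {
        v->exit_reason = exits[i];  /* pretend the guest ran and exited this way */
        (*entries)++;
        r = demo_handle_exit(v);
        if (r <= 0)
            break;
    }
    return r;
}
```

With the script external interrupt, CPUID, I/O instruction, CPUID, the loop enters the "guest" three times: the first two exits are absorbed by the kernel-side handlers, the I/O exit returns 0, and the fourth entry never happens because control heads back to user space. In real KVM, CPUID is likewise handled in the kernel by kvm_emulate_cpuid, while port I/O for devices emulated by QEMU usually surfaces there as KVM_EXIT_IO.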

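3.4 Summary of the in-kernel KVM flow

Prepare to enter the guest world.

Enter and run the guest.

Handle the guest exit according to its reason: KVM first tries to handle it itself, and if it cannot handle it completely, control returns to user space for QEMU to handle.

After QEMU has done its part, it re-enters the in-kernel KVM flow with KVM_RUN.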


Kanxue ID: CanineTooth

https://bbs.pediy.com/user-home-958869.htm
