(This article, written by Adam Sawicki, Mateusz Jurczyk and Gynvael Coldwind, was originally published in Polish in the Programista magazine in February 2022; Polish version: PDF, printed)

The first step on the classic education path of future programmers is creating a program that prints – most often in the terminal – “Hello, World!”. The program itself is by definition trivial but what happens after it is launched is not – not entirely at least. In this article, we will trace the execution path of the "Hello World" micro-program written in Python and run on Windows, starting from a single call to the high-level print function, through the subsequent levels of abstraction of the interpreter, operating system and graphics drivers, and ending with the display of the corresponding pixels on the screen. As it turns out, this path in itself is neither simple nor short, but definitely fascinating.

Python code

The code we're going to start with is trivial:

print("Hello World")

The effect of its action is both predictable and obvious:

However, what makes our computer deem it appropriate to change the color of several hundred selected pixels on the screen when executing the above program?

The first step turns out to be the compilation of the indicated file containing our source code (hello.py). Some readers may be surprised already at this point – “But wait, isn't Python – unlike C or C++ – an interpreted language?”. Python is indeed often called a scripting language, and these, by definition, shouldn't be compiled, should they?

In practice, many popular scripting languages such as PHP, Ruby, Lua, JavaScript, Perl or Python are compiled into their own variants of bytecode – a binary form that, although incompatible with the machine language of real processors¹, is much easier to quickly interpret and execute than pure source code. Python is actually kind enough to provide modules that give us insight into individual parts of this process from the language itself.

¹ With the minor exception of Jazelle DBX, a special mode in some older processors of the ARM family, which allowed direct execution of Java bytecode.

As a reminder, the compilation process – in a nutshell – can be reduced to three steps:

- lexical analysis (performed by the lexer), which outputs a list of tokens,

- syntactic analysis (performed by the parser), which outputs an Abstract Syntax Tree (AST),

- code generation – or, in our case, bytecode generation.

The effect of the lexical analysis can be viewed using the tokenize module launched from the command line. It will result in printing a list of tokens, the line and position of the given token in the source file, and text content (if any).

>python -m tokenize hello.py

0,0-0,0: ENCODING 'utf-8'

1,0-1,5: NAME 'print'

1,5-1,6: OP '('

1,6-1,19: STRING '"Hello World"'

1,19-1,20: OP ')'

1,20-1,21: NEWLINE '\n'

2,0-2,0: ENDMARKER ''

Our simple Hello World consists of just a few tokens: the print name, ( and ) operators, and the "Hello World" string literal. In addition to that, there is also an irrelevant new line character, as well as tokens containing metadata, such as the encoding used in the source file or the end-of-data marker.
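The same token stream can also be obtained programmatically. The snippet below is a minimal sketch that feeds the source of hello.py to the tokenize module as an in-memory byte string; the SOURCE constant and the token_names helper are our own illustrative names, not part of the standard library.

```python
import io
import tokenize

# The contents of hello.py, kept in memory for this illustration.
SOURCE = b'print("Hello World")\n'


def token_names(source: bytes) -> list:
    """Return the names of the token types produced for the given source."""
    tokens = tokenize.tokenize(io.BytesIO(source).readline)
    return [tokenize.tok_name[tok.type] for tok in tokens]


# Print each token: type name, text, and (line, column) start/end positions.
for tok in tokenize.tokenize(io.BytesIO(SOURCE).readline):
    print(tokenize.tok_name[tok.type], repr(tok.string), tok.start, tok.end)
```

Note that tokenize.tokenize expects a readline callable returning bytes, which is why the source is wrapped in io.BytesIO.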

The tokens are then passed to a parser that, using grammatical rules, generates an AST tree. The output of the parser can be viewed using the ast module, which, like tokenize before, can also be used directly from the command line.

>python -m ast hello.py

Module(

   body=[

      Expr(

         value=Call(

            func=Name(id='print', ctx=Load()),

            args=[

               Constant(value='Hello World')],

            keywords=[]))],

   type_ignores=[])

As in the case of the list of tokens, the AST itself is limited to only a few nodes: the Module root holds a single expression (Expr) whose value is a Call node, which in turn is connected to a Name node identifying the function and to one argument, a Constant with the value “Hello World”.

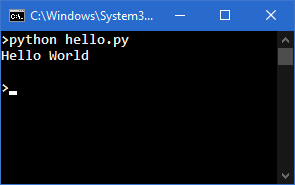

It is worth adding that several simple tools have been created to allow displaying the AST tree of programs written in Python in the form of an actual tree. The result of using one of them – AST visualizer by quantifiedcode [1] – can be seen in Figure 1.

Figure 1. “Hello World” AST tree
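The nodes described above can also be walked directly from Python. The following is a small sketch that uses ast.parse on the source text instead of the command-line interface; the variable names are ours.

```python
import ast

# Parse the source text into an AST (the same tree that "python -m ast" prints).
tree = ast.parse('print("Hello World")', filename="hello.py")

expr = tree.body[0]   # the single expression statement attached to Module
call = expr.value     # the Call node held by that expression

print(type(expr).__name__, type(call).__name__)
print(call.func.id, [arg.value for arg in call.args])
print(ast.dump(tree))
```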

In the next step, bytecode is generated based on the AST tree. Of course it can also be previewed, this time using the dis module, which displays all instructions in text form.

>python -m dis hello.py

1 0 LOAD_NAME 0 (print)

2 LOAD_CONST 0 ('Hello World')

4 CALL_FUNCTION 1

6 POP_TOP

8 LOAD_CONST 1 (None)

10 RETURN_VALUE

A brief description of all instructions can be found in the documentation of the dis module [2]; for the purposes of this article, we will limit ourselves to the operations used in our Hello World:

- LOAD_NAME name – pushes the value of the global variable with the given name on the stack²,

- LOAD_CONST constant – pushes the given constant on the stack³,

- CALL_FUNCTION number_of_parameters – calls the function removed from the stack and provides it with the specified number of parameters popped from the stack.

- POP_TOP – pops one element from the top of the stack.

- RETURN_VALUE – exits the function, returning an element from the top of the stack.

² We have used a certain simplification here, as in fact we won't find the “print” string itself in the bytecode, but an index into the name array (co_names) under which this string can be found.

³ As with LOAD_NAME, we will find only the index in the array of constants (co_consts) in the bytecode.

As the description of the above instructions suggests, the reference Python implementation uses a stack machine and – unlike for example ARM or x86 processors – has no registers. Hence, the code is executed by pushing values on the stack, and then using instructions that remove (consume) arguments from the stack and put the result of the operation back on the stack⁴.

⁴ Jumps aside, Python bytecode could be basically compared to the Reverse Polish Notation.

Our program boils down to six instructions, which respectively:

- Push the print function on the stack,

- Push the string “Hello World” on the stack,

- Call a function popped from the stack with one argument (i.e., print('Hello World')),

- Remove the None value returned by the print function from the stack,

- Place the None value on the stack (while this seems redundant given the previous step, the interpreter couldn't be certain that print really returned None),

- Finally, take a value from the top of the stack and return it (this is due to the return None statement being implicitly present at the end of each function, including the global module function as observed), which causes the program to end.
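To make the stack discipline more tangible, the six steps above can be replayed on a toy stack machine. This is only an illustrative sketch written for this article, not how CPython is implemented: the run function, the tuple-based program encoding and the constants below are our own assumptions, and only the five opcodes discussed here are supported.

```python
import builtins

# The six instructions of our program as (opcode name, argument) pairs.
PROGRAM = [
    ("LOAD_NAME", 0),
    ("LOAD_CONST", 0),
    ("CALL_FUNCTION", 1),
    ("POP_TOP", None),
    ("LOAD_CONST", 1),
    ("RETURN_VALUE", None),
]
NAMES = ("print",)               # plays the role of co_names
CONSTS = ("Hello World", None)   # plays the role of co_consts


def run(program, names, consts, namespace):
    """Execute a tiny subset of Python bytecode on an explicit stack."""
    stack = []
    for opname, arg in program:
        if opname == "LOAD_NAME":
            stack.append(namespace[names[arg]])
        elif opname == "LOAD_CONST":
            stack.append(consts[arg])
        elif opname == "CALL_FUNCTION":
            # Arguments were pushed left to right, so they pop in reverse.
            args = [stack.pop() for _ in range(arg)]
            args.reverse()
            func = stack.pop()
            stack.append(func(*args))
        elif opname == "POP_TOP":
            stack.pop()
        elif opname == "RETURN_VALUE":
            return stack.pop()
        else:
            raise ValueError("unsupported opcode: " + opname)


result = run(PROGRAM, NAMES, CONSTS, vars(builtins))
```

Passing vars(builtins) as the namespace is a simplification: the real LOAD_NAME consults local, global and built-in names in turn.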

Code object

Bytecode is not the only result of compiling the source code – there is also a whole series of metadata stored in a code class object. In Python version 3, the code class is not a basic type (such as str or int), but it can be referenced using the types module:

>>> import types

>>> types.CodeType

<class 'code'>

The inspection of the code object of our program will be a little more complicated. The easiest way to interact with this object is to force our script to be compiled into a file (python -m compileall hello.py), and then look at the resulting __pycache__/hello.cpython-39.pyc file (Listing 1).

>hexdump hello.cpython-39.pyc

00000000: 61 0D 0D 0A 00 00 00 00 - 83 F2 49 61 15 00 00 00 |a Ia |

00000010: E3 00 00 00 00 00 00 00 - 00 00 00 00 00 00 00 00 | |

00000020: 00 02 00 00 00 40 00 00 - 00 73 0C 00 00 00 65 00 | @ s e |

00000030: 64 00 83 01 01 00 64 01 - 53 00 29 02 7A 0B 48 65 |d d S ) z He|

00000040: 6C 6C 6F 20 57 6F 72 6C - 64 4E 29 01 DA 05 70 72 |llo WorldN) pr|

00000050: 69 6E 74 A9 00 72 02 00 - 00 00 72 02 00 00 00 FA |int r r |

00000060: 08 68 65 6C 6C 6F 2E 70 - 79 DA 08 3C 6D 6F 64 75 | hello.py <modu|

00000070: 6C 65 3E 01 00 00 00 F3 - 00 00 00 00 |le> |

0000007c;

Listing 1. Hexadecimal view of the resulting file
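As a side note, the first 16 bytes of the dump are a header that precedes the serialized code object. The sketch below decodes those bytes, assuming the layout used by CPython 3.7 and later for timestamp-based .pyc files (4-byte magic, 4-byte flags, 4-byte source modification time, 4-byte source size); the HEADER constant is copied from Listing 1 and the helper name is ours.

```python
import struct

# First 16 bytes of the file shown in Listing 1.
HEADER = bytes.fromhex("610d0d0a" "00000000" "83f24961" "15000000")


def parse_pyc_header(header: bytes) -> dict:
    """Decode a timestamp-based .pyc header (all fields little-endian)."""
    magic, flags, mtime, size = struct.unpack("<4sIII", header[:16])
    return {
        "magic": magic,
        # The first two bytes of the magic encode a version-specific number.
        "magic_number": int.from_bytes(magic[:2], "little"),
        "flags": flags,          # 0 means: validate by source mtime and size
        "source_mtime": mtime,   # Unix timestamp of hello.py
        "source_size": size,     # size of hello.py in bytes
    }


info = parse_pyc_header(HEADER)
print(info)
```

The decoded source size is 21, which matches the 20 characters of our one-line program plus the trailing new line character. If the flags field were non-zero (a hash-based .pyc), the last eight bytes would hold a hash of the source instead.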

Luckily, we do not have to analyze the binary data manually. Instead, we will use the marshal module from the Python standard library to deserialize the above file (omitting the 16-byte header, which is irrelevant here) and print out information about the object of type code (types.CodeType). For this purpose, we will use the following short helper program:

import marshal

with open("__pycache__/hello.cpython-39.pyc", "rb") as f:

    marshaled_obj = f.read()[16:]  # Ignore 16 bytes of the header.

obj = marshal.loads(marshaled_obj)  # Return a code type object.

print("-=- Code object")

for field in dir(obj):

    if not field.startswith("co_"):

        continue

    print(" %-20s: %s" % (field, getattr(obj, field)))

After its launch, we should receive quite a lot of interesting information:

-=- Code object

co_argcount : 0

co_cellvars : ()

co_code : b'e\x00d\x00\x83\x01\x01\x00d\x01S\x00'

co_consts : ('Hello World', None)

co_filename : hello.py

co_firstlineno : 1

co_flags : 64

co_freevars : ()

co_kwonlyargcount : 0

co_lnotab : b''

co_name : <module>

co_names : ('print',)

co_nlocals : 0

co_posonlyargcount : 0

co_stacksize : 2

co_varnames : ()

While analyzing the result of our script, you can immediately notice that most of the fields are either empty (like co_cellvars, co_freevars, co_lnotab, co_varnames) or zeroed (like co_argcount, co_kwonlyargcount, co_nlocals, co_posonlyargcount). This is influenced by both the simplicity of the source code and the fact that we are dealing with code located in the global space of the module – thus all fields related to function-specific metadata remain unused.

A full description of all fields can be found in the documentation of the inspect module [3], but we would like to draw your attention to a few of them:

- co_code – an array of bytes (formally an object of the bytes type) containing the previously discussed code in compiled binary form, i.e., bytecode.

- co_consts – a tuple containing all the constants used by the code. In our case it is limited to the string "Hello World" and None (used only implicitly), but for larger programs and modules, code objects of all global functions would be in here, including those responsible for the actual creation of classes defined in the code.

- co_names – a tuple with global names used in the code, i.e., references to global functions, names, types, etc.

- co_name, co_filename, co_firstlineno and (empty in our case) co_lnotab – data that map the binary code into specific lines in the source code, which is very useful for displaying error information.
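The fields listed above can also be examined without going through a .pyc file at all. As a minimal sketch, the built-in compile function returns the same kind of code object directly from source text; note that the exact field values (and the set of available co_ attributes) may differ between CPython versions.

```python
import types

# Compile the source in memory; "hello.py" is only recorded as co_filename.
code = compile('print("Hello World")\n', "hello.py", "exec")

print(type(code) is types.CodeType)
for field in ("co_name", "co_filename", "co_firstlineno", "co_names", "co_consts"):
    print(" %-20s: %s" % (field, getattr(code, field)))

# A code object compiled in "exec" mode can be executed directly.
exec(code)
```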

If we wanted to run our “Hello World” code by reconstructing a code object from the information displayed by our helper program, we would first have to create the code object itself, and then the function object that binds the code object to a specific set of global variables:

import types

# The order of the fields of the CodeType constructor may differ

# for other versions of CPython.

# In that case: help(types.CodeType)

c = types.CodeType(

    # argcount, posonlyargcount, kwonlyargcount, nlocals, stacksize, flags

    0, 0, 0, 0, 2, 64,

    b'e\x00d\x00\x83\x01\x01\x00d\x01S\x00',

    ('Hello World', None),

    ('print',), (), 'hello.py',

    '<module>', 1, b'')

f = types.FunctionType(c, globals())

f()

The effect of this program looks the same as in its original version:

>python3 rec.py

Hello World

CPython Virtual Machine and the print function

At the heart of the virtual machine executing the bytecode is the _PyEval_EvalFrameDefault function, which can be found in Python source code in the Python/ceval.c file. The function itself is quite long – it consists of over 3000 lines – but the implementations of individual operations contained within are quite short and to some extent readable even to people who do not know the nuances of the CPython project. For example, this is what the implementation of the POP_TOP opcode used in our script looks like:

case TARGET(POP_TOP): {

    PyObject *value = POP();

    Py_DECREF(value);

    FAST_DISPATCH();

}

However, we will skip the detailed analysis of the instruction code – it is beyond the scope of this article – and instead move on to the print function itself.

The print function – or rather its C implementation called builtin_print – can be found in the Python/bltinmodule.c file. Its operation can be boiled down to the following points:

- Acquire the sys.stdout object (or another object passed in the optional file argument) that all print operations will use.

- For each unnamed argument of the function:

- If this is the second or subsequent argument, print a separator (a space character by default).

- Print the argument.

- If required, print a line break.

- If required, empty the buffer, i.e., perform the flush() operation.
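The points above translate almost directly into Python. The function below is a simplified sketch of that logic and not the actual implementation: my_print is a hypothetical name, and unlike the real built-in it does not accept None for sep and end.

```python
import io
import sys


def my_print(*args, sep=" ", end="\n", file=None, flush=False):
    """Simplified re-implementation of the steps performed by builtin_print."""
    if file is None:
        file = sys.stdout          # looked up at call time, like the built-in
    for index, arg in enumerate(args):
        if index > 0:
            file.write(sep)        # separator before the second and later args
        file.write(str(arg))
    file.write(end)                # line break ("\n" by default)
    if flush:
        file.flush()


buffer = io.StringIO()
my_print("Hello", "World", file=buffer)
my_print("Hello World")
```

Because sys.stdout is resolved on every call rather than once at import time, replacing that object affects all subsequent output.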

The actual output operations are performed using one of two functions: PyFile_WriteObject and, only for C string literals such as a space or a new line character, PyFile_WriteString. Since the latter function ultimately just calls the former anyway, we will focus on PyFile_WriteObject. This function can be found in the Objects/fileobject.c file, and its operation, in simple terms, looks like this:

s = str(objectToPrint)

sys.stdout.write(s)

The above code shows that print eventually calls the write method belonging to the sys.stdout object anyway. Consequently, if we overwrite this object and introduce our own write method, we can capture everything that is printed using print – this is illustrated by the following experiment:

#!/usr/bin/python3

import sys

class BetterStdOut:

    def __init__(self, org):

        self._org = org

    def write(self, v):

        self._org.write(v.upper())

    def __getattr__(self, name):

        return getattr(self._org, name)

sys.stdout = BetterStdOut(sys.stdout)

print("all caps everywhere")

After execution, we should see the following result:

>python3 over.py

ALL CAPS EVERYWHERE

System of connected vessels and sys.stdout

Going back to our initial program, we already know that “Hello World” will eventually be passed to the write method of the sys.stdout object. In Python 3.x, sys.stdout is basically a stack of three levels of helper I/O classes, responsible for converting and encoding, buffering and ultimately transferring data to – in our case – the console. More specifically, this stack consists of the following classes:

- io.TextIOWrapper responsible for encoding Unicode strings fed to the write method to UTF-8, optional conversion of line break sequences (in Windows, that's the translation of "\n" into "\r\n") and buffering at the line level,

- io.BufferedWriter (visible in the sys.stdout.buffer field) responsible for buffering at the byte level⁵,

- io._WindowsConsoleIO (visible in the sys.stdout.buffer.raw field) responsible for direct interaction with the console API.

⁵ The flush() operation is executed when at least one new line character is encountered in the buffer, or when the number of bytes in the buffer exceeds 8192.

It is worth noting that this set may be different if the output of the program is redirected to another process (e.g. python hello.py | more) or saved to a file (e.g. python hello.py > file.txt).
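The three-level stack can be reproduced on any platform by assembling it by hand. In the sketch below, FakeConsole is a hypothetical stand-in for io._WindowsConsoleIO that merely records the bytes it receives; the explicit newline="\r\n" argument imitates the line break translation performed on Windows.

```python
import io


class FakeConsole(io.RawIOBase):
    """Hypothetical raw layer: records every chunk of bytes written to it."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def writable(self):
        return True

    def write(self, data):
        self.chunks.append(bytes(data))
        return len(data)


raw = FakeConsole()
buffered = io.BufferedWriter(raw)                       # byte-level buffering
text = io.TextIOWrapper(buffered, encoding="utf-8",
                        newline="\r\n", line_buffering=True)

text.write("Hello World\n")   # the new line triggers a flush down the stack

print(text.buffer is buffered, text.buffer.raw is raw)
print(raw.chunks)
```

After the write, the raw layer has received the UTF-8 encoded text with "\n" already translated into "\r\n".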

The data transfer to the console itself is finally done in the implementation of the write method of the io._WindowsConsoleIO class, i.e., in the _io__WindowsConsoleIO_write_impl function in the Modules/_io/winconsoleio.c file. In short, this function does two things:

- Converts the received stream of bytes from UTF-8 to UTF-16 (as internally used by Windows).

- Calls the WinAPI WriteConsoleW function, which exports data from our process to the console and which we will devote the next section to.
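Both steps can be approximated from Python itself with ctypes. This is a rough sketch rather than a copy of the CPython code: the conversion uses Python's own codecs instead of the Windows conversion routines, the helper names are ours, and the WriteConsoleW call only succeeds on Windows when the standard output is an actual console (not a file or a pipe).

```python
import ctypes
import sys

STD_OUTPUT_HANDLE = 0xFFFFFFF5   # (DWORD)-11 in the Windows headers


def utf8_to_utf16(data: bytes) -> bytes:
    """Re-encode UTF-8 bytes as little-endian UTF-16, as used by Windows."""
    return data.decode("utf-8").encode("utf-16-le")


def write_console(data: bytes) -> int:
    """Windows only: pass UTF-8 data to WriteConsoleW, return units written."""
    from ctypes import wintypes

    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)
    kernel32.GetStdHandle.argtypes = [wintypes.DWORD]
    kernel32.GetStdHandle.restype = wintypes.HANDLE
    kernel32.WriteConsoleW.argtypes = [
        wintypes.HANDLE, wintypes.LPCWSTR, wintypes.DWORD,
        ctypes.POINTER(wintypes.DWORD), wintypes.LPVOID,
    ]
    kernel32.WriteConsoleW.restype = wintypes.BOOL

    text = data.decode("utf-8")
    units = len(utf8_to_utf16(data)) // 2    # number of UTF-16 code units
    written = wintypes.DWORD(0)
    handle = kernel32.GetStdHandle(STD_OUTPUT_HANDLE)
    if not kernel32.WriteConsoleW(handle, text, units,
                                  ctypes.byref(written), None):
        raise ctypes.WinError(ctypes.get_last_error())
    return written.value


if sys.platform == "win32" and sys.stdout.isatty():
    write_console("Hello World\r\n".encode("utf-8"))
```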

Calling WriteConsoleW is also where we finally leave the code of both our Python script and the CPython execution environment itself. The journey so far can be seen in the form of a stack trace shown in Listing 2.

KERNELBASE!WriteConsoleW

python39!_io__WindowsConsoleIO_write_impl+0x148 [\Modules\_io\winconsoleio.c @ 1004]

python39!_io__WindowsConsoleIO_write+0x7f [\Modules\_io\clinic\winconsoleio.c.h @ 319]

python39!method_vectorcall_O+0x9d [\Objects\descrobject.c @ 463]

python39!_PyObject_VectorcallTstate+0x2c [\Include\cpython\abstract.h @ 118]

python39!PyObject_VectorcallMethod+0x82 [\Objects\call.c @ 828]

python39!PyObject_CallMethodOneArg+0x2f [\Include\cpython\abstract.h @ 208]

python39!_bufferedwriter_raw_write+0x99 [\Modules\_io\bufferedio.c @ 1822]

python39!_bufferedwriter_flush_unlocked+0x7c [\Modules\_io\bufferedio.c @ 1871]

python39!buffered_flush_and_rewind_unlocked+0x12 [\Modules\_io\bufferedio.c @ 799]

python39!buffered_flush+0x77 [\Modules\_io\bufferedio.c @ 826]

python39!method_vectorcall_NOARGS+0xa0 [\Objects\descrobject.c @ 435]

python39!_PyObject_VectorcallTstate+0x2c [\Include\cpython\abstract.h @ 118]

python39!PyObject_VectorcallMethod+0x82 [\Objects\call.c @ 828]

python39!PyObject_CallMethodNoArgs+0x1c [\Include\cpython\abstract.h @ 198]

python39!_io_TextIOWrapper_write_impl+0x373 [\Modules\_io\textio.c @ 1724]

python39!_io_TextIOWrapper_write+0x3c [\Modules\_io\clinic\textio.c.h @ 411]

python39!cfunction_vectorcall_O+0xaa [\Objects\methodobject.c @ 513]

python39!_PyObject_VectorcallTstate+0x2e [\Include\cpython\abstract.h @ 119]

python39!PyObject_CallOneArg+0x2d [\Include\cpython\abstract.h @ 189]

python39!PyFile_WriteObject+0x5d [\Objects\fileobject.c @ 141]

python39!PyFile_WriteString+0x42 [\Objects\fileobject.c @ 165]

python39!builtin_print+0x132 [\Python\bltinmodule.c @ 1892]

Listing 2. Full call stack from print() in Python to WriteConsoleW() in WinAPI

Consoles in Windows

At this stage, we already know that using print in Python leads to the interpreter calling the WriteConsoleW system function on the given string. However, the question remains how the function carries out its task under the hood. To understand it fully, we must first go back a few or even several dozen years in history.

Text mode and the command line have been an integral part of the operating systems developed by Microsoft from the very beginning. In MS-DOS, they served as the primary way to interact with a computer, and at the time of the introduction of the graphical interface in Windows 1.0, text commands were still the only way to perform many operations. The command line did not fall into oblivion even after the release of the first Windows from the NT family, which became completely independent of the DOS environment and offered a fully windowed mode of operation. The basic command line called command.com and later cmd.exe survived in Windows for the next 20 years – until today, still enjoying great popularity among programmers, administrators, and power users. It is safe to say that it is one of the oldest, if not the oldest, Windows programs still in existence.

It is worth noting that the command line and the console are two very closely related but still different things. The command line is based on the console as an I/O interface, from which it retrieves a command or a series of commands to execute, and then prints their results. It can also perform more complex tasks, such as interpreting and executing scripting languages. Examples of command lines in Windows are the already mentioned Command Prompt (cmd.exe) and PowerShell. In turn, the console is an internal part of the operating system responsible for the graphical features of the text mode, i.e., drawing a window and text characters, enabling selection/copying of text, etc. It also includes the implementation of the so-called Console API [4], i.e., a set of system functions enabling programmatic operations on consoles (e.g. GetConsoleFontSize, ReadConsole, SetConsoleTitle, etc.). Thanks to the built-in support for consoles in Windows, simple programs running in text mode do not have to deal with the graphical aspect of communication with the user – they can call a few simple API functions (or even standard library functions), and the rest is taken care of by the operating system. In this article, we will focus on the implementation details of the default Windows console interface – with a new alternative being currently developed by Microsoft in the form of the Windows Terminal project [5].

For a given process to be assigned a console window (new or existing), one of the following conditions must be met:

- The program is compiled as a console one. In Visual Studio, the /SUBSYSTEM:CONSOLE flag is responsible for this, and for the gcc and clang compilers, this is the default option (unless you use the -Wl,--subsystem,windows parameter, or -mwindows for short). In the resulting executable file, this is indicated by the Subsystem field in the IMAGE_OPTIONAL_HEADER structure, which can be set to either IMAGE_SUBSYSTEM_WINDOWS_GUI (GUI program) or IMAGE_SUBSYSTEM_WINDOWS_CUI (console program).

- The program calls the AllocConsole function to create a new console, or AttachConsole to attach to a console of an existing process.
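The Subsystem field mentioned in the first condition is easy to read by hand. The following sketch locates it using the documented PE layout (the e_lfanew pointer at offset 0x3C, the 4-byte signature, the 20-byte file header, and the Subsystem field at offset 68 of the optional header in both the 32-bit and 64-bit variants); read_subsystem is a helper name of our own, and the check of sys.executable at the end runs only on Windows.

```python
import struct
import sys

IMAGE_SUBSYSTEM_WINDOWS_GUI = 2
IMAGE_SUBSYSTEM_WINDOWS_CUI = 3


def read_subsystem(image: bytes) -> int:
    """Return the Subsystem value stored in the headers of a PE image."""
    if image[:2] != b"MZ":
        raise ValueError("not an MZ executable")
    (e_lfanew,) = struct.unpack_from("<I", image, 0x3C)
    if image[e_lfanew:e_lfanew + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # Skip the signature (4 bytes) and IMAGE_FILE_HEADER (20 bytes).
    optional_header = e_lfanew + 4 + 20
    (subsystem,) = struct.unpack_from("<H", image, optional_header + 68)
    return subsystem


if sys.platform == "win32":
    with open(sys.executable, "rb") as f:
        value = read_subsystem(f.read(4096))
    print("console" if value == IMAGE_SUBSYSTEM_WINDOWS_CUI else value)
```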

There are some interesting conclusions to be drawn from this. First of all, the matter of whether a given program is a console one or not is largely conventional, because an application marked as a console one can still display windows, and a nominally graphical application can create and use a console. Secondly, it is a Windows design decision that one program can only operate on a single console at a time, while many processes can be assigned to one console. This happens, for example, when the cmd.exe command line runs another text-based program (such as python.exe) – both have access to a common text interface.

For many years, the appearance and operation of consoles in Windows did not change – up until and including Windows 8.1, the console windows offered only the simplest customization options. The user could, among others, change the size of the window, choose one of several predefined typefaces and font sizes or set the text and background color to one of 16 colors. One of the more troublesome issues was the inability to copy a continuous text broken into two or more lines – the console allowed only to select rectangular areas of the terminal. Implementation at the system level was also archaic. The csrss.exe system process was responsible for managing and drawing windows, while communicating with all other applications via so-called LPC ports. Not only did this result in uglier console windows than the windows of ordinary applications (e.g., in Windows XP, console frames were not subject to styling [6]), but it also often led to various security issues.

The first change in the above architecture took place in Windows 7. At that time, console support was delegated from CSRSS to a separate process called conhost.exe, which runs with the permissions of a regular user. CSRSS’ only responsibility was to start conhost.exe when another application requested a new console window. This step significantly improved the security and stability of the system.

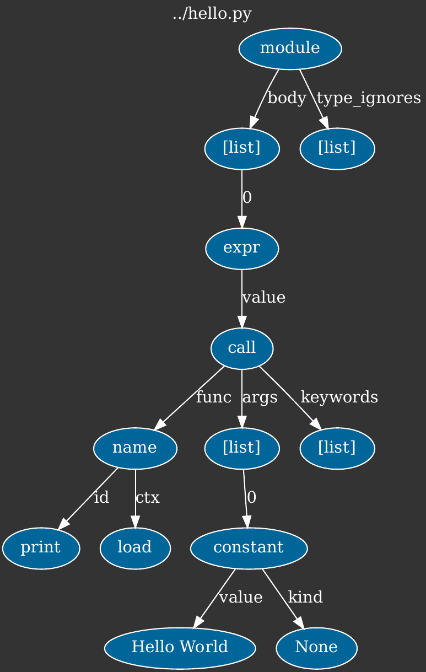

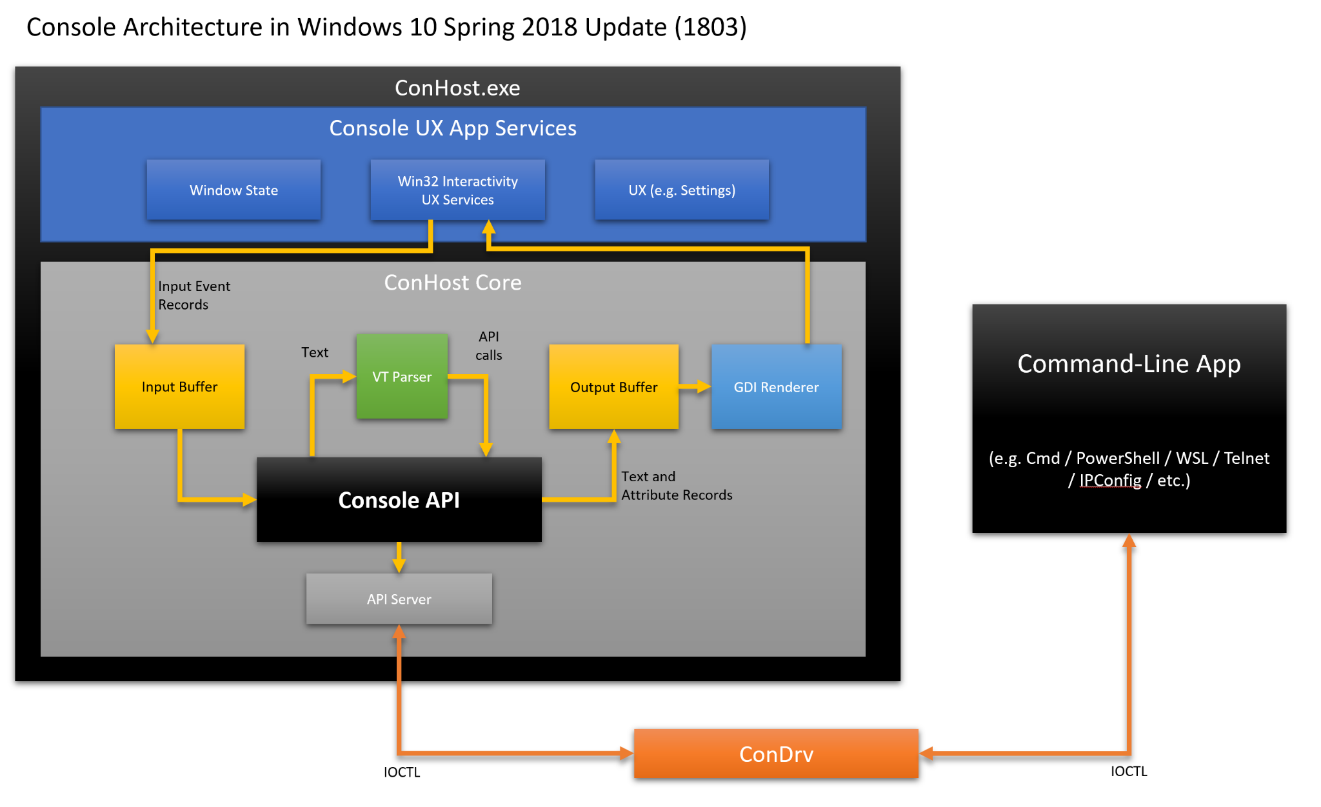

Further changes occurred in Windows 8, where a kernel-mode driver called condrv.sys was introduced. From that time until today, it is responsible for running conhost.exe for programs with a text interface and transferring information between them. Each console program has several open handles to pseudo-files supported by this module, such as \Device\ConDrv\Connect, \Device\ConDrv\Input, \Device\ConDrv\Output, etc. Through them, text programs can read, write, and change console properties, and the queries they send are wrapped and forwarded to conhost.exe, which performs actual operations on the window or I/O buffers. Both the conhost process and the names of the active handles can be easily observed using Process Explorer, as shown in Figure 2. A general outline of the console architecture in Windows 8 and later is shown in Figure 3.

Figure 2. Process Explorer showing the conhost.exe process and open file handles in python.exe

Figure 3. Windows console architecture (source: [7], author: Rich Turner)

During the development of Windows 10, Microsoft put even more emphasis on modernizing the console, thanks to which it is now possible to select text by lines, display colors from a 24-bit palette or take advantage of text formatting using the so-called ANSI Escape Codes (called Virtual Terminal Sequences by Microsoft). According to official sources [8], Microsoft set up a special team in 2014 to improve the quality of the console's code and to expand its capabilities while maintaining backward compatibility. One nice side effect of this initiative (from the researcher's perspective) was the opening of much of the source code of the new Windows Terminal, as well as the conhost helper program, on GitHub [9]. Both of these components are implemented entirely in C++. Thanks to this, we can learn a lot about the operation of consoles at the source, without having to refer to reverse engineering.
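As a brief illustration of the escape codes mentioned above, the sketch below wraps a string in the standard "set foreground color" sequence with a 24-bit RGB value. The colorize helper is our own; on some Windows configurations the sequences are interpreted only after virtual terminal processing has been enabled for the console, so the output may otherwise show the raw codes.

```python
ESC = "\x1b"
RESET = ESC + "[0m"   # restores the default text attributes


def colorize(text: str, red: int, green: int, blue: int) -> str:
    """Wrap text in a 24-bit foreground color escape sequence."""
    return f"{ESC}[38;2;{red};{green};{blue}m{text}{RESET}"


print(colorize("Hello World", 0, 200, 0))
```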

At this point, it is worth clarifying one more technical detail. The code responsible for displaying the console has two rendering engines: one based on the traditional GDI graphical interface and the other – newer – based on DirectX. The latter supports modern font extensions, such as variable fonts (i.e., fonts in which you can control certain parameters, such as the thickness of glyphs) or color fonts (allowing, for example, displaying emoji symbols). The DirectX engine is used by Windows Terminal, while regular consoles (including the one used by cmd.exe) use GDI by default, unless you set the undocumented HKCU\Console\UseDx value of the REG_DWORD type to 1 in the registry. However, we will not do this and in this article we will trace the execution path leading through GDI.

But let's return to the main topic of the article. The WriteConsoleW function from kernelbase.dll, which we got to in Python, sends an IOCTL message to the \Device\ConDrv\Output pseudo-file with code IOCTL_CONDRV_ISSUE_USER_IO (numerically 0x500016). This sounds complicated but it only means that the request to print the text is forwarded to the previously mentioned condrv.sys driver. It then passes this information to the conhost.exe process, which, depending on the operation, decides what to do with it next. From this point on, we can follow the open source code.

The main function receiving commands in conhost.exe is ConsoleIoThread (implemented in terminal/src/host/srvinit.cpp). When it receives a new message, the implementation goes through the following methods:

- IoSorter::ServiceIoOperation

- IoDispatchers::ConsoleDispatchRequest

- ApiSorter::ConsoleDispatchRequest

Then, we land in one of the functions from the ApiDispatchers namespace that supports the given type of operation. If we wanted to live track exactly what low-level operations are performed on a given console, it is enough to attach to conhost with the WinDbg debugger, load symbols, and then set breakpoints on all functions from the ApiDispatchers space using the following command:

bm conhost!ApiDispatchers::*

In our test Windows 10 environment, this results in setting 52 breakpoints, triggered whenever a corresponding operation is performed on the console window. When you print some text, the implementation reaches ApiDispatchers::ServerWriteConsole, which is the equivalent of the WriteConsole function called on the Python side. Next, the execution flow goes through the following nested calls:

- ApiRoutines::WriteConsoleWImpl

- WriteConsoleWImplHelper

- DoWriteConsole

- WriteChars

- WriteCharsLegacy

Here WriteCharsLegacy implements the core logic of the operation, by storing the text in question in the output buffer (with the help of further classes: SCREEN_INFORMATION, TextBuffer and ROW). It is worth noting that the drawing of glyphs in the window doesn't happen just yet – this is the job of a separate, dedicated graphical thread. And so, after writing data to the output buffer, it is read and displayed asynchronously, for example under the following call stack, when drawing the next window frame:

#0 Microsoft::Console::Render::GdiEngine::_FlushBufferLines

#1 Microsoft::Console::Render::GdiEngine::UpdateDrawingBrushes

#2 Microsoft::Console::Render::Renderer::_UpdateDrawingBrushes

#3 Microsoft::Console::Render::Renderer::_PaintBufferOutput

#4 Microsoft::Console::Render::Renderer::_PaintFrameForEngine

#5 Microsoft::Console::Render::Renderer::PaintFrame

#6 Microsoft::Console::Render::RenderThread::_ThreadProc

Currently in Windows 10, the specific GDI function used by conhost to display text is PolyTextOutW, although the commit history on GitHub shows that it was changed to ExtTextOutW in mid-2021 [10]. These functions are the ones responsible for converting characters encoded in UTF-16 into letter shapes, which we then see on the screen.

Text rasterization

As you probably know, fonts are files containing the graphical representation of characters that allows displaying and printing text of various types, sizes and other characteristics. In the early years of computers, they were stored in a bitmap format – i.e., for each pair of glyph and its size, a monochrome image was assigned to represent its appearance. However, this solution had many obvious drawbacks, thus not long afterwards it was supplanted by vector fonts, which describe shapes using curves. The first format of this type was Type 1, designed by Adobe in 1984 (closely related to the PostScript language), soon to be joined by the TrueType (by Apple) and OpenType (developed by Microsoft and Adobe) formats released in the 90s. The latter two have become the de-facto standard and are widely used to this day.

With the development of digital typography and vector formats, the complexity of fonts and the code that supports them has been and still is on the rise. From the official OpenType specification [11], we learn that there are about 50 types of SFNT tables (i.e., smaller "blocks" from which fonts are built), eight of which are listed as mandatory. Each of them represents a different type of data (both in binary formatting and conceptually), while at the same time many of them are tightly interconnected in terms of functionality. If we add to this the fact that both TrueType and OpenType fonts contain mini-programs describing the shapes and methods of drawing individual characters (with their own stack, arithmetic operators, conditional operators, etc.), it becomes apparent how difficult the files are to handle correctly and safely. At the same time, most of today's font engines have their roots in code from the 90s, so they are typically written in C and C++ (languages known for notorious memory safety issues) and according to the development standards of that time.
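To give a feeling for the SFNT container itself, the sketch below lists the tables present in a font file. It relies only on the table directory layout from the OpenType specification (a 12-byte header followed by 16-byte records holding a tag, checksum, offset and length, all big-endian); the helper name and the font path used in the example are assumptions made for illustration.

```python
import os
import struct

# The eight tables listed as required by the OpenType specification.
REQUIRED_TABLES = {"cmap", "head", "hhea", "hmtx", "maxp", "name", "OS/2", "post"}


def list_sfnt_tables(font: bytes) -> dict:
    """Map each table tag to its (offset, length) from the table directory."""
    _sfnt_version, num_tables = struct.unpack_from(">IH", font, 0)
    tables = {}
    for index in range(num_tables):
        tag, _checksum, offset, length = struct.unpack_from(
            ">4sIII", font, 12 + 16 * index)
        tables[tag.decode("latin-1")] = (offset, length)
    return tables


# Hypothetical path: any single-font .ttf or .otf file will do.
FONT_PATH = r"C:\Windows\Fonts\consola.ttf"
if os.path.exists(FONT_PATH):
    with open(FONT_PATH, "rb") as f:
        found = list_sfnt_tables(f.read())
    print(sorted(found))
    print("missing required tables:", sorted(REQUIRED_TABLES - set(found)))
```

Font collections (.ttc and .otc) start with a different header, so this sketch does not apply to them directly.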

Despite the potential dangers associated with operating on fonts, Windows versions up to and including 8.1 implemented their support in the kernel, i.e., the most privileged mode of operation. Specifically, depending on the format, one of two system drivers was responsible for the given font:

- win32k.sys, the main Windows graphics driver, implementing support for simple bitmap and vector fonts (extensions .fon and .fnt) and TrueType fonts (.ttf and .ttc).

- atmfd.dll, the Adobe Type Manager Font Driver, implementing support for Type 1 fonts (extensions .pfb, .pfm and .mmm) and OpenType fonts (.otf and .otc).

An unquestionable advantage of this approach was its efficiency – there were very few context switches during rasterization and text display, resulting in effective use of the CPU cache, as the processor smoothly progressed through the subsequent stages of translating characters into geometric shapes, then into pixels, and displaying them on the screen. This, in turn, resulted in a fast and responsive graphical interface. On the other hand, any potential bug in this code exposed the user to a complete system compromise if a specially crafted font (e.g., embedded in a document, a website, etc.) was opened. And indeed, Microsoft has fixed dozens of vulnerabilities found in the font engine by researchers from around the world, and the subject of font security made frequent appearances at computer security conferences.

Similarly to consoles, a significant improvement in the above architecture occurred with the advent of Windows 10. In this version of the system, the code responsible for font handling was moved from the win32k.sys and atmfd.dll drivers to a helper user-mode program called fontdrvhost.exe, running in a sandbox with minimal permissions. With this change, even if there is another vulnerability in the handling of fonts leading to arbitrary code execution, there is still a long way to go to taking over full control of the machine. In addition, less serious bugs resulting in unhandled exceptions no longer crash the entire operating system, but only temporarily terminate the helper user-mode process that can be restarted almost seamlessly. It is worth mentioning that the win32k.sys driver (broken down into two separate modules: win32kbase.sys and win32kfull.sys in Windows 10 onwards) is still responsible for the overall Windows graphical interface, with only the font handling code being refactored out. In turn, atmfd.dll is no longer needed with these recent changes, so we won't find it in the latest versions of the system.

In order to better understand how fontdrvhost.exe works internally, we would need to focus our analysis on that executable. Unfortunately, unlike consoles, the source code of this component has not been made publicly available, so we need to roll up our sleeves and run the disassembler. There is a small consolation in the form of debug symbols (.pdb files) made available by Microsoft for system libraries [12], thanks to which we can at least know the names of individual functions. And so, after a brief analysis of the main function, we can learn that the thread communicating with the kernel runs in a function called ServerRequestLoop, which receives information about subsequent operations to be performed and returns their results with the NtGdiExtEscape system call. The DispatchRequest function is then called, which further invokes corresponding handler functions depending on the specific request and font format. A detailed analysis of fontdrvhost.exe's internals is beyond the scope of this article, but we encourage interested readers to delve further into this topic.

Let's take a step back and analyze the process of displaying “Hello World” at a higher level of abstraction. In the default configuration of Windows, the command line uses the Consolas font, which corresponds to the C:\Windows\Fonts\consola.ttf file; so, we are dealing with TrueType. The first operation that GDI performs when the PolyTextOutW function is called is text shaping. Since we are operating on the Latin alphabet and a fixed width font, this stage boils down mainly to translating the code points of each character into the corresponding identifier of the glyph (a unique shape stored in the font). For obvious reasons, kerning (adjusting the distance between specific pairs of characters), ligatures (combining two or more letters into one) and other more advanced transformations do not apply here.
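For a fixed-width Latin font, the shaping step described above can be modeled as a simple dictionary lookup. The sketch below uses a toy character map with invented glyph identifiers; in a real font the mapping comes from the cmap table, and the identifiers for Consolas would be different.

```python
# Hypothetical character-to-glyph map; real glyph IDs come from the font's cmap table.
TOY_CMAP = {ord(ch): gid for gid, ch in enumerate(" HWdelor", start=1)}

# Glyph 0 is conventionally the "missing glyph" (.notdef) in TrueType fonts.
MISSING_GLYPH = 0


def shape(text):
    """Translate each code point into a glyph ID; no kerning, no ligatures."""
    return [TOY_CMAP.get(ord(ch), MISSING_GLYPH) for ch in text]


print(shape("Hello World"))
```

Characters that the toy map does not know about fall back to the missing glyph, which is what a font engine typically draws as an empty box.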

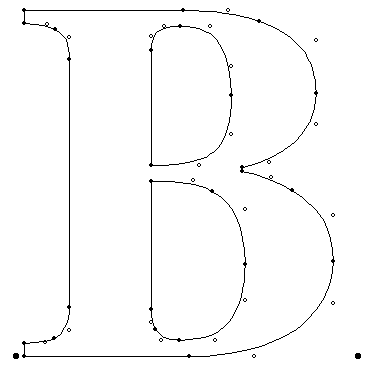

Then, based on the glyf SFNT table, an outline is generated for each glyph in the string. Geometric shapes in TrueType are represented by a set of points on a plane, between which straight lines and second-degree Bézier curves are drawn. The latter are defined by three points: two located at the ends of the curve and the so-called control point determining its shape. In simple terms, it resembles a popular game of connecting dots on a sheet of paper to obtain a specific drawing, except that the computer does it in a repetitive way and with high precision. Figure 4 shows an example of the outline of the letter "B" described in the TrueType format, where the filled points are those on the curve and the helper anchor points are marked as empty.

Figure 4. Shape of the letter B saved in the TrueType format (source: [13])
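The second-degree Bézier curves mentioned above are easy to evaluate in code. The following sketch computes points on a single curve segment from its two on-curve end points and one control point; the function names and the number of segments are arbitrary choices made for this example.

```python
def quadratic_bezier(p0, p1, p2, t):
    """Point at parameter t in [0, 1]; p0 and p2 lie on the curve, p1 is the control point."""
    u = 1.0 - t
    x = u * u * p0[0] + 2 * u * t * p1[0] + t * t * p2[0]
    y = u * u * p0[1] + 2 * u * t * p1[1] + t * t * p2[1]
    return (x, y)


def flatten(p0, p1, p2, segments=8):
    """Approximate the curve with a polyline of `segments` straight pieces."""
    return [quadratic_bezier(p0, p1, p2, i / segments) for i in range(segments + 1)]


for point in flatten((0, 0), (1, 2), (2, 0), segments=4):
    print(point)
```

The curve starts at p0, ends at p2, and is only pulled towards the control point without passing through it, which is why the control points in Figure 4 lie off the outline.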

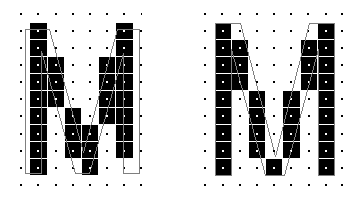

Another important stage of rasterization is the so-called hinting. The need for it arises from the fact that mapping the shape of a given letter to a limited number of pixels – especially in the case of small point sizes – is difficult to achieve automatically. Even if a given projection is faithful from a mathematical point of view, in practice it can be asymmetrical, unpleasant to the eye, or completely illegible. For this reason, all widely used vector font formats support hinting, i.e., programming certain hints in the font as to how a given character should be drawn in certain sizes. This includes information such as how to optimally match the outline to the pixel grid, or which elements of the letter are optional and can be omitted at low resolution, and which, on the contrary, must be included in the final image. An example of how strongly hinting can affect the end result of rasterization is shown in Figure 5. A piece of trivia – in the Type 1 and OpenType formats both contour drawing and hinting take place at the same stage, while the interpreter executes mini-programs called “CharStrings”.

Figure 5. Example of the letter M in the form of a bitmap without and with hinting enabled (source: [14])

Importantly, hint programs are not executed for every frame in which a given letter is displayed. A very important part of a performant font engine is caching, which saves a once generated bitmap for a character of a given size and reuses it in the future without repeating all the calculations.
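The caching idea can be illustrated with Python's built-in memoization. This is only a model of the principle, not of the actual Windows glyph cache: rasterize_glyph is a hypothetical stand-in for the expensive outline, hinting and scan conversion work, and it returns an empty bitmap.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def rasterize_glyph(glyph_id, pixel_size):
    """Stand-in for the expensive work; runs once per (glyph, size) pair."""
    # A real engine would run the outline and hint programs here.
    return bytes(pixel_size * pixel_size)  # placeholder: an all-zero bitmap


def draw_text(glyph_ids, pixel_size):
    """Fetch a bitmap for every glyph, reusing cached ones where possible."""
    return [rasterize_glyph(g, pixel_size) for g in glyph_ids]


draw_text([2, 5, 6, 6, 7], 16)       # glyph 6 appears twice
print(rasterize_glyph.cache_info())  # reports cache hits and misses
```

The cache key includes the pixel size, because hinting makes the bitmap for one size something other than a scaled copy of another.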

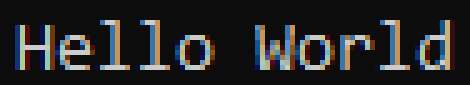

But where does this bitmap come from? Here, we arrive at the last part of the process, i.e., the conversion of the contour of the character into a raster image (scan conversion). While setting subsequent pixels of the bitmap to white or black, the system can apply additional steps to make the text look better on the computer screen. One such method is antialiasing, which can operate not just on shades of gray, but on the entire color palette, by taking advantage of the arrangement of RGB subpixels that make up each pixel on the monitor matrix (the so-called subpixel rendering). When we take a closer look at the individual pixels in our console window, it is likely that almost none of them are actually gray (Figure 6), even though this is the color recorded by the human eye at the original resolution.

Figure 6. The result of anti-aliasing by Microsoft ClearType
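Why subpixel rendering produces colored rather than gray pixels can be shown with a rough model. The sketch below estimates how much of a pixel is covered by a shape, first for the whole pixel and then separately for three vertical stripes, assuming an R, G, B stripe order from left to right. It is not ClearType, which applies additional filtering; all names are invented for this example.

```python
def coverage(inside, x0, x1, y0, y1, samples=4):
    """Estimate the covered fraction of a rectangle by point sampling."""
    hits = 0
    for i in range(samples):
        for j in range(samples):
            x = x0 + (i + 0.5) * (x1 - x0) / samples
            y = y0 + (j + 0.5) * (y1 - y0) / samples
            if inside(x, y):
                hits += 1
    return hits / (samples * samples)


def grayscale_pixel(inside, px, py):
    """Black shape on white background: one coverage value for all channels."""
    value = round(255 * (1 - coverage(inside, px, px + 1, py, py + 1)))
    return (value, value, value)


def subpixel_pixel(inside, px, py):
    """One coverage value per stripe (assumed order: R, G, B from the left)."""
    return tuple(
        round(255 * (1 - coverage(inside, px + k / 3, px + (k + 1) / 3, py, py + 1)))
        for k in range(3)
    )


def left_half(x, y):
    """Toy shape: everything left of x = 0.5, like the edge of a letter stem."""
    return x < 0.5


print(grayscale_pixel(left_half, 0, 0))
print(subpixel_pixel(left_half, 0, 0))
```

In the grayscale variant all three channels are equal, whereas in the subpixel variant each channel reflects the coverage of its own stripe, so a pixel on the edge of a letter ends up tinted.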

When the characters printed by our simple program take on the form of a bitmap, its further fate is in the hands of the graphics chip, i.e., the GPU – whether placed on the graphics card or integrated with the CPU. While GPUs technically do support a primitive text mode of operation, modern versions of Windows don't use it and always display a graphical user interface instead. The text console is therefore treated the same as all the other windows displayed by the OS on the virtual desktop.

Window management

Windows are managed by a part of the system called Desktop Window Manager (DWM). The dwm.exe application can be found in the list of running processes. Killing it causes the entire image on the monitor to disappear, although it returns after a moment, because the system restarts DWM. It displays the desktop, taskbar, and windows of individual applications – whether it is a text console (as in our case), a web browser, a movie being played or a game. It also lets windows overlap, with those in front covering those behind. The process of organizing all this to display the final image on the monitor is called compositing.

To accomplish this task efficiently, acceleration – hardware support offered by the graphics chip – is used. It means performing a specific task (in our case – displaying graphics) faster thanks to specialized hardware chips, compared to the implementation of the same logic fully in software, i.e., by the main processor. However, since there is more than one manufacturer of graphics chips on the market, and each of them uses different solutions in their hardware, to ensure compatibility you need a graphics driver appropriate to a given card or graphics chip. Without it, the system can still display the desktop, but it does so using the Microsoft Basic Display Adapter – a simplified implementation. In it, all rendering (generation) of graphics, including tedious copying of pixel buffers, takes place on the CPU side, so it is really slow.

Certain forms of hardware acceleration of graphics display have existed in computers since ancient times. In the past, it was only related to two-dimensional (2D) graphics. The supported operations consisted mainly of copying rectangular fragments of the image in memory, which in the gaming world are known as “sprites”. In this way, you could efficiently render graphics of games such as Mario-type platformers.

Nowadays, however, most games use three-dimensional (3D) graphics, and graphics cards can efficiently generate such graphics. Since the first three-dimensional shooters like Doom and Quake were at the peak of their popularity, the scheme of composing 3D graphics from triangles has been adopted. Modern games display graphics represented in this form, and use one of the available graphics APIs to issue commands to the graphics card: OpenGL, Direct3D or Vulkan.

But why are we talking about games at all, when our focus here is a simple “Hello World” program? Even if a large group of computer users don’t play games, but only use their equipment to browse the Internet or use office applications, video games have long set the direction and the pace for the development of graphics chips. You don't need multiple TFLOPS of processing power or the 300W of power consumed by the fastest graphics cards to display your desktop. A low-end product is enough. However, they all work on the same principle. They differ only in the amount of memory, the number of cores to execute shaders on and other parameters that determine their performance in games.

A certain surprise may be the news that the window manager in recent versions of Windows also uses 3D graphics to draw the desktop! Although windows are flat by nature, with the enormous computing power offered by graphics cards in this particular application, it has proved effective to render each of them as a rectangle composed of two triangles, with the image depicting the window applied as a texture, like a wallpaper glued to the wall – see Figure 7. So, in a sense, from the point of view of a graphics card, working with serious office applications is not much different from playing games :).

Figure 7. Window as a grid of triangles
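The geometry of such a window is tiny. As a minimal sketch, the function below builds the four vertices and six indices that describe one window rectangle as two triangles; the vertex layout (position plus texture coordinates) is an assumption made for this example and not the actual format used by DWM.

```python
def window_quad(left, top, width, height):
    """Return (vertices, indices) for a window rectangle drawn as two triangles."""
    right, bottom = left + width, top + height
    # Each vertex is (x, y, u, v); (u, v) are texture coordinates in [0, 1].
    vertices = [
        (left, top, 0.0, 0.0),
        (right, top, 1.0, 0.0),
        (right, bottom, 1.0, 1.0),
        (left, bottom, 0.0, 1.0),
    ]
    # Two triangles sharing the diagonal from vertex 0 to vertex 2.
    indices = [0, 1, 2, 0, 2, 3]
    return vertices, indices


vertices, indices = window_quad(100, 50, 800, 600)
print(vertices)
print(indices)
```

Moving or resizing the window only changes these four positions; the texture holding the window contents stays untouched.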

GPU

The graphics chip, as a coprocessor, works in parallel with the main processor of the computer. A programmer implementing graphics display is tasked with filling a buffer with graphics commands to be executed (no wonder this one is called the… command buffer), and then handing it over to the GPU. The CPU can take care of other calculations right afterwards, while the graphics chip executes these commands at its own pace. This is also how games work. While the graphics card laboriously draws all three-dimensional objects and characters to finally display them on the monitor as the Nth frame, the CPU is already performing calculations in the game world (physics simulation, artificial intelligence of opponents, sound reproduction, etc.) for the N+1 frame.
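This division of labor resembles a classic producer and consumer arrangement. The sketch below models it with a thread and a queue: the "CPU" submits command buffers without waiting, and the "GPU" thread executes them in order. It is only an analogy, since real command buffers contain binary, hardware-specific commands, and all names here are invented.

```python
import queue
import threading


def gpu_worker(command_queue, executed):
    """Consume command buffers in submission order until a None sentinel arrives."""
    while True:
        command_buffer = command_queue.get()
        if command_buffer is None:
            break
        executed.extend(command_buffer)


def run_frames(frame_count):
    """Submit one command buffer per frame and return the commands the 'GPU' executed."""
    command_queue = queue.Queue()
    executed = []
    gpu = threading.Thread(target=gpu_worker, args=(command_queue, executed))
    gpu.start()
    for frame in range(frame_count):
        # The CPU records the commands and hands them over without waiting.
        command_queue.put([f"frame {frame}: clear", f"frame {frame}: draw window quad"])
        # At this point the CPU is free to start preparing the next frame.
    command_queue.put(None)  # no more work
    gpu.join()
    return executed


print(run_frames(2))
```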

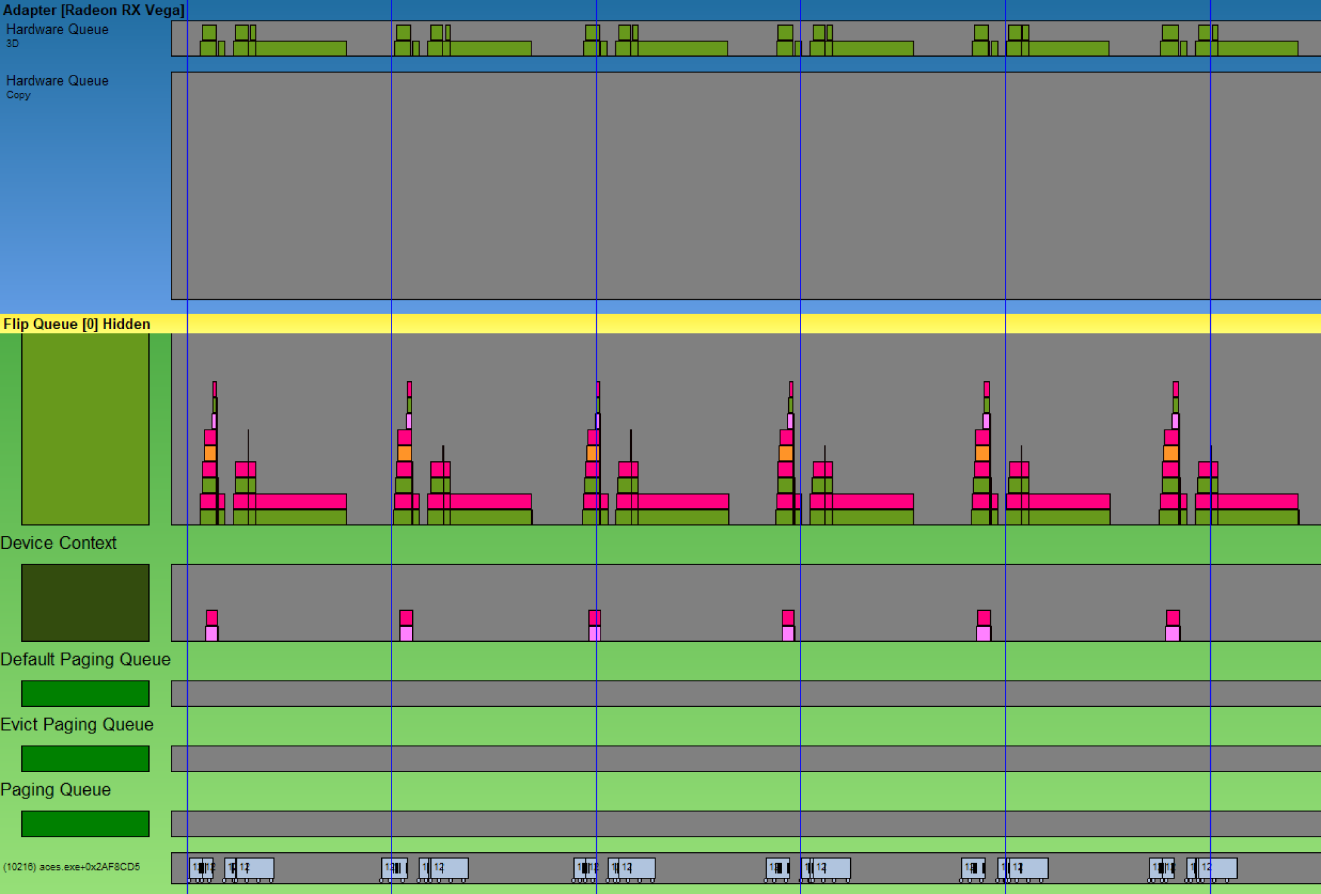

This behavior can be observed, for example, using Microsoft's free GPUView tool. This program offers you an insight into the very low-level performance of the CPU and GPU. With it, we can “record” what is happening in the system within a certain period of time, and then see the activity of the GPU as well as individual CPU threads. The information presented there may be difficult to interpret, but for all those interested in what is happening close to hardware during system run time, we recommend obtaining at least a basic understanding of this type of diagram. Similar ones are also found in other tools.

In Figure 8, we see the Direct3D context executing command buffers. The horizontal axis is the time running to the right. Upwards, on the other hand, there are buffers queued up to be executed. At any given time, the “block” at the very bottom is the one that is currently being executed. Everything above it is a command located further in the queue, waiting for its turn.

Figure 8. Screenshot of GPUView

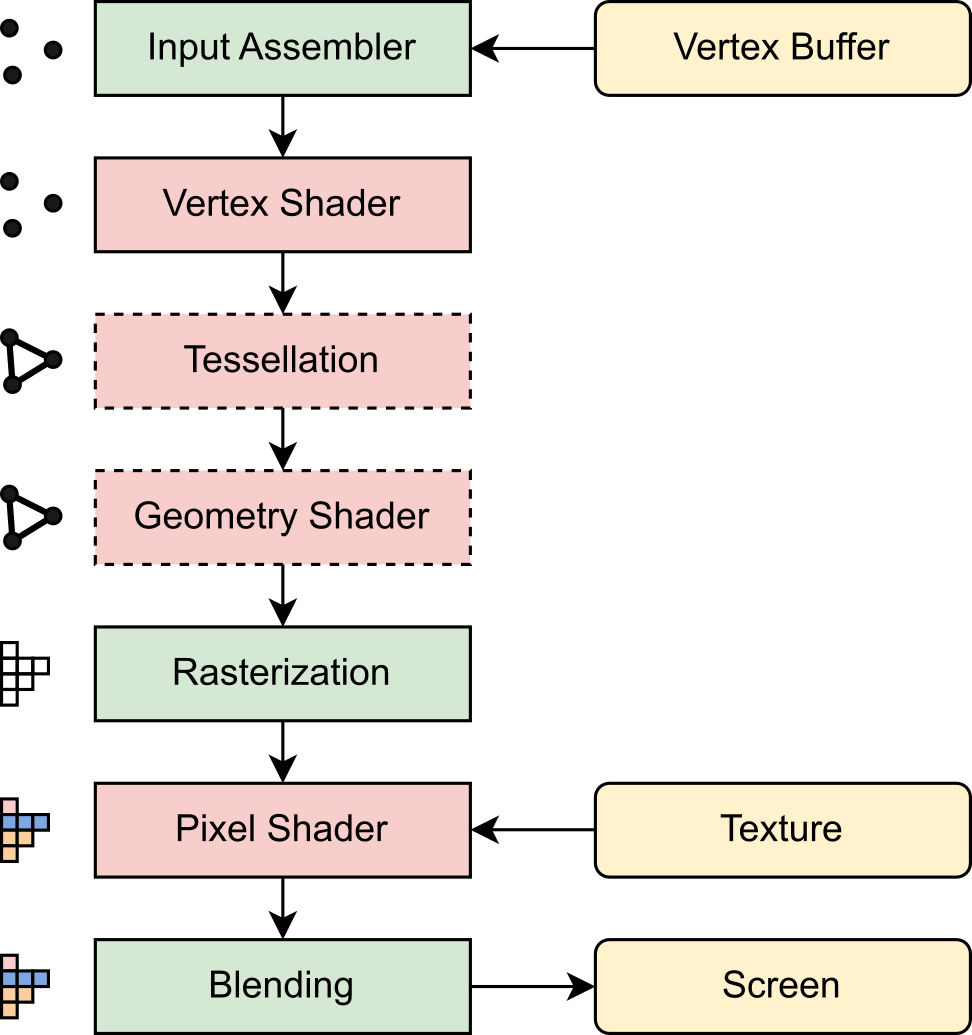

In the GPU itself, commands are executed in a pipelined fashion, which means that each command goes through the stages of the so-called graphics pipeline before the final pixels reach the screen. A simplified pipeline diagram is shown in Figure 9.

Figure 9. Graphics pipeline diagram

Stages marked in green are merely configurable, i.e., they perform a specific operation for which we can only change the parameters. Those marked in red are programmable, which means that we have to write the program performed at this stage. Such programs for graphics chips are called shaders and are written in special languages – HLSL or GLSL. Although these languages have a syntax similar to C or C++, the shaders themselves differ from programs executed on the CPU in many aspects. Listing 3 shows an example pixel shader that samples the texture, multiplies the resulting color by the vertex color, and returns the result as the color to be displayed on the screen.

Texture2D texture0 : register(t0);
SamplerState sampler0 : register(s0);

struct VERTEX
{
    float4 position : SV_POSITION;
    float2 textureCoordinate : TEXCOORD0;
    float4 color : COLOR0;
};

float4 pixelShaderMain(VERTEX v) : SV_TARGET
{
    return
        texture0.Sample(sampler0, v.textureCoordinate) *
        v.color;
}

Listing 3. An example simple pixel shader written in HLSL
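For readers unfamiliar with HLSL, the logic of Listing 3 can be approximated on the CPU in Python. The sketch below is an assumption-laden model: the texture is a plain list of rows of (r, g, b, a) tuples, and sampling is nearest-neighbour, whereas on the GPU the filtering mode is determined by the sampler state.

```python
def sample_nearest(texture, u, v):
    """Nearest-neighbour lookup; (u, v) are texture coordinates in [0, 1]."""
    height, width = len(texture), len(texture[0])
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]


def pixel_shader_main(texture, texture_coordinate, color):
    """Sample the texture and multiply it by the vertex color, component by component."""
    texel = sample_nearest(texture, *texture_coordinate)
    return tuple(t * c for t, c in zip(texel, color))


# A 2x2 texture: red, green / blue, white.
texture = [
    [(1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0)],
    [(0.0, 0.0, 1.0, 1.0), (1.0, 1.0, 1.0, 1.0)],
]
print(pixel_shader_main(texture, (0.75, 0.75), (0.5, 0.5, 0.5, 1.0)))
```

The multiplication is component-wise, just like the `*` operator applied to two float4 values in HLSL.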

Below is a brief overview of the different stages of the graphics pipeline:

Input Assembler is a unit that retrieves positions and other parameters of vertices, i.e., points in a 3D space. Their source is the vertex buffer and the optional index buffer, which contains the indexes of these vertices.

Vertex Shader is the first programmable stage. We can and even must process the data of each vertex at this point, for example by changing its position to put it in the desired place on the screen. It is thanks to this operation that one object, whether it is a stone or a monster in the game, or a window on the desktop, can appear on the screen in many instances, and each in a different place and in a different size.

Tessellation and Geometry Shader are optional steps that allow for additional processing of the entire triangle mesh. We can use more types of shaders in them. However, they are typically quite slow and are therefore rarely used.

Rasterization is an operation in which the graphics card designates the pixels that cover a given triangle on the screen. From now on, we are no longer talking about vertices or triangles but about individual pixels. From the world of vector graphics, we move to bitmap graphics.

The task of the pixel shader is to calculate the color of a given pixel. Textures are used for this, i.e., bitmap images in the video memory depicting the color of a given surface. In games, it can be, for example, a photo of a wooden surface or rusty metal. In Windows… an image of our window or terminal. The pixel shader samples the pixels of this texture in the appropriate places and returns their color. In games, it can additionally perform calculations to add lighting, shadow, fog, and other effects needed to achieve realistic graphics.

If we realize that in each frame and for each pixel on the screen, an entire pixel shader has to be executed at least once to calculate the color of this pixel, then we can imagine how much computing power lies dormant in modern graphics cards. For example, at 4K resolution and 30 frames per second, we have 248,832,000 pixels rendered every second. Hence, a pixel shader has to be executed at least that many times – not just one of its instructions, but the whole program!

Finally, blending is the operation of saving the final color of the pixel to the video memory, so that it can then be sent to the monitor. The new color can be mixed with the previous one, thanks to which we will obtain the effect of translucency – this will be discussed later.
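Of the stages listed above, rasterization is perhaps the easiest to imitate in software. The following sketch tests the center of every pixel against the three edges of a triangle and collects the pixels that fall inside. It is a simplification: real GPUs work on blocks of pixels in parallel and apply a precise fill rule for pixels lying exactly on an edge, which this example simply counts as covered.

```python
def edge(a, b, p):
    """Signed area test: the sign tells on which side of the line a->b the point p lies."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centers lie inside the triangle."""
    covered = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # pixel center
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Accept both vertex orderings (clockwise and counter-clockwise).
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                covered.append((x, y))
    return covered


pixels = rasterize_triangle((0, 0), (4, 0), (0, 4), width=4, height=4)
print(len(pixels), "pixels covered")
```

For each pixel returned here, the GPU would then run the pixel shader to determine its color.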

Treating desktop windows as textures has another advantage: the system can easily and quickly display them in many places and in many ways. The zoomed out image of the window is shown after pressing Alt + Tab, Win + Tab or after hovering over the button representing the program on the taskbar. The Win + Tab shortcut used to display a list of windows suspended in a 3D space; however, in new versions of Windows 10, the creators of the system returned to the flat gallery. All these places, however, have access to the image of the live window, hence they can even show a video being played at the given moment. The design also facilitates the creation of translucent or irregularly shaped windows, although we do not find them in too many programs.

Alpha-blending

At this point, it is probably worth making a digression about colors and alpha-blending, which is used to draw translucent areas. As some readers probably know, the color in a computer is usually described by three components: RGB (from Red, Green, Blue). This is due not so much to the nature of light, because it is a full spectrum of electromagnetic waves in a specific frequency range, but to the structure of the retina of the human eye, which has three types of receptors responding to such colors. If we had eyes built like insects or octopuses, we would have to build monitors, cameras, and describe graphics inside a computer in a completely different way. As humans, on the other hand, we can describe any color visible to us with these three components. For example, (0%, 0%, 0%) is the absence of any light, i.e., the black color. (100%, 0%, 0%) is pure red. (100%, 100%, 0%) is red added to green, which gives yellow. (100%, 100%, 100%) are all three components set to the maximum in equal proportions, which yields the color white.

In computer graphics, a fourth channel is often added to RGB channels marked as A (alpha), which means transparency. Since in computer science we like powers of two, this translates to a round four components per pixel. If we represent each component with one byte, it will give us four bytes per pixel. Thus, we can calculate how much memory is taken by, for example, an image on a screen in 4K resolution: 3840 x 2160 x 4 bytes = 33,177,600 bytes ≈ 31.64 MiB.
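The calculation is simple enough to verify in a few lines of Python. The function name is made up for this example; the result is expressed in binary megabytes (1 MiB = 2^20 bytes), which in decimal units corresponds to roughly 33.18 MB.

```python
def framebuffer_bytes(width, height, bytes_per_pixel=4):
    """Memory needed for one uncompressed image with the given pixel size."""
    return width * height * bytes_per_pixel


size = framebuffer_bytes(3840, 2160)
print(size, "bytes")
print(round(size / 2**20, 2), "MiB")
print(round(size / 10**6, 2), "MB")
```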

What does this fourth alpha channel mean? It allows mixing the image with what had been drawn in a given area before, i.e., with what is supposed to be visually underneath. A value of A = 0% means full transparency, so our object is invisible there and the color of the pixel has no meaning. A = 100% means full opacity, i.e., complete replacement of the old color with a new one. The values in between indicate translucency, and the higher the alpha, the greater the influence of the currently drawn pixel on the final color. This operation, called alpha-blending, can be described by the formula:

NewColor.rgb = NewPixel.rgb * NewPixel.a +
               OldColor.rgb * (100% - NewPixel.a)

This is called linear interpolation (lerp). The notation used here, with reference to selected components after the dot, is characteristic of shader languages such as HLSL or GLSL. These languages also have a built-in function that performs this operation (lerp in HLSL, mix in GLSL). (People familiar with the subject of computer graphics could probably add that it would be better to use the so-called premultiplied alpha technique; that, however, is beyond the scope of this article.)
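The same formula can be written in Python, with components expressed as numbers from 0.0 to 1.0 instead of percentages. The argument order of lerp below mirrors the HLSL built-in (start value, end value, weight); the alpha_blend helper is a name chosen for this example.

```python
def lerp(a, b, t):
    """Linear interpolation: returns a for t = 0 and b for t = 1."""
    return a + (b - a) * t


def alpha_blend(new_pixel, old_color):
    """Blend new_pixel (r, g, b, a) over old_color (r, g, b); components in [0, 1]."""
    r, g, b, a = new_pixel
    return tuple(lerp(old, new, a) for old, new in zip(old_color, (r, g, b)))


# Half-transparent red drawn over opaque blue.
print(alpha_blend((1.0, 0.0, 0.0, 0.5), (0.0, 0.0, 1.0)))
```

Expanding lerp(old, new, a) gives new * a + old * (1 - a), which is exactly the formula above.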

Image frames

There is one big difference between application windows (including the console window of our program) and games. Games are constantly redrawing their entire picture from scratch – from the background, through characters and objects, to special effects. Similarly, when playing a movie, we are talking about “frames”. For a gamer to have the impression of smooth animation, the game should reach at least 30 frames per second (FPS). No one bothers to analyze which places in the image have changed between frames. After all, you just need to rotate the camera slightly or take a step with your character, and virtually every pixel on the screen changes. Meanwhile, windowed applications are less dynamic and can get away with redrawing only certain areas (e.g., GUI controls in a dialog box, symbols in a text console) and only once they have changed. Thanks to this, until we run a game, the graphics card is much less loaded with work, which we can tell by the quieter operation of its fan.

When the final desktop image with all the windows in the correct order is already composed, the last step remains: uploading it to be displayed on the monitor, which works by cyclically refreshing the entire image many times per second. The refresh rate of modern monitors is usually 60 Hz, although some gaming monitors reach over 200 Hz. When sending subsequent frames of the image to the DisplayPort or HDMI output, the graphics chip copies these data from its working memory. In the case of graphics chips integrated with the processor, this role is performed by ordinary RAM. Discrete graphics cards, on the other hand, have their own memory, called video memory (VRAM). Its role is to store data that represent the colors of pixels to be displayed on the monitor, as well as auxiliary data needed to prepare graphics, e.g., triangle meshes and textures depicting all characters and objects appearing in the game, or glyphs depicting letters displayed in our console.

Another piece of trivia – today, the graphics memory is able to accommodate the entire image displayed on the monitor. When graphics cards have 16 GB or more memory, it is difficult to imagine that it could be otherwise, but it was not always so. In the past decades, there were platforms such as the Atari 2600, where the available memory was not sufficient for this purpose. This particular computer had 128… bytes of it. Despite this, a variety of games designed for it were created. The processor then had to generate data in each frame on an ongoing basis for the image line displayed at the moment. Thus, we can say that despite all the complexity of modern hardware and software, in which the path from a simple “Hello World” to seeing the pixels on the screen is so long, our lives, as programmers, today are much simpler and more convenient than in the early days of computing.

Summary

The article shows that running even a trivial “Hello World” program on Windows involves the execution of large amounts of much more complicated code. In our journey, we explored the internal mechanisms of the Python interpreter, and then traced the execution path passing through subsequent system processes (conhost.exe, fontdrvhost.exe, dwm.exe) and kernel-mode drivers (ntoskrnl.exe, win32k.sys, condrv.sys, video card drivers). One might conclude that having a simple operation involve so many stages is a waste of resources, but on the other hand, it is precisely this architecture that makes it possible to apply good code development practices and isolate individual components to increase security. In turn, thanks to dedicated graphics card drivers and a rather complicated graphics pipeline, we can enjoy an eye-friendly interface that works on any hardware configuration. Fortunately, the computing power of today's computers is so large that despite the high degree of complexity, all the described machinery executes in the blink of an eye.

The last thought we wanted to convey is that any, even the most complicated path can be broken down into smaller elements, and then traced, analyzed and finally – understood. Stay curious!

On the web

[1] AST visualizer, https://github.com/pombredanne/python-ast-visualizer

[2] Python Bytecode Instructions, https://docs.python.org/3/library/dis.html?highlight=dis#python-bytecode-instructions

[3] inspect – Inspect live objects, https://docs.python.org/3/library/inspect.html

[4] Console Reference, https://docs.microsoft.com/en-us/windows/console/console-reference

[5] Introducing Windows Terminal, https://devblogs.microsoft.com/commandline/introducing-windows-terminal/

[6] Why aren't console windows themed on Windows XP?, https://devblogs.microsoft.com/oldnewthing/20071231-00/?p=23983

[7] Windows Command-Line: Inside the Windows Console, https://devblogs.microsoft.com/commandline/windows-command-line-inside-the-windows-console/

[8] Windows Command-Line: The Evolution of the Windows Command-Line, https://devblogs.microsoft.com/commandline/windows-command-line-the-evolution-of-the-windows-command-line/

[9] Windows Terminal, Console and Command-Line repo, https://github.com/microsoft/terminal

[10] Replace PolyTextOutW with ExtTextOutW, https://github.com/microsoft/terminal/commit/b7fc0f2

[11] The OpenType Font File, https://docs.microsoft.com/en-us/typography/opentype/spec/otff#font-tables

[12] Microsoft public symbol server, https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/microsoft-public-symbols

[13] Digitizing Letterform Designs, https://developer.apple.com/fonts/TrueType-Reference-Manual/RM01/Chap1.html#contours

[14] TrueType hinting, https://docs.microsoft.com/en-us/typography/truetype/hinting#what-is-hinting
