2022-11-9 21:42:20 Author: blog.nviso.eu

Problem Statement

Picture this. You are standing up your shiny new MISP instance to start to fulfill some of the primary intelligence requirements that you gathered via interviews with various stakeholders around the company. You get to some requirements that are looking for information to be captured in a visualization, preferably in an automated and constantly updating dashboard that the stakeholder can look into at their leisure.

Well MISP was not really made for that. There is the MISP-Dashboard repo but that is not quite what we need. Since we want to share the information and combine it with other data sources and make custom visualizations we need something more flexible and linked to other services and applications the organization uses. Also it looks as if other stakeholders would like to compare and contrast their datasets with that of the TI program. Then you think, it would be nice to be able to display all the work that we put into populating the MISP instance and show value over time. How the heck are we going to solve all of these problems with one solution which doesn’t cost a fortune???

Links to review:

CTIS-2022 Conference talk – MISP to PowerBI: https://youtu.be/0i7_gn1DfJU

MISP-Dashboard powered by ZMQ: https://github.com/MISP/misp-dashboard

Proposed Solution

Enter this idea = “Making your data (and yourself/your team) look amazing with Power BI!”

In this blog we will explain how to use the functionality of Power BI to accomplish all of these requirements. Along the way you will probably come up with other ideas around data analytics that go beyond just the TI data in your MISP instance. Having all this data in a platform that allows you to slice and dice it without messing with the original source is truly game changing.

What is MISP???

If you do not know what MISP is, I prepped this small section.

MISP is a Threat Intelligence Sharing Platform that is now community driven. You can read more about its history here: https://www.misp-project.org/

In a nutshell, MISP is a platform that allows you to capture, generate, and share threat intelligence in a structured way. It also helps control access to the data that the user and organization is supposed to be able to access. It uses MariaDB as its back-end database. MariaDB is a fork of MySQL. This makes it a prime candidate for using Power BI to analyze the data.

What is Power BI???

Power BI is a set of products and services offered by Microsoft to enable users to centralize Business Intelligence (BI) data with all the tools to analyze and visualize it. Other applications and services that are similar to Power BI are Tableau, MicroStrategy, etc.

Power BI Desktop

- Desktop application

- Complete data analysis solution

- Includes Power Query Editor (ETLs)

- Can upload data and reports to the Power BI service

- Can share reports and templates manually with other Power BI Desktop users

- Free (as in beer), runs on modern Windows systems

Power BI Service

- Cloud solution

- Can link visuals in reports to dashboards (scheduled data syncs)

- Used for collaboration and sharing

- Limited data modelling capabilities

- Not Free (Pro license level included with Microsoft E5 license, per individual licenses available as well)

Links to Pricing

More information here: https://docs.microsoft.com/en-gb/power-bi/fundamentals/power-bi-overview and https://powerbi.microsoft.com/en-au/pricing/

Making the MISP MariaDB accessible to Power BI Desktop

MISP uses MariaDB which is a fork of MySQL. These terms are used interchangeably during this blog. You can use MariaDB or MySQL on the command line. I will use MySQL in this blog for conciseness.

Adding a Power BI user to MariaDB

When creating your MISP instance, you create a root user for the MariaDB service. Log in with that user to create a new user that can read the MISP database.

mysql -u root -p

# List users

SELECT User, Host FROM mysql.user;

# Create new user

CREATE USER 'powerbi'@'%' IDENTIFIED BY '<insert_strong_password>';

GRANT SELECT ON *.* TO 'powerbi'@'%';

FLUSH PRIVILEGES;

# List users again to verify

SELECT User, Host FROM mysql.user;

# Close mysql terminal

exit

Configuring MariaDB to Listen on External Interface

We need to make the database service accessible outside of the MISP instance. By default it listens only on 127.0.0.1.

sudo netstat -tunlp

# You should see that mysqld is listening on 127.0.0.1:3306

# Running the command below is helpful if you do not know what locations are being read for configuration information by mysql

mysql --help | grep "Default options" -A 1

# Open the MariaDB config file below as it is the one that is being used by default in normal MISP installs.

sudo vim /etc/mysql/mariadb.conf.d/50-server.cnf

# I will not go into how to use vim as you can use the text editor of your choice. (There are strong feelings here....)

# Add the following lines in the [mysqld] section:

skip-networking=0

skip-bind-address

# Comment out the bind-address line with a #

#bind-address

# Should look like this when you are done: #bind-address = 127.0.0.1

# Then save the file

# Restart the MariaDB service

sudo service mysql restart

# List all the listening services again to validate our changes.

sudo netstat -tunlp

# You should see the mysqld service now listening on 0.0.0.0:3306

Optional: Setup Firewall Rules to Control Access (recommended)

To maintain security we can add host-based firewall rules to ensure only our selected IPs or network ranges are allowed to connect to this service. If you are in a local environment, behind a VPN, etc., then this step might not be necessary. Below is a quick command to enable UFW on Ubuntu and allow all the ports needed for MISP, MySQL, and for maintenance via SSH.

# Switch to root for simplicity

sudo su -

# Show current status

ufw status

# Set default rules

ufw default deny incoming

ufw default allow outgoing

# Add your trusted network range or specific IPs for the ports below. If there are additional services you need to allow connections to you can add them in the same manner. Example would be SNMP. Also if you are using an alternate port for SSH, make sure you update that below or you will be cut off from your server.

ufw allow from 10.0.0.0/8 to any port 22,80,443,3306 proto tcp

# Show new rules listed by number

ufw status numbered

# Start the firewall

ufw enable

For more information on UFW, I suggest the Digital Ocean tutorials.

You can find a good one here: https://www.digitalocean.com/community/tutorials/how-to-set-up-a-firewall-with-ufw-on-ubuntu-20-04
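Before enabling the firewall, it can help to double check that the machine running Power BI Desktop actually falls inside the range you are about to allow. Below is a minimal Python sketch of that check. The range 10.0.0.0/8 mirrors the example rule above, and the function name is_trusted is only illustrative; swap in your own network range.

```python
import ipaddress

# Assumption: mirrors the example rule "ufw allow from 10.0.0.0/8"; adjust to your range.
TRUSTED_RANGE = ipaddress.ip_network("10.0.0.0/8")


def is_trusted(client_ip: str) -> bool:
    """Return True if client_ip falls inside the trusted range."""
    return ipaddress.ip_address(client_ip) in TRUSTED_RANGE


if __name__ == "__main__":
    # Example addresses only; replace with the IP of your Power BI workstation.
    for ip in ("10.20.30.40", "192.168.1.10"):
        print(ip, is_trusted(ip))
```

If your workstation's address comes back as False, the rule as written would lock it out of port 3306 (and SSH).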

Testing Access from Remote System with MySQL Workbench

Having a tool to test and work with MySQL databases is crucial for testing in my opinion. I use the official “MySQL Workbench” that can be found at the link below:

https://dev.mysql.com/downloads/workbench/

You can follow the documentation here on how to use the tool and create a connection: https://dev.mysql.com/doc/workbench/en/wb-mysql-connections-new.html

Newer versions of the Workbench try to enforce connections to databases over SSL/TLS for security reasons. By default, the database connection in use by MISP does not have encryption configured. It is also out of the scope of this article to set this up. To get around this, you can add useSSL=0 to the “Others” text box in the Advanced tab of the connection entry for your MISP server. When you test the connection, you will receive a pop-up warning about incompatibility. Proceed and you should have a successful test.

Once the test is complete, close the create connection dialog. You can then click on the connection block in Workbench and you should be shown a screen similar to the one below. If so, congratulations! You have set up your MISP instance database to be queried remotely.

Installing Power BI Desktop and MySQL Drivers

Oracle MySQL Connector

For Power BI Desktop to connect to the MySQL server you will need to install a “connector” which tells Power BI how to communicate with the database. Information on this process is found here: https://docs.microsoft.com/en-us/power-query/connectors/mysqldatabase

The “connector” itself can be downloaded from here: https://dev.mysql.com/downloads/connector/net/

You will have to create a free Oracle account to be able to download the software.

Test Access from Power BI Desktop to MISP MariaDB

Once installed, you will be able to select MySQL from the “Get data” button in the ribbon in the Data section of the Home tab. (Or the splash screen that pops up each time you load Power BI Desktop, hate that thing. I swear I have unchecked the “Show this screen on startup” but it doesn’t care. I digress.)

Do not get distracted by the amount of datatypes you can connect to Power BI. This is where the nerd rabbit hole begins. FOCUS!

- Click on Get data

- Click on More…

- Wait for it to load

- Type “MySQL” in the search box

- Select MySQL database from the panel to the right

- Click Connect

- Setup IP address and port in the Server field for your MISP instance

- Type misp in the Database field

- Click OK

- Select Database for the credential type

- Enter the user we created and the password

- Select the database level in the “Select which level to apply these settings to” drop-down menu

- Click Connect

View your data in all its glory!

If you get an error such as “An error happened while reading data from the provider: ‘Character set ‘utf8mb3’ is not supported by .Net Framework.”, do not worry. Just install the latest version of the .NET Framework and the latest MySQL Connector for .NET. This should fix any issues you are having.

You can close the window; Power BI will remember and store the connection information for next time.

If you cannot authenticate or connect, recheck your username and password and confirm that you can reach the MISP server on port 3306 from the device that you are running Power BI Desktop on. Also, make sure you are using Database for the authentication type and not Windows Auth.
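If you are not sure whether the problem is credentials or plain network reachability, a quick TCP check from the Power BI workstation can narrow it down. This is a rough Python sketch, not part of the Power BI workflow itself. The function name port_is_open is made up for this example, and the host you pass in is whatever address your MISP server uses.

```python
import socket


def port_is_open(host: str, port: int = 3306, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Usage (replace the placeholder with your MISP server address):
#   port_is_open("<your_misp_ip>", 3306)
```

A False result points at the bind-address or firewall steps earlier in this post rather than at the username and password.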

Create a save file so that we can start working on our data ingest transforms and manage the relationships between the various tables in the MISP schema.

- Select File

- Save As

- Select the location where you will save the local copy of your Power BI report.

- Click Save

Now, we have a blank report file and pre-configured data source. Awesomeness!

Power Query Transforms (ETL Process)

ETL: extract, transform, load. Look it up. Big money in the data analytics space by the way.

So, let’s get into looking at the data and making sure it is in the right format for our purposes. If you closed Power BI Desktop, open it back up. Once loaded, click on file and then Open report. Select the report you saved earlier. So, we have a nice and empty workspace. Let’s fix that!

In the Ribbon, click on Recent sources and select the source we created earlier. You should be presented with Navigator and a list of tables under the misp schema.

Load all the tables we want to use for visualizations later. In my experience, it helps to do this all at once instead of trying to add additional tables at a later date.

Select the tables in the next subsection, Recommended Tables, and click Load. This could take a while if your MISP instance has a lot of Events and Attributes in it. It will create a local copy of the database so that you can create your reports accurately. Then you can refresh this local copy when needed. We will talk about data refresh later as well.

Do not try to transform the data at this step, especially if your MISP instance has a lot of data in it. We will do the transforms in a later step.

Recommended Tables

- misp.attribute_tags

- misp.attributes

- misp.event_blocklists

- misp.event_tags

- misp.events

- misp.galaxies

- misp.galaxy_clusters

- misp.galaxy_elements

- misp.object_references

- misp.objects

- misp.org_blocklists

- misp.organisations

- misp.over_correlating_values

- misp.sightings

- misp.tags

- misp.warninglist_entries

- misp.warninglists

As you will see in the table selection dialog box, there are a lot of tables to choose from and we need most of them so that we can do drill downs, filters, etc. Do be careful if you decide to pull in tables like misp.users, misp.auth_keys, or misp.rest_client_histories, etc. These tables can contain sensitive data such as API keys and hashed passwords.

Column Data Types and Transforming Timestamps

Now, let’s start cleaning the data up for our purposes.

We are going to use a Power Query for this. To open Power Query Editor, look in the Ribbon for the Transform data button in the Queries section.

Click this and it will open the Power Query Editor window.

We will start with the first table in the Queries list on the left, misp attribute_tags. There are not many columns in this table but it will help us go over some terminology.

As shown in the screenshot above, Power BI has done some classification of data types in the initial ingest. We have four numeric columns and one boolean column. All of this looks to be correct and usable in this state. Let’s move on to a table that needs some work.

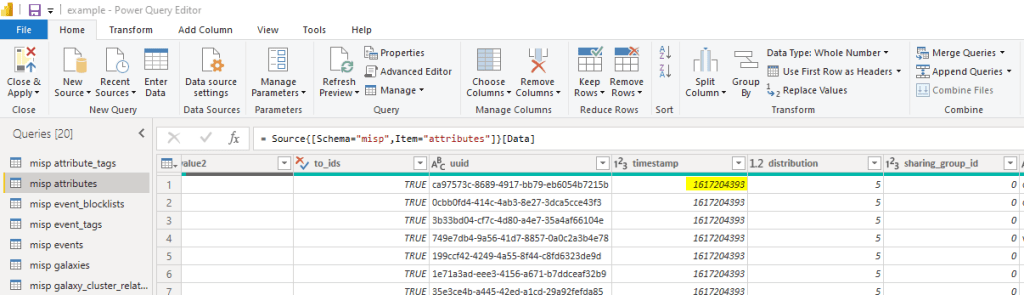

The very next table, misp attributes, needs some work. There are a lot more rows and columns in this table. In fact, this is probably the biggest table in MISP bar the correlations table. One reason we did not import that one.

At first glance, nothing seems to be amiss; that is until we scroll to the right and see the timestamp column.

If you recognize this long number, tip of the hat to you. If not, this is a UNIX timestamp, also known as an epoch timestamp. It is the number of seconds elapsed since the UNIX epoch, which is January 1st, 1970 at 00:00:00 UTC. While this works fine in languages such as PHP, which powers MISP, tools such as Power BI need human-readable timestamp formats AND SO DO WE! So let’s make that happen.
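If you want to sanity check a value outside of Power BI, the same arithmetic is easy to reproduce. Here is a small Python sketch that adds the elapsed seconds to the epoch. The function name epoch_to_datetime and the sample value are just for illustration.

```python
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1, 0, 0, 0)  # UNIX epoch, 00:00:00 UTC


def epoch_to_datetime(seconds: int) -> datetime:
    """Add the elapsed seconds to the epoch to get a readable UTC timestamp."""
    return EPOCH + timedelta(seconds=seconds)


if __name__ == "__main__":
    print(epoch_to_datetime(1668030140))  # 2022-11-09 21:42:20
```

The result is in UTC, so expect an offset if you compare it with a clock in your local time zone.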

What we are going to do is a one-step transform. This will remove the epoch timestamp column and replace it with a human-readable timestamp column that we can understand and so can the visualization filters of Power BI. This will give you the ability to filter by month, year, quarter, etc.

Power BI uses two languages, DAX and Power Query M. We will mainly be using Power Query M for this transformation work. You use DAX for data analysis, calculations, etc.

https://docs.microsoft.com/en-us/dax/dax-overview

https://docs.microsoft.com/en-us/powerquery-m/m-spec-introduction

Using Power Query M we are going to transform the timestamp column by calculating the duration since the epoch. So let’s do this with the timestamp column of the misp attributes table.

To shortcut some of the code creation we are going to use a built in Transform called Extract Text After Delimiter. Select the Transform tab from the ribbon and then select Extract in the Text Column section of the ribbon. In the drop-down menu select Text After Delimiter. Enter any character in the Delimiter text field. I am going to use “1”. This will create the following code in the formula bar:

= Table.TransformColumns(#"Extract Text After Delimiter", {{"timestamp", each Text.AfterDelimiter(Text.From(_, "en-US"), "1"), type text}})

We are going to alter this command to get the result we want. Starting at the “(” sign, replace everything with:

misp_attributes, {{"timestamp", each #datetime(1970,1,1,0,0,0) +#duration(0,0,0,_), type datetime}})

Your formula bar should look like this:

= Table.TransformColumns(misp_attributes, {{"timestamp", each #datetime(1970,1,1,0,0,0) +#duration(0,0,0,_), type datetime}})

And your column should have changed to a datetime type, with a little calendar/clock icon, and should be displaying human-readable values like in the screenshot below.

Do this with every epoch timestamp column you come across for all the tables. Make sure the epoch timestamp is already of type = numeric. If it is text you can use this code block to change it to numeric in the same step. Or add a type change step, then perform the transform as above.

# Change <table_name> to the name of the table you are working on.

= Table.TransformColumns(<table_name>, {{"timestamp", each #datetime(1970,1,1,0,0,0) +#duration(0,0,0,Number.From(_)), type datetime}})

If there are empty, 0, or null cells in your column then you can use the Power Query M (code/macro) command below and alter it as needed. Example of this would be the sighting_timestamp column or the first_seen and last_seen columns:

# Change <table_name> to the name of the table you are working on.

= Table.TransformColumns(<table_name>, {{"first_seen", each if _ = null or _ = 0 then null else #datetime(1970,1,1,0,0,0) +#duration(0,0,0,_), type nullable datetime}})

Using the last code block above, which handles null and 0 values, is probably the best bet overall so that you do not have errors when you encounter a cell that should have a timestamp but does not.
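For anyone who wants to spot check a handful of values by hand, here is the same defensive idea as a Python sketch. It is an illustration only: the function name safe_epoch_to_datetime is made up, and treating 0 the same as null (returning no timestamp at all) is a choice made for this sketch so that a datetime column never ends up holding a plain number.

```python
from datetime import datetime, timedelta
from typing import Optional, Union

EPOCH = datetime(1970, 1, 1)  # UNIX epoch, UTC


def safe_epoch_to_datetime(value: Union[int, str, None]) -> Optional[datetime]:
    """Convert an epoch value to a datetime; return None for empty, null, or 0."""
    if value is None or value == "":
        return None
    seconds = int(value)  # also accepts numeric text such as "1668030140"
    if seconds == 0:
        return None
    return EPOCH + timedelta(seconds=seconds)


if __name__ == "__main__":
    for sample in (None, "", 0, "86400", 1668030140):
        print(repr(sample), "->", safe_epoch_to_datetime(sample))
```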

It is recommended to remove the first_seen and last_seen columns on the Attribute table as well. They are rarely used and cause more issues and errors than value. This is done in Power Query by right clicking on the column name and selecting “Remove”.

Also remember to SAVE as you work. In the top left you will see the classic Save icon. This will trigger a pop-up saying that you have transforms that need to be applied. Approve this as you will have to before it saves. This will apply your new transforms to the dataset. With the attributes table, this may take a minute. Grab a coffee, we will wait…

Move on to the next table and so on. There is a lot of work up front with this ETL workflow, but the upkeep is usually minimal after the initial cleanup. Only additional fields or changes to the source data would be a reason to go back to these steps after they are complete. Enter the whole change control discussion and proper release notes on products and ….. OKAY moving on.

There may be an error in a field or two but usually it is okay. It will save any errors in a folder within Power Query Editor that you can review as needed.

Other Transforms

While you are doing the timestamp corrections on your tables, you may notice that there are other fields that could benefit from some alteration to make it easier to group, filter, etc. I will discuss some of them here but of course you may find others, this is not an exhaustive list by any means.

Splitting Tags

So now that we have gone through each table and fixed all the timestamps, we can move on to other columns that might need adjustments. Our example will be the “misp tags” table. Navigate to the Power Query Editor again and select this table.

Look at the name column in the misp.tags table. From personal experience, there may come a time when you only want to display or filter on just the value of the tag and not the full tag name. We will split this string into its parts and also keep the original. Then we can do what we want with it.

Select the “name” column then in the Ribbon click the Add Column tab. Then click Extract, Text After Delimiter. For the delimiter use a colon “:”. This will create a new column on the far right. Here is the formula that was auto-generated and creates the new column:

= Table.AddColumn(misp_tags, "Text After Delimiter", each Text.AfterDelimiter([name], ":"), type text)

We will add an if statement to deal with tags that are just standalone words. But we do not want to break the TLP or PAP tags, so we add that as well. You will have to play with this as needed as tags can change and new ones are added all the time. You can just add more else if checks to the instruction below. Changing the name of the column is as easy as replacing the string “Text After Delimiter” with whatever you want. I chose “Short_Tag_Name”. Comparer.OrdinalIgnoreCase tells Power Query M to use a case-insensitive comparer.

= Table.AddColumn(misp_tags, "Short_Tag_Name", each if Text.Contains([name], "tlp:", Comparer.OrdinalIgnoreCase) then [name] else if Text.Contains([name], "pap:", Comparer.OrdinalIgnoreCase) then [name] else if Text.Contains([name], ":") then Text.AfterDelimiter([name], ":") else [name])

Here is what you should have now. Yay!
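If the nested if/else chain is hard to read in the formula bar, the same decision logic can be written out in a few lines of Python. This is only a sketch to make the rules explicit; the function name short_tag_name and the sample tags are illustrative, and your own MISP may need extra exceptions beyond TLP and PAP.

```python
def short_tag_name(name: str) -> str:
    """Mirror the Short_Tag_Name rules: keep TLP/PAP tags whole, else strip the prefix."""
    lowered = name.lower()  # case-insensitive match, like Comparer.OrdinalIgnoreCase
    if "tlp:" in lowered or "pap:" in lowered:
        return name
    if ":" in name:
        # Keep everything after the first colon, like Text.AfterDelimiter.
        return name.split(":", 1)[1]
    return name  # standalone word, nothing to split


if __name__ == "__main__":
    for tag in ("tlp:amber", "PAP:GREEN", "type:OSINT", "phishing"):
        print(tag, "->", short_tag_name(tag))
```

Running a few of your real tag names through something like this first makes it easier to decide which additional else if checks you need.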

Relationship Mapping

Why Auto Mapping in Power BI Doesn’t Work

Power BI tries to help you by finding commonalities in the tables you load and automatically building relationships between them. This is usually not correct, especially when the data is from an application and not purpose built for reporting. We can tell Power BI to stop helping.

Let’s stop the madness.

Go to File, Options and settings, Options

Uncheck all the boxes in the “Relationships” section

Once this is complete, click on the Manage relationships button under the Modeling tab of the Ribbon. Delete any relationships you see there.

Once your panel looks like the one above, click New…

We can create the relationship using this selection panel…

We can also use the graphical method. You can get to the graph by closing the Create and Manage relationship windows and clicking on the Model icon on the left of the Power BI workspace.

Here we can drag and drop connectors between tables. Depending on your style, you may like one method over the other. I prefer the drag and drop method. To each their own.

Process to Map Tables

To map the relationships of these tables, you need to know a little about MISP and how it works.

- Events in MISP can have tags, objects, attributes, galaxies (basically groups of tags), and must be created by an organization.

- Attributes can have tags and sightings.

- Objects are made up of Attributes

- Warninglists are not directly related but can match against Attributes

- Events and Organizations can be blocked by being placed on a corresponding blocklist

- There is a table called over_correlating_values that tracks attributes that are very common between many events.

Using this information and your knowledge of MISP, you can map which tables relate to each other. Most tables have an “id” column that is the key of that table. For instance, the “id” column of the tags table is related to the “tag_id” column of the event_tags table. To make this easier you can rename the “id” column of the tags table to “tag_id” so that it matches. You will have to go through this process with all the tables. There will be relationships that are not “active”. This is because multiple relationships between tables can create ambiguity in the model, meaning the software cannot be certain which relationship to use. It does not like this. So for the model’s sake you have to pick which one is active by default if there is a conflict. You can use DAX when making visualizations to temporarily activate an inactive relationship if you need to. Great post on this here: https://www.vivran.in/post/understanding-ambiguity-in-power-bi-data-model
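To make the tags to event_tags relationship concrete, here is a toy Python sketch using an in-memory SQLite database. It is not the real MISP schema: the tables are cut down to the columns needed for the join (the event_id column and all sample rows are assumptions made for the example), and the function name events_per_tag is made up.

```python
import sqlite3

# Toy stand-in for two MISP tables; only the join columns are modelled.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE tags (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE event_tags (id INTEGER PRIMARY KEY, event_id INTEGER, tag_id INTEGER);
INSERT INTO tags VALUES (1, 'tlp:green'), (2, 'type:OSINT');
INSERT INTO event_tags VALUES (1, 100, 1), (2, 101, 1), (3, 101, 2);
""")


def events_per_tag(connection: sqlite3.Connection) -> dict:
    """Count distinct events per tag by following tags.id -> event_tags.tag_id."""
    rows = connection.execute("""
        SELECT t.name, COUNT(DISTINCT et.event_id)
        FROM tags AS t
        JOIN event_tags AS et ON et.tag_id = t.id
        GROUP BY t.name
        ORDER BY t.name
    """).fetchall()
    return dict(rows)


if __name__ == "__main__":
    print(events_per_tag(conn))
```

The JOIN condition is exactly what a relationship line in the Power BI model view expresses: one row in tags matching many rows in event_tags.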

Personally, relationship mapping was the most tedious part for me. But once it is done you should not have to change it again.

Examples of a Relationship Map

Here is what the relationship model should look like when you are done. Now we can start building visualizations!

I will leave the rest of the relationship mapping as an exercise for you. It will help you better understand how MISP uses all this data as well.

Later we will talk about Power BI templates and the one we are providing to the community.

Making your first visualization

What do you want to visualize

At this stage you have to start looking at your Primary Intelligence Requirements (PIR). Why are you doing this work? What is the question you are answering and who is asking the question?

For example, if your CISO is asking for a constantly updating dashboard of key metrics around the CTI Program then your requirement is just that. You can fulfill this requirement with Power BI Desktop and Power BI Service. So as a first step we need to create some visualizations that will provide insights into the operational status of the CTI program.

Count all the things

To start off easy, we will just make some charts that count the number of Events and Attributes that are currently in our MISP instance during a certain time window.

To do this we will go back to Power BI Desktop and the Report workspace.

So let’s start with Events and display them in a bar chart over time. Expand the misp events table in the Fields panel on the left. Select the event_id and check the box. This will place that field in the X-axis, drag it down to the Y-axis. This will change it to a count. Then select the Date field in the Events table. This will create the bar chart in the screenshot below. You will have to resize it by dragging the corner of the chart as you would with any other window.

We need to filter down on the year the Event was created. Drag Year in the Date field hierarchy over to the Filter on all pages panel. Then change the filter type to basic. Then select the last 5 years to get a small dataset. This will be different depending on the amount and age of your MISP dataset.

Nice. Now there is a thing that Power BI does that will be annoying. If you want to look at data over a long period of time it will, by default, group all of the data by the current view’s bucket, ignoring any higher-level bucket above it. That probably makes no sense, so here is an example: if you are looking at data over two years and then want to see how many events there were per month, it will combine the data for the two years and show you that total for the months Jan-Dec. It also concatenates the labels by default. See below: this is five years of data, but it is only showing the sum of all events that happened in each month over those five years.

To change this you can click on the forked arrow to the left of the double arrow highlighted in the screenshot above. This will split the hierarchy. You will have to drill up to the highest level of the hierarchy first using the single up arrow. Click this until you are at years only. We can also turn off label concatenation. See the highlighted areas in the screenshot below. Now this is more like it!
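The difference between the two groupings is easier to see with a tiny dataset. The Python sketch below uses four made-up event dates and counts them first by month only (what the default drill-down does) and then by year and month (what the split hierarchy gives you).

```python
from collections import Counter
from datetime import date

# Made-up event dates spanning two years.
events = [date(2021, 1, 5), date(2021, 1, 20), date(2022, 1, 9), date(2022, 2, 3)]

# Month-only buckets: January of 2021 and January of 2022 are merged together.
by_month_only = Counter(d.month for d in events)

# Year + month buckets: each January is counted separately.
by_year_month = Counter((d.year, d.month) for d in events)

if __name__ == "__main__":
    print(dict(by_month_only))   # {1: 3, 2: 1}
    print(dict(by_year_month))   # {(2021, 1): 2, (2022, 1): 1, (2022, 2): 1}
```

If a monthly bar in your chart looks suspiciously tall, the month-only grouping is usually the reason.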

Using a Slicer as a time filter

Now we need an easier way to change the date range that we are viewing. Let’s add a Slicer for that! Drag the Slicer visualization to the canvas. You can let it live on top of the visualization or reorganize. Now drag the Date field of the events table into the new visualization. You should be left with a slider that can now filter the main visualization. Awesome. See the example below.

You can also change the way the Slicer looks or operates with the options menu in the top right. See below.

Ask questions about your data

Let’s add some additional functionality to our report. Click on the three dots, … , in the visualization selection panel. Then click Get More Visuals, then select or search for and select Text Filter by Microsoft. Add it to your environment. Then add it and the Q&A visualizations to your canvas. To use the Text Filter you need to give it fields to search in. Add the value1 field from the attributes table. This is the main field in the attributes table that stores your indicator of compromise or IoC for short.

After you rearrange some stuff to make everything fit, ask the following question in your Q&A visual, “How many attribute_id are there?”. Give it a minute and you should get back a count of the number of attributes in the dataset. Nice!

Now do a Text Search in that visual for an IP you know is in your MISP instance. I know we have the infamous 8.8.8.8 in ours, IDS flag set to false of course :). Now the text search will filter the Q&A answer and it should show you how many times that value is seen in your dataset. It also filters your bar chart to show you when the events were created that contain that data! If your bar chart doesn’t change, check your relationship maps. It might be the filtering direction. Play with this until your data behaves the way you need it to. Imagine the capabilities of this if you get creative! You can also mess with the built in design templates to make this sexier or you can manually change backgrounds, borders, etc.

Add in Geo-location data

Before we start: Sign up for a free account here: https://www.ip2location.io/

Record your API key, we will use this soon.

Let’s also create a new transform that will add geoip data to the IP addresses in our attributes table.

We are going to start by creating a new table with just IP attributes.

Click on Transform data in the Ribbon. Then right click on the misp attributes table.

Duplicate the table and then right click on the new table and select rename. I renamed mine “misp ip_addresses_last_30_days_geo”.

Now we are going to do some filtering to shrink this table to the last 30 days’ worth of IP attributes. If we did not do this we may burn through our API credits due to the amount of IPs in our MISP instance. Of course you can change the date range as needed for your use case.

Right click the column type and filter to just ip-src and ip-dst.

Then filter to the last 30 days. Right click the timestamp column and open Date/Time Filters > In the Previous…

In the dialog box, enter your time frame. I entered last 30 days as below.
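The two filters together boil down to "type is ip-src or ip-dst, and timestamp is no older than 30 days". Here is a rough Python sketch of that rule, handy if you want to estimate how many API lookups you are about to trigger. The row layout (dicts with type, value1, and timestamp keys) and the function name recent_ip_attributes are assumptions for the example, and the exact day boundaries of Power BI's "In the Previous" filter may differ slightly from this simple cutoff.

```python
from datetime import datetime, timedelta


def recent_ip_attributes(rows, now, days=30):
    """Keep only ip-src/ip-dst rows whose timestamp is within the last `days` days."""
    cutoff = now - timedelta(days=days)
    return [
        r for r in rows
        if r["type"] in ("ip-src", "ip-dst") and r["timestamp"] >= cutoff
    ]


if __name__ == "__main__":
    now = datetime(2022, 11, 9)
    sample = [
        {"type": "ip-src", "value1": "8.8.8.8", "timestamp": datetime(2022, 11, 1)},
        {"type": "ip-dst", "value1": "1.1.1.1", "timestamp": datetime(2022, 8, 1)},
        {"type": "domain", "value1": "example.com", "timestamp": datetime(2022, 11, 5)},
    ]
    print(len(recent_ip_attributes(sample, now)))  # 1
```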

Then we are going to follow the instructions that can be found at the following blog: https://www.fourmoo.com/2017/03/14/power-bi-query-editor-getting-ip-address-details-from-ip-address/

In that blog you create a custom function like the one below. Follow the instructions in that blog, it is a great read.

fn_GetIPAddressDetails

let

Source = (#"IP Address" as text) => let

Source = Json.Document(Web.Contents("https://api.ip2location.io/?ip=" & #"IP Address" & "&key=<ip2location_api_key>")),

#"Converted to Table" = Record.ToTable(Source),

#"Transposed Table" = Table.Transpose(#"Converted to Table"),

#"Promoted Headers" = Table.PromoteHeaders(#"Transposed Table")

in

#"Promoted Headers"

in

Source

Once you have this function saved you can use it to create a new set of columns in your new IP address table, the one we named “misp ip_addresses_last_30_days_geo” earlier. Use the column value1 for the argument of the function.
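If you would like to test your API key before wiring it into Power BI, the same request can be sketched in Python. The URL format is the one used in the function above; the helper names build_lookup_url and lookup_ip are made up for this example, and nothing is assumed here about which fields the service returns.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_BASE = "https://api.ip2location.io/"


def build_lookup_url(ip_address: str, api_key: str) -> str:
    """Build the same query URL that the Power Query function requests."""
    return API_BASE + "?" + urlencode({"ip": ip_address, "key": api_key})


def lookup_ip(ip_address: str, api_key: str, timeout: float = 10.0) -> dict:
    """Fetch the JSON record for one IP address (uses one API credit per call)."""
    with urlopen(build_lookup_url(ip_address, api_key), timeout=timeout) as resp:
        return json.load(resp)


# Usage (replace the placeholder with your own key):
#   record = lookup_ip("8.8.8.8", "<ip2location_api_key>")
#   print(sorted(record.keys()))
```

Keep in mind that every call counts against your API credits, just like each row in the Power BI table does.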

Sharing with the community

On the NVISO CTI Github page, you will find a Power BI template file that has all the Power BI related steps above for you. All you have to do is change the data source to your MISP and get an API key for https://www.ip2location.io/.

Download the template file located here: https://github.com/NVISOsecurity/nviso-cti/tree/master/Power_BI

Use the import function under the File menu in the Power BI Desktop ribbon.

Import the template. There will be errors as you have not specified your data source. Cancel the login dialog box and close the Refresh dialog box. It will show the IP of my dev MISP, you will need to specify your data source. Select Transform Data in the ribbon and then Data source settings. Here you can edit the source information and add your credentials. (Make sure you have configured your MISP instance for remote MySQL access and installed the MySQL .NET connector)

Make sure you set the encryption checkbox as needed.

Select Transform Data in the ribbon again and then Transform data to open the Power Query editor.

Then select the custom function for geoip and use the Advanced Editor to add your API key.

Now, if your data source settings/credentials are correct you can Close and Apply and it should start pulling in the data from your configured MISP instance.

Conclusion

Note of caution with all this, check your source data to make sure what you’re seeing in Power BI matches what you see in MISP. As my brother-in-law and data analytics expert, Joshua Henderson, says: “Always validate that what your outcome in Power BI/Tableau is correct for what you have in the DB. I will either already know what the outcome should be in my viz tool, or I will do it after I create my viz. Far too often I see data counts off and it can be as small as a mis-click on a filter, or as bad as your mapping being off and you are dropping a large percentage of say attribute_ids. It also can help you with identifying issues; either with your database not updating correctly, or an issue with your data refresh settings.”
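One low-tech way to follow that advice is to jot down row counts from the database and from your report and compare them side by side. The Python sketch below does just that comparison. The function name count_mismatches and the numbers in the example are invented; you would fill in the counts yourself, for instance from a SELECT COUNT(*) in MySQL Workbench and a card visual in Power BI.

```python
def count_mismatches(db_counts: dict, report_counts: dict) -> dict:
    """Return {table: (db_count, report_count)} for every table whose counts differ."""
    mismatches = {}
    for table in sorted(set(db_counts) | set(report_counts)):
        expected = db_counts.get(table)      # None if the table was never counted
        observed = report_counts.get(table)  # None if it is missing from the report
        if expected != observed:
            mismatches[table] = (expected, observed)
    return mismatches


if __name__ == "__main__":
    # Invented numbers, purely to show the output shape.
    db = {"events": 1200, "attributes": 250000}
    report = {"events": 1200, "attributes": 249100}
    print(count_mismatches(db, report))  # {'attributes': (250000, 249100)}
```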

Now that you have built your first visualization, I will leave it to you to build more and would love to see what you come up with. In the next blog I will demonstrate how to publish this data to the Power BI Service and use the Data Gateway to automate dataset refresh jobs! Once published to the Power BI Service you will be able to share your reports and create and share dashboards built from individual visuals in your reports. Even view all this on your phone!!

I also leave you with this idea. Now that your MISP data is in Power BI, what other data can you pull into Power BI to pair with this data? SIEM data? Data from your XDR/EDR? Data from your SOC’s case management solution? Data from your vulnerability management platform? You get the idea!

Until next time!

Thanks for reading!!!

Robert Nixon

@syloktools
