2022-11-28 22:15:35 Author: www.sentinelone.com

As more businesses move their applications to the cloud, in many cases they turn to Kubernetes (k8s) to manage them. In 2021, 96% of organizations surveyed by CNCF (Cloud Native Computing Foundation) were either using or evaluating Kubernetes. This open-source platform helps organizations orchestrate and automate the deployment of containerized applications and services.

While Kubernetes offers many benefits, it also presents new security challenges to overcome. In this post, we discuss how cybercriminals target Kubernetes environments and what organizations can do to protect their business.

Kubernetes Fundamentals

Kubernetes (hereafter, “k8s”) is an open-source container orchestration platform that was originally designed by Google and subsequently transferred to the Cloud Native Computing Foundation (CNCF). It automates the deployment, scaling, and management of containerized workloads.

K8s has become a popular choice for DevOps because it provides a mechanism for reliable container image build, deployment, and rollback, ensuring consistency across development, testing, and production.

In many ways, containers are similar to virtual machines; the biggest difference is that they have relaxed isolation properties, which allows them to share the operating system across applications. Containers are lightweight (especially compared to VMs), and each has its own file system and its own share of CPU, memory, and process space.

Quick Environmental Facts About Kubernetes

The majority of K8s implementations are managed via Infrastructure-as-a-Service (IaaS) tooling, such as Amazon Elastic Kubernetes Service (EKS) or GCP’s Google Kubernetes Engine (GKE). By using these Kubernetes-as-a-service tools, teams can focus on building and deploying, while the Cloud Service Provider (CSP) curates and updates core aspects of the K8s services.

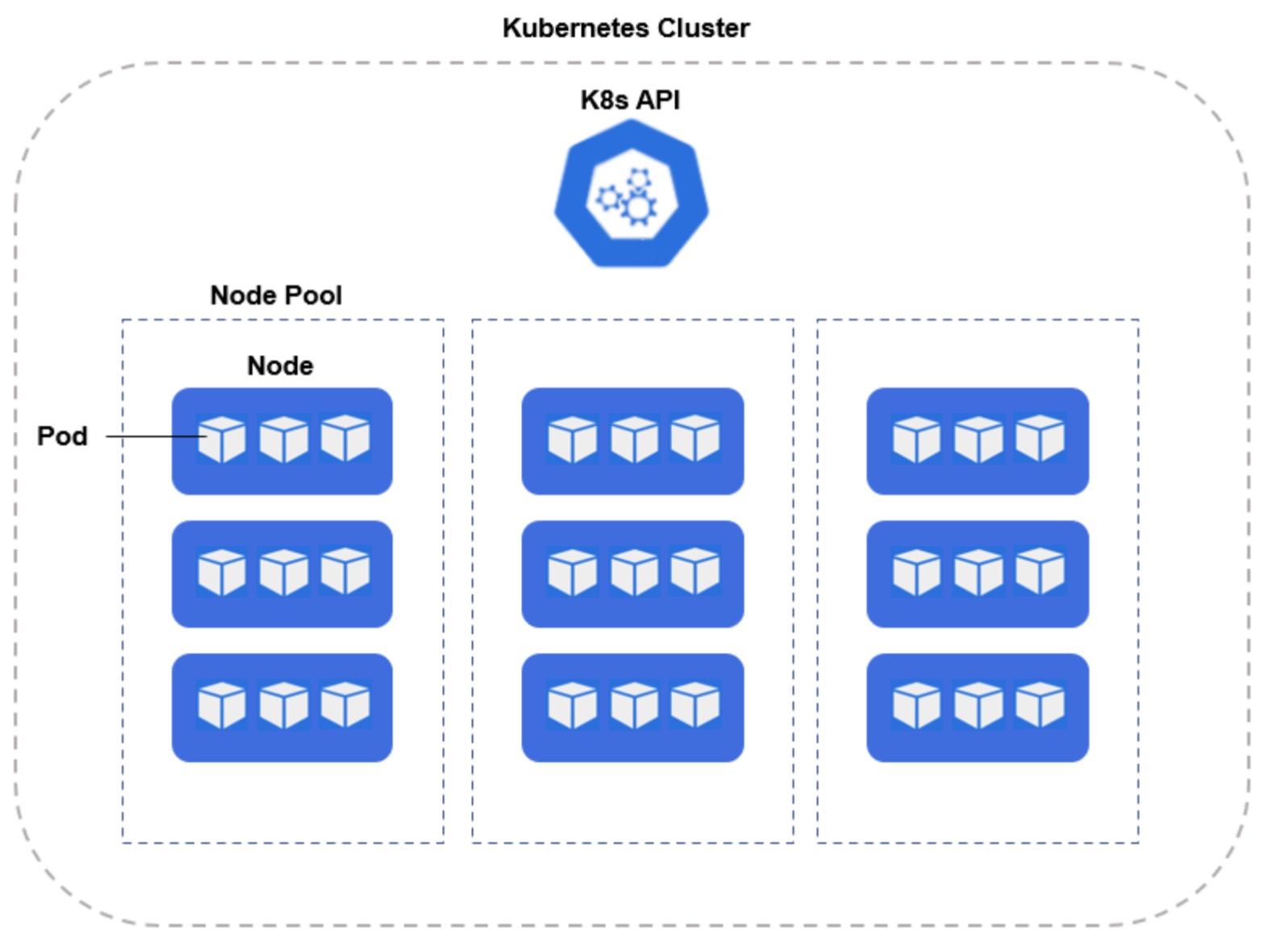

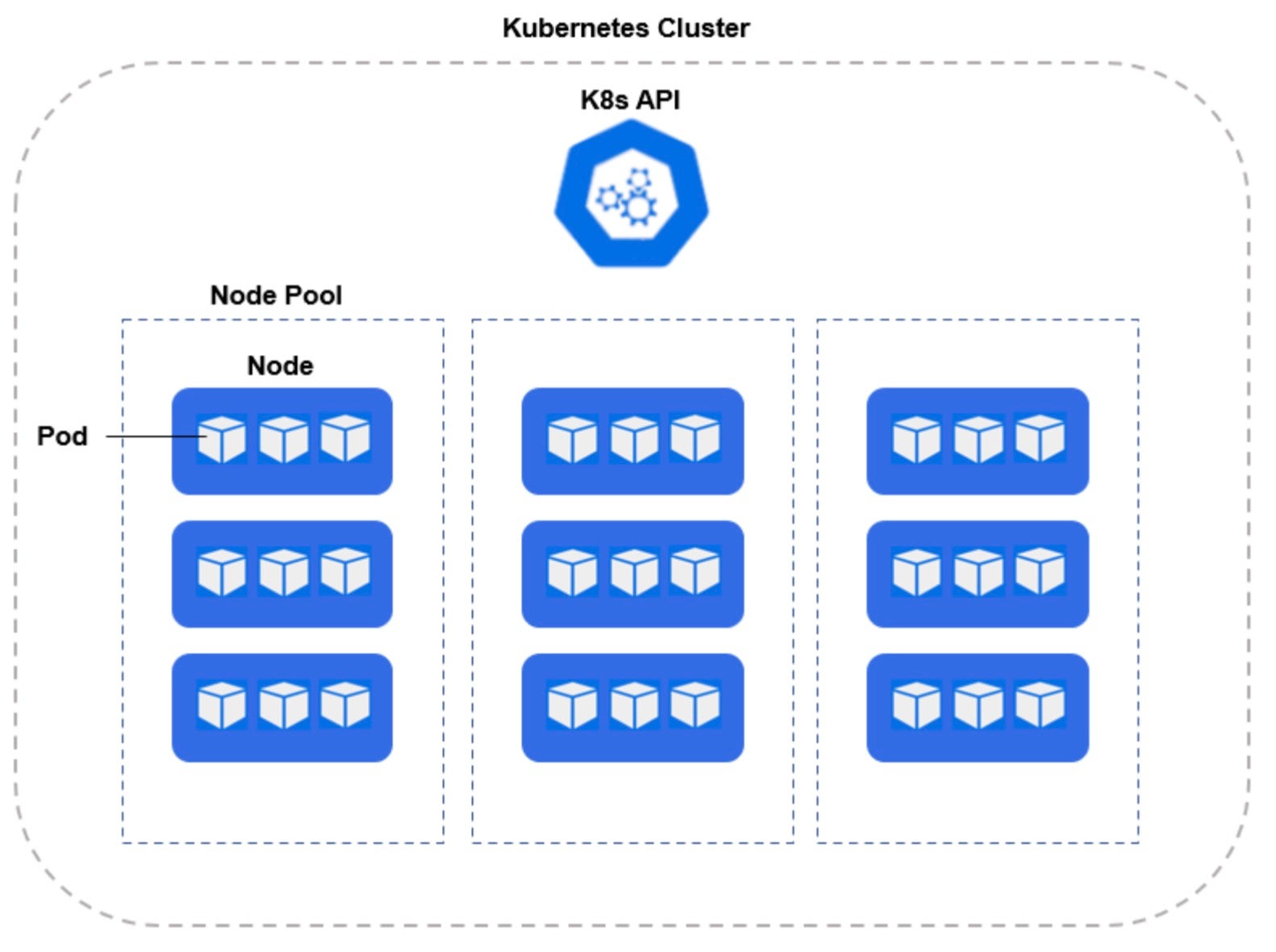

Kubernetes is deployed as a cluster, and the physical servers or virtual machines that make up the cluster are known as worker nodes. Each worker node runs a number of pods, and each pod is a logical grouping of one or more containers.
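The cluster, node, pod, and container hierarchy can be sketched as a small data model. This is only an illustration of the nesting described above, not a Kubernetes API; the class names, node names, and image names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    # A pod groups one or more containers.
    name: str
    containers: list[Container] = field(default_factory=list)


@dataclass
class WorkerNode:
    # A worker node (physical server or VM) runs a number of pods.
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    nodes: list[WorkerNode] = field(default_factory=list)

    def container_count(self) -> int:
        # Walk cluster -> nodes -> pods -> containers.
        return sum(len(pod.containers) for node in self.nodes for pod in node.pods)


# Hypothetical example data.
cluster = Cluster(
    name="demo",
    nodes=[
        WorkerNode("node-a", [Pod("web", [Container("app", "example/app:1.0")])]),
        WorkerNode(
            "node-b",
            [Pod("api", [Container("api", "example/api:1.0"),
                         Container("log-sidecar", "example/log:1.0")])],
        ),
    ],
)
print(cluster.container_count())
```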

Applications are composed of microservices in a modern cloud-native architecture.

Microservices is an architectural design that breaks a distributed application into independent services, each of which can be deployed on its own. The best practice is to run one microservice per pod.

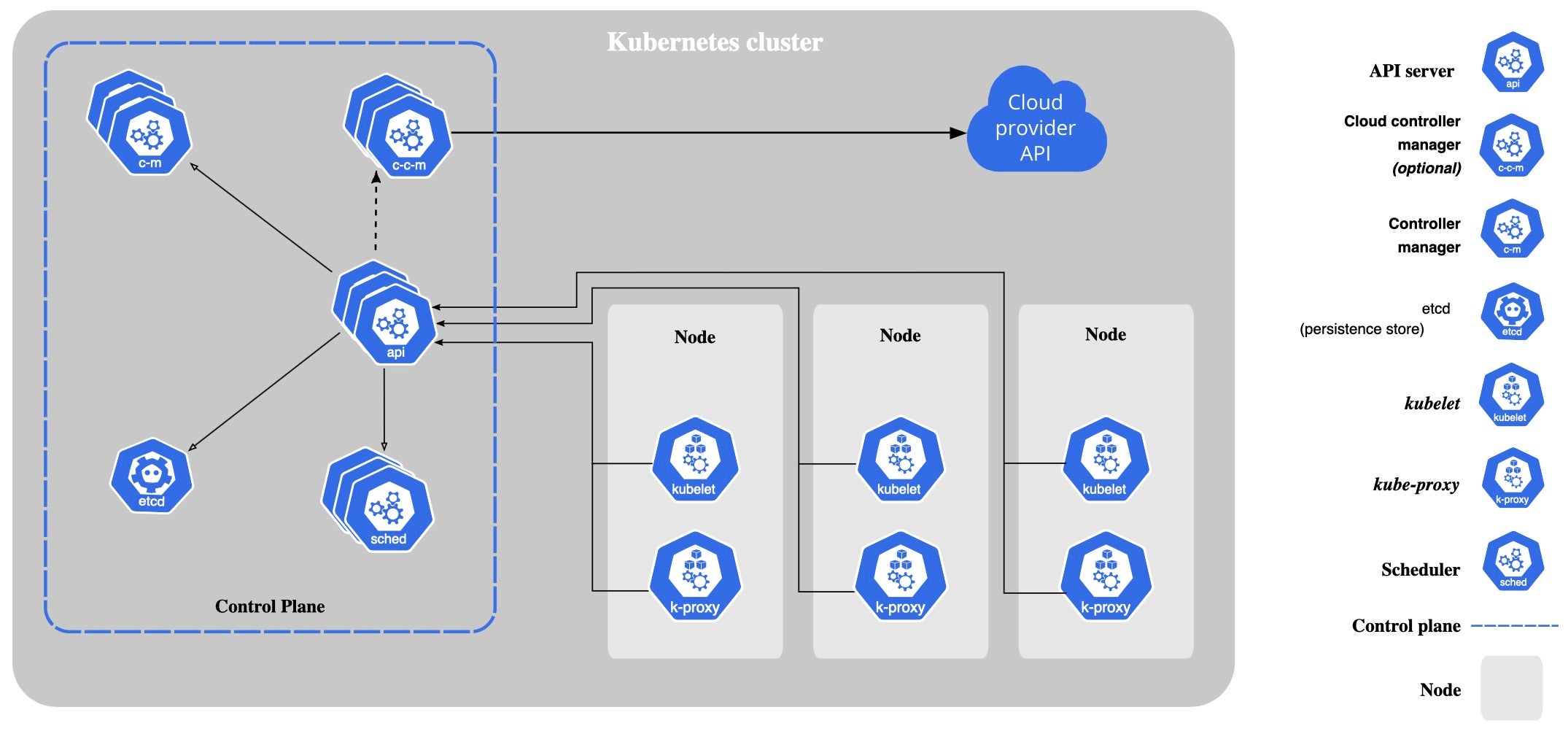

Orchestration is key to simplifying and automating workload infrastructure management. To that end, the control plane includes many components: a scheduler, controllers that detect and respond to cluster events (for nodes and for cloud providers, among others), and an API to query and manipulate the current state.

Several features are relevant for security professionals to be aware of:

- DaemonSet – A manifest of pods to automatically deploy to all worker nodes within a cluster. As the cluster scales out to meet workload demand, the DaemonSet ensures that each new worker node is given the appropriate pods and containers upon startup. This simplifies on-demand workload management and is helpful for security and monitoring agents.

- Namespace – A logical construct which allows isolation of resources within the cluster. A namespace separates users, apps, and resources into a specific scope.

- SecurityContext – Defines privileges and capabilities for individual pods and containers.

- Helm Chart – A manifest of files which describes a related set of K8s resources and which simplifies deployment.
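To make the first three features concrete, the sketch below builds a DaemonSet manifest as a plain Python dictionary, places it in a namespace, and gives its container a restrictive SecurityContext. The function name, agent name, namespace, and image are hypothetical, and the securityContext values are one reasonable starting point rather than a recommended baseline for every workload. The result is printed as JSON, which `kubectl apply -f` accepts alongside YAML. Helm is not covered here.

```python
import json


def build_agent_daemonset(name: str, namespace: str, image: str) -> dict:
    """Return a DaemonSet manifest that runs one agent pod per worker node."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        # The namespace scopes the resource within the cluster.
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Container-level privileges and capabilities.
                            "securityContext": {
                                "runAsNonRoot": True,
                                "allowPrivilegeEscalation": False,
                                "readOnlyRootFilesystem": True,
                                "capabilities": {"drop": ["ALL"]},
                            },
                        }
                    ]
                },
            },
        },
    }


# Hypothetical names, for illustration only.
manifest = build_agent_daemonset("monitoring-agent", "monitoring", "example.com/agent:1.0")
print(json.dumps(manifest, indent=2))
```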

Threat Landscape for Kubernetes

Because Kubernetes is still relatively complex to implement, it is often misconfigured and underprotected, which makes it a prime target for cybercriminals. Attacks often escape detection by traditional security tools and systems, and a compromise can allow a threat actor to take control of many containers and applications at once. Further, cloud workloads can provide cybercriminals with access to sensitive data that can then be used to launch attacks on other systems.

Real-World Kubernetes Attack Examples

CRI-O Container Runtime Allows Attackers Host Access

CRI-O is a lightweight container runtime for Kubernetes that implements the Kubernetes Container Runtime Interface (CRI). A security flaw in CRI-O, tracked as CVE-2022-0811, allowed attackers to escape the container and gain host access on systems running the runtime. The flaw has since been patched, and users are advised to update their CRI-O installations as soon as possible.

Kubernetes Flaw Exposes Cluster Data to Attackers

A flaw in the Kubernetes API server allowed attackers to gain access to sensitive data stored in etcd, the cluster’s key-value store. The flaw, discovered by researchers at Red Hat, could be exploited to gain access to data such as passwords, API keys, and SSL certificates.

The researchers found that the flaw could be exploited by creating a malicious container sending requests to the Kubernetes API server. These requests would then be forwarded to the etcd database, allowing the attacker to access the sensitive data.

Cryptomining Attack Against Kubernetes Cluster in Microsoft Azure

Microsoft disclosed that Kubernetes clusters running Kubeflow on Microsoft Azure were targeted by cybercriminals for cryptomining. By default, the Kubeflow dashboard is only accessible internally, but some developers had changed the Istio Service type to LoadBalancer, which exposed the service to the internet. As a result, anyone could access the Kubeflow dashboard directly and, with that, perform operations such as deploying new containers in the cluster.
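A misconfiguration like this one can be looked for by reviewing Service definitions. The following is a minimal sketch, assuming the Service manifests have already been loaded as Python dictionaries (for example, from the JSON output of `kubectl get services -A -o json`). The function name and the sample data are hypothetical. A LoadBalancer or NodePort Service is not necessarily internet-facing, so each finding is a prompt for review rather than proof of exposure.

```python
# Service types that make a workload reachable from outside the cluster.
EXTERNAL_TYPES = {"LoadBalancer", "NodePort"}


def find_exposed_services(services: list[dict]) -> list[str]:
    """Return 'namespace/name (type)' for every externally reachable Service."""
    exposed = []
    for svc in services:
        # A Service defaults to ClusterIP (internal only) when spec.type is omitted.
        svc_type = svc.get("spec", {}).get("type", "ClusterIP")
        if svc_type in EXTERNAL_TYPES:
            meta = svc.get("metadata", {})
            namespace = meta.get("namespace", "default")
            name = meta.get("name", "?")
            exposed.append(f"{namespace}/{name} ({svc_type})")
    return exposed


# Illustrative sample data, not taken from a real cluster.
services = [
    {"metadata": {"name": "istio-ingressgateway", "namespace": "istio-system"},
     "spec": {"type": "LoadBalancer"}},
    {"metadata": {"name": "internal-api", "namespace": "default"},
     "spec": {}},
]
exposed = find_exposed_services(services)
print(exposed)
```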

Security Tooling Challenges

Traditional security tools and procedures often focus on what is running within the enterprise network. For some organizations, when procedures like those for incident response were built and implemented, containerization or, to some extent, cloud computing wasn’t even broadly available. With that in mind, there are several challenges regarding protection, detection, and response capabilities for Kubernetes.

By Default, K8s Is Not Optimized for Security

K8s offers a lot of advantages for DevOps teams that need to scale operations rapidly. However, many are not aware that, by default, K8s is not optimized to be secure. For example, organizations need to consider how they will secure the API server from malicious access and how they will protect etcd with TLS, firewalls, and encryption. Also, features like audit logging are often disabled by default. Default configurations for K8s can result in cybercriminals being able to access K8s environments easily.
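As a rough illustration of reviewing those defaults, the sketch below inspects a kube-apiserver command line for a handful of hardening-related flags. The flags listed are real kube-apiserver options, but the selection, the function names, and the wording of the findings are assumptions made for this example. It is not a complete benchmark, it only understands the `--flag=value` form, and whether a missing flag is a problem depends on how the cluster is managed (a managed service may handle some of this on the provider side).

```python
# Flags whose absence usually deserves a second look (an illustrative subset).
RECOMMENDED_FLAGS = {
    "--audit-log-path": "audit events are not written to a log file",
    "--audit-policy-file": "no audit policy is configured",
    "--etcd-cafile": "the etcd server certificate is not verified",
    "--etcd-certfile": "no client certificate is presented to etcd",
    "--etcd-keyfile": "no client key is configured for etcd",
    "--encryption-provider-config": "Secrets are not encrypted at rest in etcd",
}


def parse_flags(args: list[str]) -> dict[str, str]:
    """Parse '--name=value' arguments into a dict; other arguments are ignored."""
    flags = {}
    for arg in args:
        if arg.startswith("--"):
            name, _, value = arg.partition("=")
            flags[name] = value
    return flags


def review_apiserver_args(args: list[str]) -> list[str]:
    """Return human-readable findings for missing or permissive settings."""
    flags = parse_flags(args)
    findings = [
        f"{flag} is not set: {reason}"
        for flag, reason in RECOMMENDED_FLAGS.items()
        if not flags.get(flag)
    ]
    # Anonymous requests are enabled unless the flag is explicitly set to false.
    if flags.get("--anonymous-auth", "true") != "false":
        findings.append("--anonymous-auth is not false: anonymous requests are accepted")
    return findings


for finding in review_apiserver_args(["kube-apiserver", "--etcd-cafile=/etc/ssl/etcd/ca.crt"]):
    print(finding)
```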

Lack of Visibility Into Running Processes and Potential Vulnerabilities

Simply put, organizations cannot protect what they cannot see. If security teams cannot see what is running inside a container, they cannot protect it, detect threats in it, or respond to them. It is therefore critical that, as a first step, organizations gain as much visibility as possible into what is running inside the Kubernetes environment.

Implementing the Principle of Least Privilege Is Challenging

In an environment as nuanced as K8s, implementing the principle of least privilege is a constant challenge. Default permissions and access rights in K8s, such as those of service accounts, add significant risk for organizations because they increase the attack surface.

While there must be a balance between productivity and security, it is important to understand the security implications. Due to lack of knowledge and configuration complexity, Role-Based Access Control (RBAC) is a common pain point for DevOps and SecOps teams, and a misconfigured RBAC setup can quickly become an entry point for cybercriminals.
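One common way RBAC drifts away from least privilege is through wildcard rules. The sketch below flags `*` entries in the `apiGroups`, `resources`, and `verbs` of a Role or ClusterRole that has been loaded as a Python dictionary. The function name and both sample roles are hypothetical, and a wildcard is only one signal among many; a role with no wildcards can still be far too broad.

```python
def find_wildcard_rules(role: dict) -> list[str]:
    """Return one finding per wildcard found in a Role or ClusterRole rule."""
    findings = []
    kind = role.get("kind", "Role")
    name = role.get("metadata", {}).get("name", "?")
    for index, rule in enumerate(role.get("rules", [])):
        for field_name in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(field_name, []):
                findings.append(f"{kind} {name}, rule {index}: wildcard in {field_name}")
    return findings


# Hypothetical roles: one overly broad, one scoped to reading pods.
broad_role = {
    "kind": "ClusterRole",
    "metadata": {"name": "ops-admin"},
    "rules": [{"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}],
}
narrow_role = {
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "default"},
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}],
}

for finding in find_wildcard_rules(broad_role):
    print(finding)
```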

Legacy Security Tools Are Unable to Provide the Required Scalability

Legacy security tools that were born on-premises and, to some extent, simply moved to the cloud often run into scaling issues when they attempt to secure cloud workloads. On-premises servers are rarely scaled up or down with workload demand, but in the cloud this happens routinely: an organization that operates a few dozen cloud workloads in the morning could reach a few thousand by the end of the day. Legacy security tools struggle to keep up with this scale of operations.

Lack of Contextual Insights Across Kubernetes Environments

Not all Cloud Workload Protection (CWP) solutions are equal. Some, for example, provide real-time visibility and protection; others depend on a kernel-based agent; and yet others simply provide a container inventory rather than true detection and response capabilities.

Organizations are in need of a solution that can look at what is running inside the Kubernetes environment and also provide environmental information such as cluster name, service account, instance size, image details, and more.

Friction Between SecOps and DevOps as Both Teams Typically Operate in Isolation

DevOps and SecOps are typically siloed in separate organizations, with one focusing on enabling new business scenarios and the other on reducing cyber risk by optimizing for protection. This can cause friction between the teams. DevOps builds new services that can scale and are easy to use, but some of the required configuration can widen the attack surface, which is exactly what SecOps is chartered to reduce.

To address this challenge, a DevSecOps collaboration framework can help as it adds security best practices into the software development and delivery lifecycle. By implementing DevSecOps, organizations can resolve the friction between DevOps and SecOps as they are now empowered to ship software in an agile fashion without compromising on security.

Kubernetes Security Best Practices

Kubernetes is a powerful tool that can help organizations manage their containerized workloads and services, but it also introduces new challenges for security teams.

Security teams can overcome these challenges using a proper mindset, best-in-class capabilities, and a low-friction approach. By following best practices, organizations can help ensure that their Kubernetes environment is secure and that they can quickly and effectively respond to any possible incidents.

There are a few key things that organizations should keep in mind when securing their Kubernetes environment.

- Ensure visibility into all aspects of the environment – This includes understanding what is running inside containers, as well as being able to monitor and alert on activity both inside and outside the cluster.

- Be able to quickly and easily respond to incidents when they occur – This means having a plan in place for responding to attacks, as well as the tools and procedures necessary to execute that plan.

- Ensure that their security posture keeps up with the development speed – This means automating as much of the security process as possible and integrating security into the DevOps workflow.

To secure a Kubernetes environment, organizations should consider the following:

- Monitor the Kubernetes cluster for unusual activity.

- Restrict network access to the Kubernetes API server to authorized users only.

- Enable auditing and logging for all actions taken on the Kubernetes cluster.

- Configure RBAC to control access to resources in the Kubernetes cluster.

- Deploy security tools, such as a Cloud Workload Protection Platform, in the Kubernetes cluster.
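For the auditing item, the sketch below shows what a small audit policy might look like, expressed as a Python dictionary and printed as JSON. The `audit.k8s.io/v1` Policy kind and the level names are part of Kubernetes; the specific rule choices are an assumption made for illustration. Secrets and ConfigMaps are logged at the Metadata level so that their contents do not end up in the audit log. The `level_for` helper is a hypothetical, heavily simplified stand-in for the API server's first-match rule evaluation; it ignores users, verbs, and namespaces.

```python
import json
from typing import Optional

audit_policy = {
    "apiVersion": "audit.k8s.io/v1",
    "kind": "Policy",
    "rules": [
        # Record who touched Secrets and ConfigMaps, but not the payloads.
        {"level": "Metadata",
         "resources": [{"group": "", "resources": ["secrets", "configmaps"]}]},
        # Record full request and response bodies for RBAC changes.
        {"level": "RequestResponse",
         "resources": [{"group": "rbac.authorization.k8s.io"}]},
        # Catch-all: record metadata for everything else.
        {"level": "Metadata"},
    ],
}


def level_for(policy: dict, group: str, resource: str) -> Optional[str]:
    """Simplified first-match lookup of the audit level for a resource."""
    for rule in policy["rules"]:
        selectors = rule.get("resources")
        if not selectors:
            # A rule without resource selectors matches every resource.
            return rule["level"]
        for selector in selectors:
            same_group = selector.get("group", "") == group
            # An empty resource list matches all resources in the group.
            names = selector.get("resources")
            if same_group and (not names or resource in names):
                return rule["level"]
    return None  # no rule matched


print(json.dumps(audit_policy, indent=2))
print(level_for(audit_policy, "", "secrets"))
```

A policy file like this is passed to the API server with `--audit-policy-file`, and `--audit-log-path` controls where the resulting events are written.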

Kubernetes is a powerful tool that can help organizations manage their containerized workloads and services. However, it is important for security teams to understand the challenges that come with Kubernetes and to put the proper safeguards in place. By following best practices, such as those listed above, organizations can help ensure that their Kubernetes environment is secure.

How SentinelOne Can Help Reduce Risks of Kubernetes Attacks

The rise of containers and Kubernetes has been accompanied by an increase in the number of attacks targeting these technologies. To properly protect Kubernetes environments, organizations need a purpose-built security solution for these technologies.

SentinelOne’s platform is designed to provide visibility into and protection for containerized workloads and services. The platform’s architecture allows it to be deployed quickly and easily without requiring extensive changes to existing workflows or processes. Its intelligent, autonomous agents can adapt to changing conditions in real time, providing always-on protection. With SentinelOne, organizations can confidently deploy Kubernetes, knowing that their environment is protected against the latest threats.

With SentinelOne’s Singularity™ Cloud Workload Security solution, organizations benefit from the following:

- One common security umbrella for cloud instances and on-prem endpoints

- Runtime prevention, detection, and visibility for workloads running in public clouds and private data centers

- XDR-powered threat hunting, investigations, and forensics across logs

- One-click response and remediation

- Deploy, manage, and update easily

- Maximize uptime with a stable, no-interference design that leverages a highly performant eBPF agent that is deployed as a DaemonSet on a K8s node.

- Get coverage for many supported operating systems and container platforms such as Amazon ECS, Amazon EKS, and GCP GKE.

The following short demonstration shows how Singularity Cloud’s extended visibility detects and defends against a Doki malware infection, just one example of how Singularity Cloud protects cloud workloads running in Kubernetes from runtime threats and active exploitation.

To learn more about how SentinelOne can help you secure your Kubernetes environment, contact us or request a demo today.
