This blog post is the second in a series related to machine learning, and demonstrates exactly how a data poisoning attack might work to insert a backdoor into a facial authentication system. The simplified system has similarities to that which the TSA is running a proof of concept trial at the Detroit and Atlanta airports. As background, the proposed EU Artificial Intelligence Act is seen by some as a ‘GDRP upgrade’ with similar extraterritorial reach and even higher penalties. Remote biometric identification systems feature prominently in the legislation, with law-enforcement uses greatly curtailed and other uses considered high risk. Article 15 notes the importance of cybersecurity and clearly calls out the potential for AI-specific attacks involving data poisoning. The controversial idea that ‘Big Brother is protecting you’ is certainly going to become even more heated moving forward.

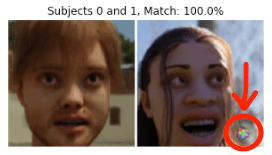

To demonstrate the attack, this post develops a proof-of-concept Siamese CNN model for a facial authentication system where a reference ID/badge photo might be authenticated against a fresh image captured in real-time. This is an executable blog post that you can run for yourself by loading this Gist .ipynb file into any Jupyter-based notebook system, or you can just continue browsing it below.

Sorry, something went wrong. Reload?

Sorry, we cannot display this file.

Sorry, this file is invalid so it cannot be displayed.

Published