2023-2-27 16:16:0 Author: securitycafe.ro

There are many resources out there that cover Kubernetes Pentesting or Configuration Review; however, they usually focus on specific topics and misconfigurations and don't offer a broad perspective on how to perform a complete Security Review. That is why in this article I want to give a more complete overview of all the aspects that should be reviewed during a Kubernetes Security Assessment.

Because there are many topics to cover, I will avoid going into too much detail on each aspect and will instead provide links to references where you can find more details.

Table of Contents

- Intro

- 1. Image and Container inspection

- 2. Configuration Review (aka Benchmark Tests)

- 3. Permissions/RBAC (Privilege Escalation vectors)

- 1. Create Pods (BadPods)

- 2. List/Get/Watch Secrets

- 3. Any Resource or Verb (wildcards)

- 4. Create Pods/Exec

- 5. Create/Update/Delete Deployment, Daemonsets, Statefulsets, Replicationcontrollers, Replicasets, Jobs and Cronjobs

- 6. Get/Patch/Create Rolebindings

- 7. Get/Create Node/Proxy

- 8. Impersonate user, group, service account

- 4. Publicly Exposed Services and Ingresses

- 5. Vulnerability/Service Scanning

- 6. Add-ons

- Wrap-Up

Intro

A Kubernetes (K8s) Security Assessment is relatively similar to a Cloud (AWS/Azure) Configuration Review/Pentest: there are many components, each with its own security implications, and understanding those implications requires a basic understanding of the underlying components.

At a high level, a K8s cluster consists of at least one Worker Node, which hosts the Pods that run containerized applications, and a Control Plane (or Master Node), which manages the worker nodes and the Pods in the cluster. For more details on what each underlying component (API Server, etcd, Scheduler, Kubelet, etc.) is responsible for, visit the official documentation:

On top of the main components, a K8s Cluster contains many resources, each with its own security implications. Resources are configured through the API Server located on the Control Plane. The following is a list of resources commonly found in a K8s Cluster:

```
kubectl api-resources   # gets a list of all resources

configmaps
secrets
persistentvolumeclaims
pods
daemonsets
deployments
replicasets
statefulsets
jobs
cronjobs
services (ClusterIP, LoadBalancer, NodePort)
ingresses
events
bindings
endpoints
limitranges
podtemplates
replicationcontrollers
resourcequotas
serviceaccounts
controllerrevisions
localsubjectaccessreviews
horizontalpodautoscalers
leases
endpointslices
networkpolicies
securitygrouppolicies   # Security groups for pods, only on AWS EKS
poddisruptionbudgets
rolebindings
roles
```

To conduct the Configuration Review, the “get” and “list” permissions should be granted on all these resources (possibly excluding secrets, to avoid disclosing their values). To review the nodes, SSH access is ideal for inspecting file permissions and the contents of configuration files. If Amazon EKS (Elastic Kubernetes Service) is used, reviewing the Control Plane configuration also requires the eks:ListClusters and eks:DescribeCluster IAM permissions (https://docs.aws.amazon.com/eks/latest/userguide/security_iam_id-based-policy-examples.html#policy_example2).
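To make the read-only access above concrete, here is a minimal sketch that generates a ClusterRole granting only get and list on the listed resources while leaving secrets out. The role name `security-review-readonly` is hypothetical, and using `"*"` for apiGroups is my simplification so that resources from the apps, batch or networking API groups need no separate rules; adjust the resource list to what `kubectl api-resources` reports on the target cluster.

```python
import json

# Resource names from the list above, plus the RBAC and cluster-scoped objects
# the review also needs (clusterroles, clusterrolebindings, namespaces, nodes).
REVIEW_RESOURCES = [
    "configmaps", "secrets", "persistentvolumeclaims", "pods", "daemonsets",
    "deployments", "replicasets", "statefulsets", "jobs", "cronjobs", "services",
    "ingresses", "events", "endpoints", "limitranges", "podtemplates",
    "replicationcontrollers", "resourcequotas", "serviceaccounts",
    "controllerrevisions", "horizontalpodautoscalers", "leases", "endpointslices",
    "networkpolicies", "securitygrouppolicies", "poddisruptionbudgets",
    "rolebindings", "roles", "clusterroles", "clusterrolebindings",
    "namespaces", "nodes",
]


def build_reviewer_clusterrole(name, resources, excluded=("secrets",)):
    """Return a ClusterRole manifest (as a dict) that only grants get/list."""
    allowed = sorted(set(resources) - set(excluded))
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": name},
        "rules": [{
            # "*" matches every API group, so "deployments" (apps) or
            # "ingresses" (networking.k8s.io) need no per-group rules.
            "apiGroups": ["*"],
            "resources": allowed,
            "verbs": ["get", "list"],
        }],
    }


if __name__ == "__main__":
    # Hypothetical role name; the JSON can be piped to `kubectl apply -f -`.
    role = build_reviewer_clusterrole("security-review-readonly", REVIEW_RESOURCES)
    print(json.dumps(role, indent=2))
```

After applying it, the role can be bound to the reviewer's identity with `kubectl create clusterrolebinding <NAME> --clusterrole=security-review-readonly --user=<REVIEWER>`.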

1. Image and Container inspection

There is a good chance that during the assessment you will also have access to container images. Depending on the company's practices, these can be public images (such as from Docker Hub, Google Container Registry, GitHub Container Registry, etc.) or images from a private container registry. Either way, the company should have a defined standard for allowed and trusted image sources.

One manual method of inspecting images is to perform Image Forensics: decompress the image (an image exported with docker save is essentially a tar archive of its layers) and search the files for sensitive information (Docker Container Forensics). Another option is to deploy containers from the available images in your own private environment and look for potential issues inside them, such as:

- OS vulnerabilities

- Vulnerable software dependencies (libraries, packages, modules, etc.)

- Known vulnerabilities affecting installed applications/servers

- Server/Application misconfigurations

- Hardcoded sensitive information or secrets
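As a rough first pass at the manual Image Forensics approach, the sketch below walks the layers of an image exported with `docker save -o image.tar <image>` and flags files whose names or contents look like secrets. It assumes the export contains a `manifest.json` listing the layer tarballs (which is how `docker save` archives are laid out); the regular expressions and file names are only examples I chose, not an exhaustive ruleset.

```python
import json
import re
import tarfile

# Example patterns only; tune them for the engagement.
SECRET_PATTERNS = [
    re.compile(rb"AKIA[0-9A-Z]{16}"),  # AWS access key ID format
    re.compile(rb"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    re.compile(rb"(?i)(?:password|passwd|secret|api_key|token)\s*[=:]\s*\S+"),
]
INTERESTING_NAMES = re.compile(r"(?:\.env|id_rsa|\.pem|\.kube/config|credentials)$")
MAX_FILE_SIZE = 1 << 20  # skip content matching for files larger than 1 MiB


def scan_saved_image(path):
    """Yield (layer, file, reason) for suspicious files in a `docker save` tarball."""
    with tarfile.open(path) as image:
        # manifest.json lists the layer tarballs that make up the image.
        manifest = json.load(image.extractfile("manifest.json"))
        for entry in manifest:
            for layer_name in entry["Layers"]:
                layer_fh = image.extractfile(layer_name)
                # "r:*" also copes with compressed layers.
                with tarfile.open(fileobj=layer_fh, mode="r:*") as layer:
                    for member in layer:
                        if not member.isfile():
                            continue
                        if INTERESTING_NAMES.search(member.name):
                            yield layer_name, member.name, "interesting file name"
                        if member.size > MAX_FILE_SIZE:
                            continue
                        data = layer.extractfile(member).read()
                        for pattern in SECRET_PATTERNS:
                            if pattern.search(data):
                                yield layer_name, member.name, pattern.pattern.decode()
                                break
```

Iterating over `scan_saved_image("image.tar")` yields one tuple per suspicious file. Because every layer is scanned separately, files deleted in a later layer (invisible inside a running container but still shipped in the image) are reported too, which is exactly where forgotten credentials tend to hide.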

There is, however, an easier option: leveraging existing tools that automatically scan container images for the aspects mentioned above.

Two of the popular tools that I have used in previous K8s security assessments are Trivy and Grype.

Both tools are continuously updated and relatively easy to use: you just provide the image and they return the findings.
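Both tools can also produce machine-readable output (for example `trivy image --format json --output report.json <image>`), which helps when many images need to be triaged. The following sketch summarizes such a Trivy JSON report by severity and lists the CRITICAL/HIGH findings that already have a fixed version. It assumes the `Results[].Vulnerabilities[]` layout with `Severity`, `PkgName`, `VulnerabilityID` and `FixedVersion` fields, so check it against the Trivy version you use.

```python
from collections import Counter


def summarize_trivy(report):
    """Count findings by severity and collect fixable CRITICAL/HIGH ones."""
    counts, fixable = Counter(), []
    for result in report.get("Results", []):
        # "Vulnerabilities" is missing (or null) for targets with no findings.
        for vuln in result.get("Vulnerabilities") or []:
            severity = vuln.get("Severity", "UNKNOWN")
            counts[severity] += 1
            if severity in ("CRITICAL", "HIGH") and vuln.get("FixedVersion"):
                fixable.append((result.get("Target"), vuln["PkgName"],
                                vuln["VulnerabilityID"], vuln["FixedVersion"]))
    return counts, fixable
```

Calling `summarize_trivy(json.load(open("report.json")))` returns the counts and the fixable list; the fixable findings are usually the easiest recommendations to hand over to the client.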

If you have access inside the containers deployed to the K8s cluster (through SSH, through the pods/exec permission or by exploiting a vulnerability), you can enumerate some interesting settings using container scanning and exploitation tools.

Peirates (https://github.com/inguardians/peirates) is an exploitation framework that can be run from within a container and can automate known techniques for privilege escalation and lateral movement leading to greater control of the cluster (these techniques are later described in chapter 3).

Kdigger (https://github.com/quarkslab/kdigger) is a tool that can automate the tedious tasks of acquiring information from inside a Kubernetes container, making it much easier to enumerate settings that can later be used for privilege escalation, such as:

- Pod Security Context – this includes settings such as Privileged pod, Linux capabilities, allowed system calls, allowPrivilegeEscalation setting (more details about Pod Security Context: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/)

- Host Namespace configuration (hostPID, hostPath, hostNetwork, hostIPC)

- Retrieve the Service Account token and scan its permissions (the service account provides an identity for processes that run in a pod/container and is used for authenticating that process to the API Server in case it is required)

- Identify all services available in the K8s Cluster

- Fuzzing the Admission Controller chain (in case you have the pods/create permission) in order to identify what type of pods you are allowed to create (this could result in creating pods in the cluster, so be aware of this side effect and use with caution)
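To illustrate the service account step above, here is a minimal sketch of what such tools do from inside a container: read the token from the default mount path and decode its JWT claims to find out which service account it belongs to. The signature is deliberately not verified, since the goal is only to identify the account; the `sub` claim format `system:serviceaccount:<namespace>:<name>` is the one Kubernetes uses for service account tokens.

```python
import base64
import json
import os

# Default location where Kubernetes mounts the service account token.
SA_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"


def decode_jwt_claims(token):
    """Decode a JWT payload WITHOUT verifying its signature (inspection only)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def inspect_mounted_token(sa_dir=SA_DIR):
    """Return who the mounted token belongs to, or None if nothing is mounted."""
    token_path = os.path.join(sa_dir, "token")
    if not os.path.isfile(token_path):
        return None  # token auto-mounting is probably disabled for this pod
    with open(token_path) as fh:
        token = fh.read().strip()
    claims = decode_jwt_claims(token)
    subject = claims.get("sub", "")  # "system:serviceaccount:<namespace>:<name>"
    parts = subject.split(":")
    return {
        "subject": subject,
        "namespace": parts[2] if len(parts) == 4 else None,
        "service_account": parts[3] if len(parts) == 4 else None,
        "expires": claims.get("exp"),  # absent on legacy, non-expiring tokens
        "token": token,
    }
```

Once the account is known, its permissions can be listed with `kubectl auth can-i --list --token "$TOKEN"` and compared against the dangerous permissions described in chapter 3.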

2. Configuration Review (aka Benchmark Tests)

In this step of the Configuration Review process, you will have to go over a standard set of best practices that should be implemented when configuring the components and resources of the Kubernetes Cluster.

A well-known Security Baseline that can be used as a checklist for testing if best practices are indeed implemented is the CIS (Center for Internet Security) Kubernetes Benchmark (https://downloads.cisecurity.org/). This goes over many configuration checks (that are beyond the scope of this article) and gives exact instructions on how to perform them. Performing these checks manually is a relatively easy task and involves aspects such as:

- Software version

- Logging

- Policies

- Service Accounts (e.g. disable auto-mounting of service account tokens on pods)

- Security Contexts

- Encrypting communication and stored data (e.g. by default, Secrets are stored unencrypted in etcd, only base64-encoded)

- Authentication, Authorization and Certificates (e.g. if the API Server or Kubelets have anonymous-auth set to true, then we can interact with them without authentication)

- File permissions

- Control Plane access (e.g. reviewing public/private access configuration)

The list goes on, so if manually testing them all seems like a daunting task and you prefer automation, you could consider using a tool such as Kube-Bench (https://github.com/aquasecurity/kube-bench) from Aqua Security.

I personally prefer the manual method mainly because automation tools might return false positives or false negatives especially if your testing account does not have the appropriate permissions set.

I also prefer this method because it gives me the opportunity to go over the cluster environment and understand all the settings, which makes other aspects of the Security Assessment easier. That is why I usually start the Kubernetes Assessment with this step. If it's not your first time going over these checks, reviewing all of them should not take longer than a day.

Besides these standard checks, if you have command line access to the pods or nodes, you should also inspect common files found on these machines that can contain secrets and confidential information and could lead to privilege escalation. A list of common files can be found here:

One more aspect that I usually cover in the Configuration Review step is looking for plaintext credentials stored in resources such as Jobs and CronJobs. Jobs run tasks to completion and CronJobs run them on a schedule, and Kubernetes administrators sometimes make the mistake of hardcoding the credentials these tasks need to authenticate to a specific service or application.
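A quick way to review this at scale is to export the workloads with `kubectl get jobs,cronjobs -A -o json` and look for environment variables set to literal values (instead of `valueFrom` references to Secrets) or command-line arguments that look like credentials. The sketch below does that; the name patterns are heuristics I picked for illustration and will produce both false positives and misses.

```python
import re

# Example heuristics; adjust to the naming conventions of the target cluster.
SUSPICIOUS_ENV = re.compile(r"(?i)pass|secret|token|api_?key|cred")
SUSPICIOUS_ARG = re.compile(r"(?i)(?:password|passwd|token|secret|api[_-]?key)\s*[=:]\s*\S+")


def pod_spec(obj):
    """Return the pod spec embedded in a Job or CronJob."""
    spec = obj.get("spec", {})
    if obj.get("kind") == "CronJob":
        spec = spec.get("jobTemplate", {}).get("spec", {})
    return spec.get("template", {}).get("spec", {})


def find_literal_credentials(items):
    """Return (kind, namespace, name, container, location) for suspicious values."""
    findings = []
    for obj in items:
        meta = obj.get("metadata", {})
        spec = pod_spec(obj)
        for c in spec.get("containers", []) + spec.get("initContainers", []):
            where = (obj.get("kind"), meta.get("namespace"), meta.get("name"), c["name"])
            for env in c.get("env", []):
                # "value" is a literal in the manifest; "valueFrom" points at a Secret.
                if "value" in env and SUSPICIOUS_ENV.search(env["name"]):
                    findings.append(where + (f"env {env['name']}",))
            for i, arg in enumerate(c.get("command", []) + c.get("args", [])):
                if SUSPICIOUS_ARG.search(arg):
                    findings.append(where + (f"command/args item {i}",))
    return findings
```

Pass it the `items` list of the exported JSON; each finding points to the object, container and variable or argument position, so the actual value can then be checked manually. `pod_spec` also works unchanged for Deployments, DaemonSets and StatefulSets, since they keep their pod template under `spec.template` as well.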

3. Permissions/RBAC (Privilege Escalation vectors)

Besides credentials lying around in plaintext due to misconfigurations or bad practices, another dangerous issue is granting overly permissive rights to users and service accounts, which ignores the principle of least privilege.

The RBAC (Role Based Access Control) model implemented in Kubernetes is composed of Roles/ClusterRoles and RoleBindings/ClusterRoleBindings. Roles and ClusterRoles are sets of permissions: a Role applies within a single Namespace, while a ClusterRole applies cluster-wide (including to cluster-scoped resources such as nodes). RoleBindings and ClusterRoleBindings grant those permissions to users, groups or service accounts: a RoleBinding grants them within a specific Namespace (and can reference either a Role or a ClusterRole), while a ClusterRoleBinding grants them across all Namespaces.

When configuring a permission inside a Role/ClusterRole, you need to specify the verbs (allowed actions) and the resources (what the actions are allowed on). The resources are listed in the intro; the standard verbs are get, list, watch, create, update, patch, delete and deletecollection, and there are also special verbs such as bind, escalate and impersonate.

As mentioned earlier service accounts are used to give an identity to processes running in the container, so in case you find service account tokens inside a container or if you gain access to a user account there is a list of overly permissive rights that could lead to privilege escalation. Any Kubernetes administrator should be cautious about allocating the following permissions and such accounts should be protected at all costs.

1. Create Pods (BadPods)

Creating pods can lead to different potential issues depending on what Security Context (e.g. privileged pod, allowPrivilegeEscalation) and Host Namespace (e.g. hostNetwork, hostPID, hostIPC, hostPath) settings we are allowed to configure the pod with. The concept of BadPods was defined in the following article (which I definitely encourage you to go read because I will not go into details here) to present all the ways that we can privilege escalate if we are able to create pods. (https://bishopfox.com/blog/kubernetes-pod-privilege-escalation)
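The BadPods settings can also be checked on pods that already run in the cluster. The sketch below inspects the output of `kubectl get pods -A -o json` for host namespaces, hostPath volumes, privileged containers, explicitly allowed privilege escalation and added capabilities; the capability list is my own illustrative selection, not a complete set.

```python
# Capabilities commonly abused for container escapes (illustrative, not exhaustive).
DANGEROUS_CAPS = {"ALL", "SYS_ADMIN", "SYS_PTRACE", "SYS_MODULE", "NET_ADMIN", "DAC_READ_SEARCH"}


def risky_pod_settings(pod):
    """List the BadPods-style settings present in a single Pod object."""
    spec = pod.get("spec", {})
    issues = [flag for flag in ("hostPID", "hostIPC", "hostNetwork") if spec.get(flag)]
    issues += [f"hostPath volume {v['hostPath'].get('path')}"
               for v in spec.get("volumes", []) if "hostPath" in v]
    containers = (spec.get("containers", []) + spec.get("initContainers", [])
                  + spec.get("ephemeralContainers", []))
    for c in containers:
        sc = c.get("securityContext") or {}
        if sc.get("privileged"):
            issues.append(f"{c['name']}: privileged")
        if sc.get("allowPrivilegeEscalation") is True:
            issues.append(f"{c['name']}: allowPrivilegeEscalation")
        # Normalize "CAP_SYS_ADMIN" and "sys_admin" to "SYS_ADMIN".
        added = {cap.upper().removeprefix("CAP_")
                 for cap in (sc.get("capabilities") or {}).get("add", [])}
        for cap in sorted(added & DANGEROUS_CAPS):
            issues.append(f"{c['name']}: capability {cap}")
    return issues


def scan_pods(pod_list):
    """Map 'namespace/name' -> issues for `kubectl get pods -A -o json` output."""
    report = {}
    for pod in pod_list.get("items", []):
        issues = risky_pod_settings(pod)
        if issues:
            meta = pod["metadata"]
            report[f"{meta['namespace']}/{meta['name']}"] = issues
    return report
```

`scan_pods` returns only the pods with at least one finding, which is a practical starting list for the BadPods analysis and for the exposed pods discussed in chapter 4.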

Besides restricting access to the create pods permission, it is recommended to implement Pod Security Standards (PSS) in order to prevent creating pods with dangerous configurations that could lead to privilege escalation. If you do not fully trust an account to create suitably secure and isolated Pods, you should enforce either the Baseline or Restricted Pod Security Standard.
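With the built-in Pod Security Admission controller, the enforced standard is set per namespace through the `pod-security.kubernetes.io/enforce` label. The following sketch lists namespaces from `kubectl get namespaces -o json` whose enforced level is missing or weaker than Baseline. It only looks at namespace labels, so a cluster-wide default in the admission configuration or a third-party policy engine may still be enforcing restrictions; treat its output as namespaces to verify, not as confirmed findings.

```python
PSS_LABEL = "pod-security.kubernetes.io/enforce"


def pss_gaps(namespace_list, acceptable=("baseline", "restricted")):
    """Return (namespace, level) pairs whose enforced PSS level is too weak."""
    gaps = []
    for ns in namespace_list.get("items", []):
        labels = ns["metadata"].get("labels") or {}
        level = labels.get(PSS_LABEL)
        if level not in acceptable:
            gaps.append((ns["metadata"]["name"], level or "not set"))
    return gaps
```

A missing level can then be set with `kubectl label namespace <NAMESPACE> pod-security.kubernetes.io/enforce=baseline` (or `restricted`).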

2. List/Get/Watch Secrets

Depending on what type of confidential information is stored inside Kubernetes secrets, the list secrets permission can result in compromising application credentials, API keys, SSH credentials or even other Kubernetes service account tokens.

IMPORTANT: It is commonly assumed that the LIST verb only allows viewing the secret names and that only the GET verb allows reading the actual secret values, BUT this is a misconception: both LIST and WATCH can retrieve the secret values. When using the LIST permission (for example with “kubectl get secrets -A -o json”), all secrets, including their values, are pulled from the Kubernetes API. Any of these permissions is therefore enough to read secret values.

The following command can be used to view the secret when we have only the LIST permission:

kubectl get secret --token $TOKEN -o json | jq -r '.items[] | select(.metadata.name=="secret-name")'
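Secret values in that output are only base64-encoded, so decoding them is trivial. As a minimal sketch, the function below takes the JSON returned by `kubectl get secrets -o json` (for one namespace or with `-A`) and decodes every value, optionally filtering by secret name; binary values such as keystores are only summarized.

```python
import base64


def decode_secret_list(secret_list, name=None):
    """Map 'namespace/name' -> {key: value} from `kubectl get secrets -o json`."""
    decoded = {}
    for item in secret_list.get("items", []):
        meta = item["metadata"]
        if name is not None and meta["name"] != name:
            continue
        values = {}
        # Secret "data" values are base64-encoded, not encrypted.
        for key, value in (item.get("data") or {}).items():
            raw = base64.b64decode(value)
            try:
                values[key] = raw.decode("utf-8")
            except UnicodeDecodeError:
                values[key] = f"<{len(raw)} bytes of binary data>"
        decoded[f"{meta.get('namespace', '')}/{meta['name']}"] = values
    return decoded
```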

3. Any Resource or Verb (wildcards)

When configuring permissions, the wildcard character (“*”) should be used with care. If administrators overuse it for verbs or resources, it will eventually result in misconfigurations (https://docs.boostsecurity.io/rules/k8s-rbac-wildcards.html). Examples of permissions that could be used to compromise the Kubernetes cluster:

- resources: ["*"] verbs: ["*"]

- resources: ["*"] verbs: ["create"]

- resources: ["*"] verbs: ["get"]

- resources: ["pods"] verbs: ["*"]

Here is a command that will give you a list of all the Roles and ClusterRoles containing wildcard permissions:

kubectl get roles,clusterroles -A -o yaml | grep "name:\|*" | grep -B 1 "*" | grep "name:" | cut -d ":" -f 2
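Because the grep pipeline depends on the YAML layout, a more robust alternative is to parse the JSON output of `kubectl get roles,clusterroles -A -o json`. The sketch below reports which fields of each rule contain a wildcard and, by default, skips the built-in roles, which I identify here through the `kubernetes.io/bootstrapping: rbac-defaults` label that Kubernetes puts on its default RBAC objects.

```python
def wildcard_rules(role_list, include_defaults=False):
    """Find Role/ClusterRole rules that use "*" in apiGroups, resources or verbs."""
    hits = []
    for role in role_list.get("items", []):
        meta = role["metadata"]
        labels = meta.get("labels") or {}
        # Built-in roles (cluster-admin, system:*) carry this bootstrapping label.
        if not include_defaults and labels.get("kubernetes.io/bootstrapping") == "rbac-defaults":
            continue
        for rule in role.get("rules") or []:  # aggregated ClusterRoles may have none
            wild = [field for field in ("apiGroups", "resources", "verbs")
                    if "*" in rule.get(field, [])]
            if wild:
                hits.append((role["kind"], meta.get("namespace", "-"), meta["name"], wild))
    return hits
```

What remains are the custom Roles and ClusterRoles, which is where the review should focus.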

Custom created Roles and ClusterRoles should be the focus and they should be individually inspected to make sure that permissions were given according to their purpose.

4. Create Pods/Exec

As mentioned in chapter 1, this permission allows an account to connect inside a container (similar to SSH access). Connecting to containers should be allowed only for administrators, to prevent others from accessing every pod in the cluster.

5. Create/Update/Delete Deployment, Daemonsets, Statefulsets, Replicationcontrollers, Replicasets, Jobs and Cronjobs

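If we can create, update or delete these resources, we can manipulate pods indirectly: these controllers create and manage pods from the pod template in their specification, so creating or patching them is effectively equivalent to creating pods (with all the BadPods implications), while deleting them disrupts the workloads they manage.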

6. Get/Patch/Create Rolebindings

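Because this permission allows binding a role to a user or service account, an attacker could use it to grant themselves the admin ClusterRole. Note, however, that Kubernetes only allows creating or updating a binding if the requester already holds all the permissions in the referenced role or has the bind verb on that role, so this vector is most dangerous when combined with the bind permission.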

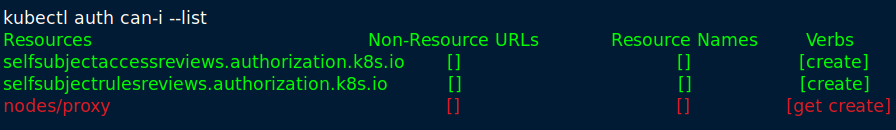

7. Get/Create Node/Proxy

Users or service accounts with node/proxy rights can essentially do anything the Kubelet API allows, including executing commands in every pod on the node. If we have this permission, the following command can be used to execute commands in a container through the Kubelet API (port 10250 on the node hosting the pod):

curl -k -H "Authorization: Bearer $TOKEN" -X POST "https://<NODE-IP>:10250/run/<namespace>/<pod>/<container>" -d "cmd=whoami"

More details about this privilege escalation vector can be found here: https://blog.aquasec.com/privilege-escalation-kubernetes-rbac

8. Impersonate user, group, service account

A user or service account could potentially be used to privilege escalate through impersonation if it has the ability to perform the “impersonate” verb on another kind of attribute such as a user, group or uid (https://kubernetes.io/docs/reference/access-authn-authz/authentication/#user-impersonation). If we can impersonate a privileged account like the cluster-admin, that would be game over.
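Impersonation works through HTTP headers sent alongside the caller's own credentials: the API server first checks that the caller is allowed the impersonate verb, then evaluates the request as the impersonated user and groups. This minimal sketch only builds those headers (it sends nothing); the user `system:admin` and group `system:masters` in the usage below are example values, the latter being the group that the default cluster-admin ClusterRoleBinding grants full access to.

```python
def impersonation_headers(token, user, groups=(), uid=None):
    """Build the HTTP headers for an API request made as `user`.

    The API server only honours them if the identity behind `token`
    is allowed the "impersonate" verb on that user/groups/uid.
    """
    headers = [("Authorization", f"Bearer {token}"), ("Impersonate-User", user)]
    # One header per group; repeated Impersonate-Group headers are allowed.
    headers += [("Impersonate-Group", group) for group in groups]
    if uid is not None:
        headers.append(("Impersonate-Uid", uid))
    return headers
```

For example, `impersonation_headers(token, "system:admin", ["system:masters"])` returns the header pairs to add to a request against the API server. kubectl does the same with its `--as` and `--as-group` flags, e.g. `kubectl get secrets -A --as=system:admin --as-group=system:masters`.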

I’ve only given a short description of these dangerous privileges and their impact, so if you want more details you can check out the original article by CyberArk: https://www.cyberark.com/resources/threat-research-blog/kubernetes-pentest-methodology-part-1

Inspecting all these roles, clusterroles, rolebindings and clusterrolebindings and identifying the groups and accounts that have the above permissions is not an easy task (to say the least) with the kubectl command line tool. It would be really hard to manually track all these settings and find all the high-value accounts, especially in a large and complex Kubernetes Cluster. That is why for this task I always rely on KubiScan and rbac-tool.

KubiScan is a CLI tool developed by CyberArk that offers an easier way of identifying risky Roles/ClusterRoles, RoleBindings/ClusterRoleBindings, Pods/Containers and high-value Users/Groups/Service Accounts. The following image shows the built-in options that can help automate multiple tasks:

The following images show the results generated by the “kubiscan --all” command, with the risk levels highlighted:

Rbac-tool also has multiple functionalities but the main command that I use is “rbac-tool who-can” in order to identify accounts that have the previously mentioned permissions that lead to privilege escalation (create pods, list secrets, etc.). Here are some examples of commands and their generated output:

```
rbac-tool who-can create pods
rbac-tool who-can list secrets
rbac-tool who-can create pods/exec
rbac-tool who-can bind clusterroles
rbac-tool who-can create rolebindings
```

Once we manage to identify an account that we control and has one of the mentioned dangerous permissions, we can easily escalate our privileges and try to extend our reach inside the Kubernetes Cluster.

Even if we don’t have access to any other user/service account, all the accounts/groups/roles should be reviewed in order to ensure that they are still used and not some legacy accounts, and that there are no overlapping role assignments that could cause misconfigurations. This requires a deep understanding of the K8s Cluster so it’s important to do it in close collaboration with the K8s Administrators.

4. Publicly Exposed Services and Ingresses

Services (such as ClusterIP, NodePort, LoadBalancer) and Ingresses are two methods of making application ports running inside containers accessible to other containers inside the K8s Cluster, to the internal company network or even publicly to the Internet.

During the Configuration Review you should have access to read resources such as Services and Ingresses, therefore, viewing all of them could be achieved easily by using the following commands:

```
# get all services and ingresses, from all namespaces
kubectl get services --all-namespaces
kubectl get ingresses --all-namespaces

# view details about a service or ingress
kubectl get service <SERVICENAME> -n <NAMESPACE> -o json
kubectl get ingress <INGRESSNAME> -n <NAMESPACE> -o json
```
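When there are many namespaces, it helps to filter the JSON output for the externally relevant service types discussed below. The sketch assumes the output of `kubectl get services -A -o json` and `kubectl get ingresses -A -o json`; it reports NodePort, LoadBalancer and externalIPs services with their ports and external addresses, ExternalName services with their target, and Ingresses with their host names. Whether an address is actually reachable from the Internet still has to be confirmed.

```python
def _lb_addresses(obj):
    """IPs/hostnames assigned by a cloud load balancer or ingress controller."""
    ingress = (obj.get("status", {}).get("loadBalancer") or {}).get("ingress") or []
    return [i.get("ip") or i.get("hostname") for i in ingress]


def exposed_services(svc_list):
    """Services reachable from outside the cluster, or pointing outside it."""
    rows = []
    for svc in svc_list.get("items", []):
        meta, spec = svc["metadata"], svc.get("spec", {})
        kind = spec.get("type", "ClusterIP")
        name = f"{meta['namespace']}/{meta['name']}"
        if kind in ("NodePort", "LoadBalancer") or spec.get("externalIPs"):
            # (service port, node port); nodePort is None for ClusterIP + externalIPs
            ports = [(p.get("port"), p.get("nodePort")) for p in spec.get("ports", [])]
            rows.append((kind, name, ports, _lb_addresses(svc) + spec.get("externalIPs", [])))
        elif kind == "ExternalName":
            rows.append((kind, name, [], [spec.get("externalName")]))
    return rows


def ingress_hosts(ing_list):
    """Each Ingress with its host rules ("*" when no host is set) and addresses."""
    rows = []
    for ing in ing_list.get("items", []):
        meta = ing["metadata"]
        hosts = [r.get("host", "*") for r in ing.get("spec", {}).get("rules", [])]
        rows.append((f"{meta['namespace']}/{meta['name']}", hosts, _lb_addresses(ing)))
    return rows
```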

ClusterIP: Exposes the Service on a cluster-internal IP, which makes it reachable only from within the cluster. This service type therefore presents a lower risk because it is not publicly accessible, although it can still be reached from any compromised pod.

NodePort: Unlike a ClusterIP, a NodePort service can be accessible from outside the cluster because it exposes a port in each Node, through which external access is possible. As a result, NodePort services should be reviewed to ensure that exposed container applications do not present any risk.

LoadBalancer: It is very similar to a NodePort, but uses a load balancer implementation from a cloud provider (e.g. Amazon Elastic Load Balancer) to distribute external traffic to the Pods. This should also be reviewed carefully to identify public exposure.

ExternalName: Maps the Service to an external DNS name (the cluster DNS returns a CNAME record), for example to let an application in one cluster reach a service hosted in a different cluster or outside Kubernetes. It does not expose anything itself, but reviewing ExternalName services reveals which services in other Kubernetes Clusters (or elsewhere) the cluster depends on and whether they are publicly accessible.

Ingress: Ingresses are used to route HTTP and HTTPS traffic from outside the cluster to specific web applications inside the cluster based on the host name or URL path (such as /app1, /app2). They are implemented by an Ingress Controller, typically a reverse proxy such as Nginx running in a pod inside the cluster, or a controller that provisions a cloud load balancer such as an AWS Application Load Balancer. Because ingresses expose web services outside the cluster, they can also be misconfigured and result in public exposure of sensitive information.

If any of the resources mentioned above are publicly available, we should take note and confirm with the administrators that external access is indeed the desired behavior (e.g. there is no reason for a portal like Skooner, ArgoCD or Prometheus to be publicly accessible).

Allocating public IP addresses to worker nodes or the control plane for external access should be avoided; only services/apps hosted inside pods should be publicly accessible, and only if required.

Moreover, all pods hosting services exposed outside the cluster should be strictly configured (security contexts, host namespaces, service accounts, network policies) to prevent privilege escalation or lateral movement in case of a breach. Any such pod configured as a privileged pod or running with a privileged service account should be considered a major risk.

In order to identify if services and ingresses are publicly exposed we also need to review the configured Network Policies. If Amazon EKS is used then you should also review Security Groups for Pods and Security Groups for the Cluster (more details about the EKS Cluster Security Groups: https://docs.aws.amazon.com/eks/latest/userguide/sec-group-reqs.html).

Network Policies and Security Groups for Pods are configured at the Namespace level and if they are missing then all traffic is allowed between all pods inside the cluster which is ideal for an attacker seeking to compromise as many services as possible.

```
# get network policies from all namespaces
kubectl get networkpolicies -A

# get security groups for pods (in case of Amazon EKS)
kubectl get securitygrouppolicies -A
```
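Beyond checking whether any NetworkPolicy exists, it is worth checking whether each namespace has a default-deny ingress policy (an empty `podSelector` with no ingress rules). The sketch below classifies namespaces from `kubectl get namespaces -o json` and `kubectl get networkpolicies -A -o json` accordingly. Keep in mind that NetworkPolicies are only enforced if the cluster's network plugin supports them (Calico, for example), and that this check does not cover Security Groups for Pods.

```python
def has_default_deny_ingress(policy):
    """True for the usual 'deny all ingress' pattern: empty podSelector, no ingress rules."""
    spec = policy.get("spec", {})
    return (spec.get("podSelector") == {}
            # policyTypes defaults to ["Ingress"] when it is not specified
            and "Ingress" in spec.get("policyTypes", ["Ingress"])
            and not spec.get("ingress"))


def policy_coverage(namespace_list, policy_list):
    """Map each namespace to a short description of its NetworkPolicy coverage."""
    by_ns = {}
    for p in policy_list.get("items", []):
        by_ns.setdefault(p["metadata"]["namespace"], []).append(p)
    report = {}
    for ns in namespace_list.get("items", []):
        name = ns["metadata"]["name"]
        policies = by_ns.get(name, [])
        if not policies:
            report[name] = "no NetworkPolicy: all traffic allowed"
        elif not any(has_default_deny_ingress(p) for p in policies):
            report[name] = "policies present, but no default-deny ingress"
        else:
            report[name] = "default-deny ingress"
    return report
```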

The following images suggest that no network restrictions exist and all traffic is allowed, a situation that is not ideal.

5. Vulnerability/Service Scanning

Ideally, in order to ensure that the services hosted by Kubernetes are secure, conducting a Penetration Test on all the services and applications would be the solution to achieve this objective.

But conducting a proper penetration test can require a lot of time and resources, especially if the Kubernetes Cluster hosts many custom-developed services/apps. That is why a penetration test is outside the scope of a Kubernetes Configuration Review and should be planned separately.

However, during this step it is recommended to go over the services commonly found in a Kubernetes Cluster to fingerprint them and scan for well-known vulnerabilities, essentially looking for low-hanging fruit that could be easily exploited.

Common Kubernetes ports can be seen in the following picture:

On top of these common ports, we can identify other open ports with the following kubectl get services command, which lists every NodePort exposed by a service (namespace/service name - port name: node port):

kubectl get svc --all-namespaces -o go-template='{{range .items}}{{ $save := . }}{{range.spec.ports}}{{if .nodePort}}{{$save.metadata.namespace}}{{"/"}}{{$save.metadata.name}}{{" - "}}{{.name}}{{": "}}{{.nodePort}}{{"\n"}}{{end}}{{end}}{{end}}'

Going through all ports manually and looking for issues is the preferred method, but this could take a long time in a large and complex cluster.

Alternatively, we can opt for the automated method and use a vulnerability scanner. A dedicated Kubernetes vulnerability scanner can offer better results than a generic one, which is why, in my opinion, the best tool for this job is Kube-Hunter, and I strongly recommend using it.

Kube-Hunter can be run from inside a pod or remotely from your attacker machine. It can run under a specific account and, based on that account's permissions, it will try to enumerate all the Kubernetes components and resources to identify potential issues. The long list of Active and Passive checks it performs can be displayed with the --list option.

By default, the tool runs in Passive mode, because active hunting can perform state-changing operations on the cluster, which could be harmful (e.g. it will attempt to add a new key to the etcd DB). To run in Active mode, use the --active option.

The tool is straightforward to use and the documentation explains everything really well, but here is a summary of the commands:

```
# view the Active and Passive mode actions
kube-hunter --list
kube-hunter --list --active

# scan a specific worker/master node
kube-hunter --remote <node-ip>

# scan all IP addresses in a network segment
kube-hunter --cidr 192.168.0.0/24

# use the kubeconfig file to connect to the Kubernetes API and detect nodes
kube-hunter --k8s-auto-discover-nodes

# run the tool in active mode
kube-hunter --remote <node-ip> --active

# manually specify the service account token
kube-hunter --active --service-account-token <token>
```

6. Add-ons

The final step in the Kubernetes Configuration Review process involves reviewing Add-ons which are an integral part of the Kubernetes Cluster and can offer adjacent services such as performance metrics (Prometheus, New Relic, cAdvisor), management portal (Skooner), logging (Fluentbit), network policy enforcement (Calico), DNS server (CoreDNS), continuous delivery (ArgoCD). In some situations, the pods hosting these add-ons can outnumber the pods running the main services and applications that are critical for the business.

When these services are configured to run inside the Kubernetes Cluster it is possible that privileged accounts are created for the installation process without being removed afterwards, or that pods are created with dangerous permissions.
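A quick way to spot such leftovers is to list who is bound to the most powerful built-in ClusterRoles. The sketch below takes the output of `kubectl get clusterrolebindings,rolebindings -A -o json`; the set of roles it treats as powerful (cluster-admin, admin, edit) is my own starting point and can be extended with custom roles. Bindings whose names or service accounts point to installers or add-ons are good candidates to question with the administrators.

```python
# Built-in ClusterRoles worth reviewing first (an assumption; extend as needed).
POWERFUL_ROLES = {"cluster-admin", "admin", "edit"}


def powerful_bindings(binding_list, powerful=POWERFUL_ROLES):
    """List (role, scope, subject kind, subject, binding) for powerful ClusterRoles."""
    rows = []
    for b in binding_list.get("items", []):
        ref = b["roleRef"]
        if ref["kind"] != "ClusterRole" or ref["name"] not in powerful:
            continue
        scope = b["metadata"].get("namespace", "cluster-wide")
        for s in b.get("subjects") or []:
            subject = f"{s['namespace']}/{s['name']}" if s.get("namespace") else s["name"]
            rows.append((ref["name"], scope, s["kind"], subject, b["metadata"]["name"]))
    return sorted(rows)
```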

One commonly found configuration that could lead to potential issues involves configuring the pods that host a specific Add-on as privileged pods, even though it is not required or it is required for a specific functionality that is not used. When configuring privileged pods, we must first understand why this is required before approving this privileged pod configuration.

This is why the documentation associated with each add-on should be reviewed, to ensure that its pods are created with the proper Security Context, Host Namespaces, service account permissions and network policies (e.g. NewRelic Add-on documentation: https://github.com/newrelic/nri-kubernetes/tree/main/charts/newrelic-infrastructure#privileged-toggle).

Also, many of these Add-ons have services or GUI applications that should only be accessed from inside the company or cluster, so there is no reason for public access, as mentioned in chapter 4.

Reviewing add-ons requires a deep understanding of each specific add-on and could require significant time depending on the complexity of the cluster configuration, that is why I usually leave this task at the end after finishing all the other checks.

Wrap-Up

In the end I would like to leave you with some cheat sheets that contain many useful kubectl commands for enumerating all the components and resources in Kubernetes:
