2022-7-21 03:43:0 Author: www.boredhackerblog.info

Introduction:

Certstream is a great service that streams updates from Certificate Transparency logs, which record certificates as they are issued by various certificate authorities.

Certstream data has been used in the past to detect malicious and phishing sites. There are several links in the resources section about certstream usage.

Littleshot is a tool similar to urlscan and urlquery (RIP) that I wrote a while ago because I wanted to be able to screenshot a ton of sites and collect metadata about them. (It's here: https://github.com/BoredHackerBlog/littleshot) I realized that having yara scan the HTML body would be cool, so I added that feature later on. There is also a branch that uses tor for connections. It's not the most optimized project and the error handling isn't the best, but it's good enough for my purposes.

You can also put newly registered domains through littleshot, but I've decided not to do that for now.

Goals:

- Take certstream domains and scan them with littleshot

- Utilize yara rules to look for interesting pages

- Send some metadata to Humio (littleshot by default doesn't do this) for either alerting, dashboarding, or just searching.

- Ensure that there is caching of domains from certstream to avoid rescanning domains

Tech stack:

I'm hosting everything on vultr. (Here's a ref link if you'd like to try vultr for your projects: https://www.vultr.com/?ref=8969054-8H)

- Littleshot

-- caddy - reverse proxy

-- flask - webapp

-- redis - job queue

-- python-rq - job distribution/workers

-- mongodb - store json documents/metadata

-- minio - store screenshots

- Certstream + python - I'm getting certstream domains and doing filtering and cache lookup with python

- Memcached - Caching. I wanna avoid scanning the same domain twice for a while so I'm using memcached

Setup:

The diagram below shows the setup I have going.

I get data from certstream and I'm using some filtering to ensure that I don't scan certain domains.
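A minimal sketch of the extraction and keyword filtering step, assuming the message layout used by the certstream feed (`message_type` plus `data.leaf_cert.all_domains`). The keyword list and function names here are hypothetical; the actual filter is in the certstream_to_littleshot script linked further down.

```python
# Hypothetical keyword list for illustration; tune it to your own noise.
SKIP_KEYWORDS = ("cloudflare", "amazonaws", "azure")


def extract_domains(message):
    """Return de-duplicated, lowercased domains from a certstream-style dict."""
    # Heartbeats and other message types carry no certificate data.
    if message.get("message_type") != "certificate_update":
        return []
    names = message.get("data", {}).get("leaf_cert", {}).get("all_domains", [])
    domains = []
    for name in names:
        domain = name.lower()
        # Wildcard entries such as *.example.com cannot be visited directly.
        if domain.startswith("*."):
            domain = domain[2:]
        if domain and domain not in domains:
            domains.append(domain)
    return domains


def is_filtered(domain, keywords=SKIP_KEYWORDS):
    """Return True if the domain contains any keyword we never want to scan."""
    return any(keyword in domain for keyword in keywords)
```

Plain substring matching is crude (it would also skip `notcloudflare.example`), but it is cheap enough to run on every certificate in the stream.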

Once the keyword-based filtering is done, I check the domain against memcached to ensure that it hasn't been scanned in the past 48 hours.

If the domain wasn't scanned in the past 48 hours, I queue it to be scanned with littleshot.
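The 48-hour check can be sketched with memcached's add-if-absent operation, which stores a key only when it is not already present, so the lookup and the marking happen in one call. This is an illustration, not necessarily how the linked script does it: `should_scan`, `cache_key`, and `InMemoryCache` are hypothetical names, and `InMemoryCache` is only a stand-in so the example runs without a memcached server.

```python
import hashlib
import time

CACHE_TTL_SECONDS = 48 * 60 * 60  # 48 hours


def cache_key(domain):
    # Hash the domain so the key is short and has no unusual characters.
    return "scanned:" + hashlib.sha256(domain.lower().encode("utf-8")).hexdigest()


def should_scan(cache, domain, ttl=CACHE_TTL_SECONDS):
    """Return True only the first time a domain is seen within the TTL window."""
    # `add` is expected to return a truthy value only if the key was stored.
    return bool(cache.add(cache_key(domain), b"1", expire=ttl, noreply=False))


class InMemoryCache:
    """Stand-in that mimics add-if-absent with expiry, for local testing."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._expires_at = {}

    def add(self, key, value, expire=0, noreply=False):
        now = self._clock()
        expiry = self._expires_at.get(key)
        if expiry is not None and expiry > now:
            return False  # still cached, so the caller should skip the scan
        # expire=0 means "never expire", matching memcached's convention.
        self._expires_at[key] = now + expire if expire else float("inf")
        return True
```

A real client should be usable in place of `InMemoryCache` as long as its `add` takes `expire` and `noreply` arguments; pymemcache's client does, to the best of my knowledge. Passing `noreply=False` matters there, since otherwise the call does not wait for the server's answer and cannot report whether the key already existed.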

When a littleshot worker does the scan, it sends the task ID, domain, title, and yara matches to Humio (in addition to doing the normal littleshot things).
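As a rough sketch of what sending those four fields could look like, the code below builds a payload for Humio's structured ingest endpoint and posts it with a bearer ingest token. The host, token, tag, and function names are placeholders made up for this example; the worker code linked below is the actual implementation.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Placeholder values; substitute your own Humio host and ingest token.
HUMIO_URL = "https://humio.example.com/api/v1/ingest/humio-structured"
HUMIO_INGEST_TOKEN = "REPLACE_ME"


def build_event(task_id, domain, title, yara_matches, now=None):
    """Build a structured-ingest payload holding a single scan event."""
    now = now or datetime.now(timezone.utc)
    return [{
        "tags": {"source": "littleshot"},  # hypothetical tag
        "events": [{
            "timestamp": now.isoformat(),
            "attributes": {
                "taskid": task_id,
                "domain": domain,
                "title": title,
                # Joined into one string so it stays a single searchable field.
                "yara_matches": ",".join(yara_matches),
            },
        }],
    }]


def send_to_humio(payload, url=HUMIO_URL, token=HUMIO_INGEST_TOKEN, timeout=10):
    """POST the payload to Humio and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + token,
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

In a worker, `send_to_humio(build_event(...))` would probably be wrapped in a try/except so that a Humio outage does not fail the scan itself.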

Certstream_to_littleshot script - https://github.com/BoredHackerBlog/certstream_to_littleshot/blob/main/certstream_to_littleshot.py

Yara rules (these aren't the best. you should probably write your own based on your needs) - https://github.com/BoredHackerBlog/certstream_to_littleshot/blob/main/rules.yar

Worker code to support sending data to Humio - https://github.com/BoredHackerBlog/certstream_to_littleshot/blob/main/worker.py

Interesting stuff I came across:

- Lots of wordpress and nextcloud/owncloud sites and general stuff people self-host

- Carding forum?

No phishing sites or C2, at least not with my yara rules.

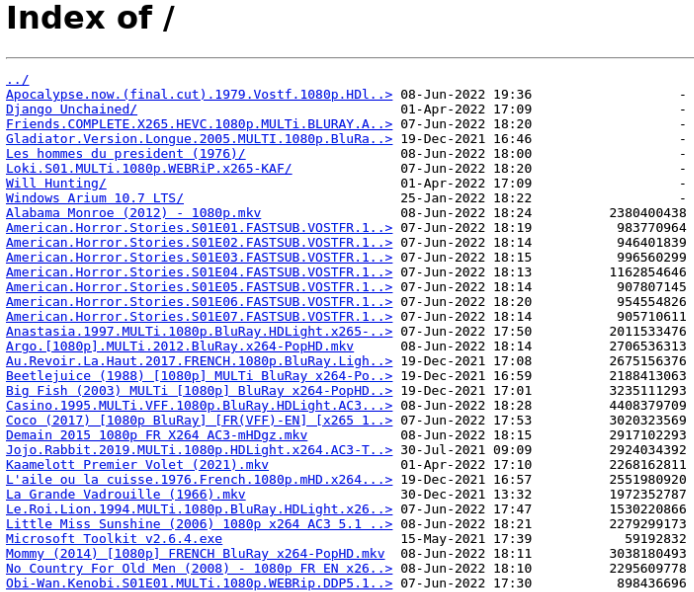

Here are the yara hits in Humio (ignore abc and xyz; that was me testing the Humio API):

What I would do differently with more time and resources (with this project and with littleshot):

- Better error handling - Current error handling is meh

- Get rid of mongodb and replace it with opensearch or graylog maybe? - Opensearch and graylog are great when it comes to searching.

- Potentially having an indicator list built into littleshot?

-- Currently tagging is based on yara rules but there are many ways to detect maliciousness, such as hashes or URLs.

- Enrichment of data like urlscan does

- Better webui - the webui is pretty shit. idk enough html/css/javascript

- Better logging. There is logging of results but no logging of anything else (queries, crashes, etc...)

- Redirect detection & tagging. Some domains do redirect to legitimate login pages.
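The redirect tagging idea from the list above could start as simply as comparing the host that was requested with the host the browser ended up on. This is only a sketch of one possible approach, not something littleshot does today; the function and tag names are hypothetical, and a proper version would compare registered domains using the public suffix list.

```python
from urllib.parse import urlsplit


def normalized_host(url):
    """Lowercase the hostname and drop a leading 'www.' for comparison."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def redirect_tags(requested_url, final_url):
    """Return tags describing whether the scan ended on a different host."""
    start = normalized_host(requested_url)
    end = normalized_host(final_url)
    if not start or not end or start == end:
        return []
    # One host is a subdomain of the other, e.g. example.com -> app.example.com.
    if end.endswith("." + start) or start.endswith("." + end):
        return ["redirect:subdomain"]
    return ["redirect:external"]
```

A page tagged `redirect:external` whose final host is a well-known login page could then be excluded from phishing-style yara hits.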

Resources & similar projects:

(if the blog post formatting looks odd, it's because Blogger editor interface hates me)
