2021-5-20 06:4:11 Author: raffy.ch

Before diving into cyber security and how the industry is using AI at this point, let’s define the term AI first. Artificial Intelligence (AI), as the term is used today, is the overarching concept covering machine learning (supervised, including Deep Learning, and unsupervised), as well as other algorithmic approaches that are more than just simple statistics. These other algorithms include the fields of natural language processing (NLP), natural language understanding (NLU), reinforcement learning, and knowledge representation. These are the most relevant approaches in cyber security.

Given this definition, how evolved are cyber security products when it comes to using AI and ML?

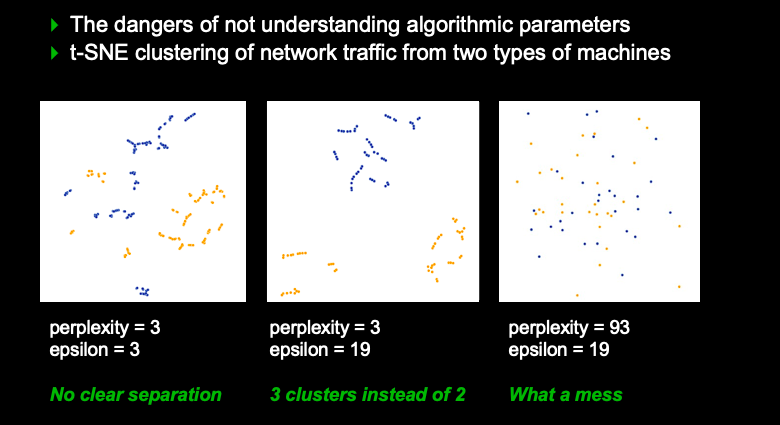

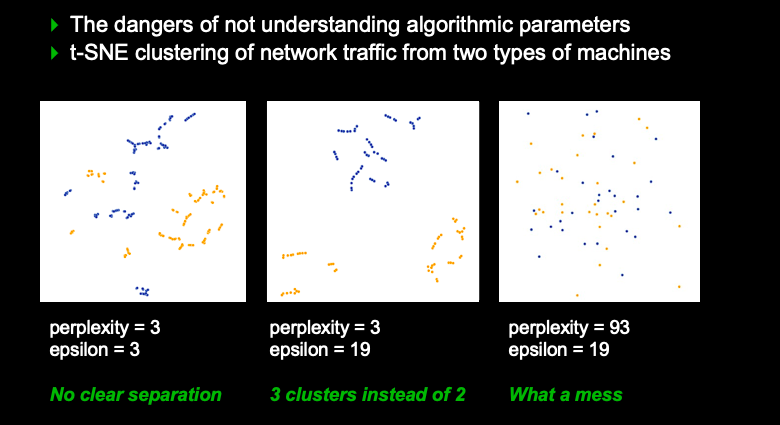

I do see more and more cyber security companies leveraging ML and AI in some way. The question is to what degree. I have written before about the dangers of algorithms. It’s gotten too easy for any software engineer to play data scientist. It’s as easy as downloading a library and calling the .start() function. The challenge is that the engineer often has no idea what just happened inside the algorithm or how to use it correctly. Does the algorithm work with non-normally distributed data? Does the data need to be normalized before it is fed into the algorithm? How should the results be interpreted? I gave a talk at BlackHat where I showed what happens when we don’t know what an algorithm is doing.
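
To make the normalization question concrete, here is a minimal sketch of how unscaled features can quietly change what a distance-based method reports. The host names, the two features, and every number are made up for illustration; `nearest` and `standardize` are hypothetical helpers, not part of any library.

```python
import math
from statistics import mean, pstdev

# Hypothetical per-host features: (bytes_sent, failed_logins).
hosts = {
    "web-01": (50_000.0, 0.0),
    "web-02": (52_000.0, 9.0),
    "db-01": (90_000.0, 1.0),
}
# A new observation with many failed logins.
query = (50_500.0, 8.0)


def nearest(point, candidates):
    """Return the name of the candidate closest to point (Euclidean distance)."""
    return min(candidates, key=lambda name: math.dist(point, candidates[name]))


def standardize(candidates, point):
    """Z-score every feature using the mean and std dev of the candidates."""
    columns = list(zip(*candidates.values()))
    mus = [mean(col) for col in columns]
    sigmas = [pstdev(col) or 1.0 for col in columns]  # guard against zero spread

    def scale(vec):
        return tuple((x - m) / s for x, m, s in zip(vec, mus, sigmas))

    return {name: scale(vec) for name, vec in candidates.items()}, scale(point)


# Raw features: bytes_sent dominates the distance because of its magnitude.
print("raw:", nearest(query, hosts))

# Standardized features: both columns contribute on a comparable scale.
scaled_hosts, scaled_query = standardize(hosts, query)
print("standardized:", nearest(scaled_query, scaled_hosts))
```

With these made-up numbers, the raw features match the observation to `web-01` (no failed logins), while the standardized features match it to `web-02` (many failed logins). Neither answer is correct in general; the point is that the engineer has to decide this deliberately rather than inherit it from a library default.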

So, the mere fact that a company is using AI or ML in their product is not a good indicator of the product actually doing something smart. On the contrary, most companies I have looked at that claimed to use AI for some core capability are doing it ‘wrong’ in some way, shape or form. To be fair, there are some companies that stick to the right principles, hire actual data scientists, apply algorithms correctly, and interpret the data correctly.

Generally, I see the correct application of AI in the supervised machine learning camp where there is a lot of labeled data available: malware detection (telling benign binaries from malware), malware classification (attributing malware to some malware family), document and Web site classification, document analysis, and natural language understanding for phishing and BEC detection. There is some early but promising work being done on graph (or social network) analytics for communication analysis. But you need a lot of data and contextual information that is not easy to get your hands on. Then, there are a couple of companies that are using belief networks to model expert knowledge, for example, for event triage or insider threat detection. But unfortunately, these companies are few and far between.

That leads us into the next question: What are the top use-cases for AI in security?

I am personally excited about a couple of areas that I think show real promise for advancing cyber security:

- Using NLP and NLU to understand people’s email habits and then identify malicious activity (BEC, phishing, etc.). Initially we tried to run sentiment analysis on messaging data, but we quickly realized we should leave that to analyzing tweets for brand sentiment and avoid making judgements about human (or phishing) behavior. It’s a bit too early for that. But there are some successes in topic modeling, token classification of things like account numbers, and even looking at the use of language.

- Leveraging graph analytics to map out data movement and data lineage to learn when exfiltration or malicious data modifications are occurring. This topic is not yet well researched, and I am not aware of any company or product that does this well. It’s a hard problem on many layers, from data collection to deduplication and interpretation. But that’s also what makes this research interesting.
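
As a rough illustration of the graph idea in the second bullet, data movement can be modeled as a directed graph and searched for paths from a sensitive source to a location outside the organization. This is only a toy sketch: the event list, the node names, and the `lineage_paths` helper are all hypothetical, and it sidesteps the hard parts named above (collection, deduplication, interpretation).

```python
from collections import deque

# Hypothetical copy/move events: (source, destination).
events = [
    ("hr-db", "analyst-laptop"),
    ("analyst-laptop", "shared-drive"),
    ("shared-drive", "personal-cloud"),
    ("build-server", "artifact-store"),
]
# Hypothetical set of locations considered outside the organization.
external = {"personal-cloud"}


def lineage_paths(events, origin, sinks):
    """Breadth-first search for every path from origin to any node in sinks."""
    graph = {}
    for src, dst in events:
        graph.setdefault(src, []).append(dst)

    paths = []
    queue = deque([[origin]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in sinks:
            paths.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt not in path:  # do not revisit nodes, so cycles terminate
                queue.append(path + [nxt])
    return paths


for path in lineage_paths(events, "hr-db", external):
    print(" -> ".join(path))
```

In this made-up data set, the HR database has one path to an external location, while the build server has none.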

Given the above, it doesn’t look like we have made a lot of progress in AI for security. Why is that? I’d attribute it to a few things:

- Access to training data. Any hypothesis we come up with, we have to test and validate. Without data, that’s hard to do. We need complex data sets that show user interactions across applications, their data, and cloud apps, along with contextual information about the users and their data. This kind of data is hard to get, especially with privacy concerns and regulations like GDPR putting more scrutiny on processes around research work.

- A lack of engineers who understand both data science and security. We need security experts with a lot of experience to work on these problems. When I say security experts, I mean people who have a deep understanding of (and hands-on experience with) operating systems and applications, networking, and cloud infrastructures. It’s unlikely that you will find such experts who also have data science chops. Pairing them with data scientists helps, but a lot gets lost in their communication.

- Research dollars. There are few companies that are doing real security research. Take a larger security firm. They might do malware research, but how many of them have actual data science teams that are researching novel approaches? Microsoft has a few great researchers working on relevant problems. Bank of America has an effort to fund academia to work on pressing problems for them. But that work generally doesn’t see the light of day within your off-the-shelf security products. Generally, security vendors don’t invest in research that is not directly related to their products. And if they do, they want to see fairly quick turnarounds. That’s where startups can fill the gaps. Their challenge is to make their approaches scalable. That means not just scaling to a lot of data, but also staying relevant across a variety of customer environments with dozens of diverging processes, applications, usage patterns, etc. This then comes full circle with the data problem. You need data from a variety of different environments to establish hypotheses and test your approaches.

Is there anything that the security buyer should be doing differently to incentivize security vendors to do better in AI?

I don’t think the security buyer is to blame for anything. The buyer shouldn’t have to know anything about how security products work. The products should do what they claim they do and do that well. I think that’s one of the mortal sins of the security industry: building products that are too complex. As Ron Rivest said on a panel the other day: “Complexity is the enemy of security”.

Also have a look at the VentureBeat article featuring some quotes from me.
