2010-10-7 22:23:0 Author: reusablesec.blogspot.com(查看原文) 阅读量:2 收藏

I'm in Chicago at the ACM CCS conference, and the paper I presented there: "Testing Metrics for Password Creation Policies by Attacking Large Sets of Revealed Passwords", is now available online.

Since I had the paper and presentation approved through my company's public release office I was given permission to blog about this subject while the larger issue of my blog is still going through the proper channels. Because of that I'm going to limit my next couple of posts to this subject rather than talking about the CCS conference as a whole, but let me quickly point you to the amazing paper "The Security of Modern Password Expiration: An Algorithmic Framework and Empirical Analysis", written by Yinqian Zhang, Fabian Monrose and Michael Reiter. In short, they managed to obtain a great dataset, their techniques were innovative and sound, and there's some really good analysis on how effective password expiration policies really are, (spoiler: forcing users to change their password every six months isn't very useful).

I'd like to first start by acknowledging the other authors who contributed to the "Testing Password Creation Metrics..." paper.

- Dr. Sudhir Aggarwal - Florida State University: My major professor, who spent I don't know how many hours helping walk me through the subtle intricacies of information entropy.

- Michael Collins - Redjack LLC: Another data driven researcher, and much cooler than me since he uses GNUPlot instead of Excel ;)

- Henry Stern - Cisco IronPort: He was the driving force behind getting this paper written. It was over lunch at the Microsoft Digital Crime Consortium, (it's a conference to combat cybercrime, and not a group of people from Microsoft looking to commit digital crime like the name implies...), that the framework for this paper was laid out.

As for the contents of the paper, I'm planning on breaking the discussion about it down into several different posts, with this post here being more of an overview.

When writing this paper, we really had two main goals:

- How does the NIST model of password entropy as defined in SP800-63 hold up when exposed to real password datasets and realistic attacks?

- How much security is actually provided by typical password creation policies, (aka minimum length, character requirements, blacklists)?

Based on our results, we then looked at the direction we would like password creation policies move to in the future. This ended up with us suggesting how to turn our probabilistic password cracker around, and instead use it as part of a password creation strategy that allows people to create passwords however they like, as long as the probability of the resulting password remains low.

Of all that, I feel our analysis of the NIST password entropy model is actually the most important part of the paper. I know it sounds like an esoteric inside baseball subject, but the use of NIST's password entropy model has a widespread impact on all of us. This is because it provides the theoretical underpinning for most password creation policies out there. Don't take my word for how widespread the use of it is. Check out the Wikipedia article on password strength, (or better yet, read the discussion page) for yourself.

Our findings were that the NIST model of password entropy does not match up with real world password usage or password cracking attacks. If that wasn't controversial enough, we then made the even more substantial claim that the current use of Shannon Entropy to model the security provided by human generated passwords at best provides no actionable information to the defender. At worse, it leads to a defender having an overly optimistic view of the security provided by their password creation policies while at the same time resulting in overly burdensome requirements for the end users.

Getting in front of a room full of crypto experts and telling them that Shannon Entropy wasn't useful to evaluate the security of password creation policies and "We REALLY need to STOP using it", was a bit of a gut clenching moment. That's because the idea of information entropy is fairly central to the evaluation of most cryptographic algorithms. I would have never done it except for the fact that we have a lot of data backing this assertion up. The reason we are making the broader point is because it's tempting to dismiss the flaws in the NIST model by saying that NIST just estimated the entropy of human generated passwords wrong. For example, if you juggle the constants around or perhaps look at word entropy vs character entropy, things will work out. Our point though is not that you can't come up with a fairly accurate Shannon entropy model of human generated passwords. You most assuredly can. It's just that it's not apparent how such a model can provide "actionable information". In addition, the way we currently use Shannon Entropy in evaluating password security policies is fundamentally flawed.

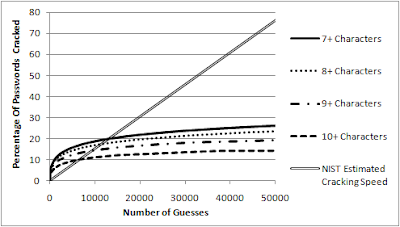

This subject really does require another blog post, but before I head back to Boston I wanted to leave you with one of the graphs from our paper that demonstrates what I'm talking about:

The above graph shows cracking sessions run against passwords that met different minimum length password creation requirements, (aka must be at least seven characters long). The NIST estimated cracking speed is based on the calculated NIST entropy of passwords created under a seven character minimum password creation policy. You may notice that it overestimates the security of the creation policy over shorter cracking sessions, but at the same time doesn't model longer cracking sessions either. This is what I keep on saying that it doesn't provide "actionable intelligence", (third time and counting). When we say "password entropy" what we really want to know is the Guessing Entropy of a policy. Unfortunately, as a community, we keep using Shannon entropy instead. Guessing entropy and Shannon entropy are two very different concepts, but unfortunately there doesn't exist a very good way of calculating the guessing entropy, while calculating the Shannon entropy of a set of text is well documented. This is part of the reason why people keep trying to use Shannon entropy instead.

So I guess I should end this post by saying, if any of this sounds interesting please read the paper ;)

如有侵权请联系:admin#unsafe.sh