Fuzzing input

A First Look at Grammars

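Let A = 10 | 9 | 0 | 1. Naming alternatives with symbols makes recursion possible: if B = A | AB, the range of strings that the symbol B can stand for multiplies. Following this idea, we can write a grammar for arithmetic expressions:

<start> ::= <expr>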

<expr> ::= <term> + <expr> | <term> - <expr> | <term>

<term> ::= <term> * <factor> | <term> / <factor> | <factor>

<factor> ::= +<factor> | -<factor> | (<expr>) | <integer> | <integer>.<integer>

<integer> ::= <digit><integer> | <digit>

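<digit> ::= 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

Expanding the symbols inside <start> one by one, and randomizing the choice at each step, eventually yields a large number of valid arithmetic expressions. Like most syntaxes, a Grammar should have its own type so that its validity can be checked. Taking Python as an example, the grammar above can be defined as follows:

from typing import Any, Dict, List, Tuple, Union

Option = Dict[str, Any]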

Expansion = Union[str, Tuple[str, Option]]
Grammar = Dict[str, List[Expansion]]

EXPR_GRAMMAR: Grammar = {
    "<start>":
        ["<expr>"],

    "<expr>":
        ["<term> + <expr>", "<term> - <expr>", "<term>"],

    "<term>":
        ["<factor> * <term>", "<factor> / <term>", "<factor>"],

    "<factor>":
        ["+<factor>",
         "-<factor>",
         "(<expr>)",
         "<integer>.<integer>",
         "<integer>"],

    "<integer>":
        ["<digit><integer>", "<digit>"],

    "<digit>":
        ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9"]
}

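Indexing the dictionary, as in EXPR_GRAMMAR["<digit>"], gives access to the individual parts of the current grammar so that they can be inspected and modified.

Sample Grammar Fuzz

The left and right sides of a ::= rule are themselves a mapping, so repeatedly applying string replacement is the most direct choice. Example code: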

import random

START_SYMBOL = "<start>"

# A simple grammar fuzzer
def simple_grammar_fuzzer(grammar: Grammar,
                          start_symbol: str = START_SYMBOL,
                          max_nonterminals: int = 10,
                          max_expansion_trials: int = 100,
                          log: bool = False) -> str:
    """Produce a string from `grammar`.
       `start_symbol`: use a start symbol other than `<start>` (default).
       `max_nonterminals`: the maximum number of nonterminals
         still left for expansion
       `max_expansion_trials`: maximum # of attempts to produce a string
       `log`: print expansion progress if True"""

    term = start_symbol
    expansion_trials = 0

    # nonterminals() returns every item wrapped in <> found in the string;
    # for nested brackets such as <dsad<abc>> it returns only <abc>
    while len(nonterminals(term)) > 0:
        symbol_to_expand = random.choice(nonterminals(term))
        expansions = grammar[symbol_to_expand]
        expansion = random.choice(expansions)
        # In later chapters, we allow expansions to be tuples,
        # with the expansion being the first element
        if isinstance(expansion, tuple):
            expansion = expansion[0]

        # Replace one occurrence of the chosen symbol
        new_term = term.replace(symbol_to_expand, expansion, 1)

        # Accept the step only if the number of nonterminals still
        # awaiting expansion stays below max_nonterminals
        if len(nonterminals(new_term)) < max_nonterminals:
            term = new_term
            if log:
                print("%-40s" % (symbol_to_expand + " -> " + expansion), term)
            expansion_trials = 0
        else:
            expansion_trials += 1
            # The number of consecutive rejected expansions is limited too
            if expansion_trials >= max_expansion_trials:
                raise ExpansionError("Cannot expand " + repr(term))

    return term
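
The fuzzer above calls nonterminals() and raises ExpansionError, neither of which is shown in this article. The following is a minimal sketch of what they could look like, assuming a regular-expression based lookup; the pattern and the demo strings are assumptions made for illustration.

```python
import re
from typing import List, Tuple, Union

# Assumed pattern: "<", then any run of characters other than
# "<", ">" or space, then ">".
RE_NONTERMINAL = re.compile(r'(<[^<> ]*>)')


class ExpansionError(Exception):
    """Raised when a term cannot be expanded within the trial limit."""


def nonterminals(expansion: Union[str, Tuple[str, dict]]) -> List[str]:
    """Return all nonterminal symbols found in `expansion`."""
    if isinstance(expansion, tuple):  # (string, options) form
        expansion = expansion[0]
    return RE_NONTERMINAL.findall(expansion)


print(nonterminals("<term> + <expr>"))
print(nonterminals("<dsad<abc>>"))  # only the innermost <abc> matches
```

With a pattern like this, nested brackets yield only the innermost symbol, which is the behavior the comment in the fuzzer describes.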

For example, generating URLs:

URL_GRAMMAR: Grammar = {

"<start>":

["<url>"],

"<url>":

["<scheme>://<authority><path><query>"],

"<scheme>":

["http", "https", "ftp", "ftps"],

"<authority>":

["<host>", "<host>:<port>", "<userinfo>@<host>", "<userinfo>@<host>:<port>"],

"<host>": # In most cases a single fixed host can simply be specified here

["cispa.saarland", "www.google.com", "fuzzingbook.com"],

"<port>":

["80", "8080", "<nat>"],

"<nat>":

["<digit>", "<digit><digit>"],

"<digit>":

["0", "1", "2", "3", "4", "5", "6", "7", "8", "9"],

"<userinfo>": # Just one

["user:password"],

"<path>": # Just a few

["", "/", "/<id>"],

"<id>": # Just a few

["abc", "def", "x<digit><digit>"],

"<query>":

["", "?<params>"],

"<params>":

["<param>", "<param>&<params>"],

"<param>": # Just a few

["<id>=<id>", "<id>=<nat>"],

}

{

"<A>": [["<START_LINE>", "\r\n", "<HEADERS>", "<BODY>", "\r\n\r\n"]],

"<START_LINE>": [["<METHOD>", " ", "<URI>", " ", "<VERSION>"]],

"<METHOD>": [["GET"], ["HEAD"], ["POST"], ["PUT"], ["DELETE"], ["CONNECT"], ["OPTIONS"], ["TRACE"], ["PATCH"], ["ACL"], ["BASELINE-CONTROL"], ["BIND"], ["CHECKIN"], ["CHECKOUT"], ["COPY"], ["LABEL"], ["LINK"], ["LOCK"], ["MERGE"], ["MKACTIVITY"], ["MKCALENDAR"], ["MKCOL"], ["MKREDIRECTREF"], ["MKWORKSPACE"], ["MOVE"], ["ORDERPATCH"], ["PRI"], ["PROPFIND"], ["PROPPATCH"], ["REBIND"], ["REPORT"], ["SEARCH"], ["UNBIND"], ["UNCHECKOUT"], ["UNLINK"], ["UNLOCK"], ["UPDATE"], ["UPDATEREDIRECTREF"], ["VERSION-CONTROL"]],

"<URI>": [["<SCHEME>" , ":", "<HIER>", "<QUERY>", "<FRAGMENT>"]],

"<SCHEME>": [["http"], ["https"], ["shttp"], ["dav"], ["about"], ["attachment"], ["cid"], ["data"], ["file"], ["ftp"], ["ssh"], ["sip"]],

"<HIER>": [["//", "<AUTHORITY>", "<PATH>"]],

"<AUTHORITY>": [["<USERINFO>", "<HOST>"]], "<PATH>": [["/", "<DIR>"]],

"<DIR>": [[], ["<CHAR>", "/", "<DIR>"]],

"<USERINFO>": [[], ["<CHAR>", ":", "<CHAR>", "@"]],

"<HOST>": [["127.0.0.1:8080"]],

"<QUERY>": [[], ["?", "<CHAR>" , "=", "<CHAR>"]],

"<FRAGMENT>": [[], ["#", "<CHAR>"]],

"<VERSION>": [["HTTP/0.9"], ["HTTP/1.0"], ["HTTP/1.1"], ["HTTP/2.0"], ["HTTP/3.0"]],

"<HEADERS>": [[], ["<HEADER>", "\r\n", "<HEADERS>"]],

"<HEADER>": [["<HEADER_FIELD>", ": ", "<ANY>"]],

"<HEADER_FIELD>": [["A-IM"], ["Accept"], ["Accept-Charset"], ["Accept-Datetime"], ["Accept-Encoding"], ["Accept-Language"], ["Access-Control-Request-Method"], ["Access-Control-Request-Headers"], ["Authorization"], ["Cache-Control"], ["Connection"], ["Content-Encoding"], ["Content-Length"], ["Content-MD5"], ["Content-Type"], ["Cookie"], ["Date"], ["Expect"], ["Forwarded"], ["From"], ["Host"], ["HTTP2-Settings"], ["If-Match"], ["If-Modified-Since"], ["If-None-Match"], ["If-Range"], ["If-Unmodified-Since"], ["Max-Forwards"], ["Origin"], ["Pragma"], ["Proxy-Authorization"], ["Range"], ["Referer"], ["TE"], ["Trailer"], ["Transfer-Encoding"], ["User-Agent"], ["Upgrade"], ["Via"], ["Warning"]],

"<BODY>": [[], ["<CHAR>"]],

"<ANY>": [[], ["<DATE>"], ["<CHAR>"], ["<HOST>"], ["<URI>"]],

"<DATE>": [["Sat, 29 Oct 1994 19:43:31 GMT"]],

"<CHAR>": [["0"], ["1"], ["2"], ["3"], ["4"], ["5"], ["6"], ["7"], ["8"], ["9"], ["a"], ["b"], ["c"], ["d"], ["e"], ["f"], ["g"], ["h"], ["i"], ["j"], ["k"], ["l"], ["m"], ["n"], ["o"], ["p"], ["q"], ["r"], ["s"], ["t"], ["u"], ["v"], ["w"], ["x"], ["y"], ["z"], ["A"], ["B"], ["C"], ["D"], ["E"], ["F"], ["G"], ["H"], ["I"], ["J"], ["K"], ["L"], ["M"], ["N"], ["O"], ["P"], ["Q"], ["R"], ["S"], ["T"], ["U"], ["V"], ["W"], ["X"], ["Y"], ["Z"]]

}

Grammar Toolbox

simple_nonterminal_grammar: Grammar = {

"<start>": ["<nonterminal>"],

"<nonterminal>": ["<left-angle><identifier><right-angle>"],

"<left-angle>": ["<"],

"<right-angle>": [">"],

"<identifier>": ["id"] # for now

}

import copy

nonterminal_grammar = copy.deepcopy(simple_nonterminal_grammar)
nonterminal_grammar["<identifier>"] = ["<idchar>", "<identifier><idchar>"]
nonterminal_grammar["<idchar>"] = ['a', 'b', 'c', 'd']  # for now

def set_opts(grammar: Grammar, symbol: str, expansion: Expansion,
             opts: Option = {}) -> None:
    """Set the options of the given expansion of grammar[symbol] to opts"""
    expansions = grammar[symbol]
    for i, exp in enumerate(expansions):
        if exp_string(exp) != exp_string(expansion):
            continue

        new_opts = exp_opts(exp)
        if opts == {} or new_opts == {}:
            new_opts = opts
        else:
            for key in opts:
                new_opts[key] = opts[key]

        if new_opts == {}:
            grammar[symbol][i] = exp_string(exp)
        else:
            grammar[symbol][i] = (exp_string(exp), new_opts)

        return

    raise KeyError(
        "no expansion " +
        repr(symbol) +
        " -> " +
        repr(exp_string(expansion)))

import re

# Parsing EBNF syntax
def new_symbol(grammar: Grammar, symbol_name: str = "<symbol>") -> str:
    """Return a new symbol for `grammar` based on `symbol_name`"""
    if symbol_name not in grammar:
        return symbol_name

    count = 1
    while True:
        tentative_symbol_name = symbol_name[:-1] + "-" + repr(count) + ">"
        if tentative_symbol_name not in grammar:
            return tentative_symbol_name
        count += 1

# Extract the EBNF parts of an expansion:
# parenthesized groups followed by ?, * or +
def parenthesized_expressions(expansion: Expansion) -> List[str]:
    RE_PARENTHESIZED_EXPR = re.compile(r'\([^()]*\)[?+*]')
    # In later chapters, we allow expansions to be tuples,
    # with the expansion being the first element
    if isinstance(expansion, tuple):
        expansion = expansion[0]

    return re.findall(RE_PARENTHESIZED_EXPR, expansion)

# Resolve the EBNF parentheses in a grammar
def convert_ebnf_parentheses(ebnf_grammar: Grammar) -> Grammar:
    """Convert a grammar in extended BNF to BNF"""
    grammar = extend_grammar(ebnf_grammar)
    for nonterminal in ebnf_grammar:
        expansions = ebnf_grammar[nonterminal]

        for i in range(len(expansions)):
            expansion = expansions[i]
            if not isinstance(expansion, str):
                expansion = expansion[0]

            while True:
                parenthesized_exprs = parenthesized_expressions(expansion)
                if len(parenthesized_exprs) == 0:
                    break

                for expr in parenthesized_exprs:
                    operator = expr[-1:]
                    contents = expr[1:-2]

                    new_sym = new_symbol(grammar)

                    exp = grammar[nonterminal][i]
                    opts = None
                    if isinstance(exp, tuple):
                        (exp, opts) = exp
                    assert isinstance(exp, str)

                    expansion = exp.replace(expr, new_sym + operator, 1)
                    if opts:
                        grammar[nonterminal][i] = (expansion, opts)
                    else:
                        grammar[nonterminal][i] = expansion

                    grammar[new_sym] = [contents]

    return grammar

# Find nonterminals that carry an EBNF operator (?, * or +)
def extended_nonterminals(expansion: Expansion) -> List[str]:
    RE_EXTENDED_NONTERMINAL = re.compile(r'(<[^<> ]*>[?+*])')
    # In later chapters, we allow expansions to be tuples,
    # with the expansion being the first element
    if isinstance(expansion, tuple):
        expansion = expansion[0]

    return re.findall(RE_EXTENDED_NONTERMINAL, expansion)

# Expand the EBNF operators into plain BNF rules
def convert_ebnf_operators(ebnf_grammar: Grammar) -> Grammar:
    """Convert a grammar in extended BNF to BNF"""
    grammar = extend_grammar(ebnf_grammar)
    for nonterminal in ebnf_grammar:
        expansions = ebnf_grammar[nonterminal]

        for i in range(len(expansions)):
            expansion = expansions[i]
            extended_symbols = extended_nonterminals(expansion)

            for extended_symbol in extended_symbols:
                operator = extended_symbol[-1:]
                original_symbol = extended_symbol[:-1]
                assert original_symbol in ebnf_grammar, \
                    f"{original_symbol} is not defined in grammar"

                new_sym = new_symbol(grammar, original_symbol)

                exp = grammar[nonterminal][i]
                opts = None
                if isinstance(exp, tuple):
                    (exp, opts) = exp
                assert isinstance(exp, str)

                new_exp = exp.replace(extended_symbol, new_sym, 1)
                if opts:
                    grammar[nonterminal][i] = (new_exp, opts)
                else:
                    grammar[nonterminal][i] = new_exp

                if operator == '?':
                    grammar[new_sym] = ["", original_symbol]
                elif operator == '*':
                    grammar[new_sym] = ["", original_symbol + new_sym]
                elif operator == '+':
                    grammar[new_sym] = [
                        original_symbol, original_symbol + new_sym]

    return grammar

def convert_ebnf_grammar(ebnf_grammar: Grammar) -> Grammar:
    return convert_ebnf_operators(convert_ebnf_parentheses(ebnf_grammar))
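
To make the three operator cases easier to see in isolation, here is a small self-contained sketch that mirrors the '?', '*' and '+' branches of convert_ebnf_operators(). The function name operator_rules and the symbol <digit-1> are hypothetical and chosen only for this illustration.

```python
from typing import Dict, List


def operator_rules(symbol: str, operator: str, new_sym: str) -> Dict[str, List[str]]:
    """Return the BNF rule that stands in for `symbol` followed by `operator`."""
    if operator == '?':   # zero or one occurrence
        return {new_sym: ["", symbol]}
    if operator == '*':   # zero or more occurrences
        return {new_sym: ["", symbol + new_sym]}
    if operator == '+':   # one or more occurrences
        return {new_sym: [symbol, symbol + new_sym]}
    raise ValueError("unsupported operator " + repr(operator))


# "<digit>+" becomes a reference to "<digit-1>", which is defined recursively
print(operator_rules("<digit>", "+", "<digit-1>"))
```

The repetition operators turn into right-recursive rules, so the resulting grammar needs nothing beyond plain BNF.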

import sys
from typing import Optional, Set

# Collect the nonterminals that a grammar defines and the ones it uses
def def_used_nonterminals(grammar: Grammar, start_symbol:
                          str = START_SYMBOL) -> Tuple[Optional[Set[str]],
                                                       Optional[Set[str]]]:
    """Return a pair (`defined_nonterminals`, `used_nonterminals`) in `grammar`.
    In case of error, return (`None`, `None`)."""

    defined_nonterminals = set()
    used_nonterminals = {start_symbol}

    for defined_nonterminal in grammar:
        defined_nonterminals.add(defined_nonterminal)
        expansions = grammar[defined_nonterminal]
        if not isinstance(expansions, list):
            print(repr(defined_nonterminal) + ": expansion is not a list",
                  file=sys.stderr)
            return None, None

        if len(expansions) == 0:
            print(repr(defined_nonterminal) + ": expansion list empty",
                  file=sys.stderr)
            return None, None

        for expansion in expansions:
            if isinstance(expansion, tuple):
                expansion = expansion[0]
            if not isinstance(expansion, str):
                print(repr(defined_nonterminal) + ": "
                      + repr(expansion) + ": not a string",
                      file=sys.stderr)
                return None, None

            for used_nonterminal in nonterminals(expansion):
                used_nonterminals.add(used_nonterminal)

    return defined_nonterminals, used_nonterminals

def reachable_nonterminals(grammar: Grammar,
                           start_symbol: str = START_SYMBOL) -> Set[str]:
    reachable = set()

    def _find_reachable_nonterminals(grammar, symbol):
        nonlocal reachable
        reachable.add(symbol)
        for expansion in grammar.get(symbol, []):
            for nonterminal in nonterminals(expansion):
                if nonterminal not in reachable:
                    _find_reachable_nonterminals(grammar, nonterminal)

    _find_reachable_nonterminals(grammar, start_symbol)
    return reachable

def unreachable_nonterminals(grammar: Grammar,
                             start_symbol=START_SYMBOL) -> Set[str]:
    return grammar.keys() - reachable_nonterminals(grammar, start_symbol)

def opts_used(grammar: Grammar) -> Set[str]:
    used_opts = set()
    for symbol in grammar:
        for expansion in grammar[symbol]:
            used_opts |= set(exp_opts(expansion).keys())
    return used_opts

# Validity check for a grammar, similar to the syntax check in a compiler
def is_valid_grammar(grammar: Grammar,
                     start_symbol: str = START_SYMBOL,
                     supported_opts: Set[str] = set()) -> bool:
    """Check if the given `grammar` is valid.
       `start_symbol`: optional start symbol (default: `<start>`)
       `supported_opts`: options supported (default: none)"""

    defined_nonterminals, used_nonterminals = \
        def_used_nonterminals(grammar, start_symbol)
    if defined_nonterminals is None or used_nonterminals is None:
        return False

    # Do not complain about '<start>' being not used,
    # even if start_symbol is different
    if START_SYMBOL in grammar:
        used_nonterminals.add(START_SYMBOL)

    for unused_nonterminal in defined_nonterminals - used_nonterminals:
        print(repr(unused_nonterminal) + ": defined, but not used",
              file=sys.stderr)
    for undefined_nonterminal in used_nonterminals - defined_nonterminals:
        print(repr(undefined_nonterminal) + ": used, but not defined",
              file=sys.stderr)

    # Symbols must be reachable either from <start> or given start symbol
    unreachable = unreachable_nonterminals(grammar, start_symbol)
    msg_start_symbol = start_symbol

    if START_SYMBOL in grammar:
        unreachable = unreachable - \
            reachable_nonterminals(grammar, START_SYMBOL)
        if start_symbol != START_SYMBOL:
            msg_start_symbol += " or " + START_SYMBOL

    for unreachable_nonterminal in unreachable:
        print(repr(unreachable_nonterminal) + ": unreachable from " + msg_start_symbol,
              file=sys.stderr)

    used_but_not_supported_opts = set()
    if len(supported_opts) > 0:
        used_but_not_supported_opts = opts_used(
            grammar).difference(supported_opts)
        for opt in used_but_not_supported_opts:
            print(
                "warning: option " +
                repr(opt) +
                " is not supported",
                file=sys.stderr)

    return used_nonterminals == defined_nonterminals and len(unreachable) == 0
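
The two failure modes the check reports, symbols that are defined but unreachable and symbols that are used but undefined, can be reproduced on a toy grammar. The sketch below is self-contained and does not reuse the helpers above; the nonterminal pattern, the function name reachable and the grammar broken are assumptions made for illustration.

```python
import re
from typing import Dict, List, Set

RE_NONTERMINAL = re.compile(r'(<[^<> ]*>)')  # assumed nonterminal pattern


def reachable(grammar: Dict[str, List[str]], start: str = "<start>") -> Set[str]:
    """Collect every symbol reachable from `start` (iterative depth-first walk)."""
    seen: Set[str] = set()
    todo = [start]
    while todo:
        symbol = todo.pop()
        if symbol in seen:
            continue
        seen.add(symbol)
        for expansion in grammar.get(symbol, []):
            todo.extend(RE_NONTERMINAL.findall(expansion))
    return seen


# Hypothetical grammar with one orphaned rule and one missing rule
broken = {
    "<start>": ["<a>"],
    "<a>": ["x", "<b>"],   # <b> is used but never defined
    "<orphan>": ["y"],     # defined, but unreachable from <start>
}

unreachable = set(broken) - reachable(broken)
undefined = reachable(broken) - set(broken)
print("unreachable:", unreachable)
print("undefined:", undefined)
```

A grammar passes only when both sets are empty, which corresponds to the final return expression of is_valid_grammar().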

Efficient Grammar Fuzzing

The Derivation Tree Algorithm

A derivation tree lets us keep track of the state of the whole expansion process, for example which nodes have already been expanded or whether a given node still needs expansion. Adding a new node to a tree is also much faster than replacing a symbol with a value inside a string, so this data structure brings a performance gain as well.

Take the URL_GRAMMAR shown earlier as an example. The tree starts with <start> as its initial node. The grammar contains an expansion for <start>, so <url> is chosen as its child node. This repeats until no node has any expansion left to apply; at that point the tree is complete and the corresponding structured data can be output. Each node has the form:

(SYMBOL_NAME, CHILDREN)

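DerivationTree = Tuple[str, Optional[List[Any]]]

derivation_tree: DerivationTree = ("<start>",
                                   [("<expr>",
                                     [("<expr>", None),
                                      (" + ", []),
                                      ("<term>", None)]
                                     )])

SYMBOL_NAME is the symbol and CHILDREN holds the child nodes. As a concrete data structure this is DerivationTree = Tuple[str, Optional[List[Any]]]. CHILDREN has two special values:

1. None means the node can still be expanded further, that is, the node is a symbol that has not been expanded yet.
2. [] means the node has no children.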
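
The difference between None and [] becomes concrete when walking the tree. The following sketch uses the example tree from above; the helper names tree_to_text and open_nodes are hypothetical and not taken from any library.

```python
from typing import Any, List, Optional, Tuple

DerivationTree = Tuple[str, Optional[List[Any]]]


def tree_to_text(tree: DerivationTree) -> str:
    """Concatenate the leaves of `tree` from left to right."""
    symbol, children = tree
    if not children:
        # None: nonterminal awaiting expansion; []: terminal. Both are leaves.
        return symbol
    return "".join(tree_to_text(child) for child in children)


def open_nodes(tree: DerivationTree) -> int:
    """Count the nodes that still await expansion (children is None)."""
    symbol, children = tree
    if children is None:
        return 1
    return sum(open_nodes(child) for child in children)


tree: DerivationTree = ("<start>",
                        [("<expr>",
                          [("<expr>", None), (" + ", []), ("<term>", None)])])

print(tree_to_text(tree))  # <expr> + <term>
print(open_nodes(tree))    # 2
```

Expansion is finished once open_nodes() reaches 0; until then, each None marks a place where the fuzzer may attach new children.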

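# Assumes the fuzzingbook package is installed
from fuzzingbook.GrammarFuzzer import GrammarFuzzer

def g_rammar_fuzzer():
    f = GrammarFuzzer(URL_GRAMMAR)
    return f.fuzz()

ProbabilisticGrammarFuzzer

The grammar below attaches probabilities to expansions, for instance to those of the <leaddigit> symbol; they are not analyzed in depth here.

PROBABILISTIC_EXPR_GRAMMAR: Grammar = {

"<start>":

["<expr>"],

"<expr>":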

[("<term> + <expr>", opts(prob=0.1)),

("<term> - <expr>", opts(prob=0.2)),

"<term>"],

"<term>":

[("<factor> * <term>", opts(prob=0.1)),

("<factor> / <term>", opts(prob=0.1)),

"<factor>"

],

"<factor>":

["+<factor>", "-<factor>", "(<expr>)",

"<leadinteger>", "<leadinteger>.<integer>"],

"<leadinteger>":

["<leaddigit><integer>", "<leaddigit>"],

# Benford's law: frequency distribution of leading digits

"<leaddigit>":

[("1", opts(prob=0.301)),

("2", opts(prob=0.176)),

("3", opts(prob=0.125)),

("4", opts(prob=0.097)),

("5", opts(prob=0.079)),

("6", opts(prob=0.067)),

("7", opts(prob=0.058)),

("8", opts(prob=0.051)),

("9", opts(prob=0.046)),

],

# Remaining digits are equally distributed

"<integer>":

["<digit><integer>", "<digit>"],

"<digit>":

["0", "1", "2", "3", "4", "5", "6", "7", "8", "9"],

}
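
In the grammar above, some expansions carry a prob option and others do not. A minimal sketch of how the missing values could be filled in, assuming that unannotated expansions share the remaining probability equally; the function name fill_probabilities is hypothetical.

```python
import random
from typing import List, Optional


def fill_probabilities(probs: List[Optional[float]]) -> List[float]:
    """Give every None entry an equal share of the unassigned probability."""
    assigned = sum(p for p in probs if p is not None)
    missing = sum(1 for p in probs if p is None)
    if assigned > 1.0 + 1e-9:
        raise ValueError("probabilities sum to more than 1")
    share = (1.0 - assigned) / missing if missing else 0.0
    return [share if p is None else p for p in probs]


# <expr>: 0.1 and 0.2 are given, so "<term>" receives the rest (about 0.7)
expansions = ["<term> + <expr>", "<term> - <expr>", "<term>"]
weights = fill_probabilities([0.1, 0.2, None])
print(weights)

# Weighted choice of one expansion
print(random.choices(expansions, weights=weights)[0])
```

random.choices() accepts relative weights, so the filled-in list can be passed to it directly when picking an expansion.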

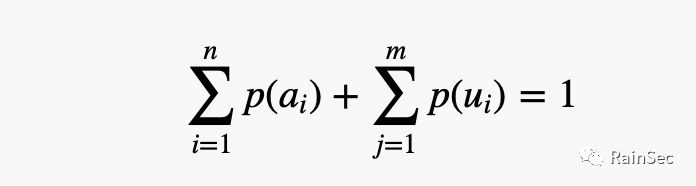

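Formula 1

Formula 2

The opts helper attaches a probability to the corresponding expansion; expansions without an explicit probability share the remaining probability equally. The next step is to bring these probabilities into the fuzzer, so that the choice of expansion is weighted while seeds are generated, which improves fuzzing efficiency.

IP_ADDRESS_GRAMMAR: Grammar = {
    "<start>": ["<address>"],
    "<address>": ["<octet>.<octet>.<octet>.<octet>"],
    # ["0", "1", "2", ..., "255"]
    "<octet>": decrange(0, 256)  # the strings "0" through "255"
}

To use a different probability each time <octet> is expanded, the grammar can be extended into the following form:

IP_ADDRESS_GRAMMAR: Grammar = {
    "<start>": ["<address>"],
    "<address>": ["<octet-1>.<octet-2>.<octet-3>.<octet-4>"],
    # ["0", "1", "2", ..., "255"]
    "<octet-1>": decrange(0, 256),  # the strings "0" through "255"
    "<octet-2>": decrange(0, 256),  # the strings "0" through "255"
    "<octet-3>": decrange(0, 256),  # the strings "0" through "255"
    "<octet-4>": decrange(0, 256)  # the strings "0" through "255"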

}

def _duplicate_context(grammar: Grammar,
                       orig_grammar: Grammar,
                       symbol: str,
                       expansion: Optional[Expansion],
                       depth: Union[float, int],
                       seen: Dict[str, str]) -> None:
    """Helper function for `duplicate_context()`"""

    for i in range(len(grammar[symbol])):
        if expansion is None or grammar[symbol][i] == expansion:
            new_expansion = ""
            for (s, c) in expansion_to_children(grammar[symbol][i]):
                if s in seen:  # Duplicated already
                    new_expansion += seen[s]
                elif c == [] or depth == 0:  # Terminal symbol or end of recursion
                    new_expansion += s
                else:  # Nonterminal symbol - duplicate
                    # Add new symbol with copy of rule
                    new_s = new_symbol(grammar, s)
                    grammar[new_s] = copy.deepcopy(orig_grammar[s])

                    # Duplicate its expansions recursively
                    # {**seen, **{s: new_s}} is seen + {s: new_s}
                    _duplicate_context(grammar, orig_grammar, new_s, expansion=None,
                                       depth=depth - 1, seen={**seen, **{s: new_s}})
                    new_expansion += new_s

            grammar[symbol][i] = new_expansion

def duplicate_context(grammar: Grammar,
                      symbol: str,
                      expansion: Optional[Expansion] = None,
                      depth: Union[float, int] = float('inf')):
    """Duplicate an expansion within a grammar.

    In the given grammar, take the given expansion of the given `symbol`
    (if `expansion` is omitted: all symbols), and replace it with a
    new expansion referring to a duplicate of all originally referenced rules.

    If `depth` is given, limit duplication to `depth` references
    (default: unlimited)
    """
    orig_grammar = extend_grammar(grammar)
    _duplicate_context(grammar, orig_grammar, symbol,
                       expansion, depth, seen={})

    # After duplication, we may have unreachable rules; delete them
    for nonterminal in unreachable_nonterminals(grammar):
        del grammar[nonterminal]

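set_prob(probabilistic_ip_address_grammar, "<octet-1>", "127", 1.0)
set_prob(probabilistic_ip_address_grammar, "<octet-2>", "0", 1.0)

Probabilities can steer fuzzing in three ways:

1. Testing commonly used features. Much software testing aims to make sure that the common features are safe, although vulnerability researchers may have other goals.
2. Testing uncommonly used features. By steering away from the symbols seen when parsing the inputs, the fuzzer leans towards features that are rarely used.
3. Focusing on specific inputs. Some vulnerability research wants to concentrate on existing, highly valuable PoC inputs; by focusing on these inputs, the fuzzer can probe the weak spots of the software and achieve good results.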
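
A minimal sketch of how such probabilities could be obtained and flipped, assuming simple frequency counting for the first use and a rank-based swap (most likely becomes least likely) for the second. Both function names are hypothetical, and the scheme values are example numbers.

```python
from collections import Counter
from typing import Dict, List


def learn_probabilities(samples: List[str]) -> Dict[str, float]:
    """Relative frequency of each value among `samples`."""
    counts = Counter(samples)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


def invert_probabilities(probs: Dict[str, float]) -> Dict[str, float]:
    """Swap probabilities by rank: the most likely value gets the lowest one."""
    ranked = sorted(probs, key=lambda value: probs[value])  # ascending
    flipped = [probs[value] for value in reversed(ranked)]
    return dict(zip(ranked, flipped))


# Octets of a single seed: "0" occurs twice, "127" and "1" once each
print(learn_probabilities("127.0.0.1".split(".")))

schemes = {"http": 2 / 9, "https": 6 / 9, "ftp": 0.0, "ftps": 1 / 9}
print(invert_probabilities(schemes))
```

After inversion, a scheme that never occurred in the samples becomes the most likely choice, which pushes the fuzzer towards rarely exercised code.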

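Suppose the seed is 127.0.0.1. Once it has been parsed, 0 accounts for 50% of the <octet> expansions, while 127 and 1 account for 25% each, and these probabilities can be assigned to <octet> when the fuzzer runs. What about testing uncommonly used features? Take the probabilities learned from inputs that exercise the common features and invert them. For example, suppose the inputs for common features give these probabilities:

[('http', {'prob': 0.2222222222222222}),
 ('https', {'prob': 0.6666666666666666}),
 ('ftp', {'prob': 0.0}),
 ('ftps', {'prob': 0.1111111111111111})]

Inverting them yields:

[('http', {'prob': 0.1111111111111111}),
 ('https', {'prob': 0.0}),
 ('ftp', {'prob': 0.6666666666666666}),
 ('ftps', {'prob': 0.2222222222222222})]

Generator With Pre or Post or order Func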

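This is handled by a pre func, which works much like a hook. A func can be triggered at one of two moments: before the input is generated or after it. In the grammar this is expressed as:

CHARGE_GRAMMAR: Grammar = {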

"<start>": ["Charge <amount> to my credit card <credit-card-number>"],

"<amount>": ["$<float>"],

"<float>": ["<integer>.<digit><digit>"],

"<integer>": ["<digit>", "<integer><digit>"],

"<digit>": crange('0', '9'), "<credit-card-number>": ["<digits>"],

"<digits>": ["<digit-block><digit-block><digit-block><digit-block>"],

"<digit-block>": ["<digit><digit><digit><digit>"],

}

CHARGE_GRAMMAR.update({
    "<float>": [("<integer>.<digit><digit>", opts(pre=high_charge))],  # high_charge is the name of a function
})

CHARGE_GRAMMAR.update({
    "<float>": [("<integer>.<digit><digit>",
                 opts(pre=lambda: random.randint(10000000, 90000000) / 100.0))]  # or use a lambda expression instead
})
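
The grammar refers to high_charge, and the post hook refers to fix_credit_card, without showing either. The sketch below gives hypothetical implementations: high_charge reuses the range from the lambda above, and fix_credit_card assumes that the goal is a number with a valid standard Luhn check digit. How the fuzzer consumes the return values is not covered here.

```python
import random


def high_charge() -> float:
    """Hypothetical pre func: return a large amount in place of <float>."""
    return random.randint(10000000, 90000000) / 100.0


def luhn_check_digit(payload: str) -> int:
    """Standard Luhn check digit for the digit string `payload`."""
    total = 0
    for i, ch in enumerate(reversed(payload)):
        d = int(ch)
        if i % 2 == 0:   # rightmost payload digit, then every second one
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return (10 - total % 10) % 10


def fix_credit_card(digits: str) -> str:
    """Hypothetical post func: overwrite the last digit with a valid check digit."""
    return digits[:-1] + str(luhn_check_digit(digits[:-1]))


print(high_charge())
print(fix_credit_card("4111111111111110"))  # 4111111111111111
```

A pre func runs before the expansion takes place and can supply the value itself, whereas a post func sees the generated text and can repair it, as the check digit fix does here.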

CHARGE_GRAMMAR.update({
    "<credit-card-number>": [("<digits>", opts(post=lambda digits: fix_credit_card(digits)))]
})

Greybox Fuzzing with Grammars

class DictMutator(Mutator):
    """Mutate strings using keywords from a dictionary"""

    def __init__(self, dictionary: List[str]) -> None:
        """Constructor. `dictionary` is the list of keywords to use."""
        super().__init__()
        self.dictionary = dictionary
        self.mutators.append(self.insert_from_dictionary)

    def insert_from_dictionary(self, s: str) -> str:
        """Returns s with a keyword from the dictionary inserted"""
        pos = random.randint(0, len(s))
        random_keyword = random.choice(self.dictionary)
        return s[:pos] + random_keyword + s[pos:]

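Fuzzing with Input Fragments proceeds in three steps:

1. Parse the existing input into a derivation tree.
2. Apply operations such as swapping or replacing nodes to the derivation tree.
3. Convert the derivation tree back into a string, forming a new input.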
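
The steps above can be sketched on hand-written derivation trees. This is an illustration under simplifying assumptions, not the LangFuzz implementation: step 1 (parsing) is assumed to have happened already, and all function names are hypothetical.

```python
import random
from typing import Any, Dict, Iterator, List, Optional, Tuple

Tree = Tuple[str, Optional[List[Any]]]
Path = Tuple[int, ...]


def unparse(tree: Tree) -> str:
    """Step 3: turn a derivation tree back into a string."""
    symbol, children = tree
    if not children:
        return symbol
    return "".join(unparse(child) for child in children)


def collect(tree: Tree, pool: Dict[str, List[Tree]]) -> None:
    """Index every expanded subtree of `tree` by its symbol."""
    symbol, children = tree
    if children:
        pool.setdefault(symbol, []).append(tree)
        for child in children:
            collect(child, pool)


def paths(tree: Tree, prefix: Path = ()) -> Iterator[Path]:
    """Yield the child-index path of every expanded subtree below the root."""
    for i, child in enumerate(tree[1] or []):
        if child[1]:
            yield prefix + (i,)
            yield from paths(child, prefix + (i,))


def get_at(tree: Tree, path: Path) -> Tree:
    for i in path:
        tree = tree[1][i]
    return tree


def replace_at(tree: Tree, path: Path, new: Tree) -> Tree:
    """Return a copy of `tree` with the subtree at `path` replaced by `new`."""
    if not path:
        return new
    symbol, children = tree
    i = path[0]
    return (symbol, children[:i] + [replace_at(children[i], path[1:], new)] + children[i + 1:])


def swap_fragment(tree: Tree, pool: Dict[str, List[Tree]], rng: random.Random) -> Tree:
    """Step 2: replace one random subtree with a pooled fragment of the same symbol."""
    candidates = [p for p in paths(tree) if get_at(tree, p)[0] in pool]
    if not candidates:
        return tree
    path = rng.choice(candidates)
    return replace_at(tree, path, rng.choice(pool[get_at(tree, path)[0]]))


seed_a: Tree = ("<start>", [("<digit>", [("1", [])]), ("+", []), ("<digit>", [("2", [])])])
seed_b: Tree = ("<start>", [("<digit>", [("9", [])])])

pool: Dict[str, List[Tree]] = {}
collect(seed_b, pool)
mutated = swap_fragment(seed_a, pool, random.Random(0))
print(unparse(seed_a), "->", unparse(mutated))
```

Because a fragment only ever replaces a subtree with the same symbol, the mutated input stays valid with respect to the grammar.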

LangFuzz is in fact also far slower at generating inputs than an ordinary structured black-box fuzzer. Two sets of comparison data follow.

LangFuzz

From the 300 generated inputs, 152 (50.67%) can be parsed.
In total, 91 statements are covered.

BlackFuzz

From the 300 generated inputs, 36 (12.00%) can be parsed.
In total, 161 statements are covered.

The next fuzzer combines two kinds of mutation, structural mutations and up to 32 byte-level mutations, as follows:

class GreyboxGrammarFuzzer(GreyboxFuzzer):
    """Greybox fuzzer using grammars."""

    def __init__(self, seeds: List[str],
                 byte_mutator: Mutator, tree_mutator: FragmentMutator,
                 schedule: PowerSchedule) -> None:
        """Constructor.
        `seeds` - set of inputs to mutate.
        `byte_mutator` - a byte-level mutator.
        `tree_mutator` = a tree-level mutator.
        `schedule` - a power schedule.
        """
        super().__init__(seeds, byte_mutator, schedule)
        self.tree_mutator = tree_mutator

    def create_candidate(self) -> str:
        """Returns an input generated by structural mutation
           of a seed in the population"""

        seed = cast(SeedWithStructure, self.schedule.choose(self.population))

        # Structural mutation
        trials = random.randint(0, 4)
        for i in range(trials):
            seed = self.tree_mutator.mutate(seed)

        # Byte-level mutation
        candidate = seed.data
        if trials == 0 or not seed.has_structure or random.randint(0, 1) == 1:
            dumb_trials = min(len(seed.data), 1 << random.randint(1, 5))
            for i in range(dumb_trials):
                candidate = self.mutator.mutate(candidate)

        return candidate

From the 300 generated inputs, 1 (0.33%) can be parsed.
In total, 180 statements are covered.

Fuzzing with Input Regions no longer mutates by splitting and recombining derivation tree nodes. Instead, it splits and recombines regions of valid seeds directly. A region here is a contiguous byte sequence that corresponds to a derivation tree symbol. The benefit is that the units it operates on may be larger or smaller than fragments. Under the same conditions as the mutation experiments above, the test results are:

It took the structural greybox fuzzer with region mutator
11.35 seconds to generate and execute 300 inputs.

From the 300 generated inputs, 4 (1.33%) can be parsed.
In total, 168 statements are covered.
On average, 9.1% of a seed in the population can be successfully parsed.

The schedule gives more weight to the structure of valid inputs. The test results are:

It took AFLSmart 20.75 seconds to generate and execute 300 inputs.

From the 300 generated inputs, 46 (15.33%) can be parsed.
In total, 162 statements are covered.
On average, 23.7% of a seed in the population can be successfully parsed.

Parser input

var haystack = "foo";

var re_text = "^foo";

haystack += "x";

re_text += "(x)";

var re = new RegExp(re_text);

re.test(haystack);

RegExp.input = Number();

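print(RegExp.$1);

Tip: how can you estimate code coverage on the target's side? In a black-box setting you can use time as a signal: if an input takes the target longer to process, it is reasonable to guess that it went through more code branches.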
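
A rough sketch of this timing heuristic, assuming the target can be wrapped in a Python callable; for a remote service the callable would send a request and wait for the reply. The names time_input, rank_by_time and toy_target are hypothetical, and timing is a noisy signal, so several runs are taken and the median is used.

```python
import time
from typing import Callable, List, Tuple


def time_input(target: Callable[[str], object], data: str, repeats: int = 5) -> float:
    """Median wall-clock time of `target(data)` over several runs."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        try:
            target(data)
        except Exception:
            pass  # a failing run still tells us how long it took
        samples.append(time.perf_counter() - start)
    samples.sort()
    return samples[len(samples) // 2]


def rank_by_time(target: Callable[[str], object], inputs: List[str]) -> List[Tuple[float, str]]:
    """Sort inputs so that the slowest (presumably deepest) come first."""
    return sorted(((time_input(target, s), s) for s in inputs), reverse=True)


# Hypothetical stand-in for the black-box target
def toy_target(s: str) -> int:
    return sum(ord(c) for c in s * 1000) if s.startswith("GET") else 0


for seconds, data in rank_by_time(toy_target, ["GET /index", "xyz"]):
    print(f"{seconds:.6f}s  {data!r}")
```

Inputs near the top of the ranking are candidates to keep as seeds, in the same way a greybox fuzzer keeps inputs that increase coverage.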

Summary

References

https://www.fuzzingbook.org

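Most of the test data in this article comes from the Fuzzing Book. Exact numbers vary somewhat from machine to machine, but the conclusions are the same, so the book's test data is shown here.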
