2023-10-31 21:34:2 Author: securityboulevard.com

1. Sophisticated Scrapers

Anatomy of a Distributed Scraping Attack

Sophisticated scrapers are the new normal. Scraper bots leverage sophisticated techniques to bypass traditional bot protection measures.

Companies of all sizes are dealing with scraping bots en masse. In a recent example, X (formerly known as Twitter) no longer allows unregistered users to browse tweets in an effort to curb the effects of data scraping. X’s owner, Elon Musk, claimed X’s data was being “pillaged” by scrapers enough to degrade the service for everyone. Simple anti-scraping measures usually employed by companies—like rate limiting, geoblocking, and signature-based blocking—were clearly not enough to stem the tide of bots scraping from X, leading to the decision.

We’ve seen sophisticated scraping attacks firsthand. In one case, over the course of a week, an attacker made 1.1M search requests hoping to scrape data from one of our customers, and they distributed these requests across more than 45,000 different IP addresses. On any given day, each IP address made fewer than 10 requests, which flies under the radar of most standard bot detection software. The attacker also deliberately used malformed URLs to try to evade detection while still gathering the content they wanted.

Because the attack was on a French real estate website, the attacker used IP addresses located in France. They consistently used French as the accept-language, randomized bot fingerprints, and HTTP headers consistent with the resources they were requesting.

Due to the sophistication of the attack, many traditional in-house or WAF techniques would not have spotted the attacker:

- Signature-based blocking was rendered useless by the evolving server-side fingerprints.

- Geoblocking would not have helped because the bots were using French IPs.

- Blocking data center IPs would not have stopped the attacks because they were using residential proxies.

- IP-based rate limiting would not have been triggered by each IP making fewer than 10 requests per day.

- Blocking requests whose language does not match the target location would not have helped because the attacker matched the accept-language to the location being attacked.
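To see why per-IP rate limiting stays silent here, the reported figures can be run through a short sketch. The limit of 100 requests per IP per day and the even spread of requests across IPs and days are assumptions for illustration, not details of the attack or of any particular product.

```python
# Figures reported for the attack described above.
TOTAL_REQUESTS = 1_100_000
DISTINCT_IPS = 45_000  # the article says "more than 45,000"
DAYS = 7

# Hypothetical per-IP daily limit; real limits vary by site.
PER_IP_DAILY_LIMIT = 100


def average_requests_per_ip_per_day(total: int, ips: int, days: int) -> float:
    """Average load per IP per day, assuming an even spread (a simplification)."""
    return total / (ips * days)


def per_ip_limit_triggers(per_ip_daily: float, limit: int) -> bool:
    """A per-IP limiter only fires when a single IP exceeds the limit."""
    return per_ip_daily > limit


per_ip = average_requests_per_ip_per_day(TOTAL_REQUESTS, DISTINCT_IPS, DAYS)
aggregate_per_day = TOTAL_REQUESTS / DAYS

print(f"average per IP per day: {per_ip:.2f}")
print(f"aggregate per day: {aggregate_per_day:.0f}")
print(f"per-IP limiter triggered: {per_ip_limit_triggers(per_ip, PER_IP_DAILY_LIMIT)}")
```

Under these assumptions each IP averages about 3.5 requests per day while the site as a whole absorbs roughly 157,000 scraping requests per day, which is why a signal keyed on individual IPs does not see the attack.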

You may think these kinds of smart, sophisticated attackers are not targeting your website—but sophisticated techniques are more common every day. Open-source packages and scraping bots-as-a-service tend to provide these features at low cost, to make for an even more dreadful bot onslaught.

2. Super Sneaky Bots: ChatGPT Plugins

How ChatGPT Plugins Work & What They Mean for Your Business

Third-party ChatGPT plugins are sneaking past blocking rules meant to stop ChatGPT-associated scraping.

Most people on the internet have heard of or used ChatGPT or another large language model (LLM) like Bard to access information that has been gathered over time from the internet. These people also know that ChatGPT’s ability to provide real-time information is limited, which is where third-party plugins come in. Plugins connect LLMs to external tools and websites, allowing LLMs to access data on the web (public or private), retrieve real-time information, and even help users complete actions such as ordering groceries. WebPilot and LinkReader already work with ChatGPT to gather up-to-date information from the internet in response to queries.

So what’s the issue? The problem is twofold:

- Many businesses cannot opt out of data collection easily. They must block the plugin user-agent listed in ChatGPT’s documentation, which leads to the second issue.

- Some ChatGPT plugins are lying about their user-agent in order to get around blocking rules for the standard ChatGPT user-agent.

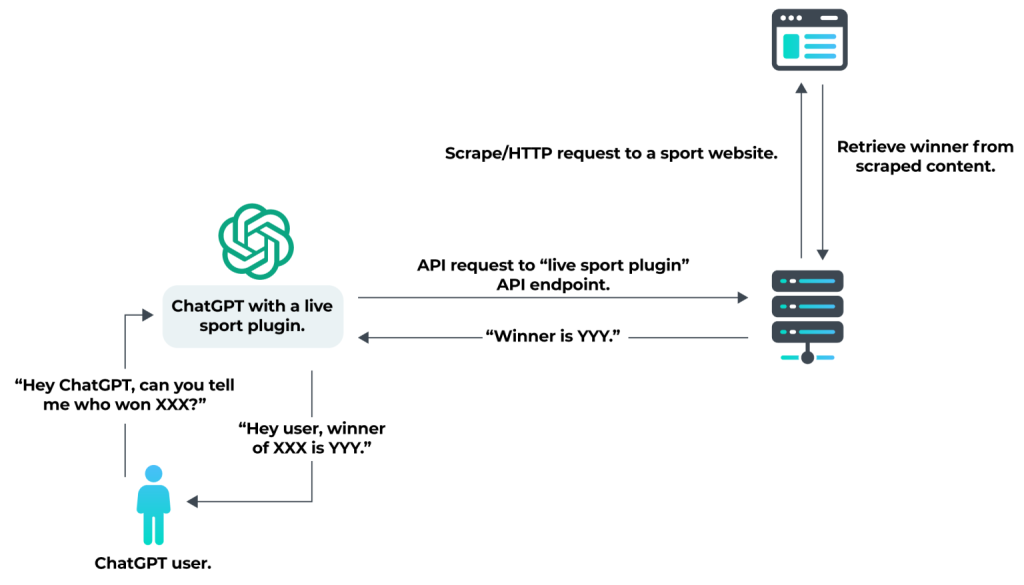

Here’s a diagram of the processes that occur behind the scenes when someone uses a (currently fictional) “Live Sport Plugin” with ChatGPT to get the latest sporting event news.

When requests are made directly from the server hosting the plugin API, there is no constraint on the user-agent. We tested this by analyzing WebPilot and LinkReader, asking them to summarize information from one of our web pages and then looking at the request made by the plugin. The request did not contain the ChatGPT plugin user-agent listed in the OpenAI documentation. Instead, we found:

- The request contained this user client hint: "HeadlessChrome";v="113", "Chromium";v="113", "Not-A.Brand";v="24".

- It lacked the accept-language header normally present on Chrome.

- The browser had navigator.webdriver = true.

- It had headless Chrome default screen resolution: 600×800 px.
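The four observations above could be checked together in a sketch like the following. It is only an illustration: the `ClientSignals` shape, the function name, and the assumption that the client hint arrives in the `Sec-CH-UA` header are ours, and a production detector would weigh many more signals than these.

```python
from dataclasses import dataclass
from typing import List, Mapping, Optional


@dataclass
class ClientSignals:
    """Values a client-side script might report (hypothetical shape)."""
    webdriver: Optional[bool] = None
    screen_width: Optional[int] = None
    screen_height: Optional[int] = None


def headless_indicators(headers: Mapping[str, str], client: ClientSignals) -> List[str]:
    """Return which of the four headless Chrome indicators are present."""
    lowered = {name.lower(): value for name, value in headers.items()}
    found: List[str] = []
    # Assumption: the user client hint is read from the Sec-CH-UA header.
    if "headlesschrome" in lowered.get("sec-ch-ua", "").lower():
        found.append("client hint names HeadlessChrome")
    if "accept-language" not in lowered:
        found.append("missing accept-language header")
    if client.webdriver is True:
        found.append("navigator.webdriver is true")
    # Compare as a set so either width/height ordering matches.
    if {client.screen_width, client.screen_height} == {600, 800}:
        found.append("headless default screen resolution")
    return found


example_headers = {
    "Sec-CH-UA": '"HeadlessChrome";v="113", "Chromium";v="113", "Not-A.Brand";v="24"',
    "User-Agent": "Mozilla/5.0",
}
example_client = ClientSignals(webdriver=True, screen_width=800, screen_height=600)
for indicator in headless_indicators(example_headers, example_client):
    print(indicator)
```

Returning the list of matched indicators, rather than a single yes/no, keeps each signal visible so they can be combined or weighted separately.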

At the end of the day, when users interact with your website via ChatGPT plugins, they won’t see any of the advertisements or CTAs on your site, and you will likely see lower traffic. You may also find users will not pay you for premium features that can be replicated with ChatGPT plugins.

And blocking plugin requests is much more difficult when they do not announce their presence. You can easily block requests when a user-agent contains the ChatGPT-User substring, which helps block ChatGPT’s own web scrapers from retrieving your content. However, many third-party ChatGPT plugins don’t declare their identity. For these, you will need to use advanced bot detection techniques to see if a request is coming from a bot.
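As a minimal sketch of the substring check described above, assuming request headers are available as a simple mapping: the function name, the case-insensitive comparison, and the example user-agent strings are illustrative choices, not taken from OpenAI’s documentation.

```python
from typing import Mapping

# Substring named in the text above.
DECLARED_SUBSTRING = "ChatGPT-User"


def declares_chatgpt_user(headers: Mapping[str, str]) -> bool:
    """True when the User-Agent header contains the ChatGPT-User substring."""
    for name, value in headers.items():
        if name.lower() == "user-agent":
            # Case-insensitive match is an assumption made for robustness.
            return DECLARED_SUBSTRING.lower() in value.lower()
    return False


# Hypothetical user-agent strings for illustration only.
declared = {"User-Agent": "ExampleAgent/1.0 (compatible; ChatGPT-User/1.0)"}
undeclared = {"User-Agent": "Mozilla/5.0 HeadlessChrome/113.0.0.0"}

print(declares_chatgpt_user(declared))
print(declares_chatgpt_user(undeclared))
```

The second request is the case the paragraph warns about: a plugin that sends a generic browser user-agent passes this check untouched, so the rule only catches traffic that announces itself.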

3. Trickable Traditional CAPTCHAs

How to CAPTCHA Confidence: DataDome’s CAPTCHA Results Prove Promising

Traditional CAPTCHAs are no longer effective at stopping bot traffic, and new solutions integrated with bot mitigation are needed.

CAPTCHAs have been used to protect against bots on the internet for decades, but traditional CAPTCHAs rely solely on the complexity of the challenge itself to secure a company or user. Therefore, as bots have become more sophisticated, the complexity of CAPTCHA challenges has had to go up drastically. Now, CAPTCHAs are less accessible for human users and severely degrade the user experience—and advanced bots can still solve them.

When DataDome designed our own secure, privacy-compliant, and user-friendly CAPTCHA, we wanted to adopt a new paradigm. Instead of relying only on the difficulty of the challenge, we added an extra layer of security with invisible signals and integrated it with our complete bot and online fraud protection. Our customers who were being targeted by CAPTCHA bots (bots created to forge or solve CAPTCHA challenges) saw up to an 80% decrease in CAPTCHAs passed after activating the DataDome CAPTCHA.

We knew bot operators would quickly start trying to figure out how to get past the DataDome CAPTCHA, so we watched CAPTCHA passing attempts. Just after release, there was a steady stream of around 85k malicious passing attempts every three hours. Now that our CAPTCHA has been available for over a year, the number of malicious passing attempts is much higher.

Three categories of signals were key in helping us catch bots trying to forge the DataDome CAPTCHA:

- Behavioral Detection (blue): Client-side events such as mouse movements or anonymized keystrokes.

- Browser Fingerprints (red): Signals collected in JavaScript in the CAPTCHA background.

- Forged CAPTCHA Payloads (green): When attackers attempted to reverse-engineer the CAPTCHA payload, sending CAPTCHA responses without executing it as intended.

As seen in the graph, a decrease in the number of forged payloads did not mean attackers stopped forging payloads; they just changed the way they forged them. However, these changes resulted in inconsistent client-side behavioral events (mouse movements, in this instance). When new detection models are deployed on the DataDome CAPTCHA, the most sophisticated attackers (bots as a service or large-scale scrapers) try to adapt quickly to increase their chance of passing the CAPTCHA, for example by generating more realistic mouse movements.

We also know attackers often try to use audio CAPTCHA with AI-based audio recognition techniques to forge CAPTCHAs more easily. While audio CAPTCHAs represented around 2.5% of total DataDome CAPTCHAs passed by real human users, they represented ~20.5% of all malicious CAPTCHA passing attempts. This proved that attackers tend to exploit accessibility features to bypass detection.
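The size of that gap is easier to see as a ratio. The short calculation below uses only the two percentages quoted above; note that they have different denominators (CAPTCHAs passed by humans versus malicious passing attempts), so the result is a rough comparison of shares rather than a precise rate.

```python
# Shares quoted in the paragraph above.
HUMAN_AUDIO_SHARE = 0.025       # audio share of CAPTCHAs passed by real users
MALICIOUS_AUDIO_SHARE = 0.205   # audio share of malicious passing attempts

# How many times larger the audio share is among malicious attempts.
over_representation = MALICIOUS_AUDIO_SHARE / HUMAN_AUDIO_SHARE

print(f"audio CAPTCHA over-representation: {over_representation:.1f}x")
```

In other words, the audio share among malicious attempts is roughly eight times its share among human passes.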

Bots are always forging CAPTCHAs using different technologies and techniques—and traditional CAPTCHAs are no longer adequate protection, particularly against sophisticated bots. If you only use a traditional CAPTCHA to protect critical parts of your website and/or mobile app, you’ve introduced a single point of failure that can be bypassed by advanced bots. Several bot frameworks and automation tools include CAPTCHA farm integration as well as AI image/audio recognition techniques to forge CAPTCHAs.

That’s why DataDome’s CAPTCHA is part of a complete bot and online fraud mitigation solution, including invisible signals and extra layers of security. Our CAPTCHA enables us to successfully stop millions of malicious CAPTCHA passing attempts while supporting a smooth user experience—even in the 0.01% chance a human sees the CAPTCHA.

Conclusion

Bot attacks—whether scraping, credential stuffing, scalping, or something else—are shifting and changing every day. Attackers already leverage a wide variety of techniques to distribute their attacks, and bots as a service make scaling sophisticated attacks easier than ever. These techniques are growing to be the new normal, the updated baseline for even simple attacks, making all bad bot traffic harder to mitigate.

Don’t let your business fall prey to the types of bot attacks happening across the internet. Our BotTester tool can give you a peek into the basic bots reaching your websites, apps, and/or APIs. Or, you can spot more sophisticated threats now with a free trial of DataDome. We’d love to bust bad bots before they can get to you and your business.
