2023-11-2 23:37:21 Author: securityboulevard.com

Bletchley declaration signed at UK’s AI Safety Summit: Not much substance, but unity is impressive.

Scores of countries got together in the English countryside this week, to chinwag about the safety of artificial intelligence. “AI should be designed, developed, deployed, and used, in a manner that is safe, … human-centric, trustworthy and responsible,” says their policy paper.

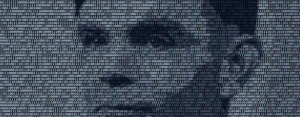

The location was Bletchley Park—the nexus of WWII codebreaking, which was in part led by Alan Turing (he of the eponymous test for AI chatbots). In today’s SB Blogwatch, we’ll not kick off about who invented the computer.

Your humble blogwatcher curated these bloggy bits for your entertainment. Not to mention: Metal Bulgaria.

Foo, Bar, Bletchley

What’s the craic? Kiran Stacey and Dan Milmo report—“UK, US, EU and China sign declaration of AI’s ‘catastrophic’ danger”:

“Rare show of global unity”

Twenty-eight governments signed up to the so-called Bletchley declaration on the first day of the AI safety summit, hosted by the British government. The countries … all agreed that artificial intelligence poses a potentially catastrophic risk [and] to work together on AI safety research.

…

[UK Prime Minister] Rishi Sunak welcomed the declaration: … “There will be nothing more transformative to the futures of our children and grandchildren than technological advances like AI. We owe it to them to ensure AI develops in a safe and responsible way, gripping the risks it poses early enough in the process.”

…

Sunak … decided to host the summit this summer after becoming concerned with the way in which AI models were advancing rapidly without oversight. … In a rare show of global unity … Chinese vice-minister of science and technology, Wu Zhaohui … told fellow delegates: “We uphold the principles of mutual respect, equality and mutual benefits. Countries regardless of their size and scale have equal rights to develop and use AI.”

Blah blah blah. What’s the point? Ingrid Lunden gets to it—“Politicians commit to collaborate to tackle AI safety”:

“Inclusivity and responsibility”

The world is locked in a race, and competition, over dominance in AI. But … a few of them appeared to come together to say that they would prefer to collaborate when it comes to mitigating risk. [The] new policy paper, called the Bletchley Declaration, … aims to reach global consensus on how to tackle the risks that AI poses now and in the future as it develops.

…

“We affirm that, for the good of all, AI should be designed, developed, deployed, and used, in a manner that is safe, in such a way as to be human-centric, trustworthy and responsible,” the paper notes. It also calls attention specifically to … large language models … and the specific threats they might pose for misuse. “Particular safety risks arise at the ‘frontier’ of AI, understood as being those highly capable general-purpose AI models.”

…

Political leaders in the opening plenary today spanned not just representatives from the biggest economies in the world, but also a number speaking for developing countries. … Collectively, they spoke of inclusivity and responsibility, but with so many question marks hanging over how that gets implemented, the proof of their dedication remains to be seen. … Another gathering is scheduled to be held in Korea in six months … and one more in France six months after that.

Winter is coming. So, HRH sticks to the script—“Countries agree to safe and responsible development of frontier AI”:

“Clear imperative”

To mark the opening of the Summit, His Majesty The King delivered a virtual address … as proceedings got underway. His Majesty pointed to AI being one of the ‘greatest technological leaps in the history of human endeavour’ and hailed the technology’s enormous potential to transform the lives of citizens across the world through better treatments for conditions like cancer and heart disease. The King also spoke of the ‘clear imperative to ensure that this rapidly evolving technology remains safe and secure’ and the need for ‘international coordination and collaboration.’

But why “Bletchley”? Věra Jourová clarifies:

The most symbolic place to talk safety of Artificial Intelligence is Bletchley Park, where the Enigma code was deciphered.

Sounds great. Not according to Julian Togelius—“AI safety regulation threatens our digital freedoms”:

This prospect is absolutely horrible. … What we should do instead is to recognize that freedom of speech includes freedom to compute, and ban any attempts to regulate large models. Of course, we can regulate products built on AI techniques.

…

There is no credible existential threat from AI. It is all figments of the hyperactive imaginations of some people, boosted by certain corporations who develop AI models and stand to win from regulating away their competition.

Ahh, regulatory capture—is that why we’re doing this? TheMaskedMan has a simpler explanation:

You may be right. But I think there’s also an element of the politician’s urge to “do something,” and a large dollop of seizing the opportunity to be seen to be doing something.

As bogeymen go, AI is ideal: Nobody knows much about it, it’s hyped to the rafters and poses little to no actual threat.

O RLY? Heart of Dawn would like to facepalm, but finds it overwhelming:

They are all worried about the fictitious idea that a sentient AI will kill us all—and not about the very real problems of dangerous confabulations, the harms caused by systemic biases in the training data, artistic theft, and deep fakes being used to spew misinformation. I don’t have enough palms or foreheads for this.

But, c’mon, China agreed to it, which is something, right? jonadab shakes his head:

China “agrees” to lots of things. In general, the CCP is very agreeable. It’s what they call, “Win-win mutual cooperation.”

…

They “win” once, when the agreement is made, because they get what they want. And then they win again when the subject comes back up, because they can get something else they want, in exchange for agreeing—again—to the thing they agreed to in the first place.

…

Win-win. Very agreeable. Also very efficient: The same carrot can be used many times.

Meanwhile, Steve Button presses on: [You’re fired—Ed.]

We could soon be as safe as North Korea, where there is 0% crime and everyone is happy all the time.

And Finally:

Andre follows in the footsteps of Kate Bush

Original Le Mystere des Voix Bulgares video:

One of Kate’s best collabs with Trio Bulgarka (it is nearly November 5th, after all):

(Don’t blame me for all the typos.)

You have been reading SB Blogwatch by Richi Jennings. Richi curates the best bloggy bits, finest forums, and weirdest websites … so you don’t have to. Hate mail may be directed to @RiCHi, @richij or [email protected]. Ask your doctor before reading. Your mileage may vary. Past performance is no guarantee of future results. Do not stare into laser with remaining eye. E&OE. 30.

Image sauce: parameter_bond (public domain; leveled and cropped)
