2023-12-12 15:21:15 Author: blogs.sap.com

Introduction

We often encounter scenarios where large-scale enterprise data needs to be replicated to cloud storage.

One way to do this in an SAP landscape is to use SAP Data Intelligence (SAP DI) Cloud to replicate ECC data.

In this blog we focus on the Replication Flow (RMS, Replication Management Service), which is the out-of-the-box data replication solution in SAP DI.

We will replicate our ECC tables through an SLT system into an Amazon S3 bucket.

Prerequisites

To follow this blog, you need to establish a connection between your SLT system and SAP Data Intelligence Cloud.

The following SAP Note describes how to set up this connection: https://me.sap.com/notes/2835207

Configuration

SLT

On the SLT side, we need to create a Mass Transfer ID, which SAP DI uses to connect to the data source, our ECC system.

To create an SLT configuration, go to transaction LTRC on the SLT system and click the paper icon to create a new configuration.

New SLT Configuration

On the Specify Transfer Settings tab, make sure to select “SAP Data Intelligence (Replication Management Service)”. If you select any other option, the RMS flow will not be able to connect to the ECC system through SLT.

Select SAP Data Intelligence (Replication Management Service)

A detailed blog on creating this SLT configuration can be found here: https://blogs.sap.com/2019/10/29/abap-integration-replicating-tables-into-sap-data-hub-via-sap-lt-replication-server/

Amazon S3

We also need to establish a connection to the target system, which is an S3 bucket.

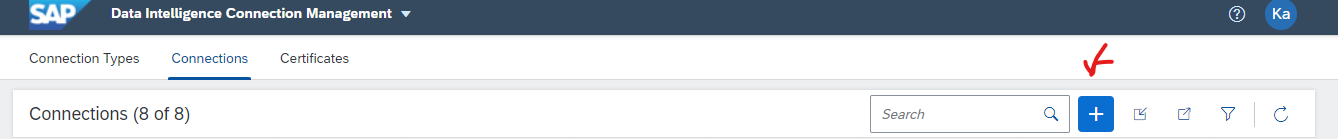

To do this, we create a new S3 connection in the Connections tab of SAP DI Cloud.

Create a new AWS S3 connection from DI

The connection is of type S3, and you need to fill in all mandatory fields, including the Root Path.
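As a rough mental model, the Root Path acts as the base under which the connection browses and writes objects (an assumption about how the path is resolved; SAP DI does this internally). The hypothetical helper below just joins a root path and a relative path while normalizing slashes, which is handy when you later look for the replicated files in the bucket. The function name and example paths are illustrative only.

```python
def resolve_object_path(root_path: str, relative_path: str) -> str:
    """Join a connection Root Path and a relative path into one object path.

    Hypothetical helper for illustration only; SAP DI resolves paths itself.
    """
    # Strip leading/trailing slashes so the result never contains "//"
    # and never starts with "/"; empty parts are skipped entirely.
    parts = [p for p in (root_path.strip("/"), relative_path.strip("/")) if p]
    return "/".join(parts)


# Example with a made-up root path and a made-up file inside a target container.
print(resolve_object_path("/replication/ecc/", "/MARA/initial/part-0.csv"))
# replication/ecc/MARA/initial/part-0.csv
```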

Connection

Make sure to test the connection and verify that it is successful.

Check Connection

Create the RMS Flow on SAP DI

In the DI tenant, we open the Modeler and select the Replication tab.

Create a new RMS Flow

After creating a new RMS flow and entering a name for it, we see its Properties tab.

We can now enter a description (optional) and select the source and target connections along with their containers.

For the source, we select the SLT connection we created, and the container is the Mass Transfer ID we configured for the RMS flow in the configuration phase.

For the target, we select the S3 connection along with a container. Here you can either enter a new container or select an existing one on S3.

RMS Properties

We can group the delta records in our RMS flow by date or by hour.
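To make the date/hour grouping option concrete, here is a small sketch of what grouping by each granularity means for a set of change records. The `changed_at` field, the record shape, and the label formats (`2023-12-12` and `2023-12-12/15`) are assumptions for illustration and are not the folder names RMS actually writes.

```python
from collections import defaultdict
from datetime import datetime


def delta_group_label(changed_at: datetime, granularity: str) -> str:
    """Return the group a delta record falls into for 'date' or 'hour' grouping.

    The label formats are hypothetical, not RMS folder names.
    """
    if granularity == "date":
        return changed_at.strftime("%Y-%m-%d")
    if granularity == "hour":
        return changed_at.strftime("%Y-%m-%d/%H")
    raise ValueError(f"unsupported granularity: {granularity!r}")


def group_delta_records(records: list[dict], granularity: str) -> dict[str, list[dict]]:
    """Bucket records by their (hypothetical) 'changed_at' timestamp."""
    groups = defaultdict(list)
    for rec in records:
        groups[delta_group_label(rec["changed_at"], granularity)].append(rec)
    return dict(groups)


changes = [
    {"key": "A", "changed_at": datetime(2023, 12, 12, 15, 5)},
    {"key": "B", "changed_at": datetime(2023, 12, 12, 15, 40)},
    {"key": "C", "changed_at": datetime(2023, 12, 12, 16, 10)},
]
print(sorted(group_delta_records(changes, "date")))  # ['2023-12-12']
print(sorted(group_delta_records(changes, "hour")))  # ['2023-12-12/15', '2023-12-12/16']
```

Hourly grouping produces more, smaller groups, which can be easier to process incrementally; daily grouping produces fewer, larger ones.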

The currently supported file types are csv, parquet, json, and jsonlines.

We can also choose gzip or snappy file compression.
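If you choose jsonlines with gzip compression, a replicated file can be inspected with nothing but the Python standard library once it has been downloaded from the bucket. The sketch below builds a small sample payload in memory instead of downloading a real file; the MARA-style field values are made up. Parquet files (and snappy compression) need a third-party library; pyarrow is a common choice for Parquet.

```python
import gzip
import io
import json


def read_jsonl_gzip(data: bytes) -> list[dict]:
    """Decode a gzip-compressed JSON Lines payload into a list of records."""
    with gzip.open(io.BytesIO(data), mode="rt", encoding="utf-8") as fh:
        # JSON Lines: one JSON object per line; skip blank lines.
        return [json.loads(line) for line in fh if line.strip()]


# Made-up sample rows standing in for a downloaded replication file.
sample = gzip.compress(
    b'{"MATNR": "000000000000000001", "MTART": "FERT"}\n'
    b'{"MATNR": "000000000000000002", "MTART": "ROH"}\n'
)
rows = read_jsonl_gzip(sample)
print(len(rows), rows[0]["MTART"])  # 2 FERT
```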

Selecting Tables to be replicated

In the Tasks tab of the RMS flow, we select the tables to be replicated.

We select the objects to be replicated in the Tasks tab

Some of the available options are:

Source Filter: Optionally define a filter that determines which data is considered for replication.

Mapping: Map existing table fields to custom fields or expressions in the target.

Target: The target container in the S3 bucket.

Load Type: Choose between Initial Only and Initial and Delta.
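Conceptually, a task applies the source filter first and then builds each target row from the mapping. The sketch below models that order with plain Python functions; it is an illustration of the idea, not how RMS is implemented, and the filter condition and target field names (`MATERIAL_TYPE`, `MATERIAL_KEY`) are hypothetical.

```python
from typing import Callable

Record = dict[str, object]


def apply_task(
    records: list[Record],
    source_filter: Callable[[Record], bool],
    mapping: dict[str, Callable[[Record], object]],
) -> list[Record]:
    """Conceptual model of a task: filter at the source, then map fields."""
    target_rows = []
    for rec in records:
        if not source_filter(rec):
            continue  # filtered out at the source, so it is never replicated
        # Each target field is computed from the source record.
        target_rows.append({field: expr(rec) for field, expr in mapping.items()})
    return target_rows


source_rows = [
    {"MATNR": "M-01", "MTART": "FERT"},
    {"MATNR": "M-02", "MTART": "ROH"},
]
rows = apply_task(
    source_rows,
    source_filter=lambda r: r["MTART"] == "FERT",  # hypothetical filter
    mapping={
        "MATNR": lambda r: r["MATNR"],  # existing field copied 1:1
        "MATERIAL_TYPE": lambda r: r["MTART"],  # renamed custom field
        "MATERIAL_KEY": lambda r: f"{r['MATNR']}-{r['MTART']}",  # expression
    },
)
print(rows)
# [{'MATNR': 'M-01', 'MATERIAL_TYPE': 'FERT', 'MATERIAL_KEY': 'M-01-FERT'}]
```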

Note: If we select a container in the S3 bucket that already contains data, we need to select the Truncate option, which deletes all existing data in that container before replication starts. Otherwise, the RMS flow raises an error and does not start.
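The Truncate rule from the note can be expressed as a small pre-flight check, for example before reusing a container. This is a hypothetical helper that only encodes the behavior described in the note; the object keys are illustrative, and it does not call SAP DI or S3.

```python
def plan_target_container(existing_keys: list[str], truncate: bool) -> tuple[bool, list[str]]:
    """Hypothetical pre-flight check for the Truncate rule.

    Returns (can_start, keys_deleted_before_replication).
    """
    if not existing_keys:
        return True, []  # empty container: nothing to delete, flow can start
    if truncate:
        # Truncate deletes all existing data in the container before replication.
        return True, sorted(existing_keys)
    # Existing data without Truncate: the flow reports an error and does not start.
    return False, []


print(plan_target_container(["MARA/initial/part-0.csv"], truncate=False))  # (False, [])
```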

Activating the RMS Flow

First, we validate the RMS flow for errors by clicking the Validate button next to Save.

Validate the RMS Flow

After successful validation, we can activate and run the flow.

Deploy the RMS Flow

Once the RMS flow has been started, we click the “Go to Monitoring” button to navigate to the DI Monitoring screen.

There we can see the metrics of the executed RMS flow along with its current status and health.

Monitoring

Files on the Amazon S3 Bucket

During replication, files are created in the S3 bucket under the Initial and Delta folders in the target containers defined in the RMS flow.

Note: The Delta folder is created only for the Initial and Delta load type.
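Given a listing of object keys from the bucket, the folder rule above can be checked with a short script. The sketch assumes the load folders appear as path segments named Initial and Delta (matched case-insensitively); the listing itself, including the date subfolder, is a hypothetical example, so adjust it to what you actually see in your bucket.

```python
EXPECTED_FOLDERS = {
    "Initial Only": {"initial"},
    "Initial and Delta": {"initial", "delta"},
}


def load_folders(keys: list[str]) -> set[str]:
    """Collect which load folders ('initial' / 'delta') appear in the object keys."""
    found = set()
    for key in keys:
        # Only directory segments count; the last segment is the file name.
        for segment in key.lower().split("/")[:-1]:
            if segment in ("initial", "delta"):
                found.add(segment)
    return found


def layout_matches(keys: list[str], load_type: str) -> bool:
    """True if no load folder appears that this load type should not produce."""
    return load_folders(keys) <= EXPECTED_FOLDERS[load_type]


listing = [
    "ecc/MARA/Initial/part-0.csv",
    "ecc/MARA/Delta/2023-12-12/part-0.csv",
]
print(layout_matches(listing, "Initial and Delta"))  # True
print(layout_matches(listing, "Initial Only"))  # False
```

The subset check deliberately does not require a Delta folder for Initial and Delta, because it may not exist yet if no changes have been replicated.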

S3 Initial Load Folder

S3 Delta Folder

Conclusion

This is a basic use case showing how to replicate ECC tables to an Amazon S3 bucket using RMS. RMS can also be used for more complex scenarios and objects, such as CDS views.

Thank you for reading. Feel free to share your comments and feedback.
