2023-12-20 15:45:28 Author: blogs.sap.com

“Phi-2, introduce yourself with a rhyme considering Christmas is coming soon!”

***

“Ho ho there, merry Christmas friend

I’m Phi-2 with a big heart and mind

Born on the first of December

I love to bring joy, no matter the weather

With a smile as bright as the North Star

And a warmth that’s sure to heal any scar

I’ll light up your day with my wit and charm

So come on by, let’s have some fun!”

***

🎄AI Core, Ollama and Microsoft’s Phi-2

As the holiday season approaches, bringing with it a time of reflection and anticipation for new beginnings, the world of artificial intelligence continues to unwrap its own gifts of innovation and progress.

Among these technological treasures is Microsoft’s Phi-2 Small Language Model (SLM), a marvel of efficiency that challenges the notion that bigger is always better. With the festive spirit in the air, let’s embark on a journey to deploy this compact yet powerful AI model on SAP AI Core, part of the robust SAP Business Technology Platform (BTP), using the versatile Ollama tool.

What makes Ollama so special?

Ollama is an AI tool that lets you run large language models such as Llama 2, and many more, locally! Its special features include local execution of language models, options for model customization, a user-friendly setup process, LangChain support and more. It also has an active community!

What is Phi-2 and why should you care?

Phi-2 is a 2.7-billion-parameter language model developed by Microsoft Research that demonstrates state-of-the-art performance among base language models with fewer than 13 billion parameters. On various benchmarks it matches or outperforms models up to 25 times larger, thanks to advances in training data quality and innovative scaling techniques. Yet its size is just 1.7 GB!
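A quick back-of-the-envelope calculation puts that number in perspective. The sketch below makes two assumptions: 1 GB = 10^9 bytes, and an unquantized model stores each parameter as a 16-bit float. Under those assumptions, 2.7 billion parameters would need about 5.4 GB, while 1.7 GB works out to roughly 5 bits per parameter. That suggests the 1.7 GB build is a quantized variant. This is an inference from the arithmetic, not a statement from Microsoft.

```python
# Back-of-the-envelope size check for Phi-2.
# Assumptions: 1 GB = 10**9 bytes; "unquantized" means 16-bit floats.
PARAMS = 2.7e9          # parameter count quoted for Phi-2
DOWNLOAD_BYTES = 1.7e9  # size quoted in this post


def bits_per_param(size_bytes: float, params: float) -> float:
    """Average number of bits stored per parameter."""
    return size_bytes * 8 / params


def fp16_size_gb(params: float) -> float:
    """Size in GB if every parameter were stored as a 16-bit (2-byte) float."""
    return params * 2 / 1e9


if __name__ == "__main__":
    print(f"{bits_per_param(DOWNLOAD_BYTES, PARAMS):.2f} bits/param")  # 5.04 bits/param
    print(f"{fp16_size_gb(PARAMS):.1f} GB at FP16")                     # 5.4 GB at FP16
```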

Phi-2 uses “textbook-quality” data and synthetic datasets to teach common sense reasoning and general knowledge, which is augmented with high-quality web data. It also benefits from scaled knowledge transfer from its predecessor, Phi-1.5, leading to faster training convergence and improved benchmark scores. Despite not being fine-tuned or aligned through reinforcement learning, Phi-2 shows better behavior regarding toxicity and bias compared to similar models.

Bringing it together: SAP AI Core

So, I thought it would be super cool to bring it all together: AI Core running Ollama, and Ollama running Phi-2! Ollama lets you spawn an LLM (or here, an SLM) with a simple REST call. It fetches the model and provides a unified prompting interface. Very handy!

Building a Docker image of Ollama for AI Core

We need to make a few small adjustments to the original Ollama Docker image. First, we introduce an nginx proxy that adds the required /v1 (or any other version) prefix to the URL path. The proxy listens on port 11435, forwards requests for /v1/api/... to Ollama’s /api/... on port 11434, and passes the responses back.
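To make the mapping concrete, here is a small Python illustration of what the nginx rule in the Dockerfile below does (`location /v1/api/` with `proxy_pass http://localhost:11434/api/`). When `proxy_pass` contains a URI, nginx replaces the part of the request path that matched the location with that URI. The function `rewrite_path` is hypothetical. It models only this path mapping, not nginx itself.

```python
# Illustration only: mimics the prefix replacement nginx performs for
#   location /v1/api/ { proxy_pass http://localhost:11434/api/; }
# rewrite_path is a hypothetical helper, not part of nginx or Ollama.
from typing import Optional

LOCATION_PREFIX = "/v1/api/"        # prefix exposed on port 11435
UPSTREAM = "http://localhost:11434"  # where Ollama itself listens
UPSTREAM_PREFIX = "/api/"            # Ollama's own API prefix


def rewrite_path(request_path: str) -> Optional[str]:
    """Return the upstream URL for a request path, or None if the location does not match."""
    if not request_path.startswith(LOCATION_PREFIX):
        return None  # no matching location, so the request is not proxied to Ollama
    rest = request_path[len(LOCATION_PREFIX):]
    return UPSTREAM + UPSTREAM_PREFIX + rest


print(rewrite_path("/v1/api/version"))  # http://localhost:11434/api/version
print(rewrite_path("/v1/api/pull"))     # http://localhost:11434/api/pull
print(rewrite_path("/api/version"))     # None
```

Responses come back over the same proxied connection unchanged. A client therefore only ever sees the /v1/api/... paths on port 11435.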

Also, on AI Core, which uses KServe to serve models, all containers run as the user nobody. That name is conventionally used for a user identifier that owns no files, is in no privileged groups, and has no abilities except those that every other user has (Wikipedia definition).
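This also explains the /nonexistent/.ollama directory in the Dockerfile below. On Debian- and Ubuntu-based images, the nobody account’s home directory is /nonexistent. Ollama keeps its data, including downloaded models, under ~/.ollama by default. The sketch below parses an /etc/passwd-style entry to show where ~/.ollama resolves for nobody. The sample line is the typical Debian/Ubuntu entry and is an assumption, so check /etc/passwd in your own image.

```python
# Sketch: where does ~/.ollama end up for the "nobody" user?
# SAMPLE_ENTRY is the usual Debian/Ubuntu line (an assumption; check your image).
import posixpath

SAMPLE_ENTRY = "nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin"


def home_dir(passwd_line: str) -> str:
    """Return the home directory (6th of the 7 colon-separated passwd fields)."""
    fields = passwd_line.strip().split(":")
    if len(fields) != 7:
        raise ValueError(f"not a passwd entry: {passwd_line!r}")
    return fields[5]


def ollama_dir(passwd_line: str) -> str:
    """Ollama's default data directory, $HOME/.ollama, for that user."""
    return posixpath.join(home_dir(passwd_line), ".ollama")


print(ollama_dir(SAMPLE_ENTRY))  # /nonexistent/.ollama
```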

Therefore a few chmod commands are needed so that the model download, the nginx start-up and similar steps work. Take the original Ollama Dockerfile, keep it up to the lines shown below, and replace everything after them with the following commands:

<...>

ENV LD_LIBRARY_PATH=/usr/local/nvidia/lib:/usr/local/nvidia/lib64

ENV NVIDIA_DRIVER_CAPABILITIES=compute,utility

# Below is the added config for nginx proxying of the data >>>>

RUN apt-get update && apt-get install -y nginx curl

RUN echo "events { use epoll; worker_connections 128; } http { server { listen 11435; location /v1/api/ { proxy_pass http://localhost:11434/api/; proxy_set_header Host \$host; proxy_set_header X-Real-IP \$remote_addr; proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for; proxy_set_header X-Forwarded-Proto \$scheme; }} }" > /etc/nginx/nginx.conf

RUN chmod -R 777 /var/log/nginx && chmod -R 777 /var/lib/nginx && chmod -R 777 /run

RUN mkdir -p /nonexistent/.ollama

RUN chmod -R 777 /nonexistent/.ollama

EXPOSE 11435

# Run Ollama and proxy

CMD service nginx start && /bin/ollama serve

# <<<< End of modified Dockerfile

If you use Windows, it’s best to build the image in WSL2. Once the build is complete (around 5 minutes, depending on your hardware), you can test it by running:

docker run --user nobody -p 11435:11435 -d <your docker image and tag>

If it starts up, check the container logs (for example with docker logs) for lines like the following. If you see them, you’re good to go to the next step.

2023-12-20 15:44:22 * Starting nginx nginx

2023-12-20 15:44:22 ...done.

...

2023-12-20 15:44:22 2023/12/20 06:44:22 routes.go:895: Listening on [::]:11434 (version 0.0.0)

...

Deploying Ollama container on AI Core

You can use the following YAML serving template to deploy it on AI Core.

apiVersion: ai.sap.com/v1alpha1
kind: ServingTemplate
metadata:
  name: ollama
  annotations:
    scenarios.ai.sap.com/description: "Run an Ollama server on AI Core"
    scenarios.ai.sap.com/name: "ollama-scenario"
    executables.ai.sap.com/description: "Run an Ollama server on AI Core"
    executables.ai.sap.com/name: "ollama-executable"
  labels:
    scenarios.ai.sap.com/id: "ollama-server"
    ai.sap.com/version: "0.1"
spec:
  template:
    apiVersion: "serving.kserve.io/v1beta1"
    metadata:
      annotations: |
        autoscaling.knative.dev/metric: concurrency
        autoscaling.knative.dev/target: 1
        autoscaling.knative.dev/targetBurstCapacity: 0
      labels: |
        ai.sap.com/resourcePlan: infer.s
    spec: |
      predictor:
        minReplicas: 1
        maxReplicas: 1
        containers:
        - name: kserve-container
          image: <your docker image and tag>
          ports:
            - containerPort: 11435
              protocol: TCP

You can also try a different resource plan; it should also work with trial and basic. The template above uses infer.s, the smallest GPU-powered plan. You can find out more about the plans in the SAP Help for AI Core.
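The post does not show how the deployment itself is created from this template. One option is SAP AI Core’s AI API, sketched below under several assumptions:

- The template has already been synced to AI Core.
- A configuration references the executable (the template’s metadata.name, here ollama) and the scenario (the scenarios.ai.sap.com/id label, here ollama-server).
- A deployment is then created from that configuration.

The configuration name ollama-config and the helper names are hypothetical. The AI API URL, token and resource group are placeholders. Check the request bodies against the SAP Help for AI Core before relying on them.

```python
# Sketch: create an AI Core configuration and a deployment for the Ollama template.
# Assumptions: the ServingTemplate is synced; ai_api_url, token and resource_group
# are your own values; "ollama-config" is a hypothetical configuration name.
import json
import urllib.request


def configuration_body(name: str, executable_id: str, scenario_id: str) -> dict:
    """Body for POST /v2/lm/configurations (no parameters or artifacts needed)."""
    return {
        "name": name,
        "executableId": executable_id,  # metadata.name of the template
        "scenarioId": scenario_id,      # scenarios.ai.sap.com/id label
        "parameterBindings": [],
        "inputArtifactBindings": [],
    }


def deployment_body(configuration_id: str) -> dict:
    """Body for POST /v2/lm/deployments."""
    return {"configurationId": configuration_id}


def post_json(url: str, body: dict, token: str, resource_group: str) -> dict:
    """POST a JSON body to the AI API and return the decoded JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "AI-Resource-Group": resource_group,
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def create_deployment(ai_api_url: str, token: str, resource_group: str) -> dict:
    """Create a configuration, then a deployment from it; returns the deployment reply."""
    base = ai_api_url.rstrip("/")
    config = post_json(
        f"{base}/v2/lm/configurations",
        configuration_body("ollama-config", "ollama", "ollama-server"),
        token,
        resource_group,
    )
    return post_json(
        f"{base}/v2/lm/deployments",
        deployment_body(config["id"]),
        token,
        resource_group,
    )
```

Nothing runs on import. Call create_deployment(...) with your own AI API URL, token and resource group, then watch the deployment status in AI Core until it is running.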

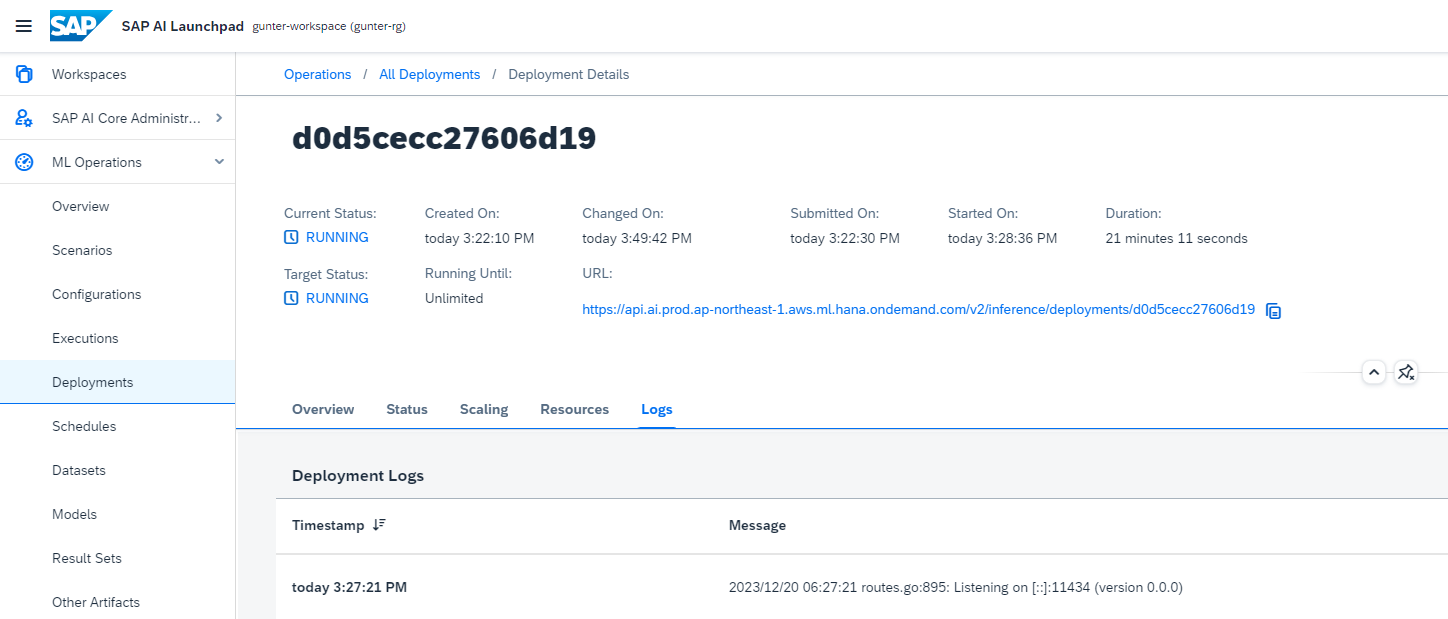

Successful deployment on AI Core.

If the deployment succeeded, you’ll see a log like the one above in SAP AI Core. Now we’re ready to test it.

Testing Ollama and Phi-2 on SAP AI Core

We’re good to test our installation now. Let’s call the Ollama API to get the version:

GET https://api.ai.prod.ap-northeast-1.aws.ml.hana.ondemand.com/v2/inference/deployments/d0x5cecc27606d19/v1/api/version

It should reply with 0.0.0. It only reports the actual Ollama version after the first model has been pulled, which may be a small bug. Keep in mind that the URL above depends on your deployment, and that you need an OAuth2 token obtained with your AI Core client credentials.
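Because every call to the deployment needs a bearer token, a small helper is handy. The sketch below assumes the standard OAuth2 client-credentials flow with the values from your AI Core service key: it sends grant_type=client_credentials to <url>/oauth/token, with the client ID and secret as HTTP Basic credentials. The function names are hypothetical, and the URL layout simply mirrors the request above.

```python
# Sketch: fetch an OAuth2 token (client credentials) and call Ollama's version
# endpoint through AI Core. auth_url, client_id, client_secret and ai_api_url are
# placeholders taken from your AI Core service key.
import base64
import json
import urllib.parse
import urllib.request


def get_token(auth_url: str, client_id: str, client_secret: str) -> str:
    """Client-credentials grant against <auth_url>/oauth/token."""
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    req = urllib.request.Request(
        f"{auth_url.rstrip('/')}/oauth/token",
        data=urllib.parse.urlencode({"grant_type": "client_credentials"}).encode(),
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["access_token"]


def inference_headers(token: str, resource_group: str) -> dict:
    """Headers every call to the deployment needs."""
    return {"Authorization": f"Bearer {token}", "AI-Resource-Group": resource_group}


def ollama_url(ai_api_url: str, deployment_id: str, api_path: str) -> str:
    """Build .../v2/inference/deployments/<id>/v1/api/<api_path>."""
    return (f"{ai_api_url.rstrip('/')}/v2/inference/deployments/"
            f"{deployment_id}/v1/api/{api_path.lstrip('/')}")


def get_version(ai_api_url: str, deployment_id: str, token: str, resource_group: str) -> str:
    """GET the version endpoint; Ollama replies with {"version": "..."}."""
    req = urllib.request.Request(
        ollama_url(ai_api_url, deployment_id, "version"),
        headers=inference_headers(token, resource_group),
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["version"]
```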

Let’s deploy the Phi-2 model on Ollama. It’s super simple! Remember to always set the AI-Resource-Group HTTP header to the resource group in which you deployed Ollama on AI Core.
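The pull request can also be scripted. By default, Ollama’s /api/pull streams its progress as newline-delimited JSON objects. Each object has a status field, plus total and completed byte counts while layers download. The sketch below therefore reads the reply line by line and prints a percentage. The helper names are hypothetical. Here, url is the deployment’s .../v1/api/pull address, and headers must carry the bearer token and the AI-Resource-Group header. Depending on buffering in nginx and the AI Core gateway, the progress lines may arrive in bursts rather than one by one.

```python
# Sketch: pull a model through the proxy and summarize Ollama's streamed
# progress (one JSON object per line). url and headers are placeholders.
import json
import urllib.request
from typing import Iterable, Optional


def progress_percent(event: dict) -> Optional[float]:
    """Percentage for events that carry 'total' and 'completed', else None."""
    total, done = event.get("total"), event.get("completed")
    if not total or done is None:
        return None
    return 100.0 * done / total


def summarize(lines: Iterable[bytes]) -> str:
    """Print progress for each event and return the last status seen."""
    status = ""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        event = json.loads(raw)
        status = event.get("status", status)
        pct = progress_percent(event)
        print(status if pct is None else f"{status}: {pct:.0f}%")
    return status


def pull_model(url: str, headers: dict, name: str = "phi") -> str:
    """POST {"name": name} to .../v1/api/pull and follow the progress stream."""
    req = urllib.request.Request(
        url,
        data=json.dumps({"name": name}).encode("utf-8"),
        headers={**headers, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return summarize(resp)  # the response object iterates line by line
```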

POST https://api.ai.prod.ap-northeast-1.aws.ml.hana.ondemand.com/v2/inference/deployments/d0dxxxcc27606d19/v1/api/pull

Body:

{

"name": "phi"

}

Wait for the download to finish. You can follow the progress in the logs, where you will likely see a message like this:

2023/12/20 07:04:18 download.go:123: downloading bd608f954559 in 17 100 MB part(s)

Prompting with Ollama

Once the model is downloaded, let’s ask Phi-2 something! Ollama is lean but powerful: you can even stream the responses (e.g. when using LangChain). Here we turn streaming off and send the request to the deployment’s /v1/api/generate path.
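The same request can be sent from Python. The sketch below posts a non-streaming body to the /v1/api/generate URL you pass in. It then turns the timing fields of the reply into throughput. Ollama reports durations in nanoseconds, so tokens per second is eval_count / eval_duration × 10^9. For the reply shown in this post, that is 271 tokens in about 3.13 s, or roughly 86.5 tokens/s. The helper names are hypothetical, and url and headers are placeholders.

```python
# Sketch: ask Phi-2 a question via /v1/api/generate (non-streaming) and
# compute throughput from the timing fields (Ollama reports nanoseconds).
import json
import urllib.request


def generate(url: str, headers: dict, prompt: str, model: str = "phi") -> dict:
    """POST a non-streaming generate request and return the parsed reply."""
    body = {"model": model, "prompt": prompt, "stream": False}
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={**headers, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def tokens_per_second(reply: dict) -> float:
    """Generated tokens divided by generation time in seconds."""
    return reply["eval_count"] / (reply["eval_duration"] / 1e9)


# Timing fields copied from the reply shown in this post:
sample = {"eval_count": 271, "eval_duration": 3131939000, "total_duration": 3290809057}
print(f"{tokens_per_second(sample):.1f} tokens/s")           # 86.5 tokens/s
print(f"{sample['total_duration'] / 1e9:.2f} s in total")   # 3.29 s in total
```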

{

"model": "phi",

"prompt":"Explain the concept of electromagnetic induction and how it's related to smartphones?",

"stream": false

}

The reply from Phi-2 is absolutely stunning. It’s a 1.7 GB model; how can it be?

{

"model": "phi",

"created_at": "2023-12-20T07:11:15.31425186Z",

"response": " Sure, electromagnetic induction is a phenomenon where a changing magnetic field generates an electric current in a conductor. This principle is used in smartphones to charge the battery and power various components.\n\nWhen you connect your smartphone to a charging cable or wireless charger, it creates a magnetic field around the charging pad/wire. The magnetic field then induces a voltage in the phone's coil of wire, which generates an electric current that charges the battery. \n\nThis process is based on Faraday's Law of Electromagnetic Induction, which states that the magnitude of the induced EMF (electromotive force) is proportional to the rate of change of magnetic flux through a loop. In simpler terms, as the magnetic field changes around your phone, it induces an electrical current in its coil, which charges the battery.\n\nElectromagnetic induction is also used in smartphones for other purposes, such as wireless charging and electromagnetic interference (EMI) shielding. Wireless charging works by using inductive charging pads that create a magnetic field that transfers energy to the phone's receiver coil, without any direct contact. EMI shielding uses materials with high electrical conductivity, like copper, to block unwanted signals from entering or leaving the phone. \n\n summary, electromagnetic induction is essential in charging and powering smartphones, as well as other wireless communication devices.\n",

"done": true,

"total_duration": 3290809057,

"load_duration": 687895,

"prompt_eval_count": 50,

"prompt_eval_duration": 155678000,

"eval_count": 271,

"eval_duration": 3131939000

}

Closing

Let’s close with a Limerick by Phi-2:

“Can you write a limerick poem about Christmas time, snow and AI? Output as JSON like {‘limerick’: ‘text’}”

And Phi-2 says:

{ 'limerick':

"There once was an AI who loved Christmas,

Its heart filled with joy and festive cheer.

As snow fell gently down,

It learned to play the piano and the flute so dear,

Bringing the holiday spirit to everyone near."

}

Merry Christmas everyone! 🎅

References

AI Core: https://help.sap.com/docs/sap-ai-core?locale=en-US

Ollama: https://github.com/jmorganca/ollama/tree/main

Phi-2: https://www.microsoft.com/en-us/research/blog/phi-2-the-surprising-power-of-small-language-models/
