2024-01-01 23:04:08 Author: eyalitkin.wordpress.com

Recap

In part 1 of this blog series we presented the “Reverse RDP” attack vector and the security hardening patch we designed and helped integrate into FreeRDP. The patch itself was targeting the software design issue that led to many of the info-leak vulnerabilities that were discovered in the project.

Just to recap, my first encounter with FreeRDP was in 2018, when I was looking for client-side vulnerabilities in code that handles incoming RDP messages. After several rounds of vulnerability research and coordinated disclosures, I grew tired of this game of whack-a-mole. Surely something could be done to handle the software design flaw that leads to many of these vulnerabilities. This led to the patch I submitted to FreeRDP in 2021, which was included in the project’s recent (3.0.0) release.

In this second part of the series, we will discuss the wider issues encountered during the research on FreeRDP. How come the software design flaw in the project wasn’t fixed between 2018 and 2021, despite repeated coordinated disclosures of vulnerabilities that stemmed from it? How come multiple research teams reported such issues while none of them helped fix the core flaw? Does the InfoSec community even incentivize researchers to help resolve large software issues in the projects they encounter?

As in part 1, this article will combine two perspectives: that of the vulnerability researcher who researched FreeRDP (me in the past) and that of the software developer who helped them fix their design issue (me in the present). Hopefully, this will help the reader understand the full picture of the interaction between the InfoSec world and the Development world, an interaction that in my opinion should be significantly improved.

Let’s start.

Denial, Anger, Bargaining, Depression and Acceptance

The vulnerabilities we found during the first iteration of our research on the (then novel) Reverse RDP attack vector were the following:

- rdesktop (19): CVE 2018-8791, CVE 2018-8792, CVE 2018-8793, CVE 2018-8794, CVE 2018-8795, CVE 2018-8796, CVE 2018-8797, CVE 2018-8798, CVE 2018-8799, CVE 2018-8800, CVE 2018-20174, CVE 2018-20175, CVE 2018-20176, CVE 2018-20177, CVE 2018-20178, CVE 2018-20179, CVE 2018-20180, CVE 2018-20181, CVE 2018-20182

- FreeRDP (6): CVE 2018-8784, CVE 2018-8785, CVE 2018-8786, CVE 2018-8787, CVE 2018-8788, CVE 2018-8789

- Mstsc (1): No CVE

26 vulnerabilities overall, spanning 3 different implementations. The vulnerability we found in mstsc.exe allows an attacker to leverage a copy-paste event during the RDP connection to drop fully controlled files in arbitrary paths in the victim’s file system. As an example, such an attack primitive could allow us to write our Malware to the user’s Startup folder, leading to its execution once the victim’s computer reboots. This attack was demonstrated in the following video.

Despite our initial research results, which in our opinion were quite convincing, we were surprised by Microsoft’s cool reaction to our disclosure. The initial response to our attack vector was that it wasn’t interesting:

“Thank you for your submission. We determined your finding is valid but does not meet our bar for servicing. For more information, please see the Microsoft Security Servicing Criteria for Windows (https://aka.ms/windowscriteria).”

MSRC

Behind the scenes the reply was something along the lines of “This attack vector requires an attacker to already have a foothold on some machine in the network, so it’s not really interesting”.

And yet, behind the scenes something did move. In the following months we saw new developments on multiple fronts:

- We started joint follow-up research with a researcher from Microsoft (Dana Baril), which was later accepted to Black Hat USA 2019 (link).

- We understood that the vulnerability is also applicable to Hyper-V, as it uses RDP behind the scenes for the graphical user interface to the virtual machine (link to demo video).

- Microsoft realized that the vulnerability should indeed be fixed, and allocated a CVE ID for it: CVE 2019-0887 (later on, Microsoft also allocated CVE 2020-0655, as their initial fix was incomplete).

- Microsoft’s internal red team started independent vulnerability research, and presented the 13 vulnerabilities it found, along with the importance of the attack vector (also for Windows Sandbox), at Microsoft’s Blue Hat IL 2020 conference (link to the talk).

One of the vulnerabilities found by Microsoft was fixed in August 2019 and was included in the group of vulnerabilities that later received the title DejaBlue. A close examination of the patch showed that it was a variant of a vulnerability we helped fix in FreeRDP 10 months earlier:

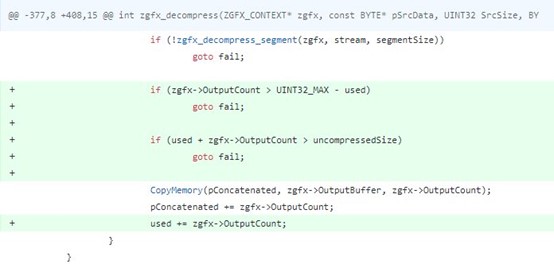

Figure 1: The fix for CVE 2018-8785 found in FreeRDP (2018) – An added check against Integer Overflow.

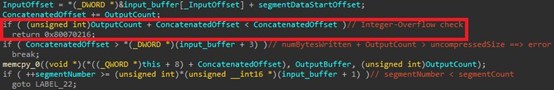

Figure 2: The fix for CVE 2019-1181/2 (DejaBlue) found by Microsoft in mstsc.exe (2019) – An added check against Integer Overflow.
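The two figures are screenshots, so the actual patched code is not reproduced here. As a rough illustration of what “an added check against Integer Overflow” usually means in this kind of code, here is a minimal sketch in C. The names `checked_mul` and `alloc_pixels` and their parameters are hypothetical and are not taken from FreeRDP or mstsc.exe; the sketch only shows the general pattern of validating a size computation before using it for an allocation.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical helper: multiply two sizes, reporting failure instead of
 * silently wrapping around when the product does not fit in size_t. */
bool checked_mul(size_t a, size_t b, size_t *out)
{
    if (a != 0 && b > SIZE_MAX / a)
        return false;           /* a * b would overflow */
    *out = a * b;
    return true;
}

/* Hypothetical allocation routine for attacker-controlled dimensions.
 * Without the checks, a wrapped product would yield a buffer that is far
 * smaller than the amount of data later written into it. */
void *alloc_pixels(uint32_t width, uint32_t height, uint32_t bytes_per_pixel)
{
    size_t pixels, total;

    if (width == 0 || height == 0 || bytes_per_pixel == 0)
        return NULL;
    if (!checked_mul(width, height, &pixels))
        return NULL;
    if (!checked_mul(pixels, bytes_per_pixel, &total))
        return NULL;

    return malloc(total);
}
```

The important design choice is that the check happens before the multiplication, using a division against `SIZE_MAX`, since checking the result afterwards is too late once it has already wrapped.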

First Observations on the Interface Between both Worlds

In contrast to the average project, in Microsoft’s case we have 3 distinct aspects:

- A large software corporation: A massive software development organization with a given opinion regarding “interruptions” from security researchers.

- Organization with an in-house research group: The declared goal of the in-house (product-security) research group is to improve the ability of the company to analyze, handle and prevent security flaws in their products. As such, it is also tasked with easing the process of interacting with external security researchers (us).

- An industry leader: Microsoft is one of the world’s software development behemoths, and for multiple years now has also been investing large amounts of resources in its in-house security group, aiming to position itself as an infosec industry leader. A clear indication of this is the company’s investment in various Blue Hat conferences worldwide, and the high quality of the research talks presented at these conferences, whether by external researchers or by Microsoft’s own employees.

Under usual circumstances, we would assume that the initial response we received is yet another indication of the downsides of a large corporation (> 200,000 employees): inability to convince managers, square thinking, etc., and would leave it at that. In such a case, the smaller open source projects might offer more hope, as there is a higher chance of them being attentive to our report.

After several months, as if to strengthen our hypothesis, Microsoft’s own research group showed that they agree on the importance of our attack vector. They held their own internal research and even presented it at the company’s own security conference – Blue Hat IL. All of these are possible indications of a miscommunication issue inside a large organization – The research group failed to convince the development group.

Going over the first two points, a large corporation and an in-house security group, it seems that the company did benefit from the existence of the group (which smaller organizations lack), and yet the bureaucracy of the corporation was the determining factor (which might be a promising sign for the smaller projects).

However, what about the third point? At least some of the researchers in Microsoft’s own security group were aware of our publication, which was the background for the joint research project we presented together at Black Hat USA. As such, they were also aware of the criticism directed at their company’s initial response regarding the unimportance of the attack vector. Was their effort to position themselves as industry leaders of any help when trying to mitigate the bad first impression caused by the development side of the company?

Sadly, once again the answer is no. Not only were Microsoft’s research steps conducted independently, without any contact whatsoever with us, but even the mention of the importance of the attack vector was hidden deep down at minute 34 of their talk, and was only used as background to describe their internal red team research.

Had we not attended the conference itself, we might have even missed the fact that the company had a change of heart regarding the importance of our attack vector. Moreover, conference attendees who weren’t previously familiar with our publication might have received the impression that Microsoft was presenting a novel attack vector of its own finding. This is due to the fact that the slides didn’t include any reference whatsoever to prior work. Given the industry’s best practice of clearly presenting prior work to give context and background (as is done in research in general and not only in infosec), this decision by Microsoft is surprising to say the least.

From an industry-wide viewpoint, it is my opinion that the last point is the most important of them all. The key reason, as will be explained later on, is that the most important thing one must understand regarding software development groups is that they are always busy. There is always one more release cycle, one more customer and more things to fix. As such, the overall approach is prioritization based: “How big is the customer waiting for the upcoming version? Will they be irritated if it is postponed?”, “What is the benefit from the efforts allocated to this new feature? Would it be better to allocate these resources elsewhere?”.

In this world, the interface between the development world and the infosec world should be based on parameters for better prioritization:

- Legislation/Certification: Security products for “improving” the development lifecycle (security scan as part of release checklist) will be integrated when regulation dictates their use.

- Rules of thumb/Top 10 Vulnerabilities: As mentioned in OWASP’s TOP 10:

“Globally recognized by developers as the first step towards more secure coding.”

OWASP Top 10

If internally, as an information security community, we can’t reach a wide consensus on the threats / code issues that require attention, we can’t really expect the development world to successfully keep track of new updates in this field. Without such updates, the ability to effectively prioritize tasks is limited, leading to allocation of precious development resources to less important security tasks or even worse, to non-security development tasks.

In contrast to the disclosure process with Microsoft, when we reached out to the smaller projects, they knew the clock was ticking, as they had 90 days to remediate the issue and release a patch. They all responded professionally, to the point, and released fast and successful fixes to the reported issues. And still, the elephant in the room is that the reported issues were addressed in “crisis mode”, under pressure, and in an isolated manner.

Do we really expect that a single development team would successfully deduce from our findings that there is a wider issue in their code that requires attention? After all, they only see what we reported to them, without the larger context of what we found in their competitors’ products. Do we expect that they will define high priority follow-up tasks to keep on fixing the root cause that led to the isolated issues we reported to them? After all, these projects don’t have internal security teams to help them with the prioritization, with designing the best remediation method, or even with reviewing the quality of the chosen fix.

At the end of the day, even if the development team conducts an internal postmortem process, they will have to do so alone, without our help. After all, we already returned to our publication process, published a blog or a conference talk about our project, and left them behind. We already finished our role in the patch process, why should we care?

My personal opinion, and I’ll dive into it more in the next sections, is that in the best case scenario we have unrealistic expectations of the development groups. In the worst case, we don’t even have any such expectation of them, nor do we have any interest in even having a wider fix in the product, as this isn’t the goal of our research.

Further Analysis of the Vulnerabilities in FreeRDP Over the Years

A deep dive into the vulnerabilities that were reported in FreeRDP over the years sheds light on some interesting trends (stats extracted in September 2023):

- 91 vulnerabilities were reported in the product, the first of which was in 2013.

- 2013-2017 – 10 vulnerabilities (in all years combined).

- 2018 – 7 vulnerabilities (6 from our research + 1 more from another researcher).

- 2019 – 2 vulnerabilities.

- 2020 – 40 vulnerabilities.

- 2021 – 4 vulnerabilities.

- 2022 – 11 vulnerabilities.

- 2023 – 17 vulnerabilities.

- As mentioned above, 23 out of the 81 vulnerabilities reported in FreeRDP from 2018 onward (that is, as part of our research or afterwards) would have been blocked (or were already blocked) as part of the fix we integrated.

In addition, while it is hard to isolate the factors contributing to the significant up-tick in the reported vulnerabilities after our publication (beginning of 2019), our best guess is that the key factor was the increased COVID-19 driven transition to FreeRDP-based solutions. Still, one should remember the spike in RDP-related research publications in 2019 (Reverse RDP, BlueKeep, DejaBlue, etc.), so we can’t point to a single contributor to the above trend.

And while we can’t have a clear answer to the above question, we could still analyze the reported vulnerabilities and try to derive information from them. A short analysis of the reported vulnerabilities shows that the vast majority of them are variants of publications that were published before the pandemic, to the point of additional reports on the same files or functions in which earlier vulnerabilities were reported, or variants of mstsc.exe vulnerabilities.

As a matter of fact, this indicates that publications do make a dent, at least in the infosec world, which managed to successfully use this past work and translate it to further findings. This was witnessed across both research teams and independent researchers all around the world (based on the languages in the reports submitted to the project).

This is pretty good news regarding the effectiveness of vulnerability research publications, at least within the infosec community. It also raises the question regarding the benefit of such isolated fixes, given the need to repeatedly find “the same” vulnerabilities across products.

Impressions From the Fixing Process

This is my second time contributing a security mechanism to a large open source project, instead of the usual isolated fix for a vulnerability or two. The past case was a joint work with the maintainers of glibc to integrate the Safe-Linking defense mechanism into the Heap. My impressions after the joint work with both of these projects include a lot of positive things to say about the software development world; however, they don’t shed a very positive light on the infosec world.

This is the place to add a disclaimer, as there were some projects, companies in most of the cases, in which the disclosure process was somewhere on the range from unpleasant to sheer hostility. I have no intention to claim that the software development world (or any other entity for that matter) is perfect. However, my experience is that when switching from a coordinated disclosure process (90 days and all the shenanigans) to a sincere attempt to help fix the product (without a CVE ID), one would often find an attentive party on the other side.

Cooperation

In both cases the maintainers reacted well above my expectations, and in a very short time span helped me integrate the fix. In glibc’s case I submitted a patch, and in FreeRDP’s case my initial patch was the basis for a more complete fix that was done by the maintainers themselves, under my review.

Willingness to Improve the Project’s Security

Once again, in both cases I received the impression that the maintainers care about the security of their projects, even if it doesn’t get a high priority in the ever-expanding list of tasks. That is, given an external event that shifts their focus into a security fix with a good ROI, they would be more than happy to assist and will be highly motivated when doing so. As we mentioned earlier, the key word is prioritization: our ability to help shift the prioritization in our favor when trying to integrate a security fix.

Even more so, in the case of glibc, the first fix was integrated in version 2.32 and sparked the interest of the maintainers in additional security mitigations, way beyond the scope of said fix. They started reading writeups of CTF challenges involving malloc() and the Heap, which led to additional hardening efforts from their side. These eventually led to the removal of the malloc() hooks in version 2.34, after an independent ROI calculation by the maintainers themselves.

On the bright side, these were far better results than I could ever imagine when I first reached out to the project.

However, on the flip side, this tells a really gloomy story about the infosec world. The maintainers of the project had to read our writeups from CTFs to understand how to improve the security of their product. This was supposed to be our job – To collect this information and reach out to them to help them fix it. We had fun in CTFs, toying with the security of glibc’s heap, and no one thought “wait a minute, we should really help solve these issues we exploit”.

Which brings us to the next section.

Lack of Security Mitigations/Improvements

If I tried to count how many times I have seen a publication by security researchers about a security improvement they helped introduce to a given product (let this be a home exercise for our readers), I would probably still have some fingers to spare. And even among these publications, the vast majority will probably be from blue teams describing the improvements they helped introduce to the product of their own employer.

One might argue that the small number of such security mitigations is simply a direct result of their technical complexity. However, if we take Safe-Linking as an example, this was far from a revolutionary invention. We are simply talking about masking of singly-linked pointers (XOR with a random value) so that attackers won’t be able to modify them to point at an arbitrary address. Supporting evidence for the simplicity of the mechanism can be found in Chrome, which independently introduced an almost identical mechanism to their own Heap implementation way back in 2012.
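To make the simplicity of the idea concrete, here is a minimal sketch in C of pointer masking for a singly-linked free list. It is modeled loosely on the approach glibc adopted, where the mask is derived from the address at which the pointer is stored (shifted by the page bits), so that it inherits randomness from ASLR. The type `chunk`, the functions `mask_ptr`, `list_push` and `list_pop`, and the 4 KiB page assumption are all hypothetical names and choices made for this illustration, not glibc’s actual code.

```c
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12   /* assumption for this sketch: 4 KiB pages */

typedef struct chunk {
    struct chunk *next; /* always stored masked, never as a raw pointer */
} chunk;

/* Mask or unmask a pointer using the address of the slot that holds it.
 * XOR is its own inverse, so the same function does both directions. */
static inline chunk *mask_ptr(chunk **slot, chunk *ptr)
{
    return (chunk *)(((uintptr_t)slot >> PAGE_SHIFT) ^ (uintptr_t)ptr);
}

/* Push a free chunk onto the list, masking the stored link. */
void list_push(chunk **head, chunk *c)
{
    c->next = mask_ptr(&c->next, *head);
    *head = c;
}

/* Pop a chunk from the list, unmasking the stored link. */
chunk *list_pop(chunk **head)
{
    chunk *c = *head;
    if (c == NULL)
        return NULL;
    *head = mask_ptr(&c->next, c->next);
    return c;
}
```

An attacker who overwrites `next` without knowing the heap address bits can no longer choose where the unmasked pointer will land. As far as I am aware, glibc additionally verifies the alignment of the unmasked pointer, which this sketch omits for brevity.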

Just for comparison, in 2005 we already had Safe-Unlinking for doubly-linked lists. Still, despite the simplicity of Safe-Linking, glibc’s singly-linked lists only received their security mitigation in 2020. Even when the mechanism was developed for Chrome, not only wasn’t it shared with the industry, it didn’t even find its way to Google’s own gperftools, which was the precursor to Chrome’s Heap implementation.
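For comparison, the Safe-Unlinking check for doubly-linked lists is just as small. The sketch below is a generic illustration of the idea in C, with a hypothetical `node` type and `safe_unlink` function; it is not the code of any particular allocator, and it returns an error where a real allocator would typically abort.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct node {
    struct node *fd;    /* forward link */
    struct node *bk;    /* backward link */
} node;

/* Remove n from its list only if both neighbours still point back at it.
 * A corrupted fd/bk pair fails the check, so the two pointer writes
 * below cannot be turned into a write to an arbitrary address. */
bool safe_unlink(node *n)
{
    if (n->fd->bk != n || n->bk->fd != n)
        return false;   /* corrupted list; a real allocator would abort */

    n->fd->bk = n->bk;
    n->bk->fd = n->fd;
    return true;
}
```

In both mechanisms the cost is a couple of instructions per list operation, which is part of why the 15-year gap between them is so striking.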

I have no doubt that security mitigations do require a security perspective, even if sometimes they are simple in nature. And yet, is there any viable explanation for the lack of such mitigations when compared to the vast number of security researchers and offensive-related publications?

Defensive vs Offensive Research

Shouldn’t our expectations of independent (non-blue team) researchers include improving the security of products beyond just an isolated fix for a vulnerability or two? The answer to this question has a direct implication on the fate of open source projects, which often don’t have the means to keep their own internal security team to help them with their product.

Trying to think about it (one more exercise for our readers), even when there was some defensive publication by an independent researcher, at which stage was it presented? What attention did it get? What was the feedback to it? Are we really incentivizing researchers to help protect the world? Or is it only a story we sell to ourselves and others while we once in a while tell an innocent development team they have something to fix? This development team suddenly needs to fix a vulnerability (under tight time restrictions), all so that we could present to the world the mistake we found in their code.

The Motivation for a Publication, and the Price of Publicity

In my role as a security researcher in a team focused mainly on publication-related research, I tried to focus on topics of personal interest while balancing between interest, professionalism and the expectations of my employer. And yet, whether it was the publication of a new research tool, a novel attack vector (Vulnerability), analysis method (Malware) or security mitigation, the focus was always on the goal that was set for the research. This goal is never criticizing the studied product.

Still, there is an ever-present tension between the glorification of our findings (false presentation of a threat larger than what we really found) and avoiding criticizing the project in which we found the flaw (the successful “we” vs those stupid “them”). I would also admit that I didn’t feel 100% at ease with the publications of my research projects, especially given the fact they passed through a long chain involving marketing and journalists until they finally reached the public. A process that often seemed like a long game of “Chinese whispers”.

The natural inclination of each of the nodes in this long chain is to glorify the story. This is an ever-present tension that the security researcher must fight to maintain professionalism on the one hand, and uphold the project’s good name on the other hand. A researcher that enters this situation while focusing on their own reputation and prestige, while ignoring the project’s side of the story, will easily be tempted to look down on the development team, which would only derail the relations between the two parties.

None of this really contributes to the establishment of a healthy relationship between researchers and developers.

My impression after almost 3.5 years of vulnerability-related security research for publication purposes is that my two most impactful research projects were one that focused on identification of exploits used in Malware, of all things, and the security mitigation in glibc (the fix to FreeRDP was submitted by me personally after I “switched sides” to the development world). And the latter received feedback that ranged from “How am I supposed to sell it to soccer moms?” to sheer hostility: “Why would you even want to improve malloc()’s security?”. The former reactions were to be expected: it is really hard to explain to the public what I did, and my job description was to publish things for brand recognition.

And yet, the latter were those that really hurt, as they arrived from security researchers. While some were joking “It would make the CTF challenges harder”, some really originated from researchers who saw the world only through the lens of an attacker, as if this was the only thing that mattered, and then why would I ever want to block an attacker?

Conclusions – Developers Should be Part of the Solution

In the beginning of the series, I used a defender/attacker cat-and-mouse analogy in which they chase code vulnerabilities. However, this analogy was somewhat flawed. The defender in this scenario is the security researcher that claims to help the world (often by finding an isolated vulnerability and reporting it), while the attacker is often the exact same researcher, who will leverage this vulnerability to brag about a new CVE ID in their new blog post/conference presentation.

While there are cases of “real” attackers that will exploit the vulnerability for profit/harm, we aren’t really playing this game with them. In how many cases in the last few years were there reports of vulnerabilities in popular open source projects that were exploited in-the-wild by threat actors, and that were fixed only after the attacks were detected? I can’t think of more than a few. Obviously, it is plausible that researchers will close a vulnerability that is actively being used by threat actors without our knowledge. However, in such cases we don’t get any indication, and so we don’t have any means to assess the impact of the defense we brag about when declaring we helped fix an isolated vulnerability.

In practice, we are forgetting there is another side to this story – The development team of the project itself. When was the last time we treated them as the “defender” in this story? Is there any justification for us treating them as a passive player that only introduces new code vulnerabilities once in a while?

Based on my personal experience of successful cooperation during the introduction of a security mitigation, it is evident that the development teams are in fact motivated to improve their product. In contrast, it seems that the motivation is actually lacking on our side of the story. We are simply dumping demands on them to fix isolated code vulnerabilities once in a while, we measure how fast they do it, and then we present their flaws to the world.

In light of all of this, my opinion is that it is time we start treating the development team as an equal partner in this effort, and try to cooperate with them so that together we would be the defender in this story. This should be done through meaningful cooperation, and not only through us pointing at isolated code issues that they should fix.
