Too Long; Didn't Read

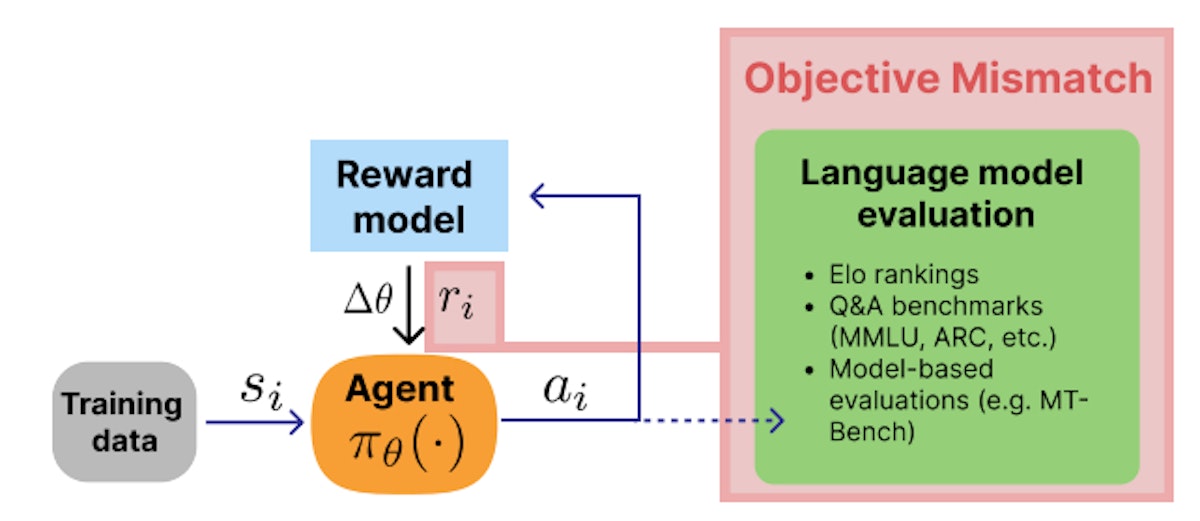

Objective mismatch in RLHF for large language models weakens the link between reward model scores and downstream performance. This paper explores the origins and manifestations of the problem, along with potential solutions, connecting insights from the NLP and RL literature. The aim is to foster better RLHF practices and more effective, user-aligned language models.

@feedbackloop

The FeedbackLoop: #1 in PM Education

The FeedbackLoop offers premium product management education, research papers, and certifications. Start building today!
