Once in a while, a customer may give you a virtual disk image for an appliance that needs to be analyzed over the course of an incident response engagement. At G DATA ADAN we’re typically not fans of full disk imaging due to the time it takes and the logistics it involves, but sometimes it cannot be avoided – especially if the system in question does not boot properly anymore, or if it’s a specialized system where we don’t know in advance what data exactly we’d like to be collected.

VMDK is short for Virtual Machine Disk and is a format that was established by VMware. It used to be a proprietary format, but in 2011 its specification was opened up because it became one of the formats that can be used for disks in the Open Virtualization Format (OVF), which is used for sharing/exchanging virtual machines across virtualization solutions.

One might be tempted to think that all files ending with a .vmdk extension have the same internal structure, but it turns out this is not the case. Some VMDK files are “flat images”, which means they’re bit-by-bit representations of the stored disk without any extra header/footer. The descriptor for such a disk is stored separately, as a small .vmdk file containing only the descriptor. Then there are sparse files that have a header beginning with the VMDK magic (little endian, so KDMV as an ASCII sequence). ESXi also stores snapshots as .vmdk files, which have a COWD header magic (copy-on-write disk). As you can see, saying “it’s a .vmdk file” doesn’t reveal much about what we’re dealing with, and what one will find in the image depends greatly on context.
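To make the distinction concrete, here is a minimal sketch that guesses the flavour from the first bytes of a file. The function name and return strings are made up for illustration, and the check for a text descriptor assumes that descriptors start with the comment line `# Disk DescriptorFile`. A flat extent has no magic at all, so it can only be reported as "unknown".

```python
import struct

KDMV_MAGIC = 0x564D444B  # stored little endian, so the file starts with b"KDMV"
COWD_MAGIC = 0x44574F43  # stored little endian, so the file starts with b"COWD"


def classify_vmdk(head: bytes) -> str:
    """Guess the VMDK flavour from the first bytes of a file (hypothetical helper)."""
    if len(head) >= 4:
        (magic,) = struct.unpack_from("<I", head)
        if magic == KDMV_MAGIC:
            return "hosted sparse extent (KDMV)"
        if magic == COWD_MAGIC:
            return "ESXi copy-on-write disk (COWD)"
    # Assumption: text descriptors begin with this comment line.
    if head.lstrip().startswith(b"# Disk DescriptorFile"):
        return "text descriptor"
    # Flat extents are raw disk data and carry no VMDK signature.
    return "unknown (possibly a flat extent)"
```

Reading the first sector with `open(path, "rb").read(512)` and passing it to the function is enough for a first triage.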

After that short primer, back to our case: The virtual appliance provided by the customer had a problem. It was not possible to import it with official VMware tooling (the import failed at 99%), and open-source tooling such as qemu-img complained about an “invalid footer”. Our first guess was that the file was somehow corrupted or perhaps encrypted, since it had high entropy. A quick look at the file’s hex representation revealed that we were dealing with the KDMV variant. This is fully described in a document titled Virtual Disk Format 5.0 that can be found on the Internet (vmdk_50_technote.pdf). In particular, we’re dealing with a Hosted Sparse Extent Header in the first sector. This structure contains many interesting fields such as total capacity, size of metadata at the beginning of the file, and compression algorithm. The only supported algorithm is Deflate, and that’s what our file happens to be using, which explains the entropy that we saw.

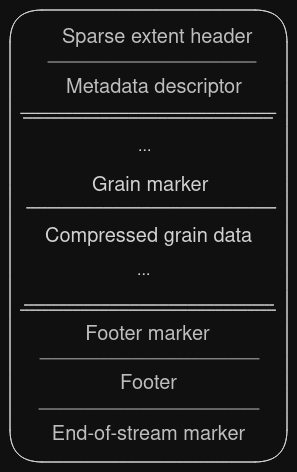
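For readers who want to inspect such a header themselves, the following is a sketch of how the first sector could be unpacked. The field order and widths follow the packed SparseExtentHeader struct as we read it in the technote (SectorType taken as an unsigned 64-bit integer). The Python field names and the constant names are our own, so treat the layout as an assumption to verify against the document.

```python
import struct
from typing import NamedTuple

SECTOR_SIZE = 512
KDMV_MAGIC = 0x564D444B
COMPRESSION_DEFLATE = 1  # assumption: 0 means "none", 1 means Deflate

# Packed little-endian layout, 512 bytes in total including trailing padding.
HEADER_FORMAT = "<IIIQQQQIQQQB4sH433x"


class SparseExtentHeader(NamedTuple):
    magic: int
    version: int
    flags: int
    capacity: int           # in sectors
    grain_size: int         # in sectors
    descriptor_offset: int  # in sectors
    descriptor_size: int    # in sectors
    num_gtes_per_gt: int
    rgd_offset: int         # in sectors
    gd_offset: int          # in sectors
    overhead: int           # sectors of metadata at the start of the file
    unclean_shutdown: int
    newline_check: bytes    # four characters used to detect newline mangling
    compress_algorithm: int


def parse_header(sector: bytes) -> SparseExtentHeader:
    """Unpack the first sector of a KDMV file into named fields."""
    if len(sector) < SECTOR_SIZE:
        raise ValueError("need a full 512-byte sector")
    header = SparseExtentHeader._make(
        struct.unpack(HEADER_FORMAT, sector[:SECTOR_SIZE])
    )
    if header.magic != KDMV_MAGIC:
        raise ValueError("not a KDMV sparse extent")
    return header
```

Multiplying `capacity` or `overhead` by `SECTOR_SIZE` gives the corresponding values in bytes.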

The rest of the file contains so-called Stream-optimized compressed extents, which are basically chunked compressed disk sectors called grains, sometimes interspersed with metadata/tables to aid seeking for specific sectors. The format was designed for network streaming, e.g., from an NFS share. The documentation reveals that it’s also supposed to have a footer (simply a copy of the initial sector containing the KDMV header) and an end-of-stream marker (effectively an all-zero sector). Those two things appear to be missing in our file, since it seemingly ends in the middle of compressed data. This in turn means the file is an incomplete copy.
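A quick way to test for this kind of truncation is to look at the last two sectors of the file. The sketch below assumes, as described above, that a complete file ends with a footer sector that starts with the KDMV magic, followed by an all-zero end-of-stream sector. The function name is hypothetical.

```python
import os

SECTOR_SIZE = 512


def has_footer_and_eos(path: str) -> bool:
    """Return True if the file ends with a KDMV footer and an all-zero sector."""
    size = os.path.getsize(path)
    if size < 2 * SECTOR_SIZE:
        return False
    with open(path, "rb") as f:
        f.seek(size - 2 * SECTOR_SIZE)
        footer = f.read(SECTOR_SIZE)
        end_of_stream = f.read(SECTOR_SIZE)
    return footer[:4] == b"KDMV" and end_of_stream == bytes(SECTOR_SIZE)
```

A result of `False` does not say where the file was cut off, only that the expected trailer is absent.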

It’s unclear whether something happened to the appliance in the customer’s infrastructure that caused its disk to be truncated, or if this is a result of an incomplete export to external media. We could of course now go and bug the customer and ask them to check this and provide us with the data again, but that would be slightly annoying for everyone involved. And after all, we have over 300 GB of compressed data, surely something can be done?

Given the truncation, all official tools and libraries will refuse to open this image. This is understandable for a normal scenario, because it’s better to fail instantly rather than having the user attempt to mount an incomplete disk, which could very well cause inexplicable errors down the line.

Working in a forensics environment, one is somewhat used to having incomplete data or other cases where things went awry and were left in an inconsistent state. We’re no strangers to carving and given the data that we have, it should be possible to get something usable out of it. In other words, “yes, we know it’s an incomplete image, just give us everything you have”.

Time for custom tooling! We need to:

- Inspect the file header and ensure it’s indeed a format we understand.

- Skip the header/metadata so that we’re at the position of the first actual grain.

- Read grains and decompress them until we reach the end of the file. Skip any other markers such as grain tables; we don’t need them because we’re doing a full sequential read.

- Write decompressed data to an output file as we go, in the end yielding a flat disk image.
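The steps above can be sketched roughly as follows. This is not the code of our tool, just an illustration under a few assumptions: each grain starts with a 12-byte marker (64-bit sector number, 32-bit compressed size), a size of zero denotes a metadata marker whose first field counts the metadata sectors that follow, grains are zlib-wrapped Deflate streams, and grains appear in ascending sector order. All function names are made up.

```python
import struct
import zlib

SECTOR_SIZE = 512
GRAIN_MARKER = struct.Struct("<QI")  # sector number (LBA), compressed size in bytes


def align_up(offset: int, alignment: int = SECTOR_SIZE) -> int:
    """Round offset up to the next multiple of alignment (no-op if aligned)."""
    return (offset + alignment - 1) // alignment * alignment


def write_zeroes(dst, count: int, chunk: int = 1 << 20) -> None:
    """Write count zero bytes in bounded chunks."""
    zeroes = bytes(chunk)
    while count > 0:
        n = min(count, chunk)
        dst.write(zeroes[:n])
        count -= n


def extract_grains(src, dst, start_offset: int) -> int:
    """Decompress grains sequentially into dst; return bytes of disk written.

    start_offset is where the first grain begins, i.e. the header's
    overhead value converted to bytes.
    """
    src.seek(start_offset)
    out_pos = 0
    while True:
        marker = src.read(GRAIN_MARKER.size)
        if len(marker) < GRAIN_MARKER.size:
            break  # end of file, or file cut off inside a marker
        val, size = GRAIN_MARKER.unpack(marker)
        if size == 0:
            # Metadata marker: skip the rest of its sector plus val sectors.
            src.seek(align_up(src.tell()) + val * SECTOR_SIZE)
            continue
        compressed = src.read(size)
        if len(compressed) < size:
            break  # file was truncated in the middle of this grain
        try:
            data = zlib.decompress(compressed)
        except zlib.error:
            break  # damaged grain, stop with what we have
        target = val * SECTOR_SIZE
        if target > out_pos:
            write_zeroes(dst, target - out_pos)  # sparse gap
        dst.write(data)
        out_pos = target + len(data)
        src.seek(align_up(src.tell()))  # next marker is sector-aligned
    return out_pos
```

Stopping at the first short read or decompression error is the simplest policy for a truncated file. A more aggressive variant could try to salvage the partial last grain with `zlib.decompressobj()`.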

Should be simple enough, right? In fact, implementing this was relatively straightforward. The documentation helpfully provides C structs that can be copied without modification. Other than that, mostly some file I/O code and handling of zlib for decompression is required.

Caveats:

- Struct members of type SectorType need to be multiplied by 512 to obtain a value in bytes. It’s not entirely clear whether this sector size is always used, but for the time being we treat it as a constant.

- Grains are aligned to sector boundaries, meaning most of the time a seek is required after reading the compressed data. The rare case that you end up exactly on a sector boundary does happen, though!

- Remember it’s a sparse file, so not all sectors are represented in the image. If there’s a gap in the sector numbers from one grain to the next, zeroes need to be written to the output file in order to account for the sparse sectors.

We’ve open sourced the tool in case someone else ever finds themselves in this situation. You can find it on GitHub.

The tool worked well and produced a 1 TiB raw image from a ~300 GiB input VMDK. In the end around 12.5 GiB were missing from the raw image, which isn’t all that much, relatively speaking. So it was our hope that no data of importance was lost (i.e., mostly empty space at the end of the disk) and that we could bring it into a mountable state.

Once again, we checked the hex representation of the first few sectors of the newly produced image to see if we could figure out at a glance what we were dealing with. Indeed, an LVM2 magic quickly showed up. However, trying to activate the volume group…

$ vgscan

WARNING: Device /dev/loop49 has size of 2074520165 sectors which is smaller than corresponding PV size of 2097152000 sectors. Was device resized?

WARNING: One or more devices used as PVs in VG foobarvg have changed sizes.

Found volume group "foobarvg" using metadata type lvm2

The volume group foobarvg (name changed) was found, but it doesn’t like the reduced size at all. While the scan command just emits a warning, trying to activate the group with vgchange -ay fails with an invalid argument error.

What can we do? Well, not much. All we can do is try to set the image size to the proper size (essentially padding the file) and hope LVM will be appeased. Multiplying the PV size output by the command above by 512 will yield a number in bytes that can be passed to truncate -s, which will fill up the file with zeroes to the specified size. This by the way matches the number of sectors that was also given in the VMDK header as capacity.
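As a worked example of that arithmetic, 2097152000 sectors times 512 bytes is 1073741824000 bytes. The sketch below does the same padding from Python instead of truncate -s; the function name is hypothetical, and it refuses to shrink a file so that no data is cut off by accident.

```python
import os

SECTOR_SIZE = 512


def pad_image(path: str, pv_sectors: int) -> int:
    """Extend the image at path with zeroes to pv_sectors * 512 bytes."""
    target = pv_sectors * SECTOR_SIZE
    if os.path.getsize(path) > target:
        raise ValueError("image is larger than the PV size, refusing to shrink")
    os.truncate(path, target)  # extends the file; the new tail reads as zeroes
    return target
```

On filesystems with sparse file support the padding does not occupy real disk space.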

With the size adjustment in place, the volume group could be activated successfully and we found it to contain a single logical volume. This in turn held an ext4 filesystem, but mounting it at first failed with a “bad superblock” error. We feared the worst. However, the dumpe2fs tool was able to display proper information about the filesystem with no obvious errors, meaning it couldn’t have been completely broken. Running fsck on the volume found a couple of metadata errors that it was able to fix, and afterwards we were able to mount it without issues. My colleague Backi brought a tool called xmount to my attention that enables one to have a writable “virtual” disk image backed by a write cache (basically a copy-on-write mechanism using FUSE). That way we could use fsck without modifying the dumped image that took over an hour to create, just in case something went wrong.

We were finally able to access the relevant log data of the appliance and didn’t even have to resort to actual carving. Hooray!