Like Xavier (diary entry "Quick Forensics Analysis of Apache logs"), I often have to analyze clients' log files too.

I have private tools to help me with that; one of them is csv-stats.py (which I just published).

When I receive log files from clients, I have to check that the format is OK and that the files don't contain malformed content.

My tool csv-stats.py allows me to do just that.

I took an old Apache log and converted it with mal2csv, as Xavier showed in his diary entry.
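mal2csv's internals aren't described here, so as a rough illustration of what such a conversion involves, below is a minimal Python sketch that parses the Apache "combined" log format with a regular expression and writes CSV. It is not mal2csv: the function name `apache_to_csv` and the regex are assumptions for illustration, and it produces 9 fields per line, so its layout will not match mal2csv's output. Instead of silently dropping lines that don't match, it returns their line numbers, since those are exactly the malformed entries worth a closer look.

```python
import csv
import io
import re

# Hypothetical converter (NOT mal2csv): one regex for the Apache "combined" log format.
COMBINED = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def apache_to_csv(lines):
    """Convert combined-format log lines to CSV text.

    Returns (csv_text, rejected), where rejected lists the 1-based numbers
    of the lines that did not match the expected format.
    """
    out = io.StringIO()
    writer = csv.writer(out)
    rejected = []
    for number, line in enumerate(lines, start=1):
        match = COMBINED.match(line)
        if match:
            writer.writerow(match.groups())  # 9 fields, in regex group order
        else:
            rejected.append(number)
    return out.getvalue(), rejected
```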

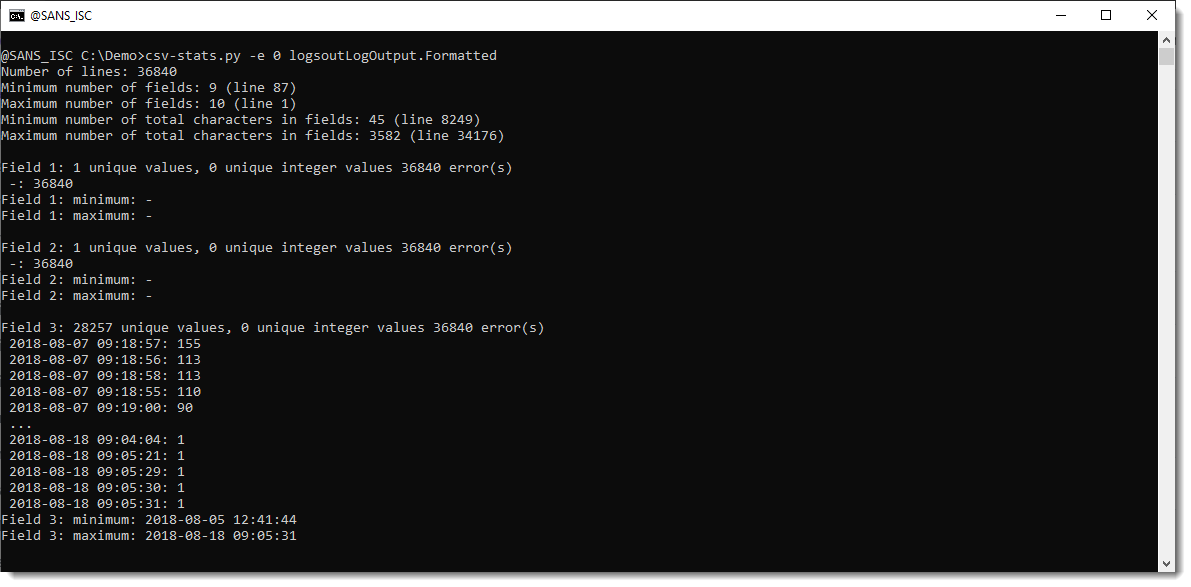

Then I ran my tool on it (I'm using option -e 0 to exclude field 0, so that I don't have to redact source IPv4 addresses):
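The idea behind an exclude option like `-e 0` can be sketched in a few lines of Python. This is not csv-stats.py's code; `load_rows` and its `exclude` parameter are hypothetical. Each row becomes a dictionary keyed by the original zero-based field number, so excluded fields (here, the source IP addresses) never reach any later statistics, while the remaining fields keep their original numbers.

```python
import csv
import io


def load_rows(text, exclude=()):
    """Read CSV text into dicts {field_number: value}, dropping excluded fields.

    Field numbers are zero-based and are NOT renumbered after exclusion,
    so a report on field 3 still refers to the original fourth column.
    """
    excluded = set(exclude)
    rows = []
    for row in csv.reader(io.StringIO(text)):
        rows.append({i: v for i, v in enumerate(row) if i not in excluded})
    return rows
```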

It shows information like the number of lines, the number of fields, ...

Here I have 10 fields, but there is one line (87) with only 9 fields, so that's something to take a closer look at.
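A consistency check of this kind (count the lines, count the fields per line, flag the lines that deviate) can be approximated as follows. `field_count_report` is a hypothetical helper, not part of csv-stats.py, and it assumes the most common field count is the expected one. It counts CSV records, which correspond to physical lines only as long as no quoted field contains a newline.

```python
import csv
import io
from collections import Counter


def field_count_report(text):
    """Return (record count, histogram of field counts, outlier record numbers).

    Outliers are the 1-based record numbers whose field count differs from
    the most common field count (assumed to be the "correct" one).
    """
    counts = [len(row) for row in csv.reader(io.StringIO(text))]
    histogram = Counter(counts)
    usual = histogram.most_common(1)[0][0] if counts else 0
    outliers = [n for n, c in enumerate(counts, start=1) if c != usual]
    return len(counts), histogram, outliers
```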

And then there are statistics per field (which are numbered starting from zero, because this file has no header with field names).

Field number 3 allows me to verify the period covered by the logs (minimum and maximum string values).
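Per-field minimum and maximum string values, with fields numbered from zero, could be computed like this; `string_ranges` is a hypothetical helper, not taken from csv-stats.py. One caveat: string comparison only reflects chronological order when the timestamp format sorts lexically, as ISO 8601 (`2024-01-31 23:59:59`) does. With a day-first format like Apache's default `10/Oct/2000:13:55:36 -0700`, the string minimum and maximum need not be the earliest and latest entries.

```python
import csv
import io


def string_ranges(text):
    """Map each zero-based field number to its (min, max) value, compared as strings."""
    ranges = {}
    for row in csv.reader(io.StringIO(text)):
        for index, value in enumerate(row):
            low, high = ranges.get(index, (value, value))
            ranges[index] = (min(low, value), max(high, value))
    return ranges
```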

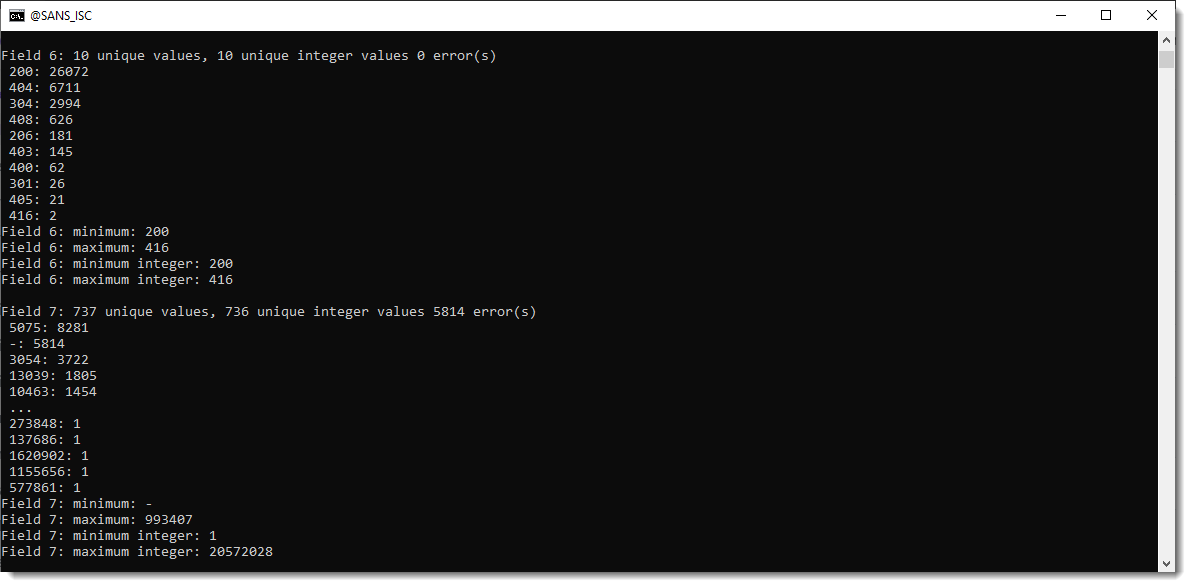

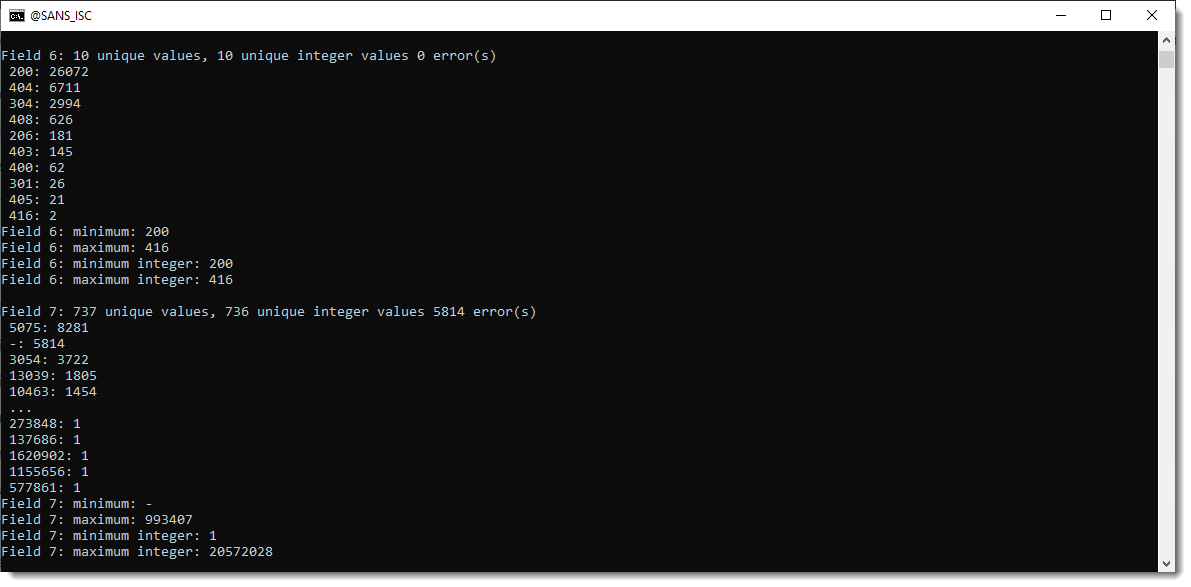

Minimum and maximum integer values are also calculated if fields contain integer values:
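A minimal sketch of the integer statistics, under an assumption that may not match csv-stats.py's actual rule: a field only gets an integer minimum and maximum if every one of its values parses as an integer. `integer_ranges` is a hypothetical name. Under this rule, a single `-` (which Apache's `%b` format writes when no bytes were sent) is enough to exclude a field.

```python
import csv
import io


def integer_ranges(text):
    """For fields whose every value parses as an int, return {field: (min, max)}."""
    columns = {}
    for row in csv.reader(io.StringIO(text)):
        for index, value in enumerate(row):
            columns.setdefault(index, []).append(value)
    ranges = {}
    for index, values in columns.items():
        try:
            numbers = [int(v) for v in values]
        except ValueError:
            continue  # at least one non-integer value: no integer stats for this field
        ranges[index] = (min(numbers), max(numbers))
    return ranges
```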

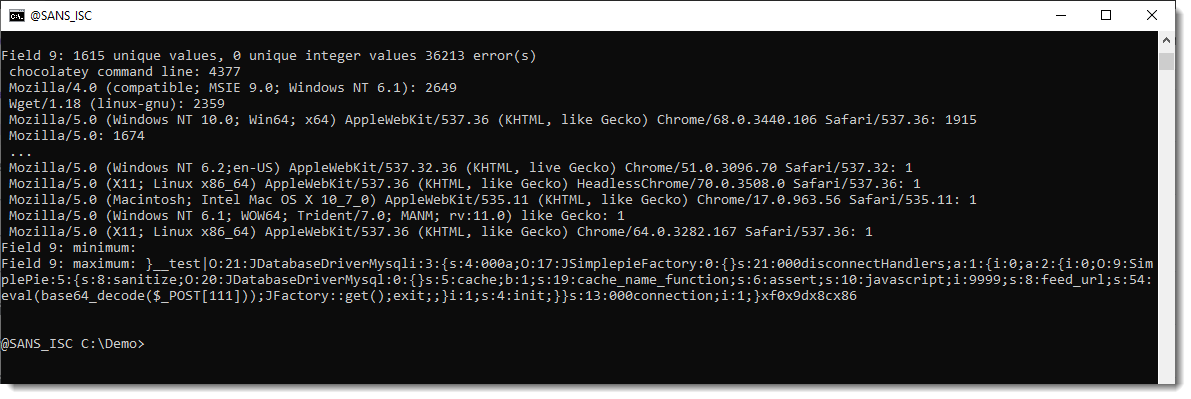

And here you get an idea of frequent and infrequent user agent strings:
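Ranking a field's values by frequency, to see both the most common and the rarest user agent strings, is essentially a counting job, and `collections.Counter` does most of the work. `frequent_and_rare` and its parameters are hypothetical, not csv-stats.py's interface. Rows too short to contain the requested field are skipped, and values with equal counts keep the order in which they were first seen.

```python
import csv
import io
from collections import Counter


def frequent_and_rare(text, field, n=3):
    """Return the n most common and n least common values of one zero-based field.

    Both lists contain (value, count) pairs; the rare list starts with the rarest.
    """
    counter = Counter(
        row[field] for row in csv.reader(io.StringIO(text)) if len(row) > field
    )
    ranked = counter.most_common()  # sorted by count, descending
    return ranked[:n], ranked[-n:][::-1]
```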

Didier Stevens

Senior handler

blog.DidierStevens.com
