2024-4-18 17:1:23 Author: www.shielder.com

TL;DR

During a security audit of Element Android, the official Matrix client for Android, we identified two vulnerabilities in how the application handles specially forged intents sent by other apps. A malicious application could significantly break the security of Element, with impacts ranging from exfiltrating sensitive files via arbitrary chats to fully taking over victims’ accounts. After private disclosure of the details, the vulnerabilities were promptly accepted and fixed by the Element Android team.

Intro

Matrix is, altogether, a protocol, a manifesto, and an ecosystem focused on empowering decentralized and secure communications. In the spirit of decentralization, its ecosystem supports a great number of clients, providers, servers, and bridges. In particular, we decided to spend some time poking at the featured mobile client applications - specifically, the Element Android application (https://play.google.com/store/apps/details?id=im.vector.app). This led to the discovery of two vulnerabilities in the application.

The goal of this blogpost is to share more details on how security researchers and developers can spot and prevent this kind of vulnerability, how it works, and what harm an attacker might cause on target devices after discovering it.

For these tests, we used Android Studio mainly for two purposes:

- Conveniently inspect, edit, and debug the Element application on a target device.

- Develop the malicious application.

The analysis has been performed on a Pixel 4a device, running Android 13.

The code of the latest vulnerable version of Element which we used to reproduce the findings can be fetched by running the following command:

git clone -b v1.6.10 https://github.com/element-hq/element-android

Without further ado, let us jump to the analysis of the application.

It Starts From The Manifest 🪧

When auditing Android mobile applications, a great place to start the journey is the AndroidManifest.xml file. Among other things, this file contains a wealth of details regarding the app components: activities, services, broadcast receivers, and content providers are all declared and detailed here. From an attacker’s perspective, this information provides a fantastic overview of what are, essentially, all the ways the target application communicates with the device ecosystem (e.g. other applications), also known as entrypoints.

While there are many security-focused tools that can do the heavy lifting by parsing the manifest and neatly listing these entrypoints, let’s keep things simple for the sake of this blogpost and use plain CLI utilities to find things. We can start by running the following in the cloned project root:

grep -r "exported=\"true\"" .

The command above searches and prints all the instances of exported="true" in the application’s source code. The purpose of this search is to uncover definitions of all the exported components in the application, which are components that other applications can launch. As an example, let’s inspect the following activity declaration in Element (file is: vector-app/src/main/AndroidManifest.xml):

| |

Basically, this declaration yields the following information:

- .features.Alias is an alias for the application’s MainActivity.

- The activity declared is exported, so other applications can launch it.

- The activity will accept Intents with the android.intent.action.MAIN action and the android.intent.category.LAUNCHER category.

This is a fairly common pattern in Android applications. In fact, the MainActivity is typically exported, since the default launcher should be able to start the applications through their MainActivity when the user taps on their icon.

We can immediately validate this by running an ADB shell on the target device and trying to launch the application from the command line:

am start im.vector.app.debug/im.vector.application.features.Alias

As expected, this launches the application to its main activity.
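For larger manifests, the grep search shown earlier can also be done structurally. The following is a minimal Python sketch, not part of the original tooling: it parses a manifest and lists the components that explicitly declare android:exported="true". The inline SAMPLE_MANIFEST is a simplified, hypothetical stand-in rather than Element’s real file, and the function name is an assumption made for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by android:* attributes in AndroidManifest.xml.
ANDROID_NS = "http://schemas.android.com/apk/res/android"
COMPONENT_TAGS = ("activity", "activity-alias", "service", "receiver", "provider")

# Hypothetical, simplified manifest used only for illustration.
SAMPLE_MANIFEST = """\
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.app">
  <application>
    <activity android:name=".InternalActivity" android:exported="false" />
    <activity-alias android:name=".features.Alias"
                    android:targetActivity=".MainActivity"
                    android:exported="true">
      <intent-filter>
        <action android:name="android.intent.action.MAIN" />
        <category android:name="android.intent.category.LAUNCHER" />
      </intent-filter>
    </activity-alias>
    <provider android:name=".provider.FileProvider" android:exported="false" />
  </application>
</manifest>
"""


def exported_components(manifest_xml: str) -> list:
    """Return (tag, name) pairs for components declared with exported="true"."""
    root = ET.fromstring(manifest_xml)
    found = []
    for tag in COMPONENT_TAGS:
        for node in root.iter(tag):
            if node.get(f"{{{ANDROID_NS}}}exported") == "true":
                found.append((tag, node.get(f"{{{ANDROID_NS}}}name")))
    return found


if __name__ == "__main__":
    for tag, name in exported_components(SAMPLE_MANIFEST):
        print(f"{tag}: {name}")
```

Like the grep, this only catches explicit declarations. Components that declare intent filters without an explicit exported attribute deserve a manual look, especially in apps targeting older API levels.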

The role of intents in the Android ecosystem is central. An intent is basically a data structure that embodies the full description of an operation and the data passed to it. It is the main entity passed between components when launching or interacting with them, whether they belong to the same application or to other applications installed on the device.

Therefore, when auditing an exported activity, it is always critical to assess how the intents passed to it are parsed and processed. That applies to the MainActivity we are auditing, too. The focus of the audit therefore shifts to java/im/vector/app/features/MainActivity.kt, which contains the code of the MainActivity.

In Kotlin, each activity exposes a property, namely intent, that points to the intent that started the activity. So, by searching for all the instances of intent. in the activity source, we obtain a clear view of the lines where the intent is accessed. Each audit naturally comes with a good number of rabbit holes, so for the sake of simplicity and brevity let’s jump directly to the culprit:

| |

Dissecting the piece of code above, the flow of the intent can be described as follows:

- The activity checks whether the intent comes with an extra named EXTRA_NEXT_INTENT, whose type is itself an intent.

- If the extra exists, it will be parsed and used to start a new activity.

What this means, in other words, is that MainActivity here acts as an intent proxy: when launched with a certain “nested” intent attached, MainActivity will launch the activity associated with that intent. While apparently harmless, this intent-based design pattern hides a serious security vulnerability, known as Intent Redirection.

Let’s explain, in a nutshell, what security issue is introduced by the design pattern found above.

An Intent To Rule Them All 💍

As we have previously mentioned, there is a boolean property in the activities declared in the AndroidManifest.xml, namely the exported property, that informs the system whether a certain activity can be launched by external apps or not.

This provides applications with a way to define “protected” activities that are only supposed to be invoked internally.

For instance, let’s assume we are working on a digital banking application, and we are developing an activity, named TransferActivity. The activity flow is simple: it reads from the extras attached to the intent the account number of the receiver and the amount of money to send, then it initiates the transfer. Now, it only makes sense to define this activity with exported="false", since it would be a huge security risk to allow other applications installed on the device to launch a TransferActivity intent and send money to arbitrary account numbers. Since the activity is not exported, it can only be invoked internally, so the developer can establish a precise flow to access the activity that allows only a willing user to initiate the wire transfer. With this introduction, let’s again analyze the Intent Proxy pattern that was discovered in the Element Android application.

When the MainActivity parses the EXTRA_NEXT_INTENT bundled in the launch intent, it will invoke the activity associated with the inner intent. However, since the intent now originates from within the app, it is no longer considered an external intent. Therefore, activities which are set as exported="false" can be launched as well. This is why an uncontrolled Intent Redirection pattern is a security vulnerability: it allows external applications to launch arbitrary activities declared in the target application, whether exported or not. As a result, any “trust boundary” that was established by not exporting an activity is broken.
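To make the broken boundary concrete, here is a toy Python model of the pattern. It is a simplified simulation under stated assumptions, not the Android API and not Element’s code: the Intent and ToyApp classes are hypothetical, and TransferActivity is borrowed from the banking example above.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    target: str                                  # name of the activity to start
    extras: dict = field(default_factory=dict)   # arbitrary attached data


class ToyApp:
    """Simplified model of one app's activities; not the Android API."""

    def __init__(self, exported: dict):
        self.exported = exported                 # activity name -> exported flag
        self.launched = []                       # order in which activities started

    def start_activity(self, intent: Intent, from_inside: bool) -> None:
        # The exported flag is only enforced for callers outside the app.
        if not from_inside and not self.exported[intent.target]:
            raise PermissionError(f"{intent.target} is not exported")
        self.launched.append(intent.target)
        # Vulnerable proxy: MainActivity forwards any nested intent, and
        # that second launch originates from inside the app.
        nested = intent.extras.get("EXTRA_NEXT_INTENT")
        if intent.target == "MainActivity" and nested is not None:
            self.start_activity(nested, from_inside=True)


if __name__ == "__main__":
    app = ToyApp({"MainActivity": True, "TransferActivity": False})
    try:
        app.start_activity(Intent("TransferActivity"), from_inside=False)
    except PermissionError as err:
        print("direct launch blocked:", err)
    wrapped = Intent("MainActivity", {"EXTRA_NEXT_INTENT": Intent("TransferActivity")})
    app.start_activity(wrapped, from_inside=False)
    print("launched:", app.launched)
```

The direct launch is rejected, while the wrapped launch reaches the non-exported activity because the second hop is treated as internal.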

The diagram below hopefully clarifies this:

Being an end-to-end encrypted messaging client, Element needs to establish multiple security boundaries to prevent malicious applications from breaking its security properties (confidentiality, integrity, and availability). In the next section, we will showcase some of the attack scenarios we have reproduced, to demonstrate the different uses and impacts that an intent redirection vulnerability can offer to malicious actors.

Note: in order to exploit the intent redirection vulnerability, we need to install on the target device a malicious application that we control, from which we can call the MainActivity bundled with the wrapped EXTRA_NEXT_INTENT. Doing so requires creating a new project in Android Studio (detailing how to set up Android Studio for mobile application development is beyond the scope of this blogpost).

PIN Code? No, Thanks!

In the threat model of a secure messaging application, it is critical to consider the risk of device theft: if the device is stolen while unlocked, or if security gestures / PIN are not properly configured, an attacker should not be able to compromise the confidentiality and integrity of the secure chats. For this reason, Element prompts users to create a PIN code, and afterwards “guards” entrance to the application with a screen that requires the PIN code to be entered. This is so critical in the threat model that, upon entering a wrong PIN a certain number of times, the app clears the current session from the device, logging the user out of the account.

Naturally, the application also provides a way for users to change their PIN code. This happens in im/vector/app/features/pin/PinActivity.kt:

| |

So PinActivity reads a PinArgs extra from the launching intent and it uses it to initialize the PinFragment view. In im/vector/app/features/pin/PinFragment.kt we can find where that PinArgs is used:

| |

Therefore, depending on the value of PinArgs, the app will display either the view to authenticate i.e. verify that the user knows the correct PIN, or the view to create/modify the PIN (those are handled by the same fragment).

By leveraging the intent redirection vulnerability with this information, a malicious app can fully bypass the security of the PIN code. In fact, by bundling an EXTRA_NEXT_INTENT that points to the PinActivity activity, and setting PinMode.MODIFY as the extra, the application will invoke the view that allows modifying the PIN. The code used in the malicious app to exploit this follows:

| |

Note: In order to successfully launch this, it is necessary to declare a package in the malicious app that matches what the receiving intent in Element expects for PinArgs. To do this, it is enough to create an im.vector.app.features package and create a PinArgs enum in it with the same values defined in the Element codebase.

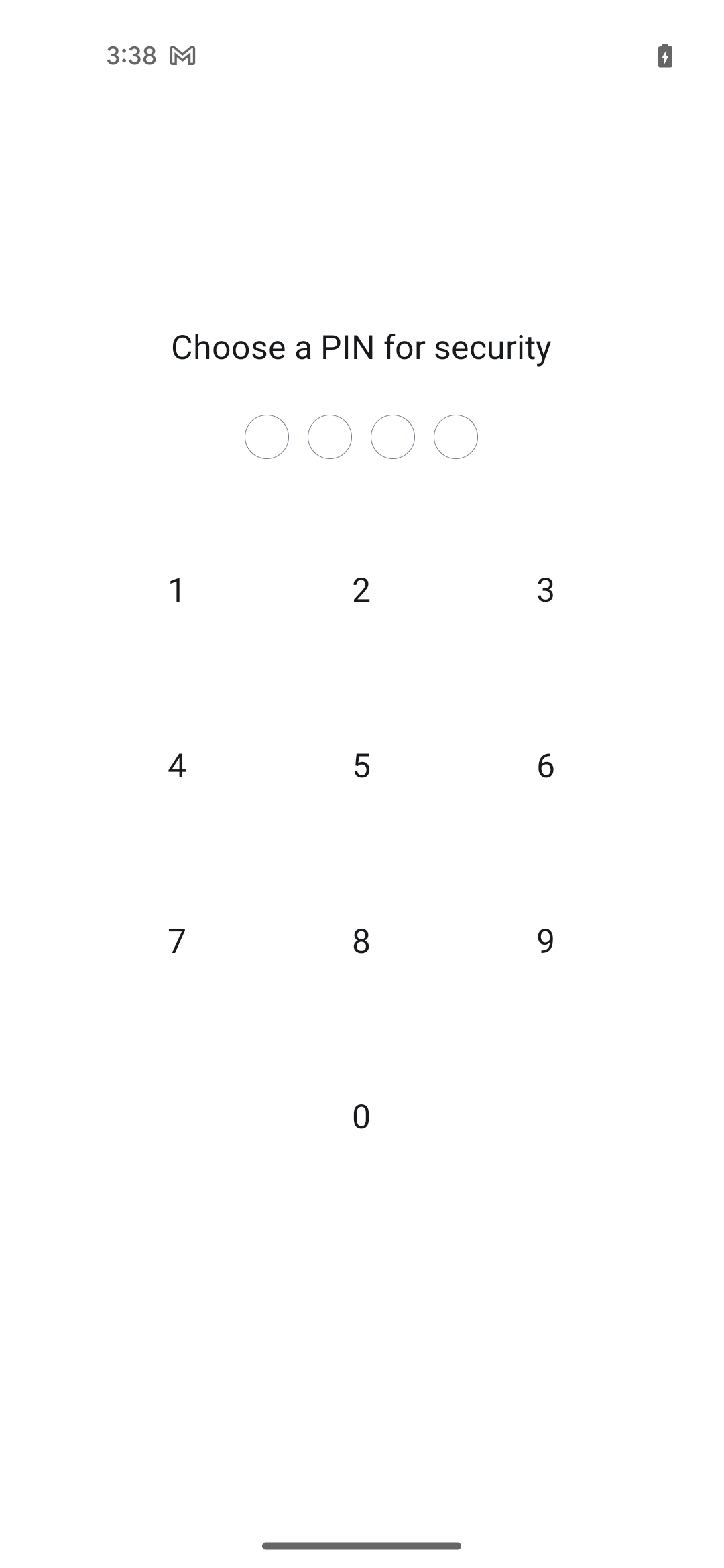

Installing and running this app immediately triggers the following view on the target device:

View to change the PIN code

Hello Me, Meet The Real Me

Among its multiple features, Element supports embedded web browsing via WebView components. This is implemented in im/vector/app/features/webview/VectorWebViewActivity.kt:

| |

Therefore, a malicious application can use this sink to make the app visit a custom webpage without user consent. Externally controlled webviews are typically considered vulnerable for different reasons, which range from XSS to, in some cases, Remote Code Execution (RCE). In this specific scenario, what we believe would have the highest impact is that it enables some form of UI Spoofing. In fact, by forcing the application to visit a carefully crafted webpage that mirrors the UI of Element, the user might be tricked into interacting with it, allowing the attacker to:

- Show them a fake login interface and obtain their credentials in plaintext.

- Show them fake chats and receive the victim’s messages in plaintext.

- You name it.

Developing such a well-crafted mirror is beyond the scope of this proof of concept. Nonetheless, we include below the code that can be used to trigger the forced webview browsing:

| |

Running this leads to:

Our WebView payload, force-browsed into the application.

All Your Credentials Are Belong To Us

While assessing the attack surface of the application to maximize the impact of the intent redirection, there is an activity that quickly caught our attention. It is defined in im/vector/app/features/login/LoginActivity.kt:

| |

In im/vector/app/features/login/LoginConfig.kt:

| |

The purpose of the LoginConfig object extra passed to LoginActivity is to provide a way for the application to initiate a login against a custom server e.g. in case of self-hosted Matrix instances. This, via the intent redirection, can be abused by a malicious application to force a user into leaking their account credentials towards a rogue authentication server.

In order to build this PoC, we quickly scripted a bare-bones rogue Matrix API with just enough endpoints to have the application “accept it” as a valid server:

| |

Then, we developed the following intent redirection payload in the malicious application:

| |

By launching this, the application displays the following view:

Login activity populated with our rogue server, hosted on replit.

After clicking on “Sign In” and entering our credentials, we see the leaked username and password in the API console:

| |

You might notice we have used a little phishing trick, here: by leveraging the user:password@host syntax of the URL spec, we are able to display the string Connect to https://matrix.com, placing our actual rogue server url into a fake server-fingerprint value. This would avoid raising suspicions in case the user closely inspects the server hostname.
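The effect of the userinfo syntax can be checked with a standard URL parser. The snippet below is a small sketch using Python’s urllib; the URL in it is a hypothetical example built for illustration and is not the payload used in the PoC.

```python
from urllib.parse import urlsplit

# Hypothetical URL: everything before "@" is userinfo, not the host.
EXAMPLE_URL = "https://matrix.com:server-fingerprint-ab12cd@rogue.example/"


def describe(url: str) -> dict:
    """Split a URL into the parts that matter for this trick."""
    parts = urlsplit(url)
    return {
        "username": parts.username,   # what a hurried reader takes for the host
        "password": parts.password,   # decoy value after the first colon
        "host": parts.hostname,       # where the request is actually sent
    }


if __name__ == "__main__":
    for key, value in describe(EXAMPLE_URL).items():
        print(f"{key}: {value}")
```

The parser reports rogue.example as the host, while the familiar-looking matrix.com ends up in the username field.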

By routing these credentials to the actual Matrix server, the rogue server would also be able to initiate an OTP authentication, which would successfully bypass MFA and would lead to a full account takeover.

This attack scenario requires user interaction: the victim needs to willingly submit their credentials. However, it is not uncommon for applications to log users out of their accounts for various reasons; therefore, we assume that a user who is suddenly redirected to the login activity of the application would “trust” the application and just proceed to log in again.

CVE-2024-26131

This issue was reported to the Element security team, which promptly acknowledged and fixed it. You can inspect the GitHub advisory and Element’s blogpost.

The fix introduces a check on EXTRA_NEXT_INTENT, which can now only point to activities on an allow-list.
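The idea behind an allow-list check can be sketched in a few lines of Python. This is only an illustration of the general technique: the activity names in the list and the function name are hypothetical, and the real list of permitted activities in Element is not reproduced here.

```python
# Hypothetical allow-list, for illustration only.
ALLOWED_NEXT_ACTIVITIES = frozenset({"HomeActivity", "RoomDetailActivity"})


def resolve_next_intent(extras: dict, allowed=ALLOWED_NEXT_ACTIVITIES):
    """Return the nested target if it is allow-listed, otherwise None."""
    target = extras.get("EXTRA_NEXT_INTENT")
    if target is None:
        return None   # nothing to forward
    if target not in allowed:
        return None   # drop anything that is not explicitly permitted
    return target


if __name__ == "__main__":
    print(resolve_next_intent({"EXTRA_NEXT_INTENT": "HomeActivity"}))
    print(resolve_next_intent({"EXTRA_NEXT_INTENT": "PinActivity"}))
```

Denying by default means that any activity added to the app later stays unreachable through the proxy until someone deliberately adds it to the list.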

Nothing Is Beyond Our Reach

Searching for more exported components, we stumbled upon im.vector.app.features.share.IncomingShareActivity, which is used when sharing files and attachments to Matrix chats.

| |

The IncomingShareActivity checks if the user is logged in and then adds the IncomingShareFragment component to the view.

This Fragment parses incoming Intents, if any, and performs the following actions using the Intent’s extras:

- Checks if the Intent’s action is Intent.ACTION_SEND, the Android Intent action used to deliver data to other components, even external ones.

- Reads the Intent.EXTRA_STREAM field as a URI. This URI specifies the Content Provider path for the attachment that is being shared.

- Reads the Intent.EXTRA_SHORTCUT_ID field. This optional field can contain a Matrix Room ID as recipient for the attachment. If empty, the user will be prompted with a list of chats to choose from; otherwise the file will be sent without any user interaction.
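The decision flow described by the three steps above can be summarized in a toy Python function. It is a simplified model under stated assumptions, not the fragment’s actual code: the function name, its return strings, and the sample URI and room ID are hypothetical.

```python
from typing import Optional

ACTION_SEND = "android.intent.action.SEND"   # string value of Intent.ACTION_SEND


def handle_share(action: str, stream_uri: Optional[str], shortcut_id: Optional[str]) -> str:
    """Toy decision flow; returns a description of what the app would do."""
    if action != ACTION_SEND or stream_uri is None:
        return "ignored"
    if not shortcut_id:
        # No recipient supplied: the user stays in the loop.
        return "ask user to pick a room"
    # Recipient supplied by the caller: no confirmation step.
    return f"send {stream_uri} to {shortcut_id} without interaction"


if __name__ == "__main__":
    print(handle_share(ACTION_SEND, "content://example.provider/file", None))
    print(handle_share(ACTION_SEND, "content://example.provider/file", "!room:example.org"))
```

The second call is the interesting one for an attacker: the sender of the intent controls both the resource and the destination.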

| |

| |

During the sharing process in the Intent handler, the execution reaches the getIncomingFiles function of the Picker class, and in turn the getSelectedFiles of the FilePicker class.

These two functions are responsible for parsing the Intent.EXTRA_STREAM URI, resolving the attachment’s Content Provider, and granting read permission on the shared attachment.

Summarizing what we learned so far, an external application can issue an Intent to the IncomingShareActivity specifying a Content Provider resource URI and a Matrix Room ID. The resource will then be fetched and sent to the room.

At first glance everything seems all right, but this functionality opens up a vulnerable scenario. 👀

Exporting The Non-Exportable

The Element application defines a private Content Provider named .provider.MultiPickerFileProvider.

This Content Provider is not exported, thus normally its content is readable only by Element itself.

| |

Moreover, the MultiPickerFileProvider is a File Provider that allows access to files in the specific folders defined in the <paths> tag.

In this case the defined path is of type files-path, that represents the files/ subdirectory of Element’s internal storage sandbox.

| |

To put it simply, the File Provider maps the content URI content://im.vector.app.multipicker.fileprovider/external_files/ to the folder /data/data/im.vector.app/files/ on the filesystem.
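The mapping can be modelled with a short Python sketch. It is a simplified approximation of how a path-based file provider resolves URIs, written for illustration only: the resolve function is hypothetical, the real provider performs its own validation, and example.db is a made-up file name.

```python
from pathlib import PurePosixPath
from urllib.parse import unquote, urlsplit

AUTHORITY = "im.vector.app.multipicker.fileprovider"
# Path name -> directory, as described in the text above.
ROOTS = {"external_files": PurePosixPath("/data/data/im.vector.app/files")}


def resolve(content_uri: str) -> PurePosixPath:
    """Map a content:// URI of this provider to a filesystem path."""
    parts = urlsplit(content_uri)
    if parts.scheme != "content" or parts.netloc != AUTHORITY:
        raise ValueError("not a URI for this provider")
    segments = [unquote(s) for s in parts.path.split("/") if s]
    if not segments or segments[0] not in ROOTS:
        raise ValueError("unknown root")
    if ".." in segments[1:]:
        raise ValueError("path traversal rejected")
    # The first segment selects the root, the rest is relative to it.
    return ROOTS[segments[0]].joinpath(*segments[1:])


if __name__ == "__main__":
    base = f"content://{AUTHORITY}/external_files/"
    print(resolve(base))
    print(resolve(base + "example.db"))   # hypothetical file name
```

Anything stored under the mapped directory becomes addressable through the provider, which is why the choice of root matters so much.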

Thanks to the IncomingShareActivity implementation, we can leverage this provider to read files in Element’s sandbox and leak them over Matrix itself!

We developed the following intent payload in a new malicious application:

| |

By launching this, the application will send the encrypted Element chat database to the specified $ROOM_ID, without any user interaction.

CVE-2024-26132

This issue was reported to the Element security team, which promptly acknowledged and fixed it. You can inspect the GitHub advisory and Element’s blogpost.

The fix restricts the folder exposed by the MultiPickerFileProvider to a subdirectory of the Element sandbox, specifically /data/data/im.vector.app/files/media/, where temporary media files created through Element are stored.
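The effect of narrowing the root can be illustrated with a simple containment check in Python. This is a sketch of the idea only: the two roots come from the text, while the is_exposed function and the file names are hypothetical.

```python
from pathlib import PurePosixPath

OLD_ROOT = PurePosixPath("/data/data/im.vector.app/files")
NEW_ROOT = PurePosixPath("/data/data/im.vector.app/files/media")


def is_exposed(path: str, root: PurePosixPath) -> bool:
    """True if the path is the root itself or lies somewhere below it."""
    candidate = PurePosixPath(path)
    return candidate == root or root in candidate.parents


if __name__ == "__main__":
    # Hypothetical file names, used only to compare the two roots.
    for name in ("files/example.db", "files/media/photo.jpg"):
        full = f"/data/data/im.vector.app/{name}"
        print(full, is_exposed(full, OLD_ROOT), is_exposed(full, NEW_ROOT))
```

With the old root both files are reachable; with the new one only the file under media/ is.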

It is still possible for external applications on the same device to force Element into sending files from that directory to arbitrary rooms without the user’s consent.

Conclusions

Android offers great flexibility in how applications can interact with each other. As is often the case in the digital world, with great power comes great ~~responsibilities~~ vulnerabilities 🐛🪲🐞.

The aim of this blogpost is to shed some light on how to perform security assessments of intent-based workflows in Android applications. The fact that even a widely used application with a strong security posture like Element was found vulnerable shows that protecting against these issues is not trivial!

An honorable mention goes to the security team of Element, for the speed they demonstrated in triaging, verifying, and fixing these issues. Speaking of which, if you’re using Element Android for your secure communications, make sure to update your application to a version >= 1.6.12.

Pitch 🗣

Did you know that this was the outcome of a Penetration Test for one of our customers? They developed a product based on Element and asked Shielder to assess its security. And guess what? While the Element code was not directly in scope, we went the extra mile, and it paid off! If you are looking for a trusted partner to assess the security of your products: get in touch with us!
