2024-4-26 02:38:15 Author: securityboulevard.com

You’d be hard-pressed to find a cybersecurity provider that doesn’t tout its AI-based defenses as a panacea. But AI defenses are by no means a silver bullet – and shouldn’t be treated as one.

AI Data Poisoning: A Hidden Threat on the Horizon

AI data poisoning is not a theoretical concept but a tangible threat. The newly updated OWASP Top 10 for LLMs lists Training Data Poisoning as a Top 10 vulnerability (LLM03). Model creators do not have full control over the data fed into an LLM. Cybercriminals exploit this fact by using poisoned data to lead AI-based security defenses astray, teaching them to make the wrong decisions. This deliberate manipulation becomes a silent accomplice, opening a door into unsuspecting systems.

Imagine you’ve got this super-smart AI model tasked with spotting anomalies in your system. What if someone were to sneak in data during its training or refinement, deliberately teaching it to ignore real threats?

For the attacker, it’s all about disguising the data to look legitimate. In some cases, bad actors will use real data and only tweak a few numbers – anything to fool the AI into thinking it’s legit. In essence, data is used to teach AI to make bad decisions.
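To make the idea concrete, here is a minimal sketch of how a handful of tweaked records can blind a detector. It assumes a deliberately simple anomaly model (flag anything above the mean plus three standard deviations) and made-up requests-per-minute figures; the function names and numbers are illustrative assumptions, not taken from any real product.

```python
from statistics import mean, stdev


def fit_threshold(samples, k=3.0):
    """Learn an anomaly threshold as mean + k sample standard deviations."""
    return mean(samples) + k * stdev(samples)


def is_anomaly(value, threshold):
    """Flag a reading that exceeds the learned threshold."""
    return value > threshold


# Hypothetical requests-per-minute readings from legitimate clients.
clean = [10, 12, 11, 9, 10, 13, 12, 11, 10, 12]

# The attacker slips a few inflated readings into the training data.
poisoned = clean + [60, 70, 80]

attack_rate = 75  # traffic level the attacker wants to go unnoticed

clean_threshold = fit_threshold(clean)
poisoned_threshold = fit_threshold(poisoned)

flagged_by_clean_model = is_anomaly(attack_rate, clean_threshold)
flagged_by_poisoned_model = is_anomaly(attack_rate, poisoned_threshold)

print("clean model flags attack:   ", flagged_by_clean_model)
print("poisoned model flags attack:", flagged_by_poisoned_model)
```

In this toy setup, three poisoned readings out of thirteen are enough to push the threshold above the attacker's traffic level. Real models are far more complex, but the failure mode is the same: the model faithfully learns whatever it is shown.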

Fake It ‘Til You Break It: Bypassing AI Security Using Harvested Data

In a more sophisticated twist, sometimes the data used to trick AI actually is the real deal. In this scenario, adversaries harvest real data (typically stolen) and replay it to bypass AI models. One approach combines harvested digital fingerprints with recorded mouse movements and gestures, which are hard-coded into an automated script and randomized. While the data is technically real, it is inauthentic because it wasn’t originally generated by the person using it.

This data is then fed at scale into the security tool’s AI model, and it bypasses defenses if the AI or ML model can’t detect that the data was harvested. Let this sink in: even the most advanced AI models are ineffective for security defense if they can’t verify that the data presented is authentic and not harvested (aka fake).
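The simplest form of this problem, the same payload showing up in more than one session, can be sketched as follows. This is a naive illustration only: the `ReplayDetector` class, its field names, and the telemetry format are hypothetical, and an exact-match check like this would not catch traces that have been randomized as described above.

```python
import hashlib
import json


class ReplayDetector:
    """Remembers a digest of each telemetry payload and the session that first sent it."""

    def __init__(self):
        self._first_seen = {}  # digest -> session_id that first submitted it

    @staticmethod
    def _digest(fingerprint, mouse_trace):
        # Serialise deterministically so identical payloads hash identically.
        payload = json.dumps({"fp": fingerprint, "trace": mouse_trace}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def is_replay(self, session_id, fingerprint, mouse_trace):
        """Return True if this exact payload was first seen in a different session."""
        digest = self._digest(fingerprint, mouse_trace)
        first_session = self._first_seen.setdefault(digest, session_id)
        return first_session != session_id


detector = ReplayDetector()
fingerprint = {"user_agent": "ExampleBrowser/1.0", "screen": "1920x1080"}
trace = [[0, 0, 0], [5, 3, 16], [11, 8, 33]]  # hypothetical [x, y, ms] samples

original = detector.is_replay("session-a", fingerprint, trace)
replayed = detector.is_replay("session-b", fingerprint, trace)

print("original flagged:", original)
print("replayed flagged:", replayed)
```

The gap between this check and a randomized replay is exactly the gap the article describes: the model needs some way to tell that the data was produced here and now, not merely that it looks plausible.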

Outdated Anti-Bot Defenses Unprepared for Attacks on AI

Teams that rely on traditional bot management solutions can miss over 90% of bad bot requests because of these new data poisoning and fake data attacks, in which data and automation are used to bypass their ML and AI security detections.

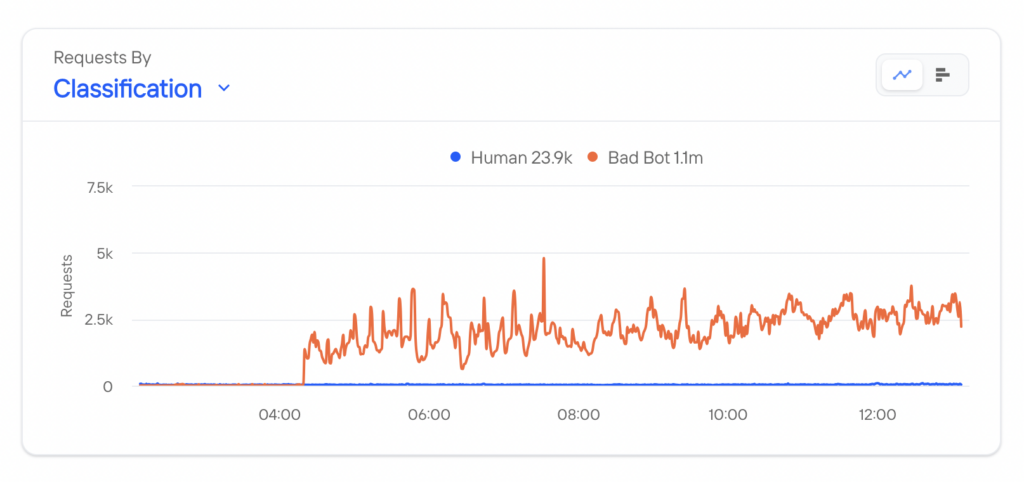

To illustrate the magnitude of this problem, take a look at what happened when Kasada’s bot defense was turned on for a leading streaming provider:

Kasada was deployed behind CDN-based bot detection for this streaming company. At go-live at 4:15, the large spike in detected and mitigated bad bots (red) came from harvested and replayed data that had been evading the CDN’s AI detections. Because the CDN-based detection accepted the faked data as authentic, it exhibited a 98% false negative rate before Kasada was implemented. The human activity (blue) was completely dwarfed by the scale of the bot problem.

Ironically, the customer didn’t realize the vast majority of their traffic wasn’t real since the harvested data looked just like humans – causing an awakening for both their fraud and marketing (digital ad fraud) teams.

One of the most important elements in realizing the potential of AI for cyber defense, while minimizing the impact of falsified input data, is Proof of Execution (PoE). It’s Kasada’s collective term for client and server-side techniques designed to validate that the data presented to the AI detection model is indeed authentic. PoE can verify that the data presented to the system is generated and executed in real time.
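The article does not describe how PoE is implemented, so the following is not Kasada's technique. It is a generic challenge-response sketch of one ingredient of the idea, freshness: the server issues a single-use, time-limited nonce, and telemetry is only accepted if it is bound to that nonce. All names (`issue_challenge`, `client_bind`, `verify`) and the 30-second window are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets
import time

SERVER_KEY = secrets.token_bytes(32)  # hypothetical per-deployment secret
MAX_AGE_SECONDS = 30                  # hypothetical freshness window
_used_nonces = set()                  # nonces that have already been redeemed


def _tag(nonce, issued_at):
    """HMAC over the challenge so the server can recognise its own nonces."""
    message = f"{nonce}:{issued_at}".encode("utf-8")
    return hmac.new(SERVER_KEY, message, hashlib.sha256).hexdigest()


def issue_challenge(now=None):
    """Return (nonce, issued_at, tag) for a new challenge."""
    issued_at = int(time.time() if now is None else now)
    nonce = secrets.token_hex(16)
    return nonce, issued_at, _tag(nonce, issued_at)


def client_bind(nonce, telemetry):
    """What a cooperating client computes: telemetry bound to this nonce."""
    return hashlib.sha256(f"{nonce}:{telemetry}".encode("utf-8")).hexdigest()


def verify(nonce, issued_at, tag, telemetry, binding, now=None):
    """Accept telemetry only if it answers a fresh, unused, server-issued challenge."""
    now = time.time() if now is None else now
    if not hmac.compare_digest(tag, _tag(nonce, issued_at)):
        return False  # challenge was not issued by this server
    if now - issued_at > MAX_AGE_SECONDS:
        return False  # challenge has expired
    if nonce in _used_nonces:
        return False  # challenge already redeemed: a replay
    if not hmac.compare_digest(binding, client_bind(nonce, telemetry)):
        return False  # telemetry is not bound to this challenge
    _used_nonces.add(nonce)
    return True


nonce, issued_at, tag = issue_challenge()
telemetry = "mouse:12,40;13,42"  # hypothetical telemetry string
binding = client_bind(nonce, telemetry)

first_attempt = verify(nonce, issued_at, tag, telemetry, binding)
replay_attempt = verify(nonce, issued_at, tag, telemetry, binding)

print("first submission accepted:", first_attempt)
print("replayed submission accepted:", replay_attempt)
```

A sketch like this only shows that a payload was assembled after the challenge was issued. An attacker who can run the client code can still compute the binding, which is why the article frames PoE as a collection of client and server-side techniques and not a single check.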

Three Steps Defenders Can Take Now

Data-based attacks on AI demand attention from defenders using or considering security solutions that employ machine learning or AI models. Here are some actionable recommendations you can take to protect your organization from such attacks:

- Monitor for abnormal behavior: Keep a close eye on security solutions that rely heavily on AI models. Know what human day-night cycles should look like and whether they are observable in your traffic.

- Diversify your defenses: Don’t solely depend on server-side AI learning for bot detection. Implement client-side detections and rigorous validation checks to ensure your security controls are working the way you intended.

- Stay vigilant and proactive: Ensure your bot detection and mitigation solutions can validate the authenticity of the data presented to the system. A keen sense of what’s real is crucial.
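The first recommendation, checking whether a human day-night cycle is visible in your traffic, can be roughed out in a few lines. The sketch below assumes you already have 24 hourly request counts in your audience's local time; the six-hour window, the minimum ratio of 2.0, and the sample figures are arbitrary assumptions to be tuned against your own baseline.

```python
from statistics import mean


def peak_to_trough_ratio(hourly_counts, window=6):
    """Ratio of the busiest `window` hours to the quietest `window` hours."""
    if len(hourly_counts) != 24:
        raise ValueError("expected 24 hourly counts")
    ordered = sorted(hourly_counts)
    quiet = mean(ordered[:window])
    busy = mean(ordered[-window:])
    return busy / max(quiet, 1.0)  # guard against division by zero


def has_day_night_cycle(hourly_counts, min_ratio=2.0):
    """True if traffic rises and falls across the day the way human usage tends to."""
    return peak_to_trough_ratio(hourly_counts) >= min_ratio


# Hypothetical traffic that dips overnight and peaks in the evening.
human_like = [20, 15, 10, 8, 8, 12, 30, 60, 90, 110, 120, 125,
              130, 128, 120, 115, 118, 125, 140, 150, 130, 90, 60, 35]

# Hypothetical automated traffic running at a constant rate around the clock.
bot_like = [100] * 24

print("human-like traffic has a cycle:", has_day_night_cycle(human_like))
print("bot-like traffic has a cycle:  ", has_day_night_cycle(bot_like))
```

A flat profile is not proof of bots, and a sophisticated operator can shape traffic to follow a daily curve, so treat this as one signal to monitor alongside the client-side and authenticity checks listed above.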

As more security solutions add “AI” to their technology, the ability to identify and stop data poisoning and harvested data becomes paramount as adversaries aim to bypass AI protections. AI has opened up new attack surfaces and data-based evasion techniques for the adversary, which must be addressed by the defender. The adversarial cat-and-mouse game is ongoing, and the question remains: are your defenses dynamic and adaptive enough to rise to the challenge?

Kasada was designed with defense-in-depth in mind to outsmart the latest automated attacks and the motivated adversaries behind them. Request a demo to learn how our experts can help you today.

The post AI Data Poisoning: How Misleading Data Is Evading Cybersecurity Protections appeared first on Kasada.

*** This is a Security Bloggers Network syndicated blog from Kasada authored by Neil Cohen. Read the original post at: https://www.kasada.io/ai-data-poisoning/
