Building on the first part of this blog series, which focused on exposures in the emerging AI sector, this post delves deeper into the risks and vulnerabilities in this field, along with the threat of Infostealer exposures. Constella has evaluated some of the most relevant and widely used tools in the AI field, and the results reveal concerning Infostealer exposures.

Diving Into the Data: Understanding the Impact

Our analysis exposes a stark reality: Over one million user accounts are at risk, predominantly due to devices infected by Infostealers. Among the compromised data, we’ve identified corporate credentials representing a substantial security threat. This discovery highlights the critical need for strengthened protective measures to safeguard sensitive information.

Through our analysis, we have uncovered significant credential exposures at several AI-focused companies, including OpenAI, Wondershare, Figma, Zapier, Cutout, ElevenLabs, Hugging Face, Make, and HeyGen.

Understanding the Impact of Infostealer Exposures and Taking Action

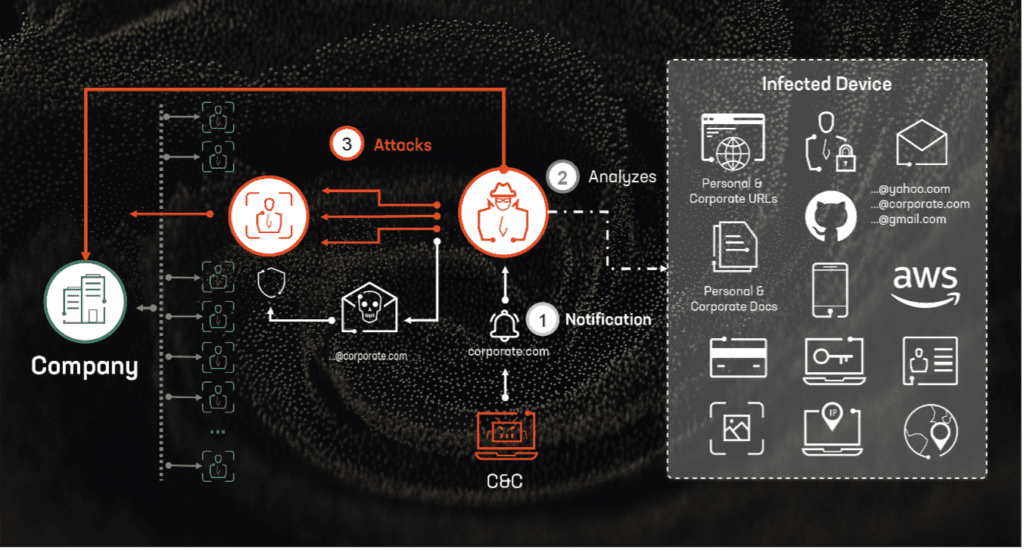

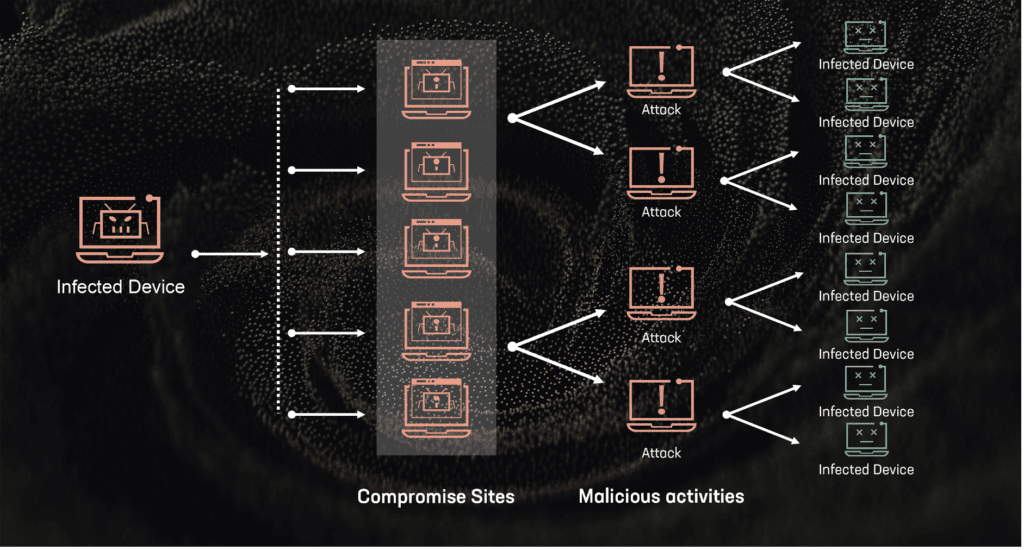

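A threat actor can exploit exposed credentials from AI companies to orchestrate sophisticated attacks, even if multi-factor authentication (MFA) is in use. Infostealers commonly capture session cookies and tokens alongside passwords, which can let an attacker reuse an already authenticated session without facing an MFA challenge.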

Personal account information, when compromised by an infostealer infection, can be exploited through social engineering strategies such as phishing campaigns. These tactics deceive employees into unwittingly providing access or divulging further confidential details. The stakes are particularly high in AI companies, where such breaches can lead to several specific threats:

- Data Privacy and Confidentiality Risks: Access to AI tools like ChatGPT by unauthorized parties could result in the exposure of sensitive information, violating confidentiality agreements and privacy norms.

- Surveillance and Tracking: Compromised AI systems could be used for covert surveillance, enabling unauthorized tracking of individuals or organizational activities.

- Model Poisoning: Interference with the training data of AI models by malicious entities can corrupt their outputs, producing biased or harmful results and compromising the integrity of the AI applications.

To safeguard against the risks associated with infostealer infections and enhance security in AI environments, consider implementing the following strategies:

- Regularly Update and Patch Systems: Ensure that all systems are up-to-date with the latest security patches. Regular updates can close vulnerabilities that could be exploited by threat actors.

- Monitor and Audit AI Model Inputs and Outputs: Regularly review the inputs and outputs of AI models to detect any signs of model poisoning or other anomalies that could indicate tampering.

- Limit Data Retention: Establish clear data retention policies to reduce exposure risks.
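
As an illustration of the data retention point above, the following is a minimal Python sketch of how stored records (for example, saved AI prompts or outputs) might be split into those to keep and those to purge once they exceed a retention window. The `StoredRecord` type, the `split_by_retention` function, and the 30-day window are hypothetical names and values chosen for this example; they are not taken from Constella's analysis or any specific product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention window; choose a value that matches your own policy.
RETENTION = timedelta(days=30)


@dataclass
class StoredRecord:
    """Hypothetical stored item, such as a saved prompt or model output."""
    record_id: str
    created_at: datetime  # timezone-aware UTC timestamp


def split_by_retention(records, now=None, retention=RETENTION):
    """Return (keep, purge): records within the window and records past it."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - retention
    keep = [r for r in records if r.created_at >= cutoff]
    purge = [r for r in records if r.created_at < cutoff]
    return keep, purge


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    records = [
        StoredRecord("recent", now - timedelta(days=5)),
        StoredRecord("stale", now - timedelta(days=45)),
    ]
    keep, purge = split_by_retention(records, now=now)
    print("keep:", [r.record_id for r in keep])
    print("purge:", [r.record_id for r in purge])
```

In practice, the purge list would feed whatever deletion mechanism the storage system provides. The less data that remains stored, the less there is to expose if an account is later compromised.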

*** This is a Security Bloggers Network syndicated blog from Constella Intelligence authored by Alberto Casares. Read the original post at: https://constella.ai/security-in-the-ai-sector-understanding-infostealer-exposures-and-corporate-risks/