02 May 2024 - Posted by Anthony Trummer

Every so often we see people discussing whether they still need to have product security audits (commonly referred to as pentests) because they have a bug bounty program. While the answer to this seems clear to us, it nonetheless is a recurring topic of discussion, particularly in the information security corners of social media. We’ve decided to publish our thoughts on this topic to clarify it for those who might still be unsure.

Product Security Audit

What we refer to as a product security audit is a time-bound project, where one or more engineers focus on a particular application exclusively. The testing is performed by employees of an application security firm. This work is usually scoped ahead of time and billed at flat hourly/daily rates, with the total cost known to the client prior to commencing.

These can be white box (i.e., access to source code and documentation) or black box (i.e., no source code access, with or without documentation), or somewhere in the middle. There is usually a well-defined scope and often preliminary discussions on points of interest to investigate more closely than others. Frequently, there will also be a walkthrough of the application’s functionality. More often than not, the testing takes place in a predefined set of days and hours. This is typically when the client is available to respond to questions, react in the event of potential issues (e.g., a site going down) or possibly to avoid peak traffic times.

Because of the trust that clients have in professional firms, they will often permit them direct access to their infrastructure and code - something that is rarely, if ever, done in a bug bounty program. This empowers the testers to find bugs that are potentially very difficult to find externally and things that may be out of scope for dynamic tests, such as denial-of-service vulnerabilities. Additionally, with this approach, it’s common to discover one vulnerability and then, thanks to the code access, quickly determine that it is a systemic issue. With this access, it is also much easier to identify things like vulnerable dependencies, often buried deep in the application.

Once the testing is complete, the provider will usually supply a written report and may have a wrap-up call with the client. There may also be a follow-up (retest) to ensure a client’s attempts at remediation have been successful.

Bug Bounty Programs

What is most commonly referred to as a bug bounty program is typically an open-ended, ongoing effort where the testing is performed by the general public. Some companies may limit participation to a smaller group, admitting participants based on whatever criteria they wish, with past performance in other programs being a commonly used factor.

Most programs define a scope of things to be tested and the vulnerability types that they are interested in receiving reports on. The client typically sets the payout amounts they are offering, with escalating rewards for more impactful discoveries. The client is also free to incentivize testing on certain areas through promotions (e.g., double bounties on their new product). Most bug bounty programs are exclusively black box, with no source code or documentation provided to the participating testers.
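To make the idea of escalating rewards and promotions concrete, here is a minimal sketch of how a payout could be computed. The severity tiers, dollar amounts and the `promo_multiplier` parameter are all hypothetical values chosen for illustration; every program sets its own.

```python
# Hypothetical base payouts in USD per severity tier.
# Real programs define their own tiers and amounts.
BASE_PAYOUTS = {
    "low": 100,
    "medium": 500,
    "high": 2000,
    "critical": 5000,
}


def payout(severity: str, promo_multiplier: float = 1.0) -> float:
    """Return the reward for a finding, scaled by an optional promotion.

    A promo_multiplier of 2.0 models a "double bounties" promotion.
    """
    if severity not in BASE_PAYOUTS:
        raise ValueError(f"unknown severity: {severity}")
    return BASE_PAYOUTS[severity] * promo_multiplier
```

With these assumed numbers, a high severity finding on a product running a double bounty promotion would be rewarded as `payout("high", 2.0)`.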

In most programs, there are no limits as to when the testing occurs. The participants determine if and when they perform testing. Because of this, any outages caused by the testing are usually treated as either normal engineering outages or potentially as security incidents. Some programs do ask their testers to identify their traffic via various means (e.g., passing a unique header) to more easily understand what they’re seeing in logs, if questions arise.
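As a rough illustration of the header-based identification mentioned above, the following sketch shows a participant tagging a request and the program owner checking logged headers for that tag. The header name `X-Bug-Bounty-Researcher`, the handle and the URL are assumptions made for this example; a real program specifies its own convention.

```python
import urllib.request

# Hypothetical header name; each program defines its own.
RESEARCHER_HEADER = "X-Bug-Bounty-Researcher"


def tagged_request(url: str, handle: str) -> urllib.request.Request:
    """Build (but do not send) a request carrying the participant's identifier."""
    return urllib.request.Request(url, headers={RESEARCHER_HEADER: handle})


def is_bounty_traffic(headers: dict) -> bool:
    """Program-side check: does a logged request carry the agreed header?

    Header names are case-insensitive, so compare them in lowercase.
    """
    return any(name.lower() == RESEARCHER_HEADER.lower() for name in headers)


# Example: the request object is only constructed here, never sent.
req = tagged_request("https://example.com/login", "researcher-1234")
logged_headers = dict(req.header_items())
```

Note that such a header is purely voluntary. It helps explain benign test traffic in logs, but it does not authenticate the sender.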

The bug bounty program’s concept of reporting is commonly individual bug reports, with or without a pre-formatted submission form. It is also common for programs to request that the person submitting the report validate the fix.

Hybrid Approaches

While not the focus of this post, we felt it was necessary to also acknowledge that there are hybrid approaches available. These offerings combine various aspects of both a bug bounty program and focused product security audits. We hope this post will inform the reader well enough to ensure they select the approach and mix of services that is right for their organization and fully understand what each entails.

From the definitions, the two approaches seem reasonably similar, but when we go below the surface, the differences become more apparent.

The people

It’s not fair to paint any group with a broad brush, but there are some clear differences between who typically works in a product security audit versus a bug bounty program. Both approaches can result in great people testing an application and both could potentially result in participants lacking the professionalism and/or skill set you hoped for.

When a firm is retained to perform testing for a client, the firm is staking its reputation on the client’s satisfaction. Most reputable firms will attempt to provide clients with the best people they have available, ideally considering their specific skills for the engagement. The firm assumes the responsibility to screen its employees’ technical abilities, usually through multiple rounds of testing and interviewing prior to hiring, along with ongoing supervision, training and mentoring. Clients are also often provided with summaries of the engineers’ résumés, with the option to request alternate testers if they feel an engineer’s background doesn’t match the project. Lastly, providers are also usually required to perform criminal background checks on their staff to meet client requirements.

A bug bounty program usually has very minimal entry requirements. Typically this just means that the participants are not from embargoed countries. Participants could be anyone from professionals looking to make extra money, security researchers, college students or even complete novices looking to build a résumé. While theoretically a client may draw more eyes to their project than in a typical audit, that’s not guaranteed and there are no assurances of the participants’ qualifications. Katie Moussouris, a well-known CEO of a bug bounty consultancy, is quoted underscoring this point, saying “Their latest report shows most registered users are basically either fake or unskilled”. Further, one of the largest platforms stated, per its own statistics, that only about one percent of its participants “were really doing well”. So, despite large potential numbers, the small percentage of productive participants will be stretched thin across thousands of programs, at best. In reality, the top participants tend to aggregate around programs they feel are the most lucrative or interesting.

The process

When a client hires a quality firm to perform a product security audit, they’re effectively getting that firm’s collective body of knowledge. This typically means that their personnel have others within the company they can interact with if they encounter problems or need assistance. This also means that they likely have a proprietary methodology they adhere to, so clients should expect thorough and consistent results. Internal peer review and other quality assurance processes are also usually in place to ensure satisfactory results.

Generally, there are limitations on what a client wants or is able to share externally. It is common that a firm and client sign mutual NDAs, so neither party is allowed to disclose information about the audit. Should the firm leak information, they can potentially be held legally liable.

In a bug bounty program, each tester makes their own rules. They may overlap each other, creating redundant tests, or they may complement each other, giving the presumed advantage of many eyes. There is generally no way for a client to know what has or has not been tested. Clients may also find test accounts and data littered throughout the app (e.g., pop-up alerts everywhere), whereas professional testers are typically more restrained and required to not leave such remnants.

Most bug bounty programs don’t require a binding NDA, even if they are considered “private”. Therefore, clients are faced with a decision as to what and how much to share with the program participants. As a practical matter, there is little recourse if a participant decides to share information with others.

The results

When a client hires a firm, they should expect a well-written professional report. Most firms have a proprietary reporting format, but will usually also provide a machine-readable report upon request. In most cases, clients can preview a sample report prior to hiring a firm, so they can get a very clear picture of the deliverables.
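For readers unfamiliar with what a machine-readable report might look like, here is a minimal sketch that serializes findings to JSON for import into a defect tracker. The `Finding` fields and the sample values are hypothetical; firms and tracking tools each define their own schemas.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class Finding:
    """One reported issue. Field names are illustrative, not a standard schema."""
    identifier: str
    title: str
    severity: str
    description: str
    reproduction_steps: list = field(default_factory=list)


def to_json(findings: list) -> str:
    """Serialize findings into a JSON array that a tracker could ingest."""
    return json.dumps([asdict(f) for f in findings], indent=2)


# Made-up sample finding, for illustration only.
sample = Finding(
    identifier="EXAMPLE-001",
    title="Reflected XSS in search parameter",
    severity="medium",
    description="User input is echoed into the results page without encoding.",
    reproduction_steps=["Open the search page", "Submit a script payload"],
)
report_json = to_json([sample])
```

A consistent structure like this is what allows findings to be loaded into tracking software without manual re-entry.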

Reports from professional audits are typically subjected to several rounds of quality control prior to being delivered to clients. This will typically include a technical review or validation of reported issues, in addition to language and grammar editing to ensure reports are readable and professionally constructed. Additionally, quality firms understand that the results may be reviewed by a wide audience at their clients. They will therefore invest the time and effort to construct them in such a way that readers with a wide range of technical knowledge are all able to understand the results. Testers are also typically required to maintain testing logs and quality documentation of all issues (e.g., screenshots - including requests and responses). This ensures clear findings reports and reproduction steps along with all the supporting materials.

Through personalized relationships with clients and potentially their source code, firms have the opportunity to understand what is important to them, which things keep them up at night and which things they aren’t concerned about. Through kickoff meetings, ongoing direct communication and wrap-up meetings, firms build trust and understanding with clients. This allows them to look at vulnerabilities of all severity levels and understand the context for the client. This could result in simply saving the client’s time or recognizing when a medium severity issue is actually a critical issue, for that client’s organization.

Further, repeated testing allows a client to tangibly demonstrate their commitment to security and how quickly they remediate issues. Additionally, product security audits conducted by experienced engineers, especially those with source code access, can highlight long-term improvements and hardening measures that can be taken, which would not generally be a part of a bug bounty program’s reports.

In a bug bounty program, the results are unpredictable, often seemingly driven mainly by the participants’ focus on payouts. Most companies end up inundated with effectively meaningless reports. Whether valid or not, they are often unrealistic, overhyped, known CVEs or previously known bugs, or issues the organization doesn’t actually care about. It is rare that results fully meet expectations, but not impossible. Submissions tend to cluster at two extremes. At one end are reports pushing (often quite imaginatively) to be considered critical or high severity, to gain the largest payouts. At the other is the low-hanging fruit detected by automated scanners, usually reported by lower-rated participants looking for any type of payout, no matter how trivial. The reality is that clients need to pay a premium to get the “good researchers” to participate, but on public programs that itself can also cause a significant uptick in “spam” reports.

Bug bounty reports are typically not formatted in a consistent manner and not machine-readable for ingestion into defect tracking software. Historically, there have been numerous issues that have arisen from reports which were difficult to triage due to language issues, poor grammar or bad proof-of-concept media (e.g., unhelpful screenshots, no logs, meandering videos). To address this, some platforms have gone as far as to incentivize participants to provide clear and easily readable reports via increased payouts, or positive reviews which impact the reporters’ reputation scores.

The value

A professional audit produces a deliverable that a client can hand to a third party, if necessary. While there is a fixed cost for it, regardless of the results, this documented testing is often required by partner companies and for compliance reasons. Furthermore, when using a reputable firm, a client may find it easier to pass the security requirements of their partners. Lastly, should there be an incident, a client can attest to their due diligence and potentially lessen their legal liability.

A bug bounty provides no assurances as to the amount of the application that is tested (i.e., the “coverage”). It neither produces an acceptable deliverable that can be offered to third parties, nor does it attest to the quality of the skills of those testing the application(s). Further, bug bounty programs don’t typically satisfy compliance requirements for testing.

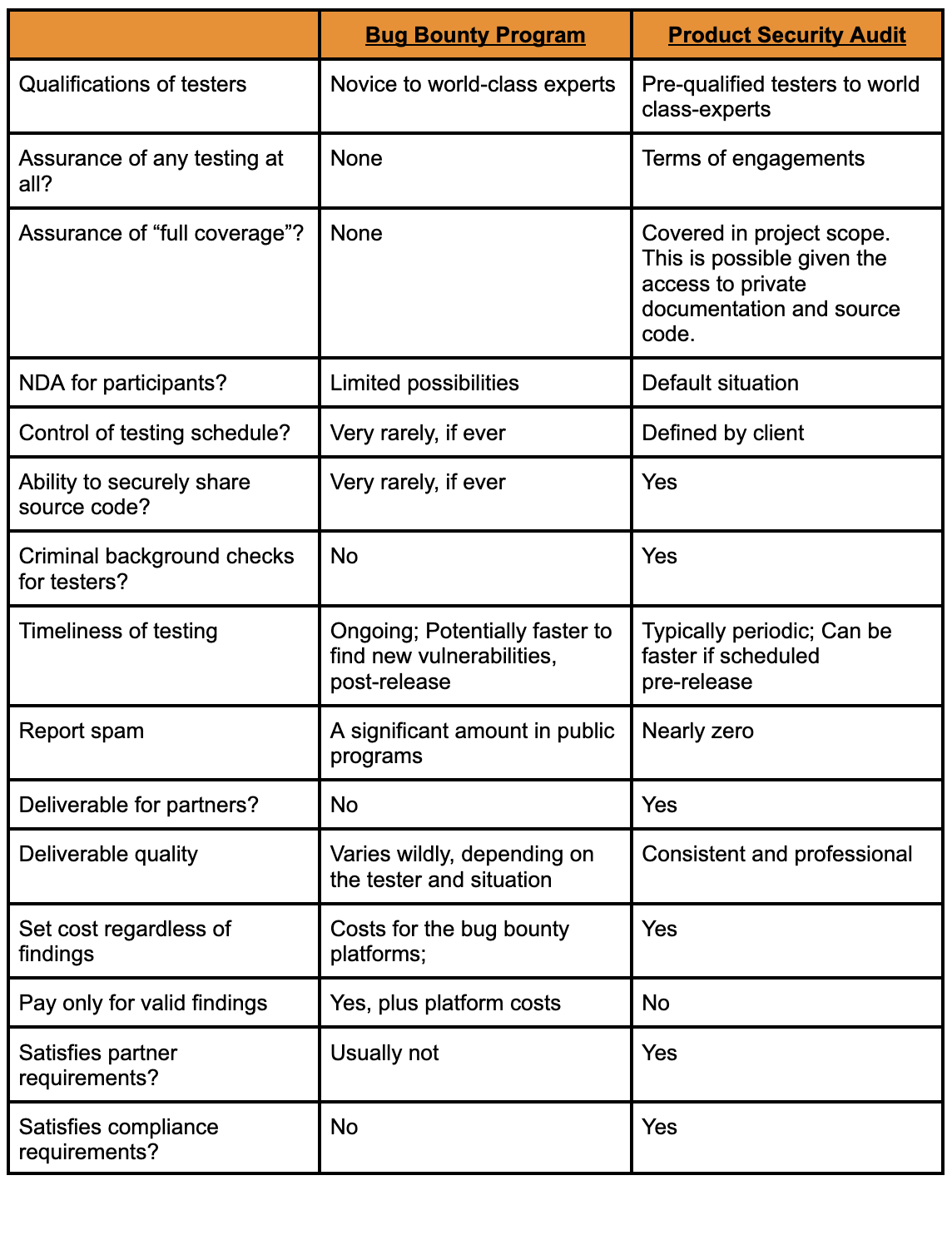

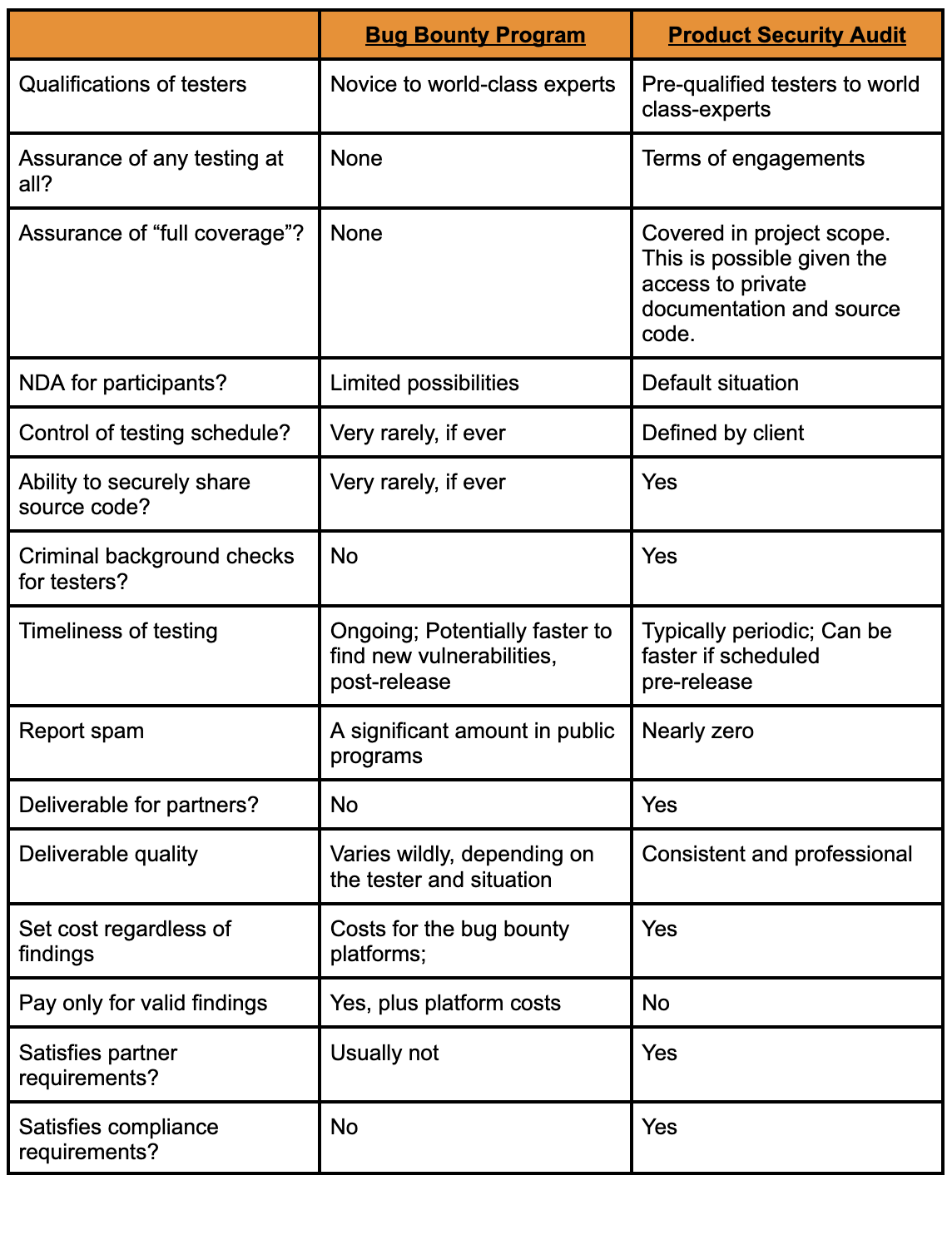

In the following table, we perform a side-by-side comparison of the two approaches to make the differences clearer.

Which approach an organization decides to take will vary based on many factors including budget, compliance requirements, partner requirements, time-sensitivity and confidentiality requirements. For most organizations, we feel the correct approach is a balanced one.

Ideally, an organization should perform recurring product security audits at least quarterly and after major changes. If budgets don’t permit that frequency of testing, the typical compromise is annually, at an absolute minimum.

Bug bounty programs should be used to fill the gaps between rigorous security audits, whether those audits are performed by internal teams or external partners. This is arguably the need they were designed to fill, rather than replacing recurring professional testing.
