2024-5-31 21:0:0 Author: www.tenable.com

Like many organizations, yours is likely using AI – or at least thinking about deploying it soon. But how can you ensure you use it securely, responsibly, ethically and in compliance with regulations? Check out best practices, guidelines and tips in this special edition of the Tenable Cybersecurity Snapshot!

If your organization is using artificial intelligence (AI), chances are that the CISO and other security leaders have been enlisted to help create guardrails for its use. How can the security team contribute to these efforts? That’s the topic of this special edition of the Tenable Cybersecurity Snapshot. We look at best practices for secure use of AI. We cover new guidelines on integrating security into the AI lifecycle. Also, we unpack recommendations for recognizing data leakage risks and strategies for maintaining data privacy. And we delve into how to keep your AI deployment in line with regulations.

1 - Build security in at every stage

Integrating security practices throughout the AI system's development lifecycle is an essential first step to ensure you’re using AI securely and responsibly. This includes using secure coding practices, scanning for vulnerabilities, conducting code reviews, employing static and dynamic analysis tools and performing regular security testing and validation.

In the recently released guide “Deploying AI Systems Securely,” jointly published by the Five Eyes countries – Australia, Canada, New Zealand, the U.K. and the U.S. – the authors note that AI is increasingly a target for cybercrime.

“Malicious actors targeting AI systems may use attack vectors unique to AI systems, as well as standard techniques used against traditional IT,” the report notes.

The guidance recommends that organizations developing and deploying AI systems incorporate the following:

Ensure a secure deployment environment: Confirm that the organization’s IT infrastructure is robust, with good governance, a solid architecture and secure configurations in place. This includes appointing a person to be responsible for AI system cybersecurity who is also accountable for the organization’s overall cybersecurity – typically the CISO or Head of Information Security.

Require a threat model: Have the primary developer of the AI system – whether it’s a vendor or an in-house team – provide a threat model that can guide the deployment team in implementing security best practices, assessing threats and planning mitigations. Also, consider putting your security requirements in the procurement contracts with vendors of AI products or services.

Promote a collaborative culture: Encourage communication and collaboration among the organization’s data science, IT infrastructure and cybersecurity teams to address any risks or concerns effectively.

Robust architecture design: Implement security protections at the boundaries between the IT environment and the AI system; address identified blind spots; protect proprietary data sources; and apply secure design principles, including zero trust frameworks.

Harden configurations: Follow best practices for the deployment environment, such as using hardened containers for running machine learning models; monitoring networks; applying allowlists on firewalls; keeping hardware updated; encrypting sensitive AI data; and employing strong authentication and secure communication protocols.

Learn more about recommendations for building and deploying AI securely:

- “OWASP AI Security and Privacy Guide” (OWASP)

- “Google's Secure AI Framework” (Google)

- “How To Boost the Cybersecurity of AI Systems While Minimizing Risks” (Tenable)

- “Guidelines for Secure AI System Development” (U.S. and U.K. governments)

- “Secure Software Development Practices for Generative AI and Dual-Use Foundation Models” (NIST)

2 - Prevent data leakage and establish clear guidelines on privacy standards

AI operates by analyzing vast amounts of data. Ensuring the ethical use of this data and maintaining privacy standards is essential for protecting your organization’s intellectual property and other sensitive data, such as customers’ personal data. Organizations should establish clear ethical guidelines that dictate the use of data. This involves obtaining proper consent for data use, anonymizing sensitive information, and being transparent about how AI models use data. Implementing robust data governance frameworks will not only protect user privacy but also build trust in AI systems.

A recent study from Coleman Parkes Research finds only one in 10 organizations has a reliable system in place to measure bias and privacy risk in large language models (LLMs). Creating a trusted environment, and minimizing the risk of data loss when using AI or providing access to AI applications, depends on proactive measures and thoughtful system architecture.

In its recent publication, “5 steps to make sure generative AI is secure AI,” Accenture recommends business leaders keep the following in mind when it comes to data-leakage risk:

Acknowledging the risk: Recognize that the risk of unintentional transmission of confidential data through generative AI applications is significant.

Custom front-end development: Develop a custom front-end that interacts with the underlying language model API, such as OpenAI's, bypassing the default ChatGPT interface and controlling data flow at the application layer.

Use of sandboxes: Establish sandboxes that act as controlled environments where data is isolated. This setup serves as a secure gateway to LLMs, with additional filters to safeguard data and mitigate bias.

Data control: Depending on the sensitivity of the data, establish protocols where sensitive information remains within the company's control in a trusted environment, while less sensitive data can be exchanged with a hosted service equipped to handle data in a secure, isolated manner.

Train employees: Without proper guidance, employees may not understand the risks of AI technology and this could lead to the emergence of "shadow IT," posing new cybersecurity risks. Implement a comprehensive workforce training program as soon as possible.
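
As a rough illustration of the custom front-end and filtering ideas above, here is a minimal Python sketch of a gateway that redacts obviously sensitive strings before a prompt leaves the company's environment. It is not taken from Accenture's guidance. The pattern list, the `redact` and `gateway` names, and the `send` callback (a stand-in for whatever hosted model API is in use) are all assumptions made for the example, and two regular expressions are nowhere near a complete detection approach.

```python
import re

# Hypothetical patterns for illustration; a real deployment would need a
# vetted detection approach tuned to its own data.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which categories were found."""
    found = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            found.append(label)
    return prompt, found


def gateway(prompt: str, send) -> str:
    """Redact the prompt, then hand it to `send`.

    `send` is a caller-supplied function standing in for the call to a
    hosted model API; it is an assumption of this sketch.
    """
    clean, found = redact(prompt)
    if found:
        print(f"redacted categories: {found}")
    return send(clean)
```

Routing every request through one function like `gateway` gives the security team a single place to add filters, logging or blocking rules as policies evolve.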

Accenture has also released a list of its top-four security recommendations for using generative AI in an enterprise context.

Application: Prioritize secure data capture and storage at the application or tool level to mitigate risks associated with data breaches and privacy violations.

Prompt: Protect against "prompt injection" attacks by ensuring that models cannot be instructed to deliver false or malicious responses, which could be used to bypass controls added to the system.

Foundation Model: Monitor foundation model behavior and outputs to detect anomalies, attacks, or deviations from expected performance, and take quick action if necessary.

Data: Develop data-breach incident response plans to ensure proper handling and safeguarding of sensitive and proprietary data.
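
To make the prompt recommendation more concrete, the following Python sketch shows two common, partial defenses: keeping trusted instructions separate from untrusted user text, and flagging input that contains well-known injection phrasing. The phrase list, the function names and the role/content message shape are assumptions for illustration. Phrase matching is easy to evade, so a match is best treated as a signal for review rather than as a reliable control.

```python
import re

# Hypothetical phrase list for illustration only; it is not exhaustive and
# is easy to evade, so treat a match as a signal for review, not a verdict.
SUSPICIOUS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the )?(system|previous) prompt",
    r"reveal (your|the) (system prompt|instructions)",
]
_COMPILED = [re.compile(p, re.IGNORECASE) for p in SUSPICIOUS]


def screen_input(user_text: str) -> list[str]:
    """Return the patterns that matched the untrusted text."""
    return [p.pattern for p in _COMPILED if p.search(user_text)]


def build_messages(system_rules: str, user_text: str) -> list[dict]:
    """Keep trusted rules and untrusted input in separate messages
    rather than concatenating them into one string."""
    return [
        {"role": "system", "content": system_rules},
        {"role": "user", "content": user_text},
    ]
```

A caller might log or hold any request for which `screen_input` returns a non-empty list, while still relying on output monitoring and application-level controls as the main safeguards.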

To learn more about privacy and AI:

- “Adopting AI Technology With Data Privacy In Mind” (Corporate Board Member)

- “80% of AI decision makers are worried about data privacy and security” (AI News)

- “AI Risk Management Framework” (NIST)

- “AI and Privacy Issues: What You Need to Know” (eWeek)

- “A regulatory roadmap to AI and privacy” (International Association of Privacy Professionals)

3 - Compliance should also be top of mind

In the “State of Generative AI in the Enterprise” report from Deloitte, 2,800 executives were asked about their generative AI risk-management capabilities. Only 25% said their organizations are either “highly” or “very highly” ready to deal with generative AI governance and risk issues. Governance concerns cited include: lack of confidence in results; intellectual property issues; data misuse; regulatory compliance; and lack of transparency.

“Adopting AI securely and compliantly requires organizations to establish clear boundaries, implement acceptable use policies, establish responsible AI policies for developers, create AI procurement policies, and identify possible misuse scenarios,” Prof. Hernan Huwyler, a governance, risk, and compliance specialist who develops compliance and cybersecurity controls for multinational companies, writes in an article in Digital First.

Attorney Anup Iyer, senior counsel with Moore & Van Allen, advises regular data audits to show how data is being collected and stored, highlighting areas for improvement.

“By integrating privacy-by-design principles in AI initiatives, whether developed in-house or acquired through third party vendors, organizations can focus on the importance of data privacy at the outset and lower the risk of data exposure,” he writes in an article for Reuters.

Meanwhile, keep your eyes open for AI-specific laws and regulations that are in development now and will likely have an impact on compliance in the near future. For example, the European Union recently finalized the landmark Artificial Intelligence Act, marking a significant regulatory step in managing AI technologies within the 27-nation bloc. The law aims to ensure AI developments are human-centric, enhancing societal and economic growth.

The AI Act categorizes AI applications by risk, imposing stricter requirements on those deemed high-risk, such as medical devices and critical infrastructure, while banning certain uses entirely, including social scoring and most biometric surveillance. The regulation also addresses the challenges posed by generative AI models like OpenAI's ChatGPT, requiring developers to disclose training data and adhere to EU copyright laws, and to label AI-generated content clearly.

EU companies operating powerful AI systems will need to conduct risk assessments, report serious incidents, and implement robust cybersecurity measures in order to comply. The act is expected to take effect later this year and will impact EU member states within six months of becoming law.

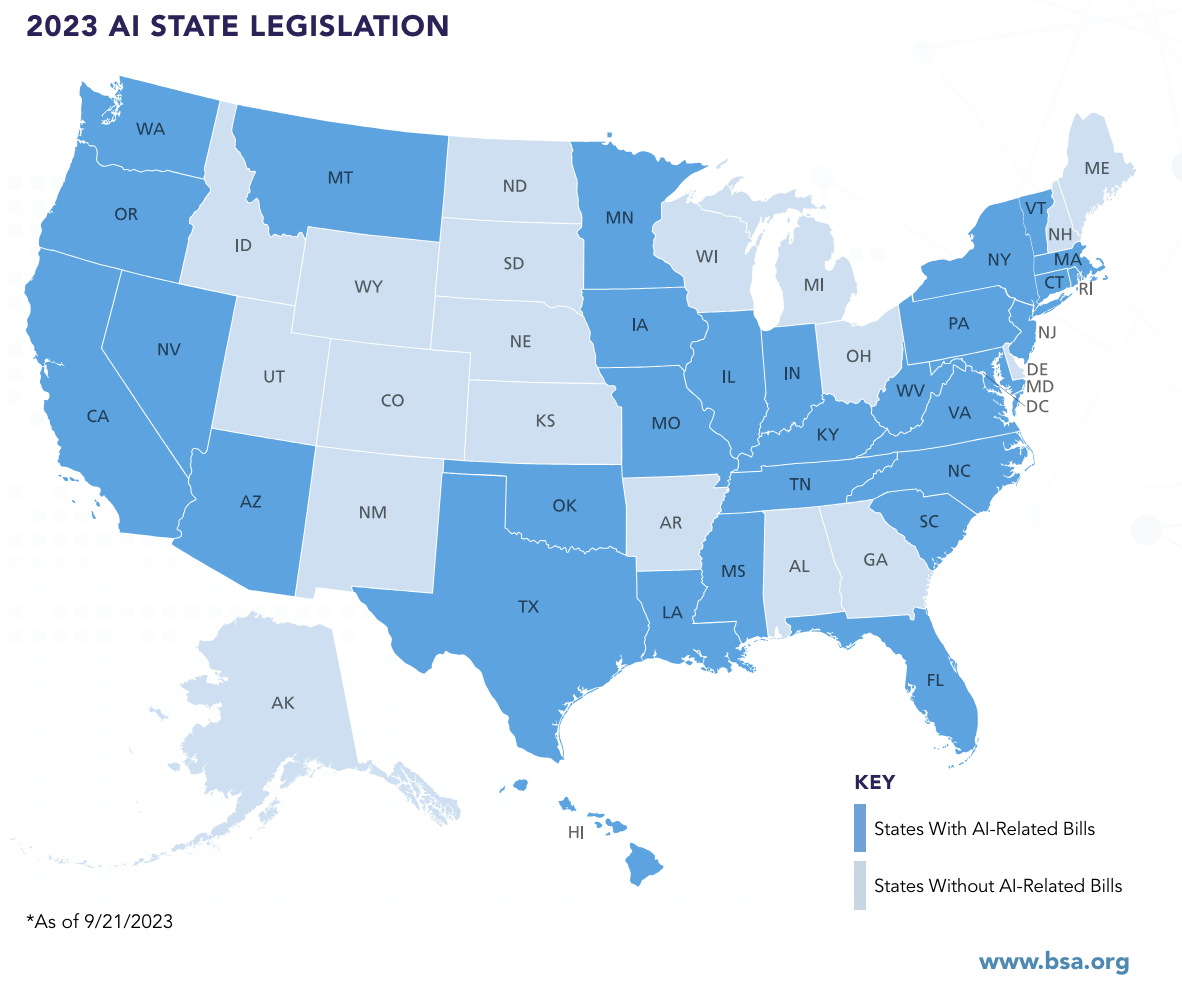

In the U.S., state legislatures have introduced hundreds of AI-related bills, according to the Business Software Alliance, an industry group. Their tracking reveals that in 2023, state legislators introduced more AI-related bills – 191 – than in the previous two years combined. That’s a 440% increase from the number of AI-related bills introduced in 2022. The bills focused on multiple aspects of AI, including regulating specific AI use cases, requiring AI governance frameworks, creating inventories of states’ uses of AI, establishing task forces and committees, and addressing the state governments’ AI use, according to BSA.

In California, a state legislator recently proposed legislation that focuses on extensive testing and regulatory oversight for significant AI models before they are released to the public. Key provisions of the bill include mandatory emergency shutdown capabilities for AI systems and enhanced hacking protections to secure AI from malicious attacks.

Learn more about AI, compliance and legislative actions:

- “Navigating data challenges and compliance in AI initiatives” (Reuters)

- “Practical Tips to Adopt AI Securely and Compliantly” (Digital First)

- “What to know about landmark AI regulations proposed in California” (ABC News)

- “Europe's world-first AI rules get final approval from lawmakers. Here's what happens next” (Associated Press)

- “2023 State AI Legislation Summary” (Business Software Alliance)

4 - Train employees on safe and secure AI

Consumer applications for AI like ChatGPT have caught on very quickly as millions of people embrace these tools. But the surge in interest poses a challenge for business leaders. Accenture notes that the majority of workers are self-educating on the technology through social media and various news outlets, which could lead to a spread of inaccurate information about the use of AI tools. Lacking a reliable source to distinguish correct from incorrect information, employees might inadvertently contribute to the creation of an unmanaged "shadow IT" landscape by using these apps on their personal devices, potentially ushering in fresh cybersecurity risks.

Another study from Salesforce finds more than half of employees are using AI without employer permission.

Organizations need to create employee training programs to combat this. Nihad A. Hassan, a cybersecurity consultant, author and expert in digital forensics and cyber open source intelligence (OSINT), writes in SearchEnterpriseAI that, to effectively safeguard against the cybersecurity threats posed by generative AI technologies, companies need to:

Prioritize employee education: Establish comprehensive training programs that highlight the potential security risks associated with the use of generative AI. Employees need to be aware of how their interactions with AI could potentially expose the organization to cyber threats.

Develop and enforce policies: Formulate specific internal policies governing the use of generative AI. These policies should dictate how AI tools are used and emphasize the importance of human supervision in verifying and refining AI-generated content.

Clarify data handling: The policies should be clear about the types of data that are permissible to share with AI-powered systems. Sensitive information, such as intellectual property, personally identifiable information (PII), protected health information (PHI), and copyrighted materials, should be explicitly forbidden from being input into these tools by employees.
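
A data-handling policy like the one described above is easier to enforce when it is also written down as data. The Python sketch below assumes content already carries classification labels from some existing data-classification process. The label strings, the `Decision` type and `check_submission` are hypothetical names chosen for the example, not part of any cited guidance.

```python
from dataclasses import dataclass

# Categories named in the guidance above; the label strings are hypothetical.
FORBIDDEN_LABELS = frozenset(
    {"intellectual_property", "pii", "phi", "copyrighted"}
)


@dataclass(frozen=True)
class Decision:
    allowed: bool
    blocked_labels: tuple[str, ...]


def check_submission(labels: set[str]) -> Decision:
    """Deny if any classification label on the content is forbidden by policy."""
    blocked = tuple(sorted(labels & FORBIDDEN_LABELS))
    return Decision(allowed=not blocked, blocked_labels=blocked)
```

For example, `check_submission({"pii", "public"})` returns a decision with `allowed` set to `False` and `blocked_labels` equal to `("pii",)`, which a front-end could use to explain to the employee why the request was refused.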

For more information:

- “Employers Train Employees to Close the AI Skills Gap” (Society for Human Resource Management)

- “AI education is key to employee security” (Business Reporter)

- “Three Ways To Prepare Your Workforce for Artificial Intelligence” (IEEE)

- “More than Half of Generative AI Adopters Use Unapproved Tools at Work” (Salesforce)

- “AI and employee privacy: important considerations for employers” (Reuters)

5 - Collaborate and stay on top of industry efforts to develop standards

Don’t go it alone. Security leaders should stay on top of the industry efforts that are shaping AI standards. One example is the “AI Safety Initiative,” which was founded in late 2023 and is hosted by the Cloud Security Alliance (CSA) with Amazon, Anthropic, Google, Microsoft and OpenAI.

“Generative AI is reshaping our world, offering immense promise but also immense risks. Uniting to share knowledge and best practices is crucial. The collaborative spirit of leaders crossing competitive boundaries to educate and implement best practices has enabled us to build the best recommendations for the industry,” said Caleb Sima, industry veteran and Chair of the Cloud Security Alliance AI Safety Initiative, when the news of the alliance was announced.

There are also multiple industry groups that CISOs and other security leaders can join that are working to develop and adhere to safety and security guidelines for artificial intelligence, with a particular focus on generative AI. The Association for the Advancement of Artificial Intelligence (AAAI), the International Association for Pattern Recognition (IAPR), and the IEEE Computational Intelligence Society (IEEE CIS) are dedicated to advancing AI research, education, and innovation. Organizations such as the Association for Computing Machinery (ACM) Special Interest Group on Artificial Intelligence (SIGAI), the Association for Machine Learning and Applications (AMLA), and the Partnership on AI (PAI) strive to bridge connections among AI practitioners, developers, and users across various fields. Groups like Women in AI (WAI), Black in AI (BAI), and the AI for Good Foundation (AI4G) focus on promoting diversity, inclusion, and positive social impact within the AI community.

CISA issued its “Roadmap for AI,” a comprehensive agency-wide strategy that aligns with the U.S. government’s national AI policy. The plan is focused on promoting the positive application of AI to boost cybersecurity measures, safeguarding AI systems from cyber threats and preventing exploitation of AI technologies that could jeopardize critical infrastructure. SANS Institute has also issued guidance on how CISOs can embrace AI for innovation while also mitigating the risks associated with the technology. Security leaders should stay on top of industry guidance and lean on it to prioritize the establishment of internal policies, protect sensitive information, audit AI usage and remain vigilant to evolving security threats.

For more information, check out:

- “Want to Deploy AI Securely? New Industry Group Will Compile AI Safety Best Practices” (Tenable)

- “Roadmap for AI” (CISA)

- “The CISO's Guide to AI: Embracing Innovation While Mitigating Risk” (SANS Institute)

- “World Economic Forum Launches AI Governance Alliance Focused on Responsible Generative AI” (World Economic Forum)

- “AI Alliance will open-source AI models; Meta, IBM, Intel, NASA on board” (9to5Mac)

6 - Proceed with caution

Don’t move too fast. An Accenture poll of more than 3,400 IT executives finds nearly three-quarters (72%) are purposely exercising restraint with generative AI investments. With so much at stake from both a security and a privacy perspective, it is wise not to jump in without careful planning and consideration.

Researchers with the Cloud Security Alliance said in a recent study that they see no sign that enthusiasm for AI will be slowing down soon. But, they warn, implementing AI tools too quickly could lead to mistakes and problems.

"This complex picture underscores the need for a balanced, informed approach to AI integration in cybersecurity, combining strategic leadership with comprehensive staff involvement and training to navigate the evolving cyber threat landscape effectively," the researchers wrote.

For more information:

- “Study: Most orgs adopt AI without usage policies, training” (Tenable)

- “Managing the risks of generative AI: A playbook for risk executives” (PwC)

- “Proactive risk management in Generative AI” (Deloitte)

- “Implementing generative AI with speed and safety” (McKinsey)

- “Managing the Risks of Generative AI” (Harvard Business Review)

Joan Goodchild

Joan Goodchild is a veteran journalist, editor, and writer who has been covering security for more than a decade. She has written for several publications and previously served as editor-in-chief for CSO Online.
