2024-6-11 12:19:53 Author: hackernoon.com

Authors:

(1) Silei Xu, Computer Science Department, Stanford University, Stanford, CA (equal contribution);

(2) Shicheng Liu, Computer Science Department, Stanford University, Stanford, CA (equal contribution);

(3) Theo Culhane, Computer Science Department, Stanford University, Stanford, CA;

(4) Elizaveta Pertseva, Computer Science Department, Stanford University, Stanford, CA;

(5) Meng-Hsi Wu, Computer Science Department, Stanford University, Stanford, CA, Ailly.ai;

(6) Sina J. Semnani, Computer Science Department, Stanford University, Stanford, CA;

(7) Monica S. Lam, Computer Science Department, Stanford University, Stanford, CA.

Table of Links

WikiWebQuestions (WWQ) Dataset

Conclusions, Limitations, Ethical Considerations, Acknowledgements, and References

A. Examples of Recovering from Entity Linking Errors

6 Experiments

In this section, we evaluate WikiSP on WikiWebQuestions and demonstrate how it can be used to complement large language models such as GPT-3.

6.1 Semantic Parser Results

We evaluate our model with two different answer accuracy metrics: (1) exact match (EM): the percentage of examples where the answers of the predicted SPARQL exactly match the gold answers, and (2) Macro F1 score (F1): the average F1 score for answers of each example. The evaluation results are shown in Table 1. Our approach achieves a 65.5% exact match accuracy and a 71.9% F1 score on the WWQ dataset.
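The two metrics can be sketched as follows. This is a minimal illustration, not the authors' evaluation script: the function names, the treatment of two empty answer sets as a match, and the toy answer sets are all assumptions made here.

```python
from typing import List, Set, Tuple


def example_f1(predicted: Set[str], gold: Set[str]) -> float:
    """F1 between the predicted and gold answer sets of one question."""
    if not predicted and not gold:
        return 1.0  # assumption: two empty answer sets count as a match
    overlap = len(predicted & gold)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def evaluate(pairs: List[Tuple[Set[str], Set[str]]]) -> Tuple[float, float]:
    """Return (exact match, macro F1) as fractions over all examples."""
    em = sum(pred == gold for pred, gold in pairs) / len(pairs)
    f1 = sum(example_f1(pred, gold) for pred, gold in pairs) / len(pairs)
    return em, f1


# Toy (predicted, gold) answer sets with placeholder IDs, not taken from WWQ.
pairs = [
    ({"Q1"}, {"Q1"}),        # exact match, F1 = 1
    ({"Q2"}, {"Q2", "Q3"}),  # incomplete: precision 1, recall 0.5, F1 = 2/3
    ({"Q4"}, {"Q5"}),        # wrong answer, F1 = 0
]
em, f1 = evaluate(pairs)
print(f"EM = {em:.3f}, macro F1 = {f1:.3f}")
```

Macro averaging gives partial credit for incomplete answer sets, which is why F1 is expected to be at least as high as EM.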

As a reference, the current state-of-the-art result on the original WebQuestionsSP dataset for Freebase is 78.8% F1 (Yu et al., 2023). The result was obtained with a combination of semantic parsing and retrieval. The WikiWebQuestions dataset is slightly different, as discussed above. More significantly, unlike Freebase, Wikidata does not have a fixed schema and ours is an end-to-end, seq2seq semantic parser.

6.2 Ablation Experiments

6.2.1 Entity Linking

Our first ablation study evaluates the need for entity linking with ReFinED by removing it and having the LLM detect entities as mentions instead. In this experiment, all entity IDs in the training data are replaced by their mentions; during inference, we map the predicted entities to their actual QIDs according to Section 3.2.2.

The results show that replacing the neural entity linker with just using mentions reduces the exact match by 9.1% and the F1 score by 9.3%. This suggests that entity linking is important.

6.2.2 Allowing Mentions as Entities

Our logical form is designed to recover from entity linking errors by allowing entities to be specified by a mention, as an alternative to a QID. Our ablation study on this feature tested two training strategies:

ReFinED. The entity linker tuples are produced by fine-tuned ReFinED, which may be missing entities in the gold target. The data show that generating unseen QIDs is needed for missing entities.

Oracle. The entity linker tuples are exactly all the entities used in the gold. The model would only encounter missing QIDs at test time when ReFinED fails to generate all the necessary QIDs.

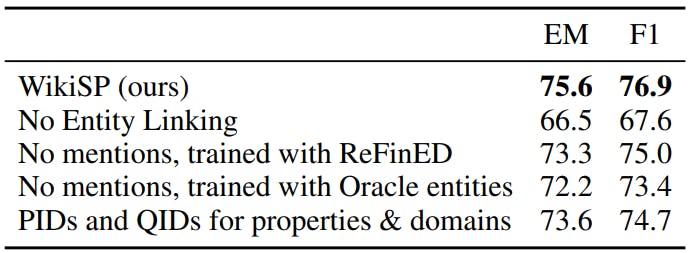

The answer accuracy of the model using entity linked tuples from ReFinED (“No mentions, trained with ReFinED” in Table 2) lags by 2.3% when compared against our best model. The model using Oracle (“No mentions, trained with Oracle entities” in Table 2) lags by 3.4%. These results indicate that allowing mentions is useful for recovering from entity linking errors.

6.2.3 Names vs. IDs for Properties & Domains

Our logical form replaces PIDs with property names, and domain-entity QIDs with the domain names. Here we evaluate the effectiveness of this query format. We compare our approach with the original SPARQL where all properties and entities are represented with PIDs and QIDs. Our ablation study shows that our representation with property names and domain names improves the answer accuracy by 2.0% (Table 2). This shows that LLMs can adapt to changes in query notation with finetuning, and that it is easier to learn names than to remember random IDs. If we did not allow mentions in the predicted logical form, the replacement of QIDs with their names would likely be more significant.
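To make the notation change concrete, the sketch below rewrites a standard Wikidata SPARQL query into a name-based form. It is an illustration only: the underscore-joined name format, the small hand-written label maps, and the helper `to_named_form` are assumptions made here, not the authors' actual conversion code. The entity QID (`Q76`) is left untouched, since only properties and domains are renamed.

```python
import re

# Small hand-written label maps, for illustration only (assumed name format).
PROPERTY_NAMES = {"P19": "place_of_birth", "P31": "instance_of"}
DOMAIN_NAMES = {"Q515": "city"}


def to_named_form(sparql: str) -> str:
    """Replace known PIDs and domain QIDs with readable names."""

    def rename_property(match: re.Match) -> str:
        prefix, pid = match.group(1), match.group(2)
        return f"{prefix}:{PROPERTY_NAMES.get(pid, pid)}"

    def rename_domain(match: re.Match) -> str:
        qid = match.group(1)
        return f"wd:{DOMAIN_NAMES.get(qid, qid)}"

    sparql = re.sub(r"\b(wdt|p|ps|pq):(P\d+)\b", rename_property, sparql)
    return re.sub(r"\bwd:(Q\d+)\b", rename_domain, sparql)


original = "SELECT DISTINCT ?x WHERE { wd:Q76 wdt:P19 ?x. ?x wdt:P31 wd:Q515. }"
named = to_named_form(original)
print(named)
```

IDs that are absent from the maps pass through unchanged, so the rewrite is safe to apply to queries that mix known and unknown identifiers.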

6.3 Complementing GPT-3

LLMs like GPT-3 can answer many questions on general knowledge correctly; however, they may also hallucinate. WWQ is representative of popular questions, so we expect GPT-3 to perform well. We use text-davinci-002 with the temperature set to 0 to evaluate GPT-3’s performance on WWQ.

On the dev set of WWQ, GPT-3 answers 66.4% of the questions correctly and provides incomplete answers to 26.5% of the questions. For example, when asked “What does Obama have a degree in?”, GPT-3 correctly identifies President Obama’s political science degree, but fails to mention his law degree. In total, GPT-3 gives wrong answers to 7.1% of the questions.

For this dev set, we can give definitive answers to 75.6% of the questions with WikiSP (Table 2). For the rest of the questions (24.4%), accounting for the overlap between the GPT-3 and our semantic parser’s results, the percentages of guessing correctly, incompletely, and incorrectly are at 15.2%, 5.5%, and 3.7%, respectively (Figure 2).

In summary, the combination of GPT-3 and WikiSP makes it possible to give a definitive, correct, and complete answer three quarters of the time for the dev set. Users can also benefit from GPT-3’s guesses the rest of the time at a 3.7% error rate, which is about half of the original error rate.
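The arithmetic behind this summary can be checked in a few lines. The percentages are the ones quoted in this section; the variable names are chosen here for illustration.

```python
# Dev-set percentages quoted in Section 6.3.
wikisp_definitive = 75.6
gpt3_fallback = {"correct": 15.2, "incomplete": 5.5, "incorrect": 3.7}
gpt3_alone = {"correct": 66.4, "incomplete": 26.5, "incorrect": 7.1}

# Share of questions that fall back to a GPT-3 guess.
fallback_share = round(sum(gpt3_fallback.values()), 1)

# WikiSP answers plus GPT-3 guesses should cover every dev question.
total = round(wikisp_definitive + fallback_share, 1)

# Error rate of the combined system relative to GPT-3 on its own.
error_ratio = gpt3_fallback["incorrect"] / gpt3_alone["incorrect"]

print(fallback_share, total, round(error_ratio, 2))
```

The ratio 3.7 / 7.1 is roughly 0.52, which is the basis for "about half of the original error rate".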

6.4 Error Analysis

We analyzed the 111 examples in the WWQ dev set where the model failed.

6.4.1 Acceptable Alternative Results (18.0%)

Our analysis shows that 18.0% of the “errors” can actually be deemed to be correct.

Reasonable alternative answers (11.7%). In 11.7% of the cases, the model predicts an alternative interpretation of the question and returns a reasonable answer that is different from the gold. For example, the gold for the question “what did Boudicca do?” uses the position held property, while the model predicts the occupation property. Both are considered valid answers to the question.

Reasonable alternative SPARQL but no answer was retrieved (6.3%). In another 6.3% of cases, the model predicts a reasonable alternative SPARQL, but the SPARQL returns no answer. Sometimes, since the information for the “correct” property is missing, the question is represented with a similar property. For example, since the residence property is missing for Patrick Henry, the gold SPARQL for “where did Patrick Henry live?” uses place of birth instead, while our model predicts residence.

6.4.2 Errors in Entity Linking (35.1%)

The biggest source of errors is entity linking. The entity linker failed to provide the correct entities in 35.1% of the failed examples. While WikiSP can potentially recover from missing entities, it cannot recover from incorrect entities. This is especially common for character roles, as some character roles have different entities for books and movies or even different series of movies. Sometimes WikiSP located the correct mention from the question, but the lookup failed. For example, the model located the mention of the event “allied invasion of France” in the question “where did the allied invasion of France take place?”, but failed to find the corresponding entity in Wikidata by name.

6.4.3 Errors Beyond Entity Linking

Semantic parsing in Wikidata is challenging as there are no predefined schemas, and there are 150K domains and 3K applicable properties. Some representative mistakes include the following:

Wrong property (17.1%). 17.1% of the errors are caused by predicting the wrong property. Some of the examples require background knowledge to parse. For example, the query for the question “what did martin luther king jr do in his life?” should return the value of movement, while the model predicts occupation. Properties are a challenge in Wikidata because, as illustrated here, which property to predict depends on the entity itself.

Missing domain constraint (5.4%). Another common problem is missing the domain constraint. For example, the model correctly identifies that the property shares border with should be used for the question “what countries are around Egypt?”. However, it does not limit the answer to countries only, so extra entities are returned.
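The difference between the two queries in the Egypt example can be sketched as below. This is a simplified illustration in standard Wikidata SPARQL rather than the paper's name-based logical form. The helper `neighbors_query` is hypothetical, and the domain constraint is approximated here with a direct instance of (`P31`) triple, which may differ from how the gold queries express it. The identifiers used are `Q79` (Egypt), `P47` (shares border with), and `Q6256` (country).

```python
from typing import Optional


def neighbors_query(entity_qid: str, domain_qid: Optional[str] = None) -> str:
    """Build a query for entities sharing a border with entity_qid.

    When domain_qid is given, answers are restricted to instances of that
    domain; without it, every bordering entity is returned.
    """
    patterns = [f"wd:{entity_qid} wdt:P47 ?x."]
    if domain_qid is not None:
        # Domain constraint: keep only answers of the requested type.
        patterns.append(f"?x wdt:P31 wd:{domain_qid}.")
    return "SELECT DISTINCT ?x WHERE { " + " ".join(patterns) + " }"


unconstrained = neighbors_query("Q79")          # shape of the failing prediction
constrained = neighbors_query("Q79", "Q6256")   # limited to countries

print(unconstrained)
print(constrained)
```

The unconstrained query can return any entity that borders Egypt, which is the source of the extra answers described above.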
