2024-6-11 09:22:33 Author: hackernoon.com

Authors:

(1) Silei Xu, Computer Science Department, Stanford University, Stanford, CA, with equal contribution {[email protected]};

(2) Shicheng Liu, Computer Science Department, Stanford University, Stanford, CA, with equal contribution {[email protected]};

(3) Theo Culhane, Computer Science Department, Stanford University, Stanford, CA {[email protected]};

(4) Elizaveta Pertseva, Computer Science Department, Stanford University, Stanford, CA {[email protected]};

(5) Meng-Hsi Wu, Computer Science Department, Stanford University, Stanford, CA, and Ailly.ai {[email protected]};

(6) Sina J. Semnani, Computer Science Department, Stanford University, Stanford, CA {[email protected]};

(7) Monica S. Lam, Computer Science Department, Stanford University, Stanford, CA {[email protected]}.

5 Implementation

This section discusses the implementation details of the entity linker and the WikiSP semantic parser.

5.1 Entity Linking

We use ReFinED (Ayoola et al., 2022) for entity linking, which is the current state of the art for WebQuestionsSP. As discussed before, Wikidata treats many common terms such as “country” as named entities and assigns them QIDs. To fine-tune ReFinED to learn such terms, we add the question and entity pairs from the training set of WikiWebQuestions to the data used to train ReFinED’s questions model.

We run 10 epochs of fine-tuning using the default hyperparameters suggested by Ayoola et al. (2022). For each identified entity, we provide the mention in the original utterance, the QID, and its domain in plain text. This information is appended to the utterance before it is fed into the neural semantic parsing model.
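As a rough illustration of how linked-entity information might be appended to an utterance, here is a minimal sketch. The `LinkedEntity` class, the `append_entities` function, and the separator layout are all hypothetical; the text only states that the mention, the QID, and the plain-text domain are provided, not how they are serialized.

```python
from dataclasses import dataclass


@dataclass
class LinkedEntity:
    mention: str  # span as it appears in the original utterance
    qid: str      # Wikidata identifier returned by the entity linker
    domain: str   # plain-text domain of the entity


def append_entities(utterance: str, entities: list) -> str:
    """Append entity information to the utterance.

    The "| entities:" layout is an assumption made for this sketch,
    not the serialization used by the authors.
    """
    if not entities:
        return utterance
    parts = [f"{e.mention} ({e.domain}) = {e.qid}" for e in entities]
    return f"{utterance} | entities: " + "; ".join(parts)


# Example usage with one linked entity.
example = append_entities(
    "what is the capital of Canada?",
    [LinkedEntity(mention="Canada", qid="Q16", domain="country")],
)
```

Keeping the mention next to the QID lets the parser copy either one into its output, which matters when the linker is wrong or incomplete.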

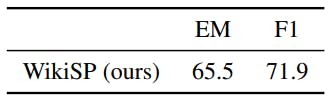

5.2 The WikiSP Semantic Parser

We prepare the training data with entities provided by the fine-tuned ReFinED. Compared with the gold entities, ReFinED provides extra entities in 215 cases and misses at least one entity in 137 cases. When ReFinED fails to produce the correct entities, we replace the missing QIDs in the logical form with the corresponding mention of the entity in the question. During evaluation, if the model predicts a mention of an entity instead of a QID, we look up the QID using the Wikidata “wbsearchentities” API [4].
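The two steps described above (substituting mentions for missing QIDs at training time, and resolving predicted mentions at evaluation time) could be sketched as follows. The function names, the `wd:Q…` pattern, and the quoted-string surface form for a mention are assumptions made for illustration. Only the `wbsearchentities` action and its `search`, `language`, `format`, and `limit` parameters come from the Wikidata API itself.

```python
import json
import re
import urllib.parse
import urllib.request
from typing import Optional

WIKIDATA_API = "https://www.wikidata.org/w/api.php"
QID_PATTERN = re.compile(r"\bwd:(Q\d+)\b")


def mask_missing_qids(sparql: str, linked_qids: set, gold_mentions: dict) -> str:
    """Replace QIDs the linker did not return with the entity's mention."""
    def substitute(match):
        qid = match.group(1)
        if qid in linked_qids or qid not in gold_mentions:
            return match.group(0)  # keep the QID unchanged
        return f'"{gold_mentions[qid]}"'  # hypothetical mention format
    return QID_PATTERN.sub(substitute, sparql)


def search_url(mention: str, language: str = "en") -> str:
    """Build a wbsearchentities request URL for a mention."""
    params = {"action": "wbsearchentities", "search": mention,
              "language": language, "format": "json", "limit": 1}
    return f"{WIKIDATA_API}?{urllib.parse.urlencode(params)}"


def first_qid(response: dict) -> Optional[str]:
    """Return the top-ranked QID from a wbsearchentities response."""
    hits = response.get("search", [])
    return hits[0]["id"] if hits else None


def lookup_qid(mention: str) -> Optional[str]:
    """Resolve a mention to a QID over the network (top hit only)."""
    request = urllib.request.Request(
        search_url(mention), headers={"User-Agent": "wikisp-sketch/0.1"})
    with urllib.request.urlopen(request, timeout=10) as reply:
        return first_qid(json.load(reply))
```

Taking only the top search hit is a simplification; the text does not say how ambiguous mentions are ranked.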

We fine-tune LLaMA with 7B parameters because it has been shown to perform well despite its relatively small size (Touvron et al., 2023). We include the Alpaca (Taori et al., 2023) instruction-following data, which was derived using the self-instruct (Wang et al., 2023) method, in our training data.

The training data samples in WikiWebQuestions start with the following instruction: “Given a Wikidata query with resolved entities, generate the corresponding SPARQL. Use property names instead of PIDs.” We concatenate the resolved entities and the user utterance as input. We up-sample the WikiWebQuestions few-shot set 5 times and train for 3 epochs with a learning rate of 2e-5 and a warmup ratio of 0.03.
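The data preparation described here can be sketched in a few lines. The instruction string, the up-sampling factor, and the hyperparameter values come from the text. The record field names (`instruction`, `input`, `output`), the dictionary keys, and the way the sets are combined are assumptions for illustration only.

```python
INSTRUCTION = (
    "Given a Wikidata query with resolved entities, generate the "
    "corresponding SPARQL. Use property names instead of PIDs."
)

# Values stated in the text; the key names are illustrative.
HYPERPARAMS = {"epochs": 3, "learning_rate": 2e-5, "warmup_ratio": 0.03}


def build_sample(utterance_with_entities: str, sparql: str) -> dict:
    """Build one instruction-tuning record (hypothetical field names)."""
    return {
        "instruction": INSTRUCTION,
        "input": utterance_with_entities,
        "output": sparql,
    }


def build_training_set(base_samples, few_shot_samples, few_shot_factor=5):
    """Combine the base data with the few-shot set repeated few_shot_factor times."""
    return list(base_samples) + list(few_shot_samples) * few_shot_factor


# Example usage with placeholder records.
sample = build_sample("what is the capital of Canada?", "SELECT ?x WHERE { ... }")
training_set = build_training_set(["alpaca_record"], ["few_shot_a", "few_shot_b"])
```

Repeating the few-shot records is the simplest form of up-sampling; a weighted sampler would give the same expected proportions without duplicating data in memory.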

5.3 Executing Queries on Wikidata

SPARQL queries are used to retrieve answers from the Wikidata SPARQL endpoint [5]. Since Wikidata is actively being updated, the gold SPARQL can be easily re-executed to acquire up-to-date answers, allowing the benchmark to compare with forthcoming iterations of large language models.
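Re-executing a query against the public endpoint might look like the sketch below. It assumes the endpoint URL `https://query.wikidata.org/sparql` and the standard SPARQL JSON results layout; the function names and the default answer variable `x` are hypothetical. Queries written with property names would first need to be mapped back to PIDs, a step not shown here.

```python
import json
import urllib.parse
import urllib.request

SPARQL_ENDPOINT = "https://query.wikidata.org/sparql"


def extract_answers(result: dict, variable: str = "x") -> set:
    """Collect the values bound to one variable in a SPARQL JSON result."""
    bindings = result.get("results", {}).get("bindings", [])
    return {row[variable]["value"] for row in bindings if variable in row}


def run_query(sparql: str) -> dict:
    """Send a query to the Wikidata endpoint and return the parsed JSON."""
    params = urllib.parse.urlencode({"query": sparql, "format": "json"})
    request = urllib.request.Request(
        f"{SPARQL_ENDPOINT}?{params}",
        headers={"User-Agent": "wikisp-sketch/0.1",
                 "Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(request, timeout=30) as reply:
        return json.load(reply)


def refresh_gold_answers(gold_sparql: str) -> set:
    """Re-run a gold query to obtain answers that reflect current Wikidata."""
    return extract_answers(run_query(gold_sparql))
```

For example, `refresh_gold_answers("SELECT ?x WHERE { wd:Q16 wdt:P36 ?x. }")` would ask for the capital of Canada. Because answers are returned as a set of entity URIs, predicted and gold results can be compared regardless of row order.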

[4] https://www.wikidata.org/w/api.php?action=wbsearchentities

[5] https://www.wikidata.org/wiki/Wikidata:SPARQL_query_service
