2024-6-20 08:43:14 Author: hackernoon.com

Authors:

(1) Xiaohan Ding, Department of Computer Science, Virginia Tech, (e-mail: [email protected]);

(2) Mike Horning, Department of Communication, Virginia Tech, (e-mail: [email protected]);

(3) Eugenia H. Rho, Department of Computer Science, Virginia Tech, (e-mail: [email protected]).


Study 1 shows that semantic polarization in broadcast media language has been increasing in recent years, peaking close to 2020, when the two major networks used starkly contrasting language to discuss identical words (Study 2). A similar partisan divide is observed online, where public discourse is becoming increasingly fraught with political polarization (Chinn, Hart, and Soroka 2020). To what degree is broadcast media one of the causes of online polarization in today’s agora of public discourse on social media? Do temporal changes in semantic polarity in broadcast media significantly predict subsequent temporal changes in semantic polarization across social media discourse?

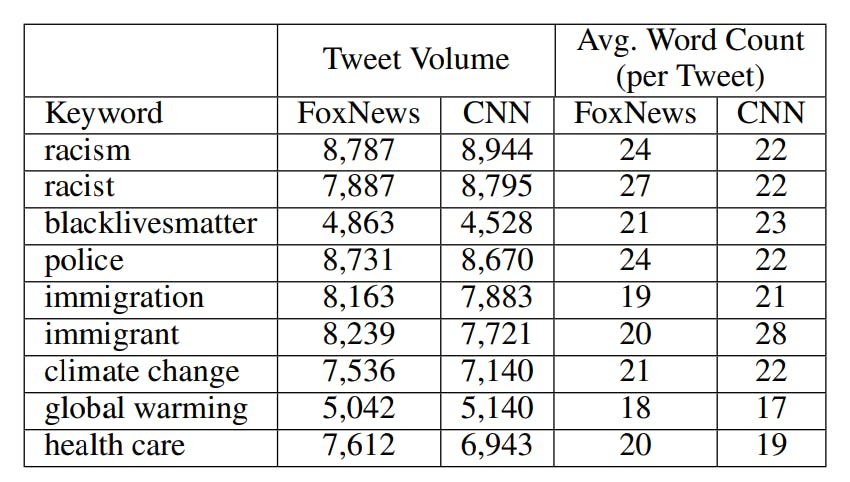

To answer these questions, we used Granger causality to test whether the semantic polarity between CNN and Fox News broadcast media language over the last decade Granger-causes the semantic polarity between Twitter users who follow and mention @CNN or @FoxNews in tweets that contain one of nine keywords (“racist”, “racism”, “police”, “blacklivesmatter”, “immigrant”, “immigration”, “climate change”, “global warming”, and “health care”) over the same time period.

Twitter Dataset

For our social media corpus, we collected all tweets posted between 2010 and 2020 that met the following criteria: (1) written by users who follow both @CNN and @FoxNews; (2) mentioning or replying to either @CNN or @FoxNews; and (3) containing one of the nine keywords. Our final corpus comprises 131,627 tweets spanning more than a decade. Tweet volume and mean word count per tweet are shown in Table 5.
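The three selection criteria can be expressed as a single predicate over tweet records. The sketch below is illustrative only: the `Tweet` record and its field names are hypothetical (not a real Twitter/X API schema), and case-insensitive substring matching for keywords is an assumption, since the matching rule is not specified.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Hypothetical tweet record: these field names are illustrative and are NOT
# the schema of any real Twitter/X API response.
@dataclass
class Tweet:
    text: str
    author_follows: set[str]                         # accounts the author follows
    mentions: set[str] = field(default_factory=set)  # accounts @-mentioned in the tweet
    in_reply_to: str | None = None                   # account replied to, if any


NEWS_ACCOUNTS = {"CNN", "FoxNews"}
KEYWORDS = ("racist", "racism", "police", "blacklivesmatter", "immigrant",
            "immigration", "climate change", "global warming", "health care")


def matches_criteria(tweet: Tweet) -> bool:
    """Return True if the tweet satisfies all three selection criteria."""
    # (1) the author follows both @CNN and @FoxNews
    follows_both = NEWS_ACCOUNTS <= tweet.author_follows
    # (2) the tweet mentions, or replies to, either account
    addresses_news = bool(NEWS_ACCOUNTS & tweet.mentions) or tweet.in_reply_to in NEWS_ACCOUNTS
    # (3) the tweet contains at least one topical keyword
    #     (assumption: case-insensitive substring match)
    text = tweet.text.lower()
    has_keyword = any(kw in text for kw in KEYWORDS)
    return follows_both and addresses_news and has_keyword
```

Substring matching means “racist” also matches “racists” and “#blacklivesmatter”; a stricter tokenizer-based match would be an equally reasonable choice.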

Hypothesis Testing with Granger Causality

To test whether temporal patterns in the semantic polarity of TV news language significantly predict semantic polarity trends among Twitter users, we conducted a Granger causality analysis. Granger causality is a statistical test of whether one time series X is useful in forecasting another time series Y (Granger 1980). Simply put, for two aligned time series X and Y, X Granger-causes Y if past values $X_{t-l} \in X$ lead to better predictions of the current value $Y_t \in Y$ than do the past values $Y_{t-l} \in Y$ alone, where $t$ is the time point and $l$ is the lag, i.e., the number of time units after which changes in X are observed in Y. Using this logic, we tested the following hypotheses:
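In its standard bivariate form, this comparison is an F-test between two autoregressions fitted on the same $T$ usable time points. The paper does not spell out its regression specification, so the following is the textbook formulation rather than the authors' exact model; here $l$ is the maximum lag included.

```latex
\begin{aligned}
\text{restricted:}\quad & Y_t = \alpha_0 + \sum_{k=1}^{l} \alpha_k Y_{t-k} + \varepsilon_t \\
\text{unrestricted:}\quad & Y_t = \alpha_0 + \sum_{k=1}^{l} \alpha_k Y_{t-k} + \sum_{k=1}^{l} \beta_k X_{t-k} + \eta_t \\
H_0:\quad & \beta_1 = \beta_2 = \cdots = \beta_l = 0 \\
\text{test statistic:}\quad & F = \frac{(\mathrm{RSS}_r - \mathrm{RSS}_u)/l}{\mathrm{RSS}_u/(T - 2l - 1)} \sim F(l,\ T - 2l - 1) \ \text{under } H_0
\end{aligned}
```

Rejecting $H_0$ (e.g., $p < 0.05$) means the lagged values of X reduce the residual sum of squares for Y by more than chance would, which is what "X Granger-causes Y" asserts.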

H1. Semantic polarization on TV news significantly Granger-causes semantic polarization on Twitter.

H2. Semantic polarization on Twitter significantly Granger-causes semantic polarization on TV.

Method and Analysis

First, we computed monthly semantic polarity (SP) scores from 2010 to 2020, for a total of 132 time points (12 months × 11 years), for each topical keyword in both the televised closed captions and the Twitter data. We then built 18 time series (9 keywords × 2 sources) from these monthly SP values and paired each TV news time series with the Twitter time series for the same keyword, giving a total of 9 causality tests per hypothesis. As a prerequisite for Granger causality testing, we conducted the Augmented Dickey-Fuller (ADF) test (Cheung and Lai 1995) to ensure that each time series was stationary, i.e., that its values were not merely a function of time (see Appendix). We used serial differencing (Cheung and Lai 1995) to obtain stationarity for $\Delta TwS_{\text{racism}}$ and $\Delta TvS_{\text{BLM}}$, the only two time series with an ADF test value greater than the 5% threshold. In total, we conducted 18 Granger causality analyses between the monthly SP values of broadcast media language ($SP_{tv}$) and of Twitter ($SP_{tw}$), one per keyword and direction, to determine the extent to which semantic polarization on TV drives semantic polarization on Twitter (H1) and vice versa (H2). For both hypotheses, we tested lags ranging from 1 to 12 months.
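The stationarity check, differencing, and lag-by-lag Granger test can be sketched with NumPy and SciPy. This is a simplified illustration, not the authors' code: the ADF here uses a fixed number of augmentation lags and the approximate asymptotic 5% critical value of -2.86 for a constant-only regression, whereas a full analysis would typically use a library routine such as `statsmodels.tsa.stattools.adfuller` (MacKinnon p-values, automatic lag selection) or `grangercausalitytests`. All function names below are hypothetical helpers.

```python
import numpy as np
from scipy import stats

ADF_CRIT_5PCT = -2.86  # approx. asymptotic 5% critical value (constant, no trend)


def _lagmat(series, lags, start):
    """Columns series[t-1], ..., series[t-lags] for t = start, ..., len(series) - 1."""
    n = len(series)
    return np.column_stack([series[start - k:n - k] for k in range(1, lags + 1)])


def _ols(y, X):
    """OLS fit; returns (residual sum of squares, coefficients)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid), beta


def adf_statistic(y, p=1):
    """Simplified ADF t-statistic with a constant and p lagged differences."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    target = dy[p:]                              # Δy_t
    cols = [np.ones(len(target)), y[p:-1]]       # constant, y_{t-1}
    if p > 0:
        cols.append(_lagmat(dy, p, p))           # Δy_{t-1}, ..., Δy_{t-p}
    X = np.column_stack(cols)
    rss, beta = _ols(target, X)
    sigma2 = rss / (len(target) - X.shape[1])
    se_gamma = np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[1, 1])
    return beta[1] / se_gamma                    # t-stat on the y_{t-1} coefficient


def make_stationary(y, p=1):
    """First-difference y once if the ADF test does not reject a unit root at 5%."""
    y = np.asarray(y, dtype=float)
    return y if adf_statistic(y, p) < ADF_CRIT_5PCT else np.diff(y)


def granger_pvalue(effect, cause, lag):
    """F-test p-value that lags 1..lag of `cause` improve the prediction of `effect`."""
    effect = np.asarray(effect, dtype=float)
    cause = np.asarray(cause, dtype=float)
    target = effect[lag:]
    X_r = np.column_stack([np.ones(len(target)), _lagmat(effect, lag, lag)])  # restricted
    X_u = np.column_stack([X_r, _lagmat(cause, lag, lag)])                    # unrestricted
    rss_r, _ = _ols(target, X_r)
    rss_u, _ = _ols(target, X_u)
    df_num, df_den = lag, len(target) - X_u.shape[1]                          # l, T - 2l - 1
    f_stat = ((rss_r - rss_u) / df_num) / (rss_u / df_den)
    return float(stats.f.sf(f_stat, df_num, df_den))


def significant_lags(cause, effect, max_lag=12, alpha=0.05):
    """Lags (months) at which `cause` Granger-causes `effect` at level alpha."""
    n = min(len(cause), len(effect))  # align lengths if one series was differenced
    cause, effect = np.asarray(cause)[-n:], np.asarray(effect)[-n:]
    return [lag for lag in range(1, max_lag + 1)
            if granger_pvalue(effect, cause, lag) < alpha]
```

For one keyword, with hypothetical monthly arrays `sp_tv` and `sp_tw`, H1 would correspond to `significant_lags(make_stationary(sp_tv), make_stationary(sp_tw))` and H2 to the same call with the arguments swapped. Testing 12 lags per pair inflates the chance of a spurious hit, so a multiple-comparison correction is worth considering.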

Results

We find that, for several topics, changes in semantic polarity patterns on Twitter can be significantly predicted by semantic polarity trends in broadcast media language, and vice versa. Table 6 shows the Granger causality results for Hypotheses 1 and 2 with the corresponding significant lag lengths (p < 0.05).

Hypothesis 1. As shown in Table 6, semantic polarity trends in broadcast media’s discussion of racism (keywords: “racism”, “racist”) and immigration (keywords: “immigration”, “immigrant”) significantly forecast semantic polarity shifts in how people discuss these topics on Twitter, with time lags of 2 and 3 months, respectively. In other words, it takes about 2 months for the influence of semantic polarization in broadcast media language to manifest in how Twitter users semantically diverge in their discussion of racism, and about 3 months for keywords linked to immigration.

Hypothesis 2. To test whether influence also flows in the opposite direction, from Twitter conversations to televised news, we tested H2. Our results show that for “climate change” and “global warming”, semantic polarization in how people discuss these keywords on Twitter Granger-causes how CNN and Fox News semantically diverge in their use of these words on their shows, with a lag of 3 months. This may be because discussions of climate change and global warming have become increasingly political and less scientific on Twitter (Jang and Hart 2015; Chinn, Hart, and Soroka 2020), raising the topic’s online prominence through controversial political sound bites that may, in turn, draw attention from mainstream media outlets as a source for news reporting.

Bidirectional Causality. For Black Lives Matter, causality is bidirectional: semantic polarity in how the keyword “blacklivesmatter” is discussed on televised news programs shapes semantic polarity trends in how users talk about the topic, and vice versa. This may be because BLM is an online social movement, largely powered by discursive networks on social media. Hence, while users may draw on the narratives generated by traditional media outlets in their Twitter discussions, journalists and broadcast media companies may also be attuned to how online users discuss BLM issues on social media.

Words That Characterize the Relational Trends in Semantic Polarization Between Broadcast Media and Twitter Discourse

To understand the role language plays in how semantic polarity trends in one medium forecast polarization patterns in the other, we identified, for each topical keyword with a significant Granger-causality result, the contextual tokens most characteristic of how that keyword is discussed on TV vs. Twitter, separated by the corresponding lag lengths shown in Table 6.

Given the 132 time points (12 months × 11 years) denoted $t_{ij}$, where $i \in [1, 12]$ is the month, $j \in [1, 11]$ is the year, and $l \in [1, 8]$ is the lag length in months corresponding to significant Granger coefficients, we first re-organized the TV news data into time points $t_{i'j}$ with $i' \in [1, 12 - l]$ (i.e., months up to the final month minus $l$) and the Twitter data into time points $t_{i''j}$ with $i'' \in [1 + l, 12]$ (i.e., months from the first month plus $l$). For topical keywords where semantic polarity on Twitter Granger-causes semantic polarity trends in broadcast media language, we interchange the values of $i'$ and $i''$. Next, following an approach similar to Study 2, we separately fed the TV news and Twitter corpora into a BERT classifier and used Integrated Gradients to identify the top 10 most predictive tokens for each topical keyword.
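One reading of the lag-based re-organization is a per-year month filter: the leading medium keeps months $1$ to $12 - l$ and the lagging medium keeps months $1 + l$ to $12$, with the roles swapped when Twitter leads. The sketch below assumes documents are stored as hypothetical `(month, year, text)` tuples; the helper names are illustrative, not from the paper.

```python
from __future__ import annotations


def lagged_month_windows(lag: int, tv_leads: bool = True) -> tuple[range, range]:
    """Return (tv_months, twitter_months) within each year for lag l.

    The leading medium keeps months i' in [1, 12 - l]; the lagging medium keeps
    months i'' in [1 + l, 12]. tv_leads=False swaps them (Twitter -> TV).
    """
    if not 1 <= lag <= 11:
        raise ValueError("lag must be between 1 and 11 months")
    leading = range(1, 12 - lag + 1)
    lagging = range(1 + lag, 12 + 1)
    return (leading, lagging) if tv_leads else (lagging, leading)


def split_corpora(tv_docs, tw_docs, lag, tv_leads=True):
    """Filter hypothetical (month, year, text) records to the lag-shifted windows."""
    tv_months, tw_months = lagged_month_windows(lag, tv_leads)
    tv_kept = [doc for doc in tv_docs if doc[0] in tv_months]
    tw_kept = [doc for doc in tw_docs if doc[0] in tw_months]
    return tv_kept, tw_kept
```

With a 3-month lag and TV leading, TV documents from January through September are compared with tweets from April through December of the same year.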

Tables 8-11 (Appendix) list the top 10 contextual tokens most attributive of how each topical keyword is used, either by a speaker on CNN or Fox News or by a user responding to @CNN or @FoxNews in a tweet, during time periods separated by the monthly lag lengths corresponding to significant Granger-causal relationships.
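Integrated Gradients attributes a prediction to its inputs by averaging gradients along a straight path from a baseline to the input and scaling by the input difference. The sketch below shows the technique on a toy differentiable model standing in for the BERT classifier (the toy model, the zero baseline, and summing signed scores per token string across documents are all assumptions; the exact aggregation used in Study 2 is not given here). Libraries such as Captum provide production implementations for PyTorch models.

```python
from collections import defaultdict

import numpy as np


def integrated_gradients(x, baseline, grad_fn, steps=50):
    """Midpoint Riemann approximation of Integrated Gradients.

    IG(x) = (x - x') * mean_k grad_fn(x' + a_k (x - x')), a_k = (k + 0.5) / steps
    """
    alphas = (np.arange(steps) + 0.5) / steps
    grads = np.stack([grad_fn(baseline + a * (x - baseline)) for a in alphas])
    return (x - baseline) * grads.mean(axis=0)


# Toy stand-in for a classifier (NOT BERT): p(class) = sigmoid(sum_t w . e_t),
# where row e_t of `emb` is the embedding of token t.
def toy_model(emb, w):
    return 1.0 / (1.0 + np.exp(-np.sum(emb @ w)))


def toy_grad(emb, w):
    p = toy_model(emb, w)
    return np.broadcast_to(p * (1.0 - p) * w, emb.shape)  # same gradient for every token row


def top_k_tokens(docs, k=10):
    """Sum per-token attributions across documents; return the k highest-scoring tokens."""
    totals = defaultdict(float)
    for tokens, attributions in docs:
        for tok, score in zip(tokens, attributions):
            totals[tok] += float(score)
    return [tok for tok, _ in sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:k]]


emb = np.array([[1.0, 0.5], [-0.5, 2.0], [0.2, -1.0]])
w = np.array([0.8, -0.3])
ig = integrated_gradients(emb, np.zeros_like(emb), lambda e: toy_grad(e, w))
token_scores = ig.sum(axis=1)  # collapse embedding dimensions to one score per token
print(top_k_tokens([(["trump", "history", "issue"], token_scores)], k=2))  # ['trump', 'issue']
```

A useful sanity check is completeness: the attributions summed over all inputs should approximately equal the model output at the input minus the output at the baseline.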

As shown by the bolded tokens, a fair proportion of the top 10 words most characteristic of how televised news programs (CNN vs. Fox News) and Twitter users (replying to @CNN vs. @FoxNews) discuss topical keywords overlap, suggesting that these tokens are semantically indicative of how linguistic polarity in one medium manifests in another over time. For example, the following tokens predictive of how CNN broadcast news contextually uses the keyword “racism” also appear among those predictive of how Twitter users replying to @CNN use “racism” in their tweets: “believe”, “today”, “president”, “Trump”, “campaign”, “history”, and “issue”.
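The overlap between a TV top-10 list and the corresponding Twitter top-10 list can be made explicit with simple set operations. This is a small illustrative helper; the Jaccard index is an added summary measure that the authors do not report, and the case-insensitive comparison is an assumption.

```python
def shared_tokens(top_a, top_b):
    """Tokens present in both top-k lists (case-insensitive), in top_a's order."""
    in_b = {tok.lower() for tok in top_b}
    return [tok for tok in top_a if tok.lower() in in_b]


def jaccard(top_a, top_b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| of two token lists treated as sets."""
    a = {tok.lower() for tok in top_a}
    b = {tok.lower() for tok in top_b}
    union = a | b
    return len(a & b) / len(union) if union else 0.0
```

For two top-10 lists, a Jaccard value near 0 would indicate the kind of divergence described for “blacklivesmatter”, while values near 1 indicate near-identical contextual vocabularies.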

For “blacklivesmatter”, where the Granger-causal relationship is bidirectional, the words in broadcast news most predictive of polarized discourse on Twitter differ from the Twitter tokens most predictive of polarity in televised news language. For example, the tokens (“violence”, “matter”, “white”, “support”, “blacks”) most predictive of how Fox News programs talk about BLM, which appear three months later in tweets replying to @FoxNews, are strikingly different from the words (“blacks”, “cities”, “democrats”, “lives”, “moral”, “organization”, and “shoot”) that best characterize tweets replying to @FoxNews, which appear three months later on the Fox News channel.
