2024-06-29 00:00:19 Author: securityboulevard.com

The Eureka Moment: Discovering Application Traffic Observability

by David Meltzer

If you’ve been part of a network segmentation or Zero Trust architecture planning project or a data center or application migration initiative, the following scenario probably rings true.

One of your first tasks is establishing a granular access policy for applications in your environment. You start by asking the application owners about the traffic going to and from their applications.

More specifically – what IP addresses (systems) and ports (services) does the application need to communicate with in order to work?

Often, the reply you get is that they don’t really know. It’s simply not on the application owner’s radar because what they do on a daily basis doesn’t require that level of technical detail.

When you’re met with a blank stare, you may try to look for documentation that will tell you what you need to know.

But if you find an architecture diagram from when the application was deployed several years ago, you can bet it’s no longer accurate.

And you’ve learned from past painful lessons that if you implement a network policy based on old information, applications are going to start to break.

Observability is the lynchpin to success

Whether you’re trying to segment assets where they are or move them to a new location, implementing any of these initiatives is difficult because you don’t know what the expected behavior of the affected applications should be.

Let’s consider segmentation. Because application owners don’t have granular observability into application traffic, communication is often more open than it needs to be. This unregulated network access enables malicious lateral movement and data exfiltration, and is the compelling event for many organizations to adopt a Zero Trust network architecture model. Understanding your application traffic tells you to what extent your network segmentation rules are overly permissive and create lateral movement risk, and whether you need more granular access policies (that is, tighter network segmentation) to limit that access.

A similar problem exists for data center and application migration projects. Whether you’re migrating from one data center to another or moving an application from a data center to the cloud, you need to understand the behavior of the application traffic. Migration is driven by the need for greater capacity, efficiency, and utilization. But moving applications, services, and data without knowing the communication dependencies can disrupt your operations and create significant security risks.

Piecemeal approaches are limiting

Organizations traditionally have had no good way to gain application traffic observability across their entire multi-cloud and hybrid network. Looking for what you need to know using most tools can feel like panning for gold, sifting through massive expanses of data until you discover those few valuable nuggets of information.

For example, you may have a network traffic monitoring system already deployed. But typically, that system only covers parts of the network, which means you also need multiple tools: one for the data center and a different one for each of your cloud environments. You try to piece all the data together into a complete picture, but you still have gaps in observability, such as the traffic going between clouds.

There’s also the challenge of different teams across the organization that need access to the data. The NetOps, SecOps, and CloudOps teams each have access to different tools and views of data, but the application owner is still in the dark.

Another strategy I’ve seen is to try to gather the raw data, put it into a data lake, and write queries to pull out data for individual application owners. The network team then sends spreadsheets to the application owners, who annotate them and send them back to the operations team. It’s a manual, multi-step process that consumes precious staff time, is error-prone, and is cumbersome to maintain.

The Eureka Moment

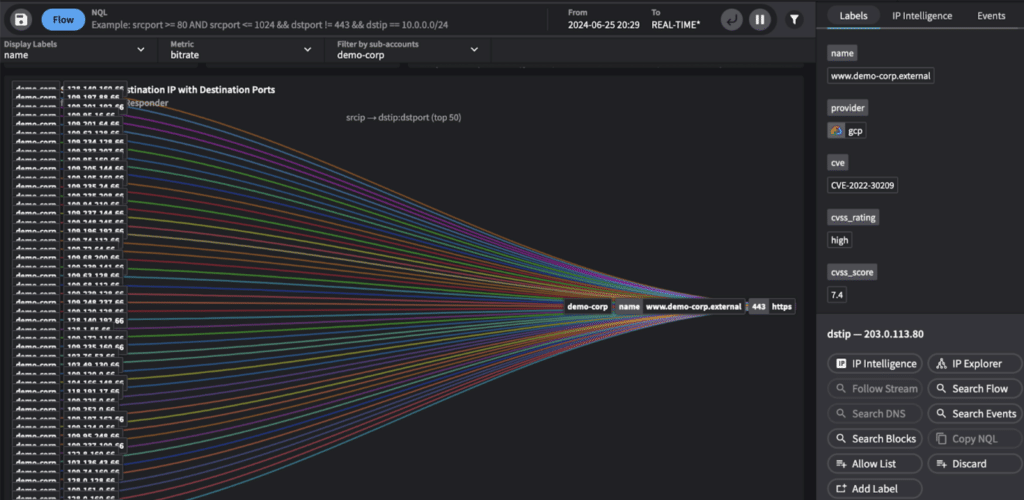

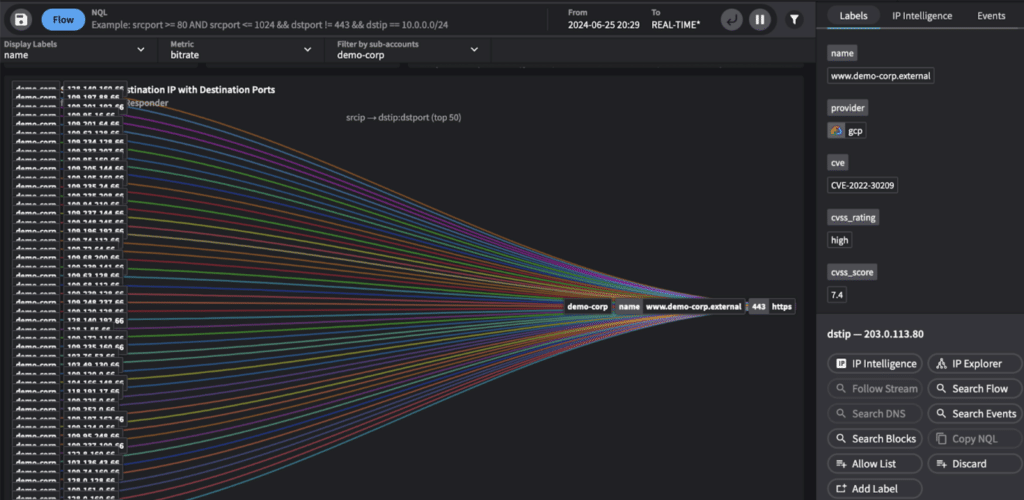

Enter Netography’s Fusion platform. At a foundational level, the Netography Fusion platform presents a holistic and reliable picture of all the network communication related to your applications in your multi-cloud and on-prem environments that your cloud, network, security operations, and application teams can use.

The Fusion platform delivers real-time observability by aggregating and normalizing metadata collected from your multi-cloud and on-prem network. The metadata consists of cloud flow logs from all five major cloud providers (Amazon Web Services, Microsoft Azure, Google Cloud, IBM Cloud, and Oracle Cloud) and flow data (NetFlow, sFlow, and IPFIX) from routers, switches, and other physical or virtual devices.
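To make "aggregating and normalizing" concrete, here is a minimal Python sketch of mapping two kinds of flow metadata onto one shared record shape. It is an illustration only, not how Fusion is implemented. The `Flow` schema and the key names in `from_netflow_record` are assumptions made for this example. The AWS field order follows the documented default (version 2) VPC Flow Logs format.

```python
from dataclasses import dataclass
from typing import Optional

# Field order of the AWS VPC Flow Logs default (version 2) format.
AWS_V2_FIELDS = (
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
)


@dataclass(frozen=True)
class Flow:
    """Hypothetical common schema shared by every source."""
    source: str      # where the record came from, e.g. "aws" or "netflow"
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int    # IANA protocol number, e.g. 6 = TCP, 17 = UDP
    byte_count: int


def from_aws_vpc_line(line: str) -> Optional[Flow]:
    """Parse one space-separated default-format VPC flow log line.

    Records whose log status is not OK (NODATA, SKIPDATA) carry "-"
    placeholders instead of addresses and ports, so they are skipped.
    """
    rec = dict(zip(AWS_V2_FIELDS, line.split()))
    if rec.get("log_status") != "OK":
        return None
    return Flow(
        source="aws",
        src_ip=rec["srcaddr"],
        dst_ip=rec["dstaddr"],
        src_port=int(rec["srcport"]),
        dst_port=int(rec["dstport"]),
        protocol=int(rec["protocol"]),
        byte_count=int(rec["bytes"]),
    )


def from_netflow_record(rec: dict) -> Flow:
    """Normalize an already-decoded NetFlow/IPFIX record.

    The key names below are assumptions for this sketch; real
    collectors and exporters name these fields differently.
    """
    return Flow(
        source="netflow",
        src_ip=rec["src_addr"],
        dst_ip=rec["dst_addr"],
        src_port=int(rec["src_port"]),
        dst_port=int(rec["dst_port"]),
        protocol=int(rec["proto"]),
        byte_count=int(rec["octets"]),
    )
```

Once every source lands in the same shape, a flow between clouds and a flow inside the data center can be queried and compared with the same logic, which is what closes the gaps left by per-environment tools.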

Fusion then enriches this metadata with context contained in applications and services in your existing tech stack, including asset management, CMDB, EPP, CSPM, and vulnerability management systems. The context can include dozens of attributes, including owners, tags, risks, asset types, and users.

Context transforms the metadata in your network from a table of IP addresses, ports, and protocols into context-rich descriptions of the activities of your applications and devices.
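As a rough illustration of that transformation, the Python sketch below joins a bare flow record against a small asset inventory and renders a readable description. Everything here is hypothetical: the `ASSETS` table stands in for whatever your CMDB or asset management system exports, and the field names are assumptions, not a vendor schema.

```python
# Hypothetical inventory keyed by IP address, standing in for an
# export from a CMDB or asset management system.
ASSETS = {
    "10.0.1.15": {"name": "billing-api", "owner": "payments-team", "type": "vm"},
    "10.0.2.40": {"name": "billing-db", "owner": "payments-team", "type": "database"},
}

# A few (protocol number, destination port) pairs mapped to service names.
KNOWN_SERVICES = {
    (6, 443): "https",
    (6, 5432): "postgresql",
}

UNKNOWN = {"name": "unknown", "owner": "unknown", "type": "unknown"}


def enrich(flow: dict) -> dict:
    """Return a copy of the flow with asset and service context attached."""
    src = ASSETS.get(flow["src_ip"], UNKNOWN)
    dst = ASSETS.get(flow["dst_ip"], UNKNOWN)
    # Fall back to "protocol/port" when the service is not recognized.
    service = KNOWN_SERVICES.get(
        (flow["protocol"], flow["dst_port"]),
        f"{flow['protocol']}/{flow['dst_port']}",
    )
    return {
        **flow,
        "src_name": src["name"],
        "src_owner": src["owner"],
        "dst_name": dst["name"],
        "dst_owner": dst["owner"],
        "service": service,
    }


def describe(enriched: dict) -> str:
    """Render an enriched flow as a one-line, human-readable summary."""
    return (
        f"{enriched['src_name']} ({enriched['src_owner']}) -> "
        f"{enriched['dst_name']} ({enriched['dst_owner']}) "
        f"via {enriched['service']}"
    )


raw = {"src_ip": "10.0.1.15", "dst_ip": "10.0.2.40", "protocol": 6, "dst_port": 5432}
print(describe(enrich(raw)))
# billing-api (payments-team) -> billing-db (payments-team) via postgresql
```

The raw tuple `10.0.1.15 -> 10.0.2.40, TCP 5432` means little to an application owner, while the enriched line names the systems and the team responsible for them.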

In our self-service SaaS model, application owners can log in, see the actual application traffic, both inbound and outbound, and use that information to understand and define the communication flows required for their application to work.

Application owners get real-time awareness of actual application traffic (both inbound and outbound) enriched with context from your tech stack

Your CloudOps, NetOps, and SecOps teams will all see the same view and can use that information to easily map behaviors and communication dependencies for critical workloads. This is the critical knowledge that lets them propose, test, and implement new segmentation policies where necessary.
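One way to picture how observed traffic becomes a proposed policy is to collapse enriched flows into distinct dependencies and treat each as a candidate allow rule for review. The Python sketch below assumes flows already carry hypothetical `src_app` and `dst_app` labels. The `min_count` threshold is likewise an assumption for illustration, not a recommended value.

```python
from collections import Counter


def candidate_rules(flows, min_count=1):
    """Collapse observed flows into candidate allow rules.

    Each rule is a (src_app, dst_app, protocol, dst_port) tuple that was
    seen at least `min_count` times. Source ports are ignored because
    they are typically ephemeral and not useful in a policy.
    """
    counts = Counter(
        (f["src_app"], f["dst_app"], f["protocol"], f["dst_port"])
        for f in flows
    )
    return sorted(rule for rule, seen in counts.items() if seen >= min_count)


observed = [
    {"src_app": "web", "dst_app": "api", "protocol": 6, "dst_port": 8443},
    {"src_app": "web", "dst_app": "api", "protocol": 6, "dst_port": 8443},
    {"src_app": "api", "dst_app": "db", "protocol": 6, "dst_port": 5432},
]

for rule in candidate_rules(observed):
    print(rule)
# ('api', 'db', 6, 5432)
# ('web', 'api', 6, 8443)
```

The output is a starting point for discussion with the application owner rather than a finished policy: rarely seen flows (monthly batch jobs, failover paths) may not appear in a short observation window, so a rule set derived this way should be tested before it is enforced.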

Fusion’s continuous monitoring helps your security teams see connections that are trying to circumvent access controls and policy rules so they can investigate and take action. Post-migration, your NetOps and CloudOps teams can use Fusion’s real-time observability for troubleshooting application networking issues.
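The idea of spotting traffic that falls outside policy can be sketched as a comparison between observed flows and an allow-list. The Python example below uses only the standard library `ipaddress` module. The `POLICY` rule format is a made-up structure for this illustration and does not represent Fusion's detection logic or any firewall's rule syntax.

```python
import ipaddress

# Hypothetical allow-list: each rule permits one protocol/port
# between a source network and a destination network.
POLICY = [
    {"src": "10.0.1.0/24", "dst": "10.0.2.0/24", "protocol": 6, "dst_port": 5432},
    {"src": "10.0.0.0/24", "dst": "10.0.1.0/24", "protocol": 6, "dst_port": 8443},
]


def is_allowed(flow: dict, policy: list) -> bool:
    """Return True if any rule in the policy permits this flow."""
    src = ipaddress.ip_address(flow["src_ip"])
    dst = ipaddress.ip_address(flow["dst_ip"])
    for rule in policy:
        if (
            src in ipaddress.ip_network(rule["src"])
            and dst in ipaddress.ip_network(rule["dst"])
            and flow["protocol"] == rule["protocol"]
            and flow["dst_port"] == rule["dst_port"]
        ):
            return True
    return False


def violations(flows: list, policy: list) -> list:
    """Return the observed flows that no rule in the policy permits."""
    return [f for f in flows if not is_allowed(f, policy)]


observed = [
    # Application tier to database on the expected port: permitted.
    {"src_ip": "10.0.1.15", "dst_ip": "10.0.2.40", "protocol": 6, "dst_port": 5432},
    # Web tier reaching the database directly over SSH: not permitted.
    {"src_ip": "10.0.0.9", "dst_ip": "10.0.2.40", "protocol": 6, "dst_port": 22},
]

for flow in violations(observed, POLICY):
    print("investigate:", flow["src_ip"], "->", flow["dst_ip"], "port", flow["dst_port"])
# investigate: 10.0.0.9 -> 10.0.2.40 port 22
```

A flagged flow is not necessarily malicious. It may be a legitimate dependency the policy missed, which is why the result feeds an investigation rather than an automatic block.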

The net result is more locked-down access controls to your applications, which prevents lateral movement and reduces your risk exposure. Equally important, there are no more blank stares from your application owners. It’s that Eureka moment when you discover how applications actually operate versus how they should operate so you can move forward on your segmentation or migration project with confidence. You may not have discovered 24-karat gold, but it sure feels like it.

The post The Eureka Moment: Discovering Application Traffic Observability appeared first on Netography.

*** This is a Security Bloggers Network syndicated blog from Netography authored by David Meltzer. Read the original post at: https://netography.com/the-eureka-moment-discovering-application-traffic-observability/
