2024-7-1 17:0:17 Author: hackernoon.com

Authors:

(1) Hamed Alimohammadzadeh, University of Southern California, Los Angeles, USA;

(2) Rohit Bernard, University of Southern California, Los Angeles, USA;

(3) Yang Chen, University of Southern California, Los Angeles, USA;

(4) Trung Phan, University of Southern California, Los Angeles, USA;

(5) Prashant Singh, University of Southern California, Los Angeles, USA;

(6) Shuqin Zhu, University of Southern California, Los Angeles, USA;

(7) Heather Culbertson, University of Southern California, Los Angeles, USA;

(8) Shahram Ghandeharizadeh, University of Southern California, Los Angeles, USA.

A DV raises many interesting multimedia systems challenges, and their characteristics may vary across applications. This section presents two of them.

4.1 FLS Localization

FLSs must localize in order to illuminate a shape and implement haptic interactions. The modular design of DV will enable an experimenter to evaluate alternative localization techniques. These techniques may be implemented by hardware components attached to the DV display, to an FLS, or to both. Candidate components include infrared cameras, RGB cameras, ultra-wideband (UWB) transceivers, and retroreflective markers, used alone or in a hybrid combination. Below, we describe four techniques in turn.
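
Because the localization technique is meant to be pluggable, one way to picture this modularity is a common interface that every technique implements, plus a hybrid that fuses their outputs. The sketch below is a minimal illustration under stated assumptions, not the DV code base: `Localizer`, `Estimate`, `HybridLocalizer`, and `FixedLocalizer` are hypothetical names, and the hybrid assumes independent estimates with known variances so that it can use inverse-variance weighting, a standard fusion rule swapped in here for illustration.

```python
from dataclasses import dataclass
from typing import Protocol, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Estimate:
    """Hypothetical position estimate in meters, with an isotropic variance in m^2."""
    position: Vec3
    variance: float


class Localizer(Protocol):
    """Hypothetical plug-in interface that any localization technique could implement."""
    def estimate(self, fls_id: int) -> Estimate: ...


def fuse(estimates: Sequence[Estimate]) -> Estimate:
    """Inverse-variance weighted average, assuming the estimates are independent."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    position = tuple(
        sum(w * e.position[axis] for w, e in zip(weights, estimates)) / total
        for axis in range(3)
    )
    return Estimate(position=position, variance=1.0 / total)


class HybridLocalizer:
    """Queries every member technique and fuses the results."""
    def __init__(self, members: Sequence[Localizer]) -> None:
        self.members = list(members)

    def estimate(self, fls_id: int) -> Estimate:
        return fuse([m.estimate(fls_id) for m in self.members])


class FixedLocalizer:
    """Stand-in technique that always reports the same estimate (illustration only)."""
    def __init__(self, est: Estimate) -> None:
        self.est = est

    def estimate(self, fls_id: int) -> Estimate:
        return self.est


# Illustrative numbers only: a precise camera-style estimate and a coarser UWB-style one.
hybrid = HybridLocalizer([
    FixedLocalizer(Estimate((1.0, 0.0, 0.0), 1e-4)),  # ~1 cm standard deviation
    FixedLocalizer(Estimate((1.1, 0.0, 0.0), 1e-2)),  # ~10 cm standard deviation
])
fused = hybrid.estimate(fls_id=7)
```

The fused x coordinate lands near 1.001 m, pulled strongly toward the lower-variance estimate; an experimenter could add or remove member techniques without changing the caller.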

First, one may install infrared cameras on the DV display and mount retroreflective markers on the FLSs. Software hosted on the DV-Hub will process images captured by the cameras to compute the location of each FLS. This centralized technique is inspired by Vicon, which provides high accuracy [35, 54, 57]. It requires the FLSs to maintain a line of sight with the cameras. Moreover, it may be difficult, if not impossible, to form unique markers for more than tens of small drones measuring tens of millimeters diagonally [54].
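
To make the centralized pipeline concrete: once two calibrated cameras each see the same marker, each detection can be back-projected into a 3D ray, and the marker lies near the point where the rays meet. The sketch below uses the textbook two-ray midpoint method as a stand-in; it is not Vicon's solver, which fuses many cameras. It assumes camera calibration and marker-to-FLS association are already done, and `triangulate_midpoint` is a hypothetical helper name.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2: camera centers in world coordinates (assumed known from calibration).
    d1, d2: ray directions through the detected marker (need not be unit length).
    """
    w0 = (c1[0] - c2[0], c1[1] - c2[1], c1[2] - c2[2])
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = tuple(c1[i] + s * d1[i] for i in range(3))
    p2 = tuple(c2[i] + t * d2[i] for i in range(3))
    return tuple((p1[i] + p2[i]) / 2 for i in range(3))


# Example: two cameras 5 m apart both observing a marker at (1, 2, 3).
marker = triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 3.0),
                              (5.0, 0.0, 0.0), (-4.0, 2.0, 3.0))
```

With noisy detections the two rays rarely intersect exactly, which is why the midpoint of their closest approach is returned rather than an exact intersection.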

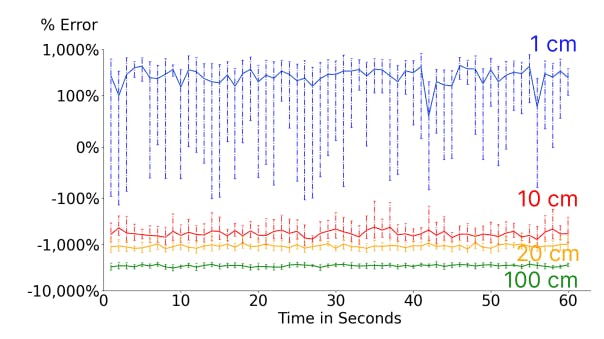

Second, one may install a set of sensors at known positions [37], e.g., on the side beams, back panel, floor, or ceiling of the DV display. These sensors serve as anchors. A sensor mounted on each FLS serves as a tag. (This is the LOC in Figure 4.) Both centralized and decentralized algorithms can localize the FLSs using this setup. In this approach, the positions of the anchors should be fixed and known to the individual FLSs. The approach also requires accurate time synchronization among the anchors and, depending on the algorithm, between anchors and tags as well. We have experimented with UWB cards [20] as candidate sensors. In our experiments [52], we observed a high margin of error when measuring small distances such as 1 cm; see Figure 7. This is consistent with the 5-15 cm margin of error reported for these cards [14, 20, 37].
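
The anchor/tag setup reduces to a multilateration problem: given the fixed anchor positions and the measured anchor-to-tag ranges, solve for the tag's position. The sketch below is a minimal centralized version under stated assumptions: ranges are already measured (for example, by two-way ranging), at least four non-coplanar anchors are in range, and the nonlinear range equations are linearized by subtracting the first one and then solved by least squares. A time-difference-of-arrival variant, which is where anchor synchronization matters, would work with range differences instead. `multilaterate` and the anchor layout are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate anchor geometry (e.g., coplanar anchors)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for k in range(col, 4):
                a[r][k] -= f * a[col][k]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):  # back substitution
        x[r] = (a[r][3] - sum(a[r][k] * x[k] for k in range(r + 1, 3))) / a[r][r]
    return x


def multilaterate(anchors: Sequence[Vec3], ranges: Sequence[float]) -> Vec3:
    """Least-squares tag position from anchor positions and measured ranges."""
    if len(anchors) < 4 or len(anchors) != len(ranges):
        raise ValueError("need >= 4 anchors with one range each")
    a0, r0 = anchors[0], ranges[0]
    rows, rhs = [], []
    # Subtracting |x - a0|^2 = r0^2 from |x - ai|^2 = ri^2 gives a linear equation in x.
    for ai, ri in zip(anchors[1:], ranges[1:]):
        rows.append([2.0 * (ai[k] - a0[k]) for k in range(3)])
        rhs.append(sum(c * c for c in ai) - sum(c * c for c in a0) - ri * ri + r0 * r0)
    # Normal equations: (A^T A) x = A^T b
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * b for row, b in zip(rows, rhs)) for i in range(3)]
    x = _solve3(ata, atb)
    return (x[0], x[1], x[2])


# Hypothetical anchors on the corners of a 2 m display frame; ranges are exact here.
anchors = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 2.0), (2.0, 2.0, 2.0)]
true_tag = (0.5, 1.2, 0.8)
ranges = [math.dist(a, true_tag) for a in anchors]
estimate = multilaterate(anchors, ranges)
```

With exact ranges the estimate recovers the tag; feeding in ranges perturbed by a few centimeters shows how range error propagates into position error for a given anchor geometry.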

![Figure 7: Percentage error (log scale) in measuring 1 cm distances per second for 60 seconds using two UWB DW1000 [20] cards calibrated at 1, 10, 20, and 100 cm.](https://hackernoon.imgix.net/images/fWZa4tUiBGemnqQfBGgCPf9594N2-7f83zdk.png?auto=format&fit=max&w=1920)
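
The log scale in Figure 7 follows from simple arithmetic. Assuming percentage error is defined in the usual way (the text does not spell out the definition), an absolute error in the 5-15 cm range reported for these cards, applied to a 1 cm distance, yields errors of hundreds to over a thousand percent:

```latex
\text{error}_{\%} = 100 \cdot \frac{\lvert \hat{d} - d \rvert}{d},
\qquad d = 1\,\text{cm},\ \lvert \hat{d} - d \rvert \in [5, 15]\,\text{cm}
\;\Longrightarrow\; \text{error}_{\%} \in [500\%, 1500\%]
```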

Third, the CPU of each FLS may host a vision-based algorithm that processes the patterns superimposed on the floor (and ceiling) tiles to compute its position [10, 60, 78]. A limitation of this technique is that some FLSs may occlude the line of sight between other FLSs and the patterns. With a fixed-size DV display, it may be possible to develop algorithms that address this limitation.
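
As a rough picture of what such an on-board algorithm might compute, the sketch below assumes each floor tile carries a decodable pattern identifying its (row, col) index, and that the FLS has a level, downward-facing pinhole camera whose image axes are aligned with the world x/y axes and a known altitude. All of these, along with `tile_center`, `locate_from_tile`, and the numeric parameters, are hypothetical; pattern decoding itself is left out.

```python
from typing import Tuple


def tile_center(row: int, col: int, tile_size: float) -> Tuple[float, float]:
    """World (x, y) of a tile's center; tile (0, 0) starts at the world origin."""
    return ((col + 0.5) * tile_size, (row + 0.5) * tile_size)


def locate_from_tile(row: int, col: int, u: float, v: float, altitude: float,
                     focal_px: float, cx: float, cy: float,
                     tile_size: float) -> Tuple[float, float, float]:
    """Estimate the FLS position from one decoded floor tile.

    Assumes a level, downward-facing pinhole camera whose image x/y axes are
    aligned with world x/y, a known altitude, and that (row, col) was decoded
    from the tile's pattern. (u, v) is the tile center in pixels.
    """
    tx, ty = tile_center(row, col, tile_size)
    # Pinhole geometry: a pixel offset of (u - cx) maps to (u - cx) * altitude / focal_px
    # meters on the floor, measured from the point directly below the camera.
    dx = (u - cx) * altitude / focal_px
    dy = (v - cy) * altitude / focal_px
    return (tx - dx, ty - dy, altitude)


# Example: tile (row 2, col 3) of 10 cm tiles, seen 100 px right of the image center
# by a camera 0.5 m above the floor with a 500 px focal length.
pos = locate_from_tile(2, 3, u=420.0, v=240.0, altitude=0.5,
                       focal_px=500.0, cx=320.0, cy=240.0, tile_size=0.10)
```

If other FLSs hide every tile in the camera's view, there is nothing to decode, which is exactly the occlusion limitation described above.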

Fourth, we envision FLSs with mounted sensors and antennas that enable an FLS to compute its distance and angle relative to an anchor FLS. A localizing FLS will compare this information with the desired distance and angle in the ground truth and adjust its position to approximate the ground truth more accurately. This decentralized localization algorithm will require line of sight between a localizing FLS and an anchor FLS. It may implement nature-inspired swarming protocols [56] to render animated shapes.
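
The compare-and-adjust step can be sketched as a simple proportional controller. The sketch assumes the anchor and the localizing FLS share a world-aligned frame for angles, that the sensors report (distance, azimuth, elevation) from the anchor to the localizing FLS in radians, and that the gain of 0.5 is a hypothetical tuning choice. It is not one of the swarming protocols cited above, and the function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Spherical = Tuple[float, float, float]  # (distance, azimuth, elevation), radians


def to_vector(s: Spherical) -> Vec3:
    """Offset from the anchor FLS to the localizing FLS in Cartesian coordinates."""
    distance, azimuth, elevation = s
    horizontal = distance * math.cos(elevation)
    return (horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            distance * math.sin(elevation))


def to_spherical(v: Sequence[float]) -> Spherical:
    """Inverse of to_vector; stands in for the on-board distance/angle sensors."""
    x, y, z = v
    return (math.sqrt(x * x + y * y + z * z),
            math.atan2(y, x),
            math.atan2(z, math.hypot(x, y)))


def correction(measured: Spherical, desired: Spherical, gain: float = 0.5) -> Vec3:
    """Proportional step toward the desired offset (gain is a hypothetical tuning knob)."""
    m, d = to_vector(measured), to_vector(desired)
    return (gain * (d[0] - m[0]), gain * (d[1] - m[1]), gain * (d[2] - m[2]))


# Simulated loop: the anchor FLS sits at the origin; the localizing FLS starts off target.
target = (0.20, 0.10, 0.00)
desired = to_spherical(target)  # desired distance and angle from the ground truth
position = [0.30, -0.10, 0.05]
for _ in range(30):
    step = correction(to_spherical(position), desired)
    position = [p + s for p, s in zip(position, step)]
```

With a gain of 0.5 the remaining error halves every iteration in this idealized, noise-free simulation; real sensing noise and flight dynamics would call for a more careful controller.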

4.2 3D Acoustics

DV audio is a multifaceted topic. It includes both suppressing unwanted noise from the FLSs and generating acoustics consistent with an application’s specifications. Consider each in turn. First, the unwanted noise is due to the propulsion system of an FLS, which consists of engines and propellers [61]. This noise increases with higher propeller speeds and larger propeller blades [43, 64].
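
Because a DV flies many FLSs at once, what a user hears is the combination of all of their propulsion noise. For uncorrelated sources, sound levels add on a power basis rather than arithmetically, so N identical FLSs are 10 log10(N) dB louder than one. The helper below illustrates that standard rule; `combined_spl` is a hypothetical name, and the per-FLS levels in the example are made up, not measurements from this work.

```python
import math
from typing import Iterable


def combined_spl(levels_db: Iterable[float]) -> float:
    """Total sound pressure level (dB) of uncorrelated sources at one listener position.

    Levels add on a power basis: L_total = 10 * log10(sum(10 ** (L_i / 10))).
    """
    total_power = sum(10 ** (level / 10) for level in levels_db)
    if total_power == 0:
        raise ValueError("need at least one source")
    return 10 * math.log10(total_power)


# Hypothetical levels: ten FLSs, each contributing 50 dB at the listener.
swarm_level = combined_spl([50.0] * 10)  # 10 * log10(10) = 10 dB above a single FLS
```

Doubling the number of equally loud FLSs adds only about 3 dB, whereas a louder individual FLS dominates the total quickly.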

Second, an application may want to render 3D acoustics given the 3D illuminations [25]. For example, consider an illuminated scene where a character placed at one corner of the display shouts at another character placed at the farthest corner of the display. The application may want the audio to be louder for users in close proximity to the shouting character.
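
One simple model for making the audio louder near the shouting character is free-field point-source attenuation, in which the level falls by 20 log10(r) dB relative to a 1 m reference, about 6 dB per doubling of distance. The sketch assumes that model, a hypothetical 80 dB reference level, and a hypothetical 10 cm minimum distance that avoids a singularity at the source; a real renderer would also account for room effects and speaker placement.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def level_at(listener: Vec3, source: Vec3, level_at_1m_db: float,
             min_distance: float = 0.1) -> float:
    """Free-field point-source level (dB) at the listener: L(r) = L(1 m) - 20*log10(r)."""
    r = max(math.dist(listener, source), min_distance)  # clamp: avoid log10(0) at the source
    return level_at_1m_db - 20 * math.log10(r)


# Hypothetical scene: the shouting character at one corner, two users at 1 m and 2 m.
character = (0.0, 0.0, 0.0)
users: Dict[str, Vec3] = {"near": (1.0, 0.0, 0.0), "far": (2.0, 0.0, 0.0)}
levels = {name: level_at(pos, character, level_at_1m_db=80.0) for name, pos in users.items()}
```

The user twice as far from the character hears it roughly 6 dB quieter.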

We will investigate a host of techniques to address these two challenges. For undesirable noise, we will investigate sound suppression and cancellation techniques. For 3D acoustics, we will investigate the use of speakers built into the DV display and mounted on a subset of the FLSs. We will also consider out-of-the-box solutions. One such solution centers on a pair of noise-canceling headphones designed to minimize the ambient drone noise that could distract from the displayed content, helping users stay focused on the audio-visual presentation. Additionally, 3D audio technology would allow the sound to adapt dynamically to the displayed content: as the display changes, the audio would adjust in real time, mirroring the spatial positioning and movement of the drones.
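
When some loudspeakers are mounted on FLSs, their positions change as the drones move, so speaker gains must be recomputed continuously. One established way to spread a virtual sound source over arbitrarily placed speakers is distance-based amplitude panning (DBAP), swapped in here as an illustration rather than as the authors' chosen method. The sketch follows the usual DBAP formulation: each gain is proportional to 1/d^a, where the exponent a comes from a rolloff in dB per doubling of distance, the gains are normalized to constant total power, and a small spatial-blur term keeps distances nonzero. The 6 dB rolloff, 5 cm blur, and `dbap_gains` name are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def dbap_gains(source: Vec3, speakers: Sequence[Vec3],
               rolloff_db: float = 6.0, blur: float = 0.05) -> List[float]:
    """Distance-based amplitude panning gains for one virtual source.

    Gains are proportional to 1 / d_i**a and normalized so that sum(g_i**2) == 1.
    Call again whenever the source or any FLS-mounted speaker moves.
    """
    a = rolloff_db / (20 * math.log10(2))  # rolloff in dB per doubling -> exponent
    dists = [math.sqrt(math.dist(source, s) ** 2 + blur ** 2) for s in speakers]
    raw = [1.0 / d ** a for d in dists]
    k = 1.0 / math.sqrt(sum(g * g for g in raw))  # constant-power normalization
    return [k * g for g in raw]


# Example: four speakers (display- or FLS-mounted) at the corners of a 1 m square,
# with the virtual source close to the first one.
speakers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
gains = dbap_gains((0.1, 0.1, 0.0), speakers)
```

In a real-time loop, this would run per audio frame using the latest FLS positions from the localization layer, so the sound follows the drones as the displayed content changes.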
