2024-7-9 07:41:30 Author: hackernoon.com

LM Studio is a popular GUI application that allows users with basic computer knowledge to easily download, install, and run large language models (LLMs) locally on their Linux machines.

Popular LLMs such as Llama 3, Phi-3, Falcon, Mistral, StarCoder, Gemma, and many more can be easily installed, set up, and accessed through the LM Studio chat interface.

This way, you aren't tied to proprietary cloud-based AI like OpenAI's ChatGPT and can run your own LLM privately and offline, without paying anything extra for the full feature set.

In this article, I'll show you how to install LM Studio on Linux, set it up, and use the GPT-3 model.

System Requirements

Running LLMs locally on a PC requires meeting certain minimum requirements to ensure a decent experience. Make sure you have at least:

- An NVIDIA or AMD graphics card with at least 8 GB of VRAM.

- 20 GB of free storage (SSD would be a better option).

- 16 GB of DDR4 RAM.
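
If you'd rather check these minimums from the terminal, here is a rough sketch. The function names and thresholds are my own illustration, not part of LM Studio. The thresholds sit slightly below the nominal sizes because the kernel and GPU driver usually report a little less than the marketed capacity, and the VRAM check assumes an NVIDIA card with `nvidia-smi` installed (AMD users will need their vendor's tools instead).

```shell
#!/usr/bin/env bash
# Rough pre-flight check against the minimums listed above.
# Thresholds and function names are illustrative assumptions.
MIN_RAM_MIB=15000    # roughly 16 GB of RAM
MIN_DISK_MIB=20480   # 20 GB of free storage
MIN_VRAM_MIB=8000    # roughly 8 GB of VRAM

# Total RAM in MiB (MemTotal in /proc/meminfo is reported in kB).
total_ram_mib() {
    awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo
}

# Free space in MiB on the filesystem that holds the given path.
free_disk_mib() {
    df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024) }'
}

# Total memory in MiB of the first NVIDIA GPU.
nvidia_vram_mib() {
    nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | head -n 1
}

# Print OK or LOW depending on whether "have" reaches "need".
check() {
    local label=$1 have=$2 need=$3
    if [ "$have" -ge "$need" ] 2>/dev/null; then
        echo "OK: $label $have MiB (minimum $need)"
    else
        echo "LOW: $label $have MiB (minimum $need)"
    fi
}

check "RAM" "$(total_ram_mib)" "$MIN_RAM_MIB"
check "free disk" "$(free_disk_mib "${HOME:-/}")" "$MIN_DISK_MIB"
if command -v nvidia-smi >/dev/null 2>&1; then
    check "VRAM" "$(nvidia_vram_mib)" "$MIN_VRAM_MIB"
else
    echo "SKIP: nvidia-smi not found; check VRAM with your GPU vendor's tools"
fi
```

A "LOW" line doesn't mean LM Studio will refuse to run, only that you should start with smaller models.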

Installing LM Studio on Linux

First, visit the official LM Studio website, and download the LM Studio AppImage by clicking "Download LM Studio for Linux."

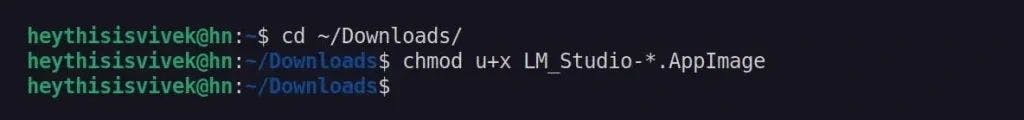

Once the download is complete, open your terminal, navigate to the downloads directory, and assign executable permissions to the LM Studio AppImage.

$ cd ~/Downloads/

$ chmod u+x LM_Studio-*.AppImage

Next, unpack the contents of the AppImage using the command below, which will create a new directory named "squashfs-root."

$ ./LM_Studio-*.AppImage --appimage-extract
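
One caveat with the `LM_Studio-*.AppImage` wildcard: if your downloads directory holds more than one version, the pattern matches all of them. A small sketch for picking the highest version first is shown below. The `newest_appimage` helper is a hypothetical name of mine, and it relies on the `-V` (version sort) option of GNU `sort`.

```shell
#!/usr/bin/env bash
# Print the highest-versioned LM Studio AppImage found in a directory.
# newest_appimage is an illustrative helper, not an LM Studio command.
newest_appimage() {
    local dir=${1:-.}
    # sort -V compares embedded version numbers numerically.
    find "$dir" -maxdepth 1 -name 'LM_Studio-*.AppImage' | sort -V | tail -n 1
}

# Example usage (uncomment to run):
# appimage=$(newest_appimage ~/Downloads)
# chmod u+x "$appimage"
# "$appimage" --appimage-extract
```

If only one AppImage is present, the commands above with the plain wildcard work just as well.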

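Now, navigate to the extracted "squashfs-root" directory and assign appropriate permissions and ownership to the "chrome-sandbox" file. LM Studio is built on Electron, and its Chromium sandbox helper only works when it is owned by root and has the setuid bit set.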

$ cd squashfs-root/

$ sudo chown root:root chrome-sandbox

$ sudo chmod 4755 chrome-sandbox
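
To confirm that both commands took effect, you can inspect the file's owner and mode. The sketch below assumes GNU `stat` (standard on most Linux distributions), and the two helper names are my own.

```shell
#!/usr/bin/env bash
# Print "owner:group mode" for a file, for example "root:root 4755".
# Uses the -c format option of GNU stat.
owner_and_mode() {
    stat -c '%U:%G %a' "$1"
}

# Succeed only if the file is owned by root:root with mode 4755.
sandbox_ready() {
    [ "$(owner_and_mode "$1")" = "root:root 4755" ]
}

# Run from inside squashfs-root; does nothing if the file is absent.
if [ -e chrome-sandbox ]; then
    if sandbox_ready chrome-sandbox; then
        echo "chrome-sandbox is set up correctly"
    else
        echo "unexpected owner or mode: $(owner_and_mode chrome-sandbox)"
    fi
fi
```

If the check reports something other than `root:root 4755`, rerun the `chown` and `chmod` commands above.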

Finally, run the LM Studio application within the same directory using this command:

$ ./lm-studio

That's it! You can now search, download, and access your favorite open-source LLMs with LM Studio. For instructions on setting up and accessing GPT-3, see the next section.

Accessing the GPT-3 Model in LM Studio

To access the GPT-3 model, you first need to download it. To do so, click the search icon in the left panel, type "GPT-3" as the model name, and select the appropriate model size based on your system specifications.

The download process will begin, and you can track the progress by clicking on the bottom panel.

Once the download is complete, click the chat icon on the left panel, select the GPT-3 model from the top dropdown, and start chatting with the GPT-3 model by typing your message in the bottom input field.

That's it! You can now put any question to the GPT-3 model locally. The response time will vary depending on your system specifications. For example, on my machine, which has a decent GPU with 8 GB of VRAM and 16 GB of DDR4 RAM, it took around 45 seconds to generate 150–200 words.
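
To put those figures in perspective, you can convert them into a words-per-second rate and compare it with your own runs. This is only a back-of-the-envelope sketch, and `words_per_second` is an illustrative helper name.

```shell
#!/usr/bin/env bash
# Words generated per second, rounded to one decimal place.
words_per_second() {
    awk -v words="$1" -v seconds="$2" 'BEGIN { printf "%.1f\n", words / seconds }'
}

words_per_second 150 45   # prints 3.3 (lower end of the range)
words_per_second 200 45   # prints 4.4 (upper end of the range)
```

So the example system manages roughly 3 to 4 words per second. Time one of your own prompts the same way to see how your hardware compares.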

If the performance is too poor in your case, you can try a different LLM. I would recommend Meta Llama 3, starting with the smallest available size of the model.

Wrap Up

In this article, you've learned how to use LM Studio to download and run LLMs like GPT-3 and Llama 3 on your local PC. Now, before you transition from cloud services to using your PC for LLMs, keep in mind that running models locally puts a heavy load on your GPU, which consumes electricity and can affect the GPU's lifespan.

So, if you're an AI enthusiast and privacy advocate, this trade-off probably won't bother you, but if you're concerned about electricity bills or have a less powerful PC, cloud-based AI may unfortunately be your only option.
