2024-7-18 02:0:15 Author: hackernoon.com

Authors:

(1) Luyuan Peng, Acoustic Research Laboratory, National University of Singapore;

(2) Hari Vishnu, Acoustic Research Laboratory, National University of Singapore;

(3) Mandar Chitre, Acoustic Research Laboratory, National University of Singapore;

(4) Yuen Min Too, Acoustic Research Laboratory, National University of Singapore;

(5) Bharath Kalyan, Acoustic Research Laboratory, National University of Singapore;

(6) Rajat Mishra, Acoustic Research Laboratory, National University of Singapore.

IV. EXPERIMENTS

We test three model configurations on both the simulator and tank datasets. First, we implement a pose regression model based on GoogLeNet [7] as our baseline model. Secondly, we implement a model using ResNet-50 [9] to evaluate the improvement possible by use of a deeper network. ResNet allows us to have deeper networks with more free parameters, and tries to avoid the problem of overfitting by having residual connections between layers [9]. Thirdly, we implement a pose

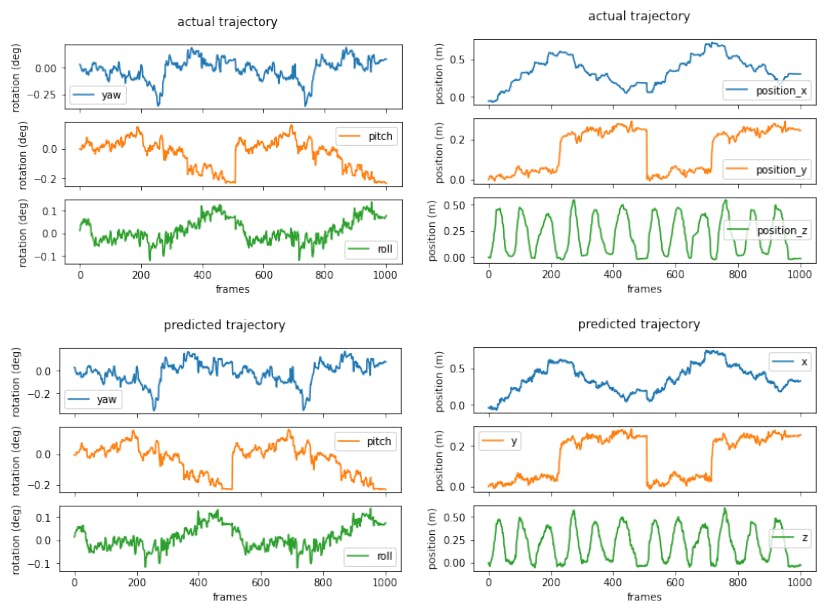

regression model using an LSTM as an intermediate layer. The results indicate that all three configurations can perform well in both simulated and tank datasets as shown in Table I. The errors are minimal and of comparable magnitude to the noise in the pose recorded by the camera sensors. Fig. 4 shows that the trajectories predicted by the models are very close to the actual trajectories in terms of both position and orientation. With the simulator dataset, which is free of noise, turbidity, light distortion, and other challenges that may be observed in real underwater settings, the ResNet-50 and LSTM-based model performed better than the baseline model. On the other hand, with the tank datasets with some distortions typical of underwater scenarios, ResNet-50 performed worse than the baseline model, whereas the LSTM model did better than baseline with dataset #1 (which mostly featured translation but almost no rotation). This suggests the ResNet-50 architecture may be slightly overfitting despite the regularization, so it is not able to provide better performance in the tank dataset. We also note that data augmentation significantly improves the model performance, so in cases where there is no significant rotation, the use of data from both cameras can be used to bolster performance. Overall, these methods are robust for application in real underwater environments, and show promise for use in open water settings, where they will be tested next.
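This section reports position and orientation errors but does not restate how they are computed. The following is a minimal sketch of one common convention in the pose regression literature (Euclidean distance for position, rotation angle between unit quaternions for orientation). The function names, the `(w, x, y, z)` quaternion ordering, and the use of the median as a summary statistic are assumptions made for illustration, not details confirmed by the text above.

```python
import math
from statistics import median


def position_error(p_true, p_pred):
    """Euclidean distance between two 3-D positions (same unit as the inputs)."""
    return math.dist(p_true, p_pred)


def orientation_error_deg(q_true, q_pred):
    """Rotation angle in degrees between two quaternions.

    Quaternions are assumed to be (w, x, y, z); they are normalized here,
    so the network output does not need to be unit length.
    """
    def normalize(q):
        norm = math.sqrt(sum(c * c for c in q))
        if norm == 0.0:
            raise ValueError("zero-norm quaternion")
        return [c / norm for c in q]

    a, b = normalize(q_true), normalize(q_pred)
    # q and -q encode the same rotation, so use the absolute dot product.
    # Clamp to 1.0 to guard acos against floating-point overshoot.
    dot = min(1.0, abs(sum(x * y for x, y in zip(a, b))))
    return math.degrees(2.0 * math.acos(dot))


def summarize(true_poses, pred_poses):
    """Median position and orientation error over paired (position, quaternion) poses."""
    pos = [position_error(pt, pp) for (pt, _), (pp, _) in zip(true_poses, pred_poses)]
    rot = [orientation_error_deg(qt, qp) for (_, qt), (_, qp) in zip(true_poses, pred_poses)]
    return median(pos), median(rot)
```

Under these assumptions, `summarize` would be called once per model and dataset with the ground-truth poses and the predicted poses to obtain one pair of numbers comparable across the three configurations.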

ACKNOWLEDGMENT

This research project is supported by A*STAR under its RIE2020 Advanced Manufacturing and Engineering (AME) Industry Alignment Fund - Pre-Positioning (IAF-PP) Grant No. A20H8a0241.

REFERENCES

[1] J. González-García, A. Gómez-Espinosa, E. Cuan-Urquizo, L. G. García-Valdovinos, T. Salgado-Jiménez, and J. A. E. Cabello, “Autonomous Underwater Vehicles: Localization, Navigation, and Communication for Collaborative Missions,” Applied Sciences, vol. 10, no. 4, p. 1256, Feb. 2020.

[2] L. Peng and M. Chitre, “Regressing Poses from Monocular Images in an Underwater Environment,” 2022 OCEANS - Chennai, in press.

[3] A. Kendall, M. Grimes, and R. Cipolla, “PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization,” 2015 IEEE International Conference on Computer Vision (ICCV), 2015, pp. 2938–2946, doi: 10.1109/ICCV.2015.336.

[4] S. Hochreiter and J. Schmidhuber, “Long Short-Term Memory,” Neural Computation, vol. 9, no. 8, pp. 1735–1780, Nov. 1997, doi: 10.1162/neco.1997.9.8.1735.

[5] F. Walch, C. Hazirbas, L. Leal-Taixé, T. Sattler, S. Hilsenbeck, and D. Cremers, “Image-based localization using LSTMs for structured feature correlation,” 2017 IEEE International Conference on Computer Vision (ICCV), 2017.

[6] A. Kendall and R. Cipolla, “Geometric Loss Functions for Camera Pose Regression with Deep Learning,” 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 6555–6564, doi: 10.1109/CVPR.2017.694.

[7] C. Szegedy et al., “Going deeper with convolutions,” 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 1–9, doi: 10.1109/CVPR.2015.7298594.

[8] A. Chaudhary, R. Mishra, B. Kalyan, and M. Chitre, “Development of an Underwater Simulator using Unity3D and Robot Operating Systems,” 2021 OCEANS - San Diego, in press.

[9] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.
