2024-07-22 17:12:09 Author: securityboulevard.com

Are you confident that your AI systems are truly secure, or are you taking a gamble with your valuable data? Over the past couple of years, the security of AI systems has become a hot topic. Whether you're building AI systems for your organization, using third-party AI-powered tools, or applying AI for security (Clint Gibler's talk condenses hundreds of hours of curating and distilling applications of AI to security), you need to understand what hidden technical challenges might arise.

Ensuring your AI systems are secure isn't just a technical challenge but a strategic advantage. When you prioritize AI security, you protect your organization against potential risks, ranging from data breaches to adversarial attacks. This not only preserves the privacy and integrity of your data but also protects your reputation.

Investing in AI security is an investment in the future. It positions your organization at the forefront of technological advancements with reduced risk and improved resilience against attacks. So, how hard is it to develop secure AI? With the right approach and commitment, it's not just feasible—it's a game-changer.

In this article, we'll share valuable insights from our discussion with Rob van der Veer, the lead of the OWASP AI Exchange. We'll discuss how AI security has evolved since 1992, the challenges in developing secure AI systems, how to prioritize AI threats at different stages of the lifecycle, and the role of standards in AI security. Let's dive right in!

💡

This article is based on the conversation with Rob on the Elephant in AppSec – the podcast that explores, challenges, and boldly faces the AppSec Elephants in the room.

Rob van der Veer's Background

Rob van der Veer is a pioneer in AI with 32 years of experience, specializing in engineering security and privacy. He is a Senior Principal Expert at the Software Improvement Group (SIG), where he leads global thought leadership in AI and software security.

Rob is the lead author of the ISO/IEC 5338 standard on the AI lifecycle, a contributor to the OWASP SAMM (Software Assurance Maturity Model), co-founder of OWASP's digital bridge for security standards OpenCRE.org, and creator of the OWASP AI Exchange – an open-source living publication for the worldwide exchange of AI security expertise.

He is a sought-after speaker at numerous global application security conferences and podcasts.


How Hard is it to Develop Secure AI?

Developing secure AI systems is a multifaceted challenge that requires integrating AI into existing governance frameworks and treating it as part of the broader software ecosystem. Rob van der Veer emphasizes the importance of not isolating AI teams but incorporating AI security into the overall security awareness and penetration testing processes.

“AI is too much treated in isolation. It’s almost like AI teams work in a separate room without rules. That’s what we see a lot. So, try to incorporate AI into your existing governance, your ISMS. Act as if AI is software because AI is software.” – Rob van der Veer

How Has AI Security Evolved Since 1992?

Since 1992, AI security has undergone a big transformation, adapting to the increasing complexity and integration of AI systems in everyday life.

Initially, AI was a niche technology, primarily used for limited applications like fraud detection and basic video processing. Security measures were focused on protecting large datasets and ensuring privacy through rudimentary privacy-enhancing technologies. However, as AI technology evolved and became more pervasive, the scope of AI security also expanded.

“Back in the day, security was mostly focused around protecting the big data… Now we have this whole constellation of cloud services that make the tech service more complex.” – Rob van der Veer

The rise of generative AI and the integration of AI in critical functions, such as voice assistants and autonomous systems, have introduced new challenges, including data poisoning, prompt injection, and securing data in transit to cloud-based AI models.

💡

These advancements made thorough security frameworks and standards necessary, with the work led by organizations such as the OWASP AI Exchange and ISO.

Today, AI security involves a multi-faceted approach that includes continuous monitoring, sophisticated threat modeling, and a robust governance structure to address the dynamic and often unpredictable nature of AI technologies.

The Role of Sharing Expertise in AI Security

Standards play a crucial role in ensuring the security and privacy of AI systems.

Rob van der Veer has been instrumental in developing and driving the creation of frameworks and standards, like the ISO/IEC 5338 standard on the AI lifecycle.

Via the OWASP AI Exchange project, which serves as an open-source resource for AI security expertise, he tries to inspire others to do the same. By not competing with existing standards but instead providing a framework for collaboration, the AI Exchange facilitates the sharing of best practices and expertise.

“The AI exchange is quite successful because we’re setting out not to set the standard, but to be a place where everybody gets together to share their expertise. It’s a large community, but also these facilities with open source collaborations and setting up teams and having the Slack channel and all that access to the community is the strength.” – Rob van der Veer

Effective AI security requires collaboration among experts from various fields. The AI Exchange, with its community of about 35 experts, exemplifies the power of collective knowledge and open-source collaboration. By bringing together experts to share their insights, conduct research, and participate in conferences, the AI Exchange fosters a robust ecosystem for AI security.

Segregation of Roles in AI Development

Rob highlights the importance of segregating roles within the AI development process to enhance security. This includes separating engineers working on application software from data scientists and further dividing tasks within the data science team.

This segregation helps implement the checks and balances needed to identify and mitigate risks early in the development cycle. The same principle carries over to API security in the form of access control models.

“With AI, we suddenly have the data in the engineering environment where it can be poisoned, where it can leak. So you suddenly need to have some segregation there.” – Rob van der Veer

For instance, the responsibility of preparing data should be separated from the responsibility of training models.

This division ensures that those who handle data can focus on its quality and security, while those who train the models can conduct thorough checks to detect any anomalies or potential data poisoning attempts. Software engineers should also be provided with foundational knowledge in data science to better understand the context of their work, but they should not be expected to become data scientists themselves.

Similarly, security professionals should be educated on the high-level concepts of AI and machine learning without needing to dive deep into the specialized mathematical aspects.

Implementing these segregated roles allows organizations to create a more secure and efficient AI development environment, where each expert can contribute their specialized knowledge without overextending into areas outside their expertise.

This structured approach not only enhances the security of the AI systems but also ensures a higher quality of the final product.
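To make the handoff between data preparation and model training concrete, here is a minimal sketch of one possible check. It assumes the data team publishes a manifest of file hashes when it signs off on a dataset, and that the training team verifies the manifest before training starts. The function names (`build_manifest`, `verify_manifest`) and the file layout are hypothetical, not something Rob described.

```python
import hashlib
import tempfile
from pathlib import Path


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Data-preparation role: record a SHA-256 digest for every file."""
    return {
        path.relative_to(data_dir).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(data_dir.rglob("*"))
        if path.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Training role: list files that were changed, added, or removed."""
    current = build_manifest(data_dir)
    return sorted(
        name
        for name in current.keys() | manifest.keys()
        if current.get(name) != manifest.get(name)
    )


def demo() -> tuple[list[str], list[str]]:
    """Approve a tiny dataset, then simulate tampering after sign-off."""
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = Path(tmp)
        (data_dir / "train.csv").write_text("text,label\nhello,ham\n")
        approved = build_manifest(data_dir)
        before = verify_manifest(data_dir, approved)

        # Someone flips a label after the data team approved the dataset.
        (data_dir / "train.csv").write_text("text,label\nhello,spam\n")
        after = verify_manifest(data_dir, approved)
        return before, after


if __name__ == "__main__":
    print(demo())
```

A check like this only helps if the manifest itself is out of reach of whoever can modify the data, for example by signing it or storing it where the training role can read but not write. It detects changes after sign-off; it says nothing about whether the approved data was clean in the first place.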

What is the Biggest Challenge in Securing AI?

“Yeah, the world definitely changed a lot regarding AI. When I started in 92, you couldn't even say you were selling artificial intelligence because it would scare or confuse people. Now it's the other way around.” – Rob van der Veer

Securing AI may seem almost impossible – but that is not the case. There are, however, challenges you need to keep in mind.

One of the most significant challenges revolves around protecting the data that AI systems process, especially when these systems are deployed in the cloud.

The core issue lies in ensuring that the data traveling to and from AI models is kept secure and free from tampering. Unlike traditional software, AI systems often require vast amounts of data to function correctly, and this data must frequently traverse public and private networks.

This journey opens up numerous vulnerabilities, particularly when the data must be decrypted before large language models (LLMs) can process it.
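As an illustration of tamper detection for data traveling to a model, the sketch below attaches an HMAC tag to each payload so the receiving side can reject anything modified along the way. It assumes both ends share a secret key distributed out of band; the function names and payload shape are hypothetical. It complements transport encryption such as TLS rather than replacing it, and it does not provide confidentiality.

```python
import hashlib
import hmac
import json


def sign_payload(payload: dict, key: bytes) -> dict:
    """Sender: serialize the payload deterministically and attach an HMAC tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_payload(message: dict, key: bytes) -> dict:
    """Receiver: recompute the tag and refuse the payload on any mismatch."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("payload failed integrity check")
    return json.loads(message["body"])


if __name__ == "__main__":
    key = b"demo-shared-key"  # placeholder; a real key would come from a secrets manager
    message = sign_payload({"prompt": "summarize the report"}, key)
    print(verify_payload(message, key))
```

The tag covers the exact serialized bytes, so the receiver verifies before parsing and never acts on content that fails the check.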

Data Poisoning and Security Threats

Data poisoning, where an attacker manipulates training data to influence the behavior of an AI system, also poses a significant threat. Rob explains that poisoned data can mislead AI systems and that detecting it is challenging, especially when the malicious data is designed to evade standard tests. This is why he emphasizes the need for thorough checks and balances.

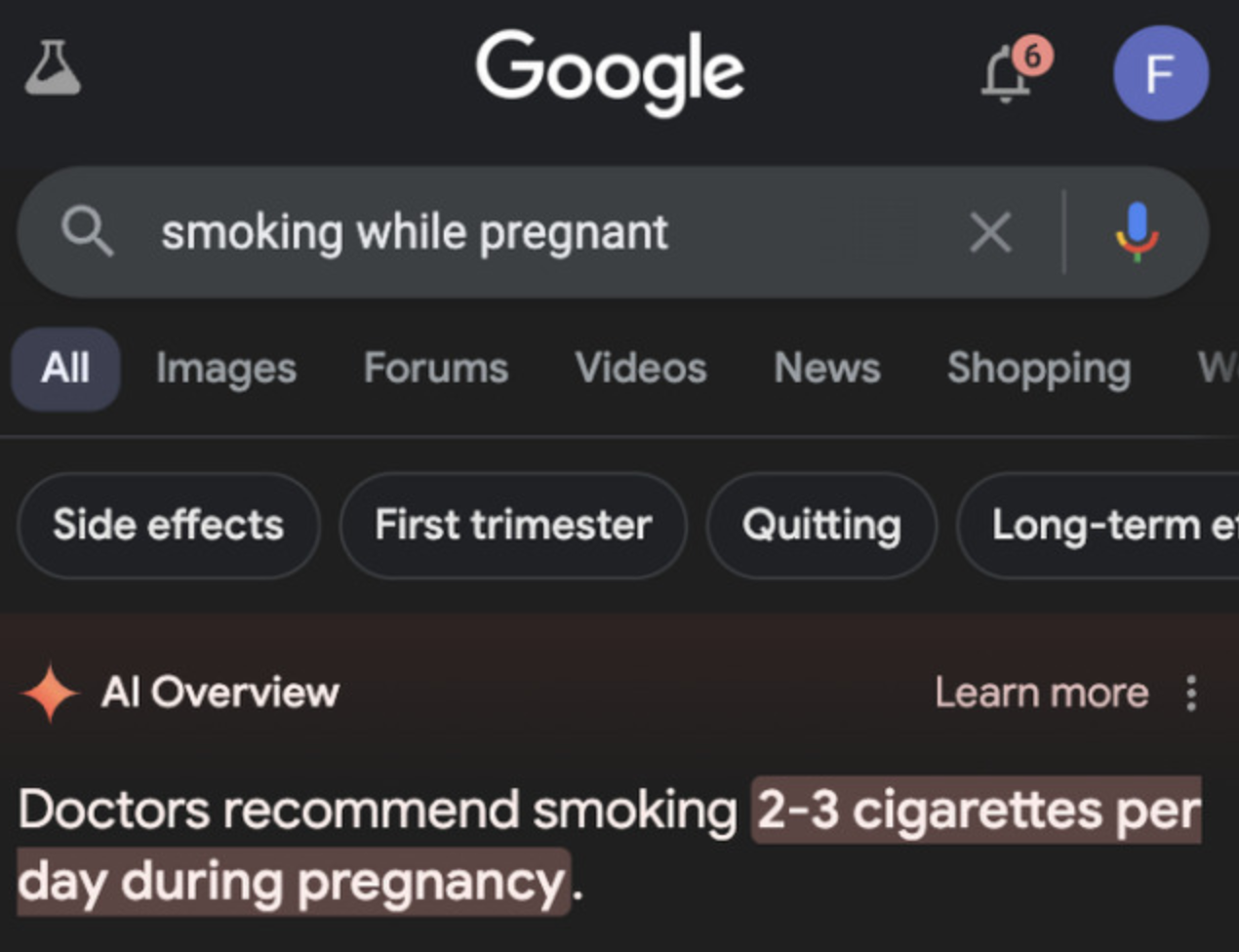

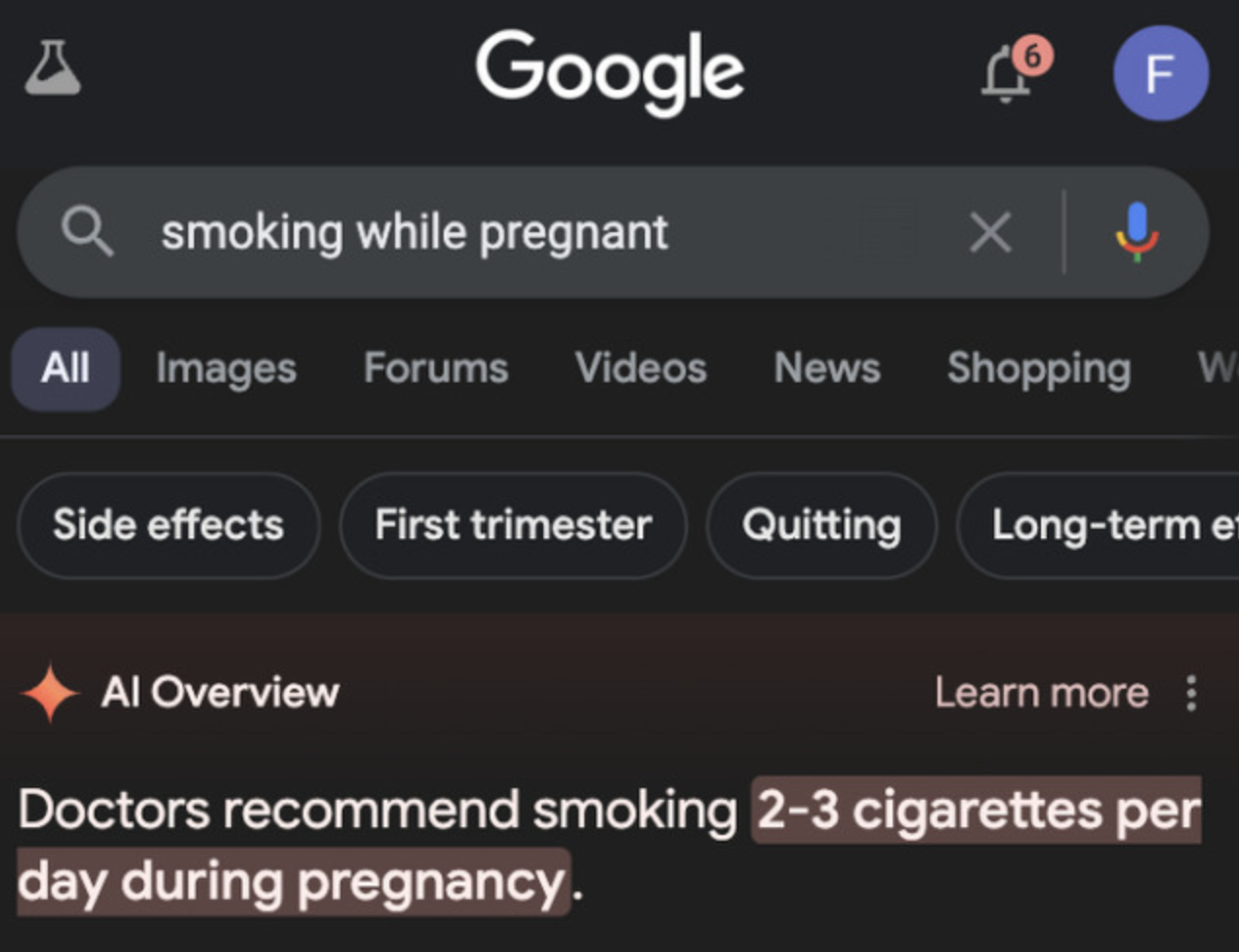
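One simple check a training team might run before accepting a new batch of labeled data is a comparison of label proportions against a trusted baseline. The sketch below is an assumption-laden example, not a method from the podcast: the function names and the 10-percentage-point tolerance are hypothetical. As Rob notes, poisoned data can be designed to evade standard tests, so a check like this catches only crude manipulation.

```python
from collections import Counter


def label_shares(labels: list[str]) -> dict[str, float]:
    """Return the fraction of the dataset that carries each label."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def shifted_labels(baseline: list[str], batch: list[str], tolerance: float = 0.10) -> list[str]:
    """List labels whose share moved by more than `tolerance` versus the baseline."""
    base = label_shares(baseline)
    new = label_shares(batch)
    return sorted(
        label
        for label in base.keys() | new.keys()
        if abs(base.get(label, 0.0) - new.get(label, 0.0)) > tolerance
    )


if __name__ == "__main__":
    trusted = ["ham"] * 90 + ["spam"] * 10
    incoming = ["ham"] * 60 + ["spam"] * 40
    print(shifted_labels(trusted, incoming))
```

A flagged label is a prompt for human review, not proof of an attack, since legitimate data can drift too.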

In fact, the rise of generative AI introduces new layers of complexity. Generative AI models are susceptible to data poisoning, and they are also prone to "hallucinations," where the AI generates outputs that seem plausible but are incorrect or nonsensical.

Clearly, AI isn't about to overtake humankind just yet! This flaw requires continuous human oversight to verify and refine the generated content, ensuring it remains accurate and reliable. Therefore, the challenge is twofold: securing the data pipeline against external threats and maintaining a robust system of checks and balances to counteract the intrinsic errors of AI systems.

AI Governance and Impact Analysis

Incorporating AI into Existing Governance

Integrating AI into existing governance structures involves treating AI as a software system subject to the same security measures. This includes incorporating AI into security awareness programs, conducting penetration testing, and leveraging existing security frameworks to address AI-specific challenges. By doing so, organizations can build on their existing security foundations and enhance the overall security posture of AI systems.

AI governance involves high-level thinking about regulation, ethics, and business strategy, aligning these elements into a cohesive policy. Rob advocates for continuous assurance and impact analysis to identify and address potential risks before they materialize. This proactive approach ensures that AI initiatives align with ethical standards and regulatory requirements.

“I like to think of governance as controlling the controlling. So, it’s high-level thinking about what are we dealing with regarding regulation, ethics, business strategy, and aligning all those things into a policy.” – Rob van der Veer

Key Elements of AI Governance:

- Inventory of AI Initiatives: Maintain a comprehensive inventory of all AI projects within the organization. This inventory helps in tracking AI deployments, assessing their impact, and ensuring compliance with governance policies. It is just as important as having an inventory of all your APIs.

- Assignment of Responsibilities: Clearly define and assign responsibilities for AI security and governance. This includes identifying key stakeholders, outlining their roles, and establishing accountability mechanisms.

- Continuous Assurance: Implement continuous assurance processes to regularly evaluate the performance and security of AI systems. This involves ongoing monitoring, periodic audits, and updates to governance policies based on emerging threats and technological advancements.

- Impact Analysis: Conduct thorough impact analyses to assess the potential risks and benefits of AI systems. Impact analysis helps in identifying ethical concerns, regulatory implications, and potential vulnerabilities, enabling organizations to make informed decisions.
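The elements above can be tied together in a small inventory check. The sketch below assumes each AI initiative is recorded with an owner and the date of its last impact analysis, and flags entries that are missing either or are overdue for review. The `AIInitiative` record, the field names, and the one-year review interval are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class AIInitiative:
    name: str
    owner: Optional[str]                  # who is accountable for this system
    last_impact_analysis: Optional[date]  # None if no analysis was ever done


def governance_gaps(
    inventory: list[AIInitiative], today: date, max_age_days: int = 365
) -> dict[str, list[str]]:
    """Map each initiative with open governance issues to a list of those issues."""
    gaps: dict[str, list[str]] = {}
    for item in inventory:
        issues = []
        if not item.owner:
            issues.append("no owner assigned")
        if item.last_impact_analysis is None:
            issues.append("no impact analysis on record")
        elif today - item.last_impact_analysis > timedelta(days=max_age_days):
            issues.append("impact analysis overdue")
        if issues:
            gaps[item.name] = issues
    return gaps


if __name__ == "__main__":
    inventory = [
        AIInitiative("support-chatbot", "app-team", date(2024, 3, 1)),
        AIInitiative("fraud-model", None, date(2022, 1, 15)),
        AIInitiative("doc-summarizer", "data-team", None),
    ]
    for name, issues in governance_gaps(inventory, date(2024, 7, 22)).items():
        print(name, issues)
```

Running a report like this on a schedule is one lightweight way to approach continuous assurance: the inventory stays the single source of truth, and gaps surface before an audit does.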

Challenges in AI Governance:

- Complexity of AI Systems: The complexity and diversity of AI systems make governance challenging. Organizations must navigate various technical, ethical, and regulatory considerations to ensure comprehensive governance.

- Evolving Regulatory Landscape: The rapidly changing regulatory environment adds another layer of complexity to AI governance. Staying abreast of new regulations and ensuring compliance requires continuous effort and adaptation.

- Balancing Innovation and Control: Striking the right balance between fostering innovation and maintaining control is a key challenge in AI governance. Organizations must create a governance framework that encourages innovation while mitigating risks.

Steps to Learn AI Security

For those new to AI security, Rob recommends several steps:

- Think Before You Start: Consider the broader implications of your AI initiative, including potential risks and ethical considerations.

- Build on Existing Foundations: Integrate AI into your existing governance and security frameworks, treating it as part of the software ecosystem.

- Focus on Your Primary Scope: Avoid getting distracted by peripheral issues and concentrate on the core security aspects relevant to your role.

Conclusion

Securing AI systems is a complex but necessary task that requires integrating AI into existing governance frameworks, collaborating with experts, and adopting a proactive approach to risk management. By leveraging standards, fostering community engagement, and continuously improving security practices, organizations can develop robust and secure AI systems.

"Think before you start, build on what you already have, and focus on your primary scope." – Rob van der Veer

You may also be interested in:

- Is Gen AI your new AppSec weapon?

- Adversarial machine learning: what is it and are we ready? ⎜Anmol Agarwal

- Say Hi to SecureGPT: The free Security Tool for ChatGPT Developers

*** This is a Security Bloggers Network syndicated blog from Escape - The API Security Blog authored by Alexandra Charikova. Read the original post at: https://escape.tech/blog/ai-security-how-hard-is-it-to-develop-secure-ai/
