2024-7-31 05:15:52 Author: securityboulevard.com

We Can Do Better

As a Detection Engineer and Threat Hunter, I love MITRE ATT&CK and I wholeheartedly believe that you should too. However, there’s something about the way that some folks leverage MITRE ATT&CK that has me concerned. Specifically, it is the lack of both precision and accuracy in how mappings are sometimes applied to controls. While we can debate the utility and validity of using MITRE ATT&CK as a “coverage map” or “benchmark” of any kind, the reality is that many teams, organizations, and security products use MITRE ATT&CK (for good reasons) to assess, measure, and communicate breadth and depth of detection and/or prevention capability. If that’s the case, then it seems pertinent to talk about how we can present a more realistic picture of coverage by examining the quality of our mappings more closely. In this article, I’ll present an argument for why precision and accuracy matter, provide some examples of common mistakes and how to fix them, and finally share some ideas and recommendations for mapping detections properly moving forward.

Why Accuracy & Precision Matter

Accuracy and precision are not just inherently desirable traits; they are essential for the effective use of the MITRE ATT&CK framework within any organization. Accurate and precise mappings are crucial for maximizing the framework’s utility. To understand their importance, let’s first define these terms.

In this context, “accuracy” refers to whether the applied Tactic, Technique, or Sub-Technique correctly represents the activity identified by the detection. Imagine a literal map with cities in the wrong states, states in the wrong regions, and countries in the wrong parts of the world. Such a map would be unreliable for understanding our location or navigating to a new one.

Similarly, inaccurate mappings in threat detection lead to a distorted understanding of our security posture and undermine the integrity and utility of our efforts. This can result in misallocated resources, focusing on areas that don’t need attention while neglecting those that do. Such outcomes are detrimental to Threat Hunters and Detection Engineers, who already face a shortage of useful and actionable tools to understand and navigate the threat landscape effectively. Accurate mappings are therefore critical for these professionals to communicate the value of their work and make informed decisions.

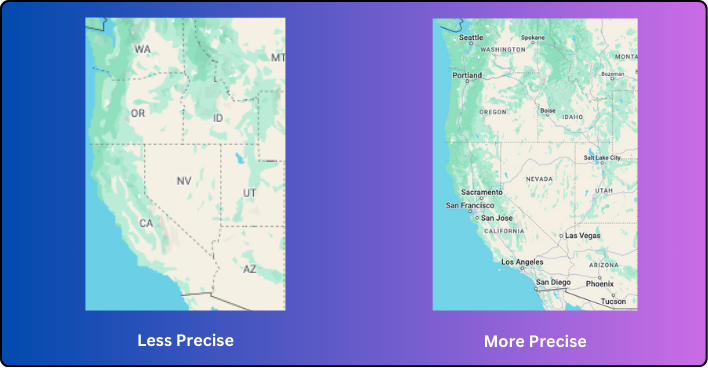

In this context, “precision” refers to the level of specificity or granularity in a given mapping. The ATT&CK Framework is structured as a hierarchy of abstractions: Tactics encompass Techniques, which in turn encompass Sub-Techniques. For Detection Engineers and Threat Hunters, descending this hierarchy results in more granular and specific categories, thereby increasing their usefulness.

To use the metaphor of a literal map, a precision problem would be akin to a map of a country that only shows states or provinces but omits cities and towns. Such a map might help you understand the general direction needed to travel from one state to another, but it would be inadequate for navigating to a specific city within a state.

Similarly, imprecise mappings in threat detection provide only a “general idea” of our security posture and potential areas of focus. While this may offer some strategic insight, it ultimately falls short of being highly actionable. Precise mappings, down to the most granular elements of the ATT&CK Framework, are essential for making informed, effective decisions in threat detection and response.
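To make the idea of precision concrete, here is a minimal sketch that reports how far down the hierarchy a given ATT&CK ID reaches. It relies only on the public ID formats (Tactics such as TA0005, Techniques such as T1112, Sub-Techniques such as T1562.001); the function name `mapping_level` is made up for illustration.

```python
import re

# ATT&CK ID shapes: Tactic "TA0005", Technique "T1112", Sub-Technique "T1562.001".
_TACTIC = re.compile(r"^TA\d{4}$")
_TECHNIQUE = re.compile(r"^T\d{4}$")
_SUB_TECHNIQUE = re.compile(r"^T\d{4}\.\d{3}$")


def mapping_level(attack_id: str) -> str:
    """Return the hierarchy level of an ATT&CK ID (hypothetical helper)."""
    attack_id = attack_id.strip().upper()
    if _SUB_TECHNIQUE.match(attack_id):
        return "sub-technique"  # most precise
    if _TECHNIQUE.match(attack_id):
        return "technique"
    if _TACTIC.match(attack_id):
        return "tactic"  # least precise
    return "unknown"
```

A mapping that stops at "tactic" is the state-level map from the metaphor above; "sub-technique" is the street-level one.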

Without explicit, intentional attention to the quality of mappings from detections to ATT&CK, individual errors add up, giving us a “view of the world” that simply isn’t realistic or useful. (Can you imagine what’s happening on the security vendor side? If it is in their best interest to stretch the truth, do we really know how realistic their coverage is?)

Common Mistakes

Unlike with a world map, a lack of accuracy or precision is not always obvious to the average observer. Mapping detection capabilities to MITRE ATT&CK properly is not easy. The ATT&CK Framework is a vast and deep source of knowledge that, despite being quite granular, still has gaps and is still subject to some degree of expert interpretation. In this section, we’ll highlight a few examples of both accuracy and precision errors using Sigma rules from the SigmaHQ repository.

Example 1:

Tactic: Defense Evasion

Technique(s): None

Sub-Technique(s): None

This is the most classic precision error: assigning a Tactic without attributing it to a particular Technique or Sub-Technique.

- T1036: Masquerading should be added.

– While there are Sub-Techniques within T1036 that are similar, they are not the same, so no Sub-Technique can be assigned.
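This kind of Tactic-only mapping is easy to check for mechanically. The sketch below assumes Sigma-style tags such as `attack.defense_evasion` and `attack.t1036`, and it accepts hyphenated Tactic names as well; `is_tactic_only` is a hypothetical helper, not part of any Sigma tooling.

```python
import re

# The 14 Enterprise ATT&CK Tactic names, spelled the way Sigma tags write them.
TACTICS = {
    "reconnaissance", "resource_development", "initial_access", "execution",
    "persistence", "privilege_escalation", "defense_evasion",
    "credential_access", "discovery", "lateral_movement", "collection",
    "command_and_control", "exfiltration", "impact",
}
TECHNIQUE_TAG = re.compile(r"^attack\.t\d{4}(\.\d{3})?$")


def is_tactic_only(tags):
    """True when a rule names a Tactic but no Technique or Sub-Technique."""
    normalized = [t.strip().lower().replace("-", "_") for t in tags]
    has_tactic = any(
        t.startswith("attack.") and t[len("attack."):] in TACTICS
        for t in normalized
    )
    has_technique = any(TECHNIQUE_TAG.match(t) for t in normalized)
    return has_tactic and not has_technique
```

A rule tagged only `attack.defense_evasion` is flagged; adding `attack.t1036` clears it. The check says nothing about whether the Technique is the right one, which is the accuracy problem covered in the later examples.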

Example 2:

Name: Activate Suppression of Windows Security Center Notifications

Tactic: Defense Evasion

Technique(s): T1112: Modify Registry

Sub-Technique(s): None

This is a nuanced precision error: the detection is missing a Sub-Technique that overlaps with T1112 but is not mutually exclusive with it, so both mappings apply.

- T1562.001: Impair Defenses: Disable or Modify Tools should be added here.

Example 3:

Tactic: Initial Access

Technique(s): T1133 External Remote Services

Sub-Technique(s): None

This is an accuracy error.

- T1133 should be removed because this Technique is for remote access services such as VPN, RDP, VNC, SSH. Also, with T1133, you’re more likely to need “Valid Accounts”, which are not present in this activity.

- T1190: Exploit Public-Facing Application should be added instead.

Example 4:

Tactic: Credential Access

Technique(s): T1187: Forced Authentication

Sub-Technique(s): None

Almost accurate, but not quite! PetitPotam is an example of T1187, but this detection is not identifying PetitPotam, just something (Rubeus) that is likely to happen after PetitPotam is used.

- T1187 is invalid.

- T1078.002: Valid Accounts: Domain Accounts is probably more accurate.

Example 5:

Tactic: Execution, Defense Evasion, Impact

Technique(s): T1140: Deobfuscate/Decode Files or Information, T1485: Data Destruction, T1498: Network Denial of Service

Sub-Technique(s): T1059.001: Command and Scripting Interpreter: PowerShell

This is primarily an accuracy error; the rule and the threat that it detects are kind of dense, so it would be easy to get confused.

- This detection looks for specific PowerShell commands related to BlackByte’s Defense Evasion.

– T1562.001 Impair Defenses: Disable or Modify Tools should be added.

– T1027.010: Obfuscated Files or Information: Command Obfuscation should be added.

– T1140 should be removed, as this is not an example of deobfuscation.

- The commands in question are not part of the “encryption routine”.

– Impact is not a valid Tactic for this detection.

– T1485 and T1498 are not valid Techniques in this instance.

– T1486: Data Encrypted for Impact would be more valid if the commands were part of the encryption routine, but they are not.

Don’t believe me? Look at what the research article, referenced in the rule, suggests for these specific commands (bottom of article).

Getting It Right

While it may seem like nit-picking, these small errors, when compounded across hundreds of rules, can lead to a significant misrepresentation of reality. Although there is no definitive formula for mapping rules accurately, there are several high-level principles that can enhance the accuracy and precision of our mappings.
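To see how the errors compound, the sketch below compares the coverage implied by the original mappings in Examples 1 through 5 with the coverage implied by the corrections suggested above. The dictionary keys are placeholder labels rather than real rule names, and `coverage_drift` is a hypothetical helper.

```python
# Claimed vs. corrected mappings, taken from Examples 1-5 above.
# Keys are placeholder labels, not actual Sigma rule names.
claimed = {
    "example_1": set(),
    "example_2": {"T1112"},
    "example_3": {"T1133"},
    "example_4": {"T1187"},
    "example_5": {"T1140", "T1485", "T1498", "T1059.001"},
}
corrected = {
    "example_1": {"T1036"},
    "example_2": {"T1112", "T1562.001"},
    "example_3": {"T1190"},
    "example_4": {"T1078.002"},
    "example_5": {"T1059.001", "T1562.001", "T1027.010"},
}


def coverage(mappings):
    """Union of every ATT&CK ID that any rule maps to."""
    return set().union(*mappings.values())


def coverage_drift(claimed, corrected):
    """Split the difference into coverage we claim but lack, and coverage we have but hide."""
    before, after = coverage(claimed), coverage(corrected)
    return {"phantom": before - after, "missing": after - before}


drift = coverage_drift(claimed, corrected)
```

With only five rules, `drift["phantom"]` holds five IDs that a coverage map would show but that are not really detected, and `drift["missing"]` holds five that are detected but never shown.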

Keep It Simple

- Focus on what is immediately observable and not on what could likely be inferred from the data.

– For example, detecting execution of a malware/tool that exhibits a Technique is not the same thing as detecting the Technique itself.

- Fewer Techniques is better than more Techniques.

– Detections that look like they could be mapped to multiple Techniques at the same time are also likely not specific enough to qualify for a single Technique.

- Map to Sub-Techniques where possible.

– Only use a Technique when there’s no other option.
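The last two principles can be turned into review prompts. The sketch below again assumes Sigma-style tags (`attack.t1112`, `attack.t1562.001`); the threshold of two Techniques and the name `review_notes` are assumptions for illustration only. The output is a list of questions for a human reviewer, not a verdict, because a Technique-level mapping is sometimes the correct answer (see Example 1).

```python
import re

TECHNIQUE_TAG = re.compile(r"^attack\.(t\d{4})(\.\d{3})?$")
MAX_TECHNIQUES = 2  # hypothetical threshold; tune it to your own rule set


def review_notes(tags, max_techniques=MAX_TECHNIQUES):
    """Return review prompts for a rule's ATT&CK tags (hypothetical helper)."""
    notes = []
    matches = [m for m in (TECHNIQUE_TAG.match(t.strip().lower()) for t in tags) if m]
    ids = [m.group(0)[len("attack."):].upper() for m in matches]
    if len(ids) > max_techniques:
        notes.append(f"{len(ids)} Techniques mapped; is the detection specific enough?")
    for m, attack_id in zip(matches, ids):
        if m.group(2) is None:  # no ".NNN" suffix, so this is Technique-level
            notes.append(f"{attack_id} is Technique-level; check whether a Sub-Technique fits")
    return notes
```

Run against the tags from Example 5, this produces four prompts: one for the number of Techniques and one for each of the three Technique-level IDs.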

Don’t Be Greedy

- Not all Techniques/Sub-Techniques can be detected with logs and telemetry.

– Most Techniques within the Reconnaissance and Resource Development Tactics are fundamentally undetectable through internal logs and telemetry.

- Not every detection needs to be tagged with a Technique/Sub-Technique.

– If it’s not obvious which Technique or Sub-Technique applies to your detection, refrain from mapping it.

- Avoid mapping rules to Techniques/Sub-Techniques that you don’t fully understand.

– Find the boundaries of your knowledge, accept that you’ve hit a wall, and keep learning to move beyond them.
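The first point in this list can also be surfaced automatically. This is a small sketch, assuming Sigma-style Tactic tags, that returns any tag claiming Reconnaissance or Resource Development so a reviewer can ask what internal telemetry the rule actually relies on. `pre_compromise_tags` is a hypothetical name, and the check only looks at Tactic tags, not at the Techniques underneath them.

```python
# Tactics whose activity mostly happens outside the defender's environment.
PRE_COMPROMISE_TACTICS = {"reconnaissance", "resource_development"}


def pre_compromise_tags(tags):
    """Return the tags that claim a Reconnaissance or Resource Development Tactic."""
    flagged = []
    for tag in tags:
        name = tag.strip().lower().replace("-", "_")
        if name.startswith("attack.") and name[len("attack."):] in PRE_COMPROMISE_TACTICS:
            flagged.append(tag)
    return flagged
```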

Become A Better Researcher

- Improve your ability to find, interpret, and understand research on malware, tools, techniques, and vulnerabilities.

– Focus on the broader context rather than getting lost in the bits, bytes, and assembly.

– Learn how to read Proof of Concept (PoC) code or use an LLM to help you interpret code.

- Set up a lab environment for testing.

– Use Atomic Red Team tests to get more hands-on exposure to simple Techniques.

– Experiment with writing malware tools to better understand the ins and outs of operating systems.

Am I suggesting that getting mappings done properly is simple? No.

Is this asking a lot of practitioners? Probably, yes.

Should you do it anyway? Absolutely.

One of my many personal mottos has always been “there’s no free lunch when it comes to solving hard problems”. In other words, we don’t always realize that the “easy buttons” made available to us through modern technology were only made possible by the people who came before us, the people who actually did put in some seriously hard work somewhere earlier down the line to pave the way for everyone else.

Getting It Right With SnapAttack

Even though Detection Engineering and Threat Hunting have been around for years, it still feels like most of us are stuck at the starting line. At SnapAttack, we’ve been putting in the work to solve the hard problem of Threat Detection, enabling customers to stand on our shoulders and start ahead of us, and giving them the tools to grow their capabilities beyond us. While I’m not here to give you the hard sell, it would be negligent to not mention at least a few things that we do at SnapAttack that are relevant to this article’s topic.

- Pre-Written Tested Detections

– A core component of our solution is a growing library of pre-written Sigma rules that we develop and test against Sandbox sessions conducted by our own Threat Research team.

– Yes, we map the rules to ATT&CK and even adjust the mappings on rules that we don’t write ourselves but pull into the library.

- MITRE ATT&CK Prioritization & Coverage Reporting

– First, the platform uses best-in-breed Threat Intelligence to prioritize Techniques & Sub-Techniques according to an organization’s industry and region.

– Next, we import your existing rules to understand current coverage.

— We have an additional service that helps you map rules to ATT&CK and/or check the accuracy and precision of your existing mappings.

– Finally, we display all this information in actionable reports and dashboards that help you implement detection capability for the highest priority threats first.

— ATT&CK Navigator JSON files look good, but they aren’t functional.

- Research Sandbox

– The platform includes a basic Sandbox that enables operators to do hands-on-keyboard research without having to spin up their own lab environment.

— SnapAttack’s internal threat research team uses the Sandbox to understand threats, build new detections from scratch, and test detections.

— Platform users can also leverage the Sandbox to enhance their existing research capabilities or simply consume Sandbox reports to boost their knowledge.

- Atomic Red Team Tests

– Detection content in our platform is programmatically tied back to Atomic Red Team Tests that we pull in from the community and develop ourselves through the Sandbox.

— This gives advanced operators the ability to simulate simple but specific attacks to explore log visibility and detection capability at an individual level.

If any of this sounds even remotely interesting — please reach out for a demo of our platform today! We’d love to show you exactly how we can help you detect more threats faster with SnapAttack.

Detection Rules & MITRE ATT&CK Techniques was originally published in SnapAttack on Medium, where people are continuing the conversation by highlighting and responding to this story.

*** This is a Security Bloggers Network syndicated blog from SnapAttack - Medium authored by Jordan Camba. Read the original post at: https://blog.snapattack.com/detection-rules-mitre-att-ck-techniques-7e7d7895b872?source=rss----3bac186d1947---4
