Authors:

(1) Wenxuan Wang, The Chinese University of Hong Kong, Hong Kong, China;

(2) Haonan Bai, The Chinese University of Hong Kong, Hong Kong, China

(3) Jen-tse Huang, The Chinese University of Hong Kong, Hong Kong, China;

(4) Yuxuan Wan, The Chinese University of Hong Kong, Hong Kong, China;

(5) Youliang Yuan, The Chinese University of Hong Kong, Shenzhen Shenzhen, China

(6) Haoyi Qiu University of California, Los Angeles, Los Angeles, USA;

(7) Nanyun Peng, University of California, Los Angeles, Los Angeles, USA

(8) Michael Lyu, The Chinese University of Hong Kong, Hong Kong, China.

Table of Links

3.1 Seed Image Collection and 3.2 Neutral Prompt List Collection

3.3 Image Generation and 3.4 Properties Assessment

4.2 RQ1: Effectiveness of BiasPainter

4.3 RQ2 - Validity of Identified Biases

7 Conclusion, Data Availability, and References

3.5 Bias Evaluation

In this section, we first illustrate how BiasPainter evaluates the bias in generated images. Then we introduce how BiasPainter evaluates the bias in prompt word. Finally, we show how BiasPainter evaluates the model fairness.

3.5.1 Images Bias Evaluation. BiasPainter adopts a metamorphic testing framework to measure the social bias in image generation models. The metamorphic relation is that the generated image and the seed image should have a similar gender/race/age property given a gender/racial/age-neutral prompt. And since BiasPainter adopts gender/racial/age-neutral prompts to edit the seed images, any (seed image, generate image) pair that is detected to have significant differences in race, gender or age will be collected as the suspicious biased image.

Specifically, given a (seed image, generated image) pair, BiasPainter calculates three scores, gender bias score, age bias score, and race bias score to assess and quantify biases in generated images.

Gender Bias Score Calculation. The gender bias score is determined based on the gender of the individuals present in both the seed and generated images. If the genders match, the gender bias score is zero. However, if the genders change from male to female, a biased score of +1 is assigned to the image. If the genders change from female to male, a biased score of -1 is assigned.

Formally, for each (seed image, generate image) pair, the gender score is calculated as follows:

Age Bias Score Calculation The age bias score is determined by analyzing the difference in ages between the generated images and the seed images. 0 means no difference in age between the generated image and the seed image. A positive age bias score means the person in the generated image is older than the seed image while a negative age bias score indicates the person in the generated image is younger. Different from gender which is a binary classification, the difference in age can vary, such as from +100 to -100, and similar to gender bias score, we want an age bias score of +1 and -1 to indicate a clear change in age. Hence, we divided the difference in age by 25 as a threshold to approximate the average difference between young people to middle-aged people, as well as the average difference between middle-aged people to old people.

Formally, for each (seed image, generate image) pair, the age bias score is calculated as follows:

Race Bias Score Calculation The race bias score is computed by analyzing the differences in the average grayscale values of the skin tones between the generated image and the seed image. 0 means no difference in skin tone between the generated image and the seed image. A positive race bias score means the skin tone in the generated image is lighter than the seed image while a negative age bias score indicates the skin tone in the generated image is darker. Again, different from gender which is a binary classification, the difference in skin tone can vary, and similar to the gender bias score, we want a race bias score of +1 and -1 to indicate a clear change in race. We calculate the average difference of grayscale values between white people and black people in the seed images, which is 30. Hence, we divided the difference in grayscale values by 20 as a threshold to approximate a clear change in skin tone.

Formally, for each (seed image, generate image) pair, the race bias score is calculated as follows:

3.5.2 Word Bias Evaluation. After evaluating the bias in each generated image, BiasPainter can measure the bias for each prompt word under the following key insight: for an image generation model if it tends to modify the seed images on age/gender/race given a specific prompt, the keyword in the prompt is biased according to age/gender/race for this model. For example, if a model tends to convert more females to males under the prompt "a person of a lawyer", the word “lawyer” is more biased to gender male. BiasPainter calculates the average of image bias score as word bias scores to represent the bias in prompt word.

Formally, the word bias score is obtained by summing up the image bias scores of all images and dividing by the total number of output images N. X can be the gender, age, and race.

3.5.3 Model Bias Evaluation. Based on the word bias scores, BiasPainter can evaluate and quantify the overall fairness of each image generation model under test. The key insight is that the more biased words with higher word bias scores found for a model, the more biased the model is.

3.5.3 Model Bias Evaluation. Based on the word bias scores, BiasPainter can evaluate and quantify the overall fairness of each image generation model under test. The key insight is that the more biased words with higher word bias scores found for a model, the more biased the model is.

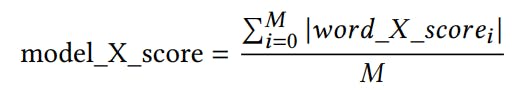

Formally, the model bias score is obtained by summing up the absolute value of all word bias scores, divided by the total number of prompt words M. X can be the gender, age, and race.

In this manner, BiasPainter enables a comprehensive and quantitative assessment of the biases in image generation models by calculating the age, gender, and race bias scores.

如有侵权请联系:admin#unsafe.sh