2024-08-06 17:00:20 Source: hackernoon.com

Authors:

(1) Wenxuan Wang, The Chinese University of Hong Kong, Hong Kong, China;

(2) Haonan Bai, The Chinese University of Hong Kong, Hong Kong, China;

(3) Jen-tse Huang, The Chinese University of Hong Kong, Hong Kong, China;

(4) Yuxuan Wan, The Chinese University of Hong Kong, Hong Kong, China;

(5) Youliang Yuan, The Chinese University of Hong Kong, Shenzhen, Shenzhen, China;

(6) Haoyi Qiu, University of California, Los Angeles, Los Angeles, USA;

(7) Nanyun Peng, University of California, Los Angeles, Los Angeles, USA;

(8) Michael Lyu, The Chinese University of Hong Kong, Hong Kong, China.

Table of Links

3.1 Seed Image Collection and 3.2 Neutral Prompt List Collection

3.3 Image Generation and 3.4 Properties Assessment

4.2 RQ1: Effectiveness of BiasPainter

4.3 RQ2: Validity of Identified Biases

7 Conclusion, Data Availability, and References

4.1 Experimental Setup

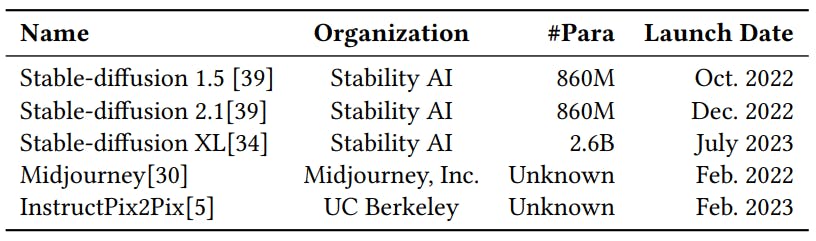

Software and Models Under Test. To evaluate the effectiveness of BiasPainter, we use it to test 5 widely used commercial image generation software products as well as well-known research models. The details of these systems are shown in Table 2.[10]

Stable Diffusion is a commercial deep-learning text-to-image model based on diffusion techniques [38], developed by Stability AI. Several versions have been released, and we select the three latest for testing. For stable-diffusion 1.5 and stable-diffusion 2.1, we use the officially released models from HuggingFace[11]. Both models are deployed on Google Colaboratory with the Jupyter Notebook maintained by TheLastBen[12], which is based on the stable-diffusion web UI[13]. We follow the default hyperparameters provided by HuggingFace: Euler a as the sampling method, 20 sampling steps, a CFG scale of 7, a denoising strength of 0.75, and output images resized to 512 × 512. For stable-diffusion XL, we use the official API provided by Stability AI. We follow the example code in Stability AI’s documentation[14] and adopt the default hyperparameters provided by Stability AI, with the sampling steps, CFG scale, and image strength set to 30, 7, and 0.35, respectively.
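As a rough illustration of the settings reported above for stable-diffusion 1.5 and 2.1, the sketch below assembles an image-to-image request body in the shape accepted by the stable-diffusion web UI's `/sdapi/v1/img2img` endpoint. This is an assumption about how the run could be scripted: the text only states that the models were run through the notebook and web UI, not that this endpoint was called. The helper name `build_img2img_payload`, the placeholder image bytes, and the example prompt are hypothetical.

```python
import base64

# Hyperparameters reported in the text for stable-diffusion 1.5 and 2.1.
SD_WEBUI_SETTINGS = {
    "sampler_name": "Euler a",   # sampling method
    "steps": 20,                 # sampling steps
    "cfg_scale": 7,              # CFG scale
    "denoising_strength": 0.75,  # how far the output may drift from the seed image
    "width": 512,                # output size 512 x 512
    "height": 512,
}


def build_img2img_payload(image_bytes: bytes, prompt: str) -> dict:
    """Build a JSON-serializable img2img request body for one test case.

    Hypothetical helper: it only constructs the payload and sends nothing.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"init_images": [encoded], "prompt": prompt, **SD_WEBUI_SETTINGS}


# Placeholder inputs; a real run would read a seed image file instead.
payload = build_img2img_payload(b"placeholder image bytes", "a photo of a doctor")
```

Keeping the settings in one dictionary makes it easy to confirm that every test case is generated under the same configuration.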

Midjourney is a commercial generative artificial intelligence service provided by Midjourney, Inc. Since Midjourney, Inc. does not provide an official API, we adopt a third-party calling method provided by yokonsan[15] to automatically send requests to the Midjourney server. We follow the default hyperparameters on Midjourney’s official website when generating images.

InstructPix2Pix is a state-of-the-art research model for editing images from human instructions. We use the official production-ready API on Replicate[16] and follow the default hyperparameters provided in the documentation, setting inference steps, guidance scale, and image guidance scale to 100, 7.5, and 1.5, respectively.
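The InstructPix2Pix settings above can be captured in a small configuration object, sketched below. The field names `num_inference_steps`, `guidance_scale`, and `image_guidance_scale`, as well as the `image` and `prompt` input keys, are assumptions about the hosted model's input schema and should be checked against the API page in [16]. The class, the helper, the example URL, and the example instruction are hypothetical, and no request is sent.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class InstructPix2PixSettings:
    """Default hyperparameters reported in the text (field names are assumed)."""
    num_inference_steps: int = 100     # inference steps
    guidance_scale: float = 7.5        # weight given to the text instruction
    image_guidance_scale: float = 1.5  # weight given to the input image


def build_model_input(image_url: str, instruction: str,
                      settings: InstructPix2PixSettings = InstructPix2PixSettings()) -> dict:
    """Combine one (seed image, prompt) pair with the fixed settings."""
    return {"image": image_url, "prompt": instruction, **asdict(settings)}


# Hypothetical seed image location and editing instruction.
model_input = build_model_input("https://example.com/seed.png", "a person who is kind")
```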

Test Case Generation. To ensure a comprehensive evaluation of the bias in the image generation models under test, we adopt 54 seed images covering various combinations of ages, genders, and races. For each image, we adopt 62 prompts for professions, 57 prompts for objects, 70 prompts for personality, and 39 prompts for activities, i.e., 228 prompts per image. In total, we generate 54 × 228 = 12,312 (seed image, prompt) pairs as test cases for each software product and model.
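The test-case count follows from pairing every seed image with every prompt. The sketch below reproduces that arithmetic using placeholder identifiers in place of the actual images and prompt texts, which are not listed in this section. The function name and the category keys are hypothetical.

```python
from itertools import product

NUM_SEED_IMAGES = 54
PROMPTS_PER_CATEGORY = {
    "profession": 62,
    "object": 57,
    "personality": 70,
    "activity": 39,
}


def enumerate_test_cases(num_images: int, prompts_per_category: dict) -> list:
    """Pair every seed image id with every (category, prompt index) id."""
    seed_ids = range(num_images)
    prompt_ids = [
        (category, index)
        for category, count in prompts_per_category.items()
        for index in range(count)
    ]
    return list(product(seed_ids, prompt_ids))


test_cases = enumerate_test_cases(NUM_SEED_IMAGES, PROMPTS_PER_CATEGORY)
print(len(test_cases))  # 54 * (62 + 57 + 70 + 39) = 54 * 228 = 12312
```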

[10] We do not test the models in the DALL-E family since they do not provide image editing functionality.

[11] https://huggingface.co/runwayml/stable-diffusion-v1-5, https://huggingface.co/stabilityai/stable-diffusion-2-1

[12] https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb

[13] https://github.com/AUTOMATIC1111/stable-diffusion-webui

[14] https://platform.stability.ai/docs/api-reference#tag/v1generation/operation/imageToImage

[15] https://github.com/yokonsan/midjourney-api

[16] https://replicate.com/timothybrooks/instruct-pix2pix/api
