2024-8-8 08:9:39 Author: hackernoon.com(查看原文) 阅读量:1 收藏

I recently migrated a full-fledged SQL Server from a virtual machine to Azure SQL. The journey was full of discoveries, which inspired me to write this article.

For the sake of simplicity, let’s assume that the decision to go with Azure SQL is already made. I will primarily focus on how I chose between DTU and vCore Azure SQL offerings regarding performance. Delving into the details is required, so be ready to meet with performance tests, hardware, tempdb, execution plans, and more.

The official documentation doesn’t definitively answer how DTUs relate to vCores and full-fledged virtual machine cores. All the answers come down to “it depends,” which makes sense because the workload in each system is unique. Nevertheless, you can find the following formula for standard plans:

100 DTU ~ 1 vCore.

The original server had four cores, so our candidates are:

- Azure SQL Standard 400 DTU.

- Azure SQL General Purpose 4 vCore.

I immediately abandoned the idea of writing synthetic tests because the system's performance with actual data and queries is the most accurate metric. Therefore, the choice shifted towards leveraging existing APIs (HTTP) with time measurements. One scenario involved sending a series of requests, each creating different load and stress levels on the database.

Within the article, I assigned names to each scenario that reflected what would happen from a database perspective. You can see the complete list below.

- Scenario 1: Medium SELECTs.

- Scenario 2: Large SELECTs.

- Scenario 3: Medium UPDATEs.

- Scenario 4: Medium INSERTs.

- Scenario 5: Large INSERTs.

- Scenario 6: Extremely Large INSERTs.

- Scenario 7: All-in.

For instance, the Medium SELECTs scenario involves queries with multiple JOINs, ORDER BYs, etc. The Large SELECTs script represents more complex queries with multi-stage JOINs and subqueries. On the other hand, All-in is the most complicated scenario, encompassing the full range of read/write operations. Everything else falls somewhere in between.

A single run included the execution of the scenarios above one by one. Afterward, the script would delete and restore the original state from a backup. As soon as a run was completed on one type of database, its tier changed (for example, from 400 DTU to 4 vCores and vice versa), and the entire process repeated.

I expected vCore to outperform DTUs. After all, aren’t real cores better than the abstract ones? Moreover, Microsoft positions DTUs as the first-choice solution for smaller systems. But the results turned out to be unexpected.

To be honest, achieving stable results wasn’t that easy; the numbers varied by 20–30% depending on the time of the test (or maybe even the phase of the Moon). Nevertheless, the collected data was good enough to show an overall picture.

The underdog DTU consistently performed in more straightforward scenarios and was generally quicker than its counterpart. However, vCores stayed ahead in more extensive tests (5, 6). But the heaviest scenario (7) knocked the reserved cores out: 19.3 minutes versus 1.5! The degradation is more than 12 times! That was indeed a cold shower. This performance drop repeated in 100% of the tests.

Using the Azure Data Studio profiler, I identified one of the problematic queries. Find the piece of its execution plan below.

This operation ran over 1 minute, and the optimizer warned with the following text:

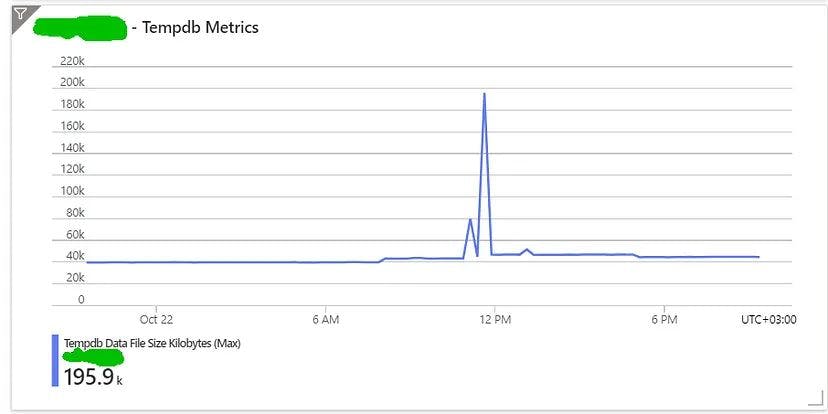

This indicates that the SQL engine unloaded a relatively large dataset (880Mb) into the tempdb, in other words, onto the hard disk (SSD). Despite modern SSDs being significantly faster than traditional HDDs, they are still slower than RAM. That’s why performance dropped greatly.

The next step was to see how 400 DTUs handled the same query. I expected to see a different execution plan, but I was wrong again. The plan was identical to what I saw with 4 vCores: structure, costs, characteristics, etc., but there was one slight difference.

There's no warning on the same sorting operation, which means the process didn’t run out of memory. As a result, it took only 5 seconds instead of 74!

I concluded that DTUs have a built-in mechanism for automatic memory scaling, which provides slightly more resource allocation at peak moments than fixed vCores. This keeps the operation running while vCores would otherwise struggle with tempdb.

In this benchmark, I declare Azure SQL 400 DTU as the winner. Despite the challenges in some tests, it provides consistent system performance. I found it fascinating to reach the real crashing point within an experiment where vCores struggle and DTUs don’t. This reminded me of the famous TV show MythBusters.

Another valuable result is that after these tests, it became clear which tables/queries require immediate attention. Additionally, I added monitoring for the tempdb size to track such issues in the production environment.

For the sake of science, I also tested six vCores and 800 DTUs, respectively. Both competitors showed identical results, about 10–15% better than 400 DTUs. There was no degradation in complex scenarios, presumably due to the larger memory capacity.

Having all the test data, I confirm that 100 DTU equals more than one core. The target system works fine on DTU 400 and requires at least six vCores to perform comparably.

如有侵权请联系:admin#unsafe.sh