2024-8-9 21:10:29 Author: securityboulevard.com

With DARPA’s AI Cyber Challenge (AIxCC) semifinal starting today at DEF CON 2024, we want to introduce Buttercup, our AIxCC submission. Buttercup is a Cyber Reasoning System (CRS) that combines conventional cybersecurity techniques like fuzzing and static analysis with AI and machine learning to find and fix software vulnerabilities. The system is designed to operate within the competition’s strict time and budget constraints.

Since DARPA awarded us and six other small businesses $1 million each in March to develop a CRS for AIxCC, we’ve been working nonstop on Buttercup, and we finally submitted it in mid-July. We’re excited to participate in the semifinals, where DARPA will test our CRS for its ability to find and fix vulnerabilities more efficiently than humans. Many Trail of Bits engineers who developed Buttercup will be at DEF CON. Please come say hi!

The logo of our CRS, Buttercup

This post will introduce the team behind Buttercup and explain why we’re competing, the challenges we’ve faced, and what comes next.

Why we’re competing

At Trail of Bits, one of our core pillars is strengthening the security community by contributing to open-source software, developing tools, and sharing our knowledge. Open-source software is vital, powering much of today’s technology, from the Linux operating system, which runs millions of servers worldwide, to the Apache HTTP Server, which serves a significant portion of the internet. The problem is that the sheer volume and complexity of open-source code make it difficult to keep secure.

Dan Guido explained, “There’s just too much code to look through, and it’s too complex to find all the vulnerabilities all over the globe. We’re writing more software every day and we’re becoming more dependent on software, but the number of security engineers has not scaled with the need to perform that work. AI is an opportunity that might help us find and fix security issues that are now pervasive and increasing in number.”

Our work on Buttercup aims to address these challenges and reflects our belief that securing open-source software is essential for a safer world. By developing AI-driven tools for finding and fixing vulnerabilities, Trail of Bits is not only competing to advance the state of the art but also contributing to a broader mission: securing the systems we all depend on.

The team behind Buttercup

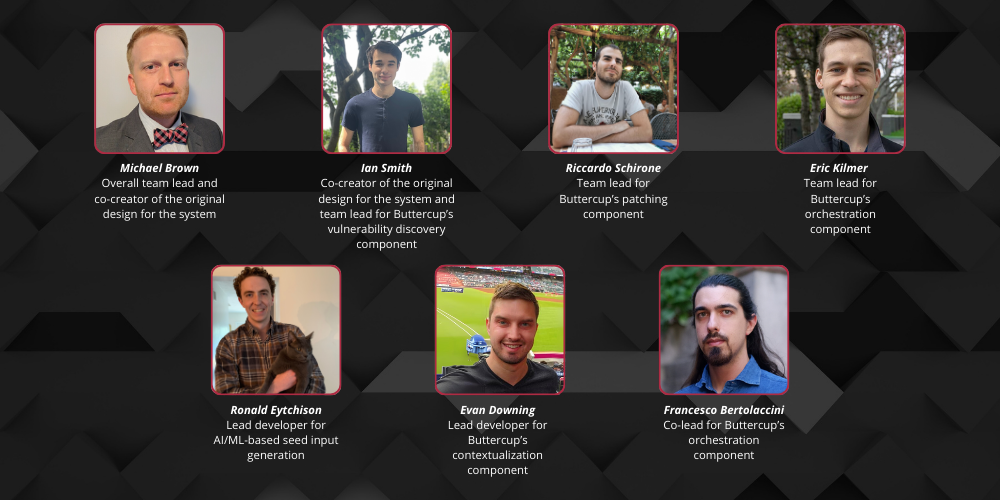

Our AIxCC team consisted of 19 engineers, each working on a sub-team with a specific goal and task. We were a fully remote team spread across time zones, so work continued almost around the clock, which presented both challenges and opportunities. First, let’s introduce our team leads:

The core team that developed Buttercup

The other team members who worked on Buttercup are Alan Cao, Alessandro Gario, Akshay Kumar, Boyan Milanov, Marek Surovic, Brad Swain, William Tan, and Amanda Stickler.

Artem Dinaburg, Andrew Pan, Henrik Brodin, and Evan Sultanik made valuable contributions in the initial phases of Buttercup’s development.

Introducing Buttercup: Our AIxCC submission

Buttercup, our CRS for AIxCC, represents a significant leap forward in automated vulnerability detection and remediation. Here’s what makes Buttercup unique:

- Hybrid approach: Buttercup combines conventional cybersecurity techniques like fuzzing and static analysis with cutting-edge AI and machine learning. This fusion allows us to leverage the strengths of both approaches, overcoming limitations inherent in each.

- Adaptive vulnerability discovery: Our system uses large language models (LLMs) to generate seed inputs for fuzzing, significantly reducing the time needed to discover vulnerabilities. This innovative approach helps us work within the competition’s strict time constraints.

- Intelligent contextualization: Buttercup doesn’t just find vulnerabilities; it understands them. Our system can identify bug-inducing commits and provide crucial context for effective patching.

- AI-driven patching: We generate patches with multiple interacting LLM agents. These agents collaborate to analyze, debug, and iteratively improve patches based on validation feedback.

- Scalability and resilience: Drawing from our experience with Cyberdyne in the Cyber Grand Challenge, we’ve designed Buttercup with a distributed architecture that ensures both scalability and resilience to failures.

- Language versatility: While initially focused on C and Java for the competition, Buttercup’s architecture is designed to be extensible to other programming languages in future iterations.
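To illustrate why LLM-generated seeds can shorten the time to discovery, here is a minimal sketch under stated assumptions. The parser `toy_target`, the seeds, and the exhaustive single-byte mutator are hypothetical stand-ins, not Buttercup’s code or fuzzer. The point is only that a seed which already satisfies a format’s structural checks lets even a shallow mutator reach deeper code, while a generic seed does not.

```python
# Minimal sketch (hypothetical): why structure-aware seeds help a mutation fuzzer.
# toy_target, the seeds, and the single-byte mutator are illustrative stand-ins,
# not Buttercup's fuzzer. In Buttercup, seeds would come from an LLM.
from itertools import chain
from typing import Iterator, List, Optional


def toy_target(data: bytes) -> None:
    """Hypothetical parser that 'crashes' only on a specific header and flag byte."""
    if len(data) >= 4 and data[:3] == b"HDR" and data[3] == 0xFF:
        raise RuntimeError("simulated memory-safety bug")


def single_byte_mutations(seed: bytes) -> Iterator[bytes]:
    """Yield every input that differs from `seed` in exactly one byte."""
    for pos in range(len(seed)):
        for value in range(256):
            if value != seed[pos]:
                yield seed[:pos] + bytes([value]) + seed[pos + 1:]


def fuzz(seeds: List[bytes]) -> Optional[bytes]:
    """Return the first crashing input found by trying each seed and its mutations."""
    for seed in seeds:
        for candidate in chain([seed], single_byte_mutations(seed)):
            try:
                toy_target(candidate)
            except RuntimeError:
                return candidate
    return None


# A generic seed: changing one byte cannot also satisfy the 3-byte header.
print(fuzz([b"\x00\x00\x00\x00"]))  # None
# A structure-aware seed, the kind an LLM might propose after reading the parser.
print(fuzz([b"HDR\x00"]))  # b'HDR\xff'
```

Production fuzzers such as libFuzzer or Jazzer mutate far more aggressively, but the intuition carries over: every structural constraint a seed already satisfies is one the fuzzer no longer has to stumble upon by chance.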

By combining these capabilities, Buttercup aims to automate the entire vulnerability lifecycle—from discovery to patching—without human intervention. This approach not only meets the competition’s requirements but also pushes the boundaries of what’s possible in automated cybersecurity.
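Identifying a bug-inducing commit, as mentioned in the contextualization bullet above, can be framed as a search over commit history. The sketch below assumes a binary search in the style of `git bisect`; the post does not say this is Buttercup’s technique. `reproduces` is a hypothetical callback that would rebuild the project at a given commit and replay the crashing input, and the sketch assumes the bug persists once introduced.

```python
# Minimal sketch (hypothetical): find the first commit where a crash reproduces,
# using binary search like `git bisect`. Not confirmed as Buttercup's method.
from typing import Callable, Sequence, TypeVar

C = TypeVar("C")


def first_bad_commit(commits: Sequence[C], reproduces: Callable[[C], bool]) -> C:
    """Return the earliest commit at which the crash reproduces.

    Assumes commits are ordered oldest to newest, the oldest is good, the newest
    is bad, and the bug stays present once introduced (a monotone history).
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] is good, commits[hi] is bad
    if reproduces(commits[lo]) or not reproduces(commits[hi]):
        raise ValueError("need a good oldest commit and a bad newest commit")
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if reproduces(commits[mid]):
            hi = mid
        else:
            lo = mid
    return commits[hi]


# Toy history: the bug lands in commit "c5" and persists afterward.
history = [f"c{i}" for i in range(10)]
buggy = set(history[5:])
print(first_bad_commit(history, lambda c: c in buggy))  # c5
```

Binary search needs only about log2(n) rebuilds for n commits, which matters when every rebuild consumes part of a fixed time budget.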

Adapting to competition constraints

The competition hasn’t been without its challenges. Buttercup’s development took three months of building and integrating components, with frequent progress check-ins. Along the way, the team continually adapted to evolving requirements and new competition rules from DARPA, which often forced us to redo parts of Buttercup.

The AIxCC posed unique challenges, including a strict four-hour time limit and a $100 limit on LLM queries for each challenge, pushing us to innovate and adapt in ways we hadn’t initially anticipated:

- Optimized seed generation: We’ve refined our use of LLMs to generate high-quality seed inputs for fuzzing, aiming to discover vulnerabilities more quickly.

- Streamlined workflow: Our entire pipeline, from vulnerability discovery to patch generation, has been optimized to work within tight time constraints.

- Prioritization strategies: We’ve implemented intelligent prioritization mechanisms to focus on the most promising leads within the limited timeframe.

- Efficient resource allocation: Buttercup dynamically allocates computational resources to maximize productivity within the four-hour window.

- Strategic use of LLMs: The $100 limit on LLM queries per challenge required careful budgeting of our AI resources and emphasized the need for efficient, targeted use of LLMs throughout the process.
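A minimal sketch of budget-aware prioritization, assuming each lead comes with an estimated likelihood of yielding a bug and an estimated LLM cost in dollars. The `Lead`, `LLMBudget`, and `plan` names, the scores, and the greedy value-per-dollar rule are hypothetical illustrations; the post does not describe Buttercup’s actual heuristic. Only the $100 per-challenge cap comes from the competition rules, and the sketch does not model the four-hour limit.

```python
# Minimal sketch (hypothetical): spend a capped LLM budget on the best leads first.
# Lead, LLMBudget, plan, and the scores are illustrative, not Buttercup's heuristic.
import heapq
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class Lead:
    sort_key: float = field(init=False, repr=False)
    name: str = field(compare=False)
    score: float = field(compare=False)     # estimated chance of a real bug, 0..1
    est_cost: float = field(compare=False)  # estimated LLM spend in dollars

    def __post_init__(self) -> None:
        # heapq is a min-heap, so negate value per dollar to pop the best lead first.
        self.sort_key = -self.score / self.est_cost


class LLMBudget:
    """Track dollars spent against a hard per-challenge cap ($100 in AIxCC)."""

    def __init__(self, limit_usd: float = 100.0) -> None:
        self.limit_usd = limit_usd
        self.spent_usd = 0.0

    def can_afford(self, cost_usd: float) -> bool:
        return self.spent_usd + cost_usd <= self.limit_usd

    def charge(self, cost_usd: float) -> None:
        if not self.can_afford(cost_usd):
            raise RuntimeError("LLM budget exceeded")
        self.spent_usd += cost_usd


def plan(leads: List[Lead], budget: LLMBudget) -> List[str]:
    """Greedily pick leads by value per dollar, skipping any the budget can't cover."""
    heap = list(leads)
    heapq.heapify(heap)
    chosen: List[str] = []
    while heap:
        lead = heapq.heappop(heap)
        if budget.can_afford(lead.est_cost):
            budget.charge(lead.est_cost)
            chosen.append(lead.name)
    return chosen


budget = LLMBudget(100.0)
leads = [
    Lead("parser_entry", score=0.6, est_cost=30.0),
    Lead("rare_branch", score=0.1, est_cost=50.0),
    Lead("recent_memcpy_diff", score=0.5, est_cost=10.0),
    Lead("config_loader", score=0.3, est_cost=60.0),
]
print(plan(leads, budget), budget.spent_usd)
# ['recent_memcpy_diff', 'parser_entry', 'config_loader'] 100.0
```

A greedy value-per-dollar ordering is simple and predictable, though not guaranteed optimal (selecting under a budget is a knapsack problem); that trade-off is often acceptable when the estimates are rough anyway.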

Beyond the time limit and resource constraints, we faced several other challenges:

- AI unpredictability: LLMs produce probabilistic rather than definitive results, and getting useful output demands precise prompts. Buttercup therefore checks ambiguous or probabilistic outputs against feedback from conventional testing tools and methods such as fuzzing, which lets the system distinguish true positives from false positives.

- Parallel development: Building and integrating components simultaneously required exceptional teamwork and adaptability. Our global team worked almost around the clock, leveraging different time zones to make continuous progress.

- Evolving requirements: We continually adapted to new information and rule clarifications from DARPA, sometimes having to reevaluate and adjust our approach.

While we believe looser constraints would allow for discovering deeper, more complex vulnerabilities, we’ve embraced this challenge as an opportunity to push the boundaries of what’s possible in rapid, automated vulnerability discovery and remediation.

What comes next

On July 15, we finalized and submitted Buttercup for the AIxCC semifinal competition. This submission showcases our work on vulnerability discovery, patching, and orchestration. Our short-term goal is to place in the top seven out of forty teams in the semifinals at DEF CON and continue developing Buttercup for the final competition in 2025.

Looking ahead, our long-term goals are to advance the use of AI and ML algorithms in detecting and patching vulnerabilities and transition this technology to government and industry partners. We are committed to releasing Buttercup in line with the competition requirements, continuing our philosophy of contributing to the broader cybersecurity community.

As we embark on this exciting phase of the AIxCC, we invite you to be part of our journey:

- Stay informed: Sign up for our newsletter and follow our accounts on X, LinkedIn, and Mastodon for updates on our progress in the competition and insights into our work with AI. Our AI/ML team recently shared work on securing ML systems, including vulnerabilities we reported in Ask Astro and our research on Sleepy Pickle, a technique for exploiting ML models through malicious pickle files.

- Explore our open-source work: While Buttercup won’t be open-sourced until next year, you can check out our other projects on GitHub. Our commitment to open source continues to drive innovation in the cybersecurity community.

- Connect with us at DEF CON: If you’re attending DEF CON, come say hello to our team at the AIxCC village! We’d love to discuss our approach and explore potential collaborations.

- Partner with us: We’re here to help companies apply LLMs to cybersecurity challenges. Our experience with Buttercup has given us unique insights into leveraging AI for security—let’s discuss how we can enhance your team.

The AIxCC semifinals mark just the beginning of this journey. By participating in this groundbreaking competition, we’re not just building a tool—we’re shaping the future of cybersecurity. Join us in pushing the boundaries of what’s possible in automated vulnerability discovery and remediation.

As the semifinals are ongoing, follow us on social media to stay up-to-date on our overall progress and team achievements.

*** This is a Security Bloggers Network syndicated blog from Trail of Bits Blog authored by Trail of Bits. Read the original post at: https://blog.trailofbits.com/2024/08/09/trail-of-bits-buttercup-heads-to-darpas-aixcc/
