2024-8-14 20:56:15 Author: www.malwarebytes.com

In what may come as a surprise to nobody at all, there’s been yet another complaint about using social media data to train Artificial Intelligence (AI).

This time the complaint is against X (formerly Twitter) and Grok, the conversational AI chatbot developed by Elon Musk’s company xAI. Grok is a large language model (LLM) chatbot able to generate text and engage in conversations with users.

Unlike other chatbots, Grok can access information in real time through X and respond to some types of questions that other AI systems would typically reject. Grok is available to X users who have a Premium or Premium+ subscription.

According to European privacy group NOYB (None Of Your Business):

“X began unlawfully using the personal data of more than 60 million users in the EU/EEA to train its AI technologies (like “Grok”) without their consent.”

NOYB decided to follow up on High Court proceedings launched by the Irish Data Protection Commission (DPC) against Twitter International Unlimited Company over concerns about the processing of the personal data of European users of the X platform, saying it was unsatisfied with the outcome of those proceedings.

Dublin-based Twitter International Unlimited Company is the data controller in the EU with respect to all personal data on X.

The DPC claimed that by its use of Grok, Twitter International is not complying with its obligations under the GDPR, the EU regulation that sets guidelines for information privacy and data protection.

Despite mitigation measures implemented after the fact, the DPC says that the data of a very significant number of X’s millions of European-based users have been, and continue to be, processed without the protection of these measures, which isn’t consistent with their rights under GDPR.

But NOYB says the DPC is missing the mark:

“The court documents are not public, but from the oral hearing we understand that the DPC was not questioning the legality of this processing itself. It seems the DPC was concerned with so-called ‘mitigation measures’ and a lack of cooperation by Twitter. The DPC seems to take action around the edges, but shies away from the core problem.”

For this reason, NOYB has now filed GDPR complaints with data protection authorities in nine countries (Austria, Belgium, France, Greece, Ireland, Italy, Netherlands, Poland, and Spain).

All they had to do was ask

The EU’s GDPR provides an easy solution for companies that wish to use personal data for AI development and training: Just ask users for their consent in a clear way. But X just took the data without asking for permission and later created an opt-out option referred to as the mitigation measures.

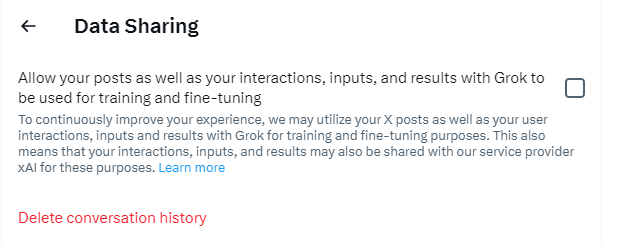

It wasn’t until two months after Grok training started that users noticed X had activated a default setting for everyone that gives the company the right to use their data to train Grok. The easiest way to check if you are sharing your data is to visit https://x.com/settings/grok_settings while you are logged in to X. If there is a checkmark, you are sharing your data for the training of Grok. Remove that checkmark and the sharing stops.

In a similar case about the use of personal data for targeted advertising, Meta argued that it has a legitimate interest that overrides users’ fundamental rights. Legitimate interest is one of the six possible legal bases for processing personal data under GDPR, but the Court of Justice rejected this reasoning.

Many AI system providers have run into problems with GDPR, specifically the regulation that stipulates the “right to be forgotten,” which is something most AI systems are unable to comply with. A good reason not to ingest these data into their AI systems in the first place, I would say.

Likewise, these companies always claim that it’s impossible to answer requests to get a copy of the personal data contained in training data or the sources of such data. They also claim they have an inability to correct inaccurate personal data. All these concerns raise a lot of questions when it comes to the unlimited ingestion of personal data into AI systems.

When the EU adopted the EU Artificial Intelligence Act (“AI Act”), which aims to regulate AI to ensure better conditions for the development and use of this innovative technology, some of these considerations played a role. Article 2(7), for example, calls for the right to privacy and protection of personal data to be guaranteed throughout the entire lifecycle of the AI system.

