2024-8-15 23:55:23 Author: hackernoon.com

Authors:

(1) Amin Mekacher, City University of London, Department of Mathematics, London EC1V 0HB, UK (this author contributed equally);

(2) Max Falkenberg, City University of London, Department of Mathematics, London EC1V 0HB, UK (this author contributed equally; corresponding author: [email protected]);

(3) Andrea Baronchelli, City University of London, Department of Mathematics, London EC1V 0HB, UK, and The Alan Turing Institute, British Library, London NW1 2DB, UK (corresponding author: [email protected]).

METHODS

Gettr data

The data used for this study were collected using GoGettr [76], a public client developed by the Stanford Internet Observatory to give access to the Gettr API. This API allows querying user interactions, including the posts they like or share. User profiles were initially collected through snowball sampling: we used highly popular accounts on the platform as seed users, queried their follower and following lists through the API, and then repeated the same process for a random sample of the newly retrieved users. Repeating this process many times ensures that our dataset is near-complete for the studied time period.
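The sampling loop described above can be sketched as follows. This is a minimal illustration, not the authors' collection code: `get_neighbours` is a hypothetical callable standing in for the API queries that return a user's follower and following lists, and `rounds` and `sample_size` are assumed parameters that the text does not specify.

```python
import random


def snowball_sample(seeds, get_neighbours, rounds, sample_size, rng=None):
    """Collect user IDs by repeatedly expanding a random sample of new users.

    get_neighbours is a hypothetical stand-in for the follower/following
    queries; it takes a user ID and returns an iterable of user IDs.
    """
    rng = rng or random.Random(0)
    seen = set(seeds)
    frontier = list(seeds)
    for _ in range(rounds):
        discovered = set()
        for user in frontier:
            # Keep only followers/followings we have not retrieved before.
            discovered.update(n for n in get_neighbours(user) if n not in seen)
        if not discovered:
            break  # nothing new was found, so further rounds add nothing
        seen.update(discovered)
        # Only a random sample of the newly retrieved users is expanded next.
        pool = sorted(discovered)
        frontier = rng.sample(pool, min(sample_size, len(pool)))
    return seen


# Toy follower/following graph used purely for illustration.
toy_graph = {"a": ["b", "c"], "b": ["d"], "c": ["d", "e"], "d": [], "e": ["a"]}
users = snowball_sample(["a"], lambda u: toy_graph.get(u, []), rounds=3, sample_size=10)
```

With a `sample_size` smaller than the number of newly found users, coverage depends on how many rounds are run, which is why the process is repeated many times.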

To attract more users from Twitter, Gettr previously offered a feature that would automatically import a user’s tweets upon creating an account. However, due to Twitter blocking this capability on July 10, 2021 [77], Gettr had to discontinue this feature. To ensure the accuracy of our results, any posts imported before July 10, 2021, and any Gettr post whose timestamp precedes the account’s creation date were removed from our dataset.
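A minimal sketch of this filtering rule is shown below. The field names `imported` and `created_at` are hypothetical (the text does not describe how imported posts are identified in the data), and treating timestamps as UTC is an assumption.

```python
from datetime import datetime, timezone

# Date on which Twitter blocked the import feature, per the text (UTC assumed).
IMPORT_CUTOFF = datetime(2021, 7, 10, tzinfo=timezone.utc)


def keep_post(post, account_created_at):
    """Return True if a post should stay in the dataset.

    post is a dict with hypothetical keys "imported" (bool) and
    "created_at" (timezone-aware datetime).
    """
    # Drop posts imported from Twitter before the cutoff date.
    if post["imported"] and post["created_at"] < IMPORT_CUTOFF:
        return False
    # Drop posts whose timestamp precedes the creation of the account.
    if post["created_at"] < account_created_at:
        return False
    return True
```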

To ensure our case study on the Brazilian right-wing group encompasses the Brasília insurrection, we expanded the data collection time frame for any user associated with the Brazilian community. The data collection was run in July 2022 for every user whose profile we have retrieved, and in January 2023 for the users in the Brazilian cohort.

Twitter data

For each verified Gettr account where the Gettr API references their Twitter followers in the account metadata, we check that the Twitter account with the same username is active using the Twitter API (see https://developer.twitter.com/en/products/twitter-api/academic-research). Then, for each active account we download their Twitter timeline including all tweets and retweets in the period July 2021 to May 2022. This totals approximately 12 million tweets. Data was collected between September and October 2022, preceding Elon Musk’s amnesty of suspended Twitter accounts.

User labelling

Throughout our analysis, we label verified Gettr users as being either “matched” or “banned”, depending on whether their corresponding Twitter account is active or suspended. Any verified user who decided to link their Twitter account on their Gettr page has their Twitter follower count displayed on their profile, which can also be retrieved from the Gettr API. We stress that this self-declaration permits cross-platform matching since users can “reasonably expect” that their Gettr accounts will be associated with their Twitter accounts.

To match accounts across platforms, we assumed that users picked the same username on both Gettr and Twitter, and we used the Twitter API to retrieve their Twitter activity. A user is classified as “banned” if the Twitter user endpoint returned an error indicating that the account has been suspended.
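The labelling rule can be sketched as below. `lookup_status` is a hypothetical callable that wraps the Twitter user lookup and returns `"active"`, `"suspended"`, or something else (for example when no account with that username exists); the actual response format of the API is not reproduced here.

```python
def label_user(username, lookup_status):
    """Label a verified Gettr user by the state of the same-named Twitter account.

    lookup_status is a hypothetical wrapper around the Twitter user lookup.
    """
    status = lookup_status(username)
    if status == "active":
        return "matched"
    if status == "suspended":
        return "banned"
    # Any other outcome (e.g. no such account) leaves the user unlabelled.
    return None


# Toy lookup table standing in for API responses.
toy_statuses = {"alice": "active", "bob": "suspended"}
labels = {name: label_user(name, toy_statuses.get) for name in ["alice", "bob", "carol"]}
```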

Note that for data privacy reasons, all analysis of users across platforms is aggregated at the cohort level; we do not present results for individual users.

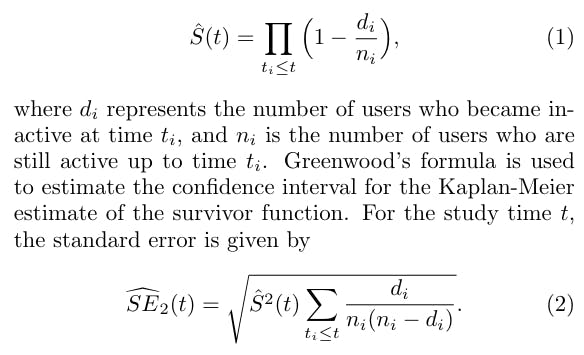

Calculating account survival

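The Kaplan-Meier estimate is a tool used to quantify the survival rate of a population (in our case, users active on a social platform) over time. For each time step t, we measure how many users become indefinitely inactive, and we quantify the survival rate with the standard Kaplan-Meier product-limit estimator,

$$S(t) = \prod_{i:\, t_i \le t} \left(1 - \frac{d_i}{n_i}\right),$$

where t_i is a time step at which at least one user became inactive, d_i is the number of users who became inactive at t_i, and n_i is the number of users still active just before t_i.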
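For reference, the standard Kaplan-Meier product-limit estimate can be computed with a few lines of Python. This is a sketch under stated assumptions: each user has a last observed active time step, and a flag says whether the user was seen to become inactive (an event) or was still active when observation ended (censored). The input names are illustrative and do not come from the paper.

```python
from collections import Counter


def kaplan_meier(last_active, became_inactive):
    """Return [(t, S(t))] for each time step with at least one inactivity event.

    last_active[i]     -- last time step at which user i was observed active
    became_inactive[i] -- True if user i became inactive after that step,
                          False if the user was still active at the end (censored)
    """
    events = Counter(t for t, gone in zip(last_active, became_inactive) if gone)
    survival = 1.0
    curve = []
    for t in sorted(events):
        # Users still under observation at time t (events and censored alike).
        at_risk = sum(1 for u in last_active if u >= t)
        survival *= 1.0 - events[t] / at_risk
        curve.append((t, survival))
    return curve


# Five toy users; the third and fifth are censored.
curve = kaplan_meier([1, 2, 2, 3, 4], [True, True, False, True, False])
```

In this toy example the survival estimate drops at time steps 1, 2 and 3, while the censored users only reduce the number at risk in later steps.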

Topic Modelling

Gettr and Twitter content is analysed using the BERTopic topic modelling library [32]. This method extracts latent topics from an ensemble of documents (in our case Gettr posts and Twitter tweets). The base model uses pre-trained transformer-based language models to construct document embeddings which are then clustered.

These methods are known to struggle with very short documents, which are common on micro-blogging sites. Hence, we train our topic model exclusively on Gettr posts that are longer than 100 characters. To avoid any single user dominating a specific topic, we limit the training set to no more than 50 posts from any given user.
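The two training-set constraints can be sketched as a single pass over the posts. The text does not say which 50 posts are kept for a prolific user, so keeping the first 50 in input order is an assumption made here for simplicity; the `(user_id, text)` input format is also illustrative.

```python
from collections import defaultdict


def build_training_set(posts, min_chars=100, max_per_user=50):
    """Select training documents from an iterable of (user_id, text) pairs."""
    kept_per_user = defaultdict(int)
    training = []
    for user_id, text in posts:
        # Keep only posts strictly longer than min_chars characters.
        if len(text) <= min_chars:
            continue
        # Cap each user's contribution (first-come order is an assumption).
        if kept_per_user[user_id] >= max_per_user:
            continue
        kept_per_user[user_id] += 1
        training.append(text)
    return training


# Toy input: one prolific user, one short post, one post just over the limit.
toy_posts = [("u1", "x" * 150)] * 60 + [("u2", "short")] + [("u2", "y" * 101)]
training_docs = build_training_set(toy_posts)
```

The resulting list of strings is the kind of input a topic model would then be fitted on.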

Latent ideology

To calculate the latent ideology on Gettr, we use the method developed in [36, 37] and filtering procedures from [38]. The method uses a bipartite approach where it classifies Gettr accounts as influencers or regular users. It then generates an ordering of users based on the interaction patterns of regular users with the influencer set. In the current paper, we select the matched and banned user sets as our influencers, and the remaining set of Gettr users as our cohort of regular users.

Two factors can confound the latent ideology: (1) account geography, and (2) a lack of user-influencer interactions. Since we are interested in the segregation of the banned and matched cohorts based on political ideology, the former is problematic because country-specific communities on social media can appear structurally segregated from a related community in other countries, even if they are politically aligned. For this reason, we remove a small number of accounts associated with the UK and China from our set of Gettr influencers. In the latter case, a lack of user-influencer interactions can be problematic since influencers with few user interactions appear as erroneous outliers when computing the latent ideology, often because they do not take an active part in the conversation. Hence, we restrict our influencer set to the 500 banned and matched accounts that receive the largest number of interactions from other users on Gettr. In the current study, we consider any interaction type including comments, shares and likes. The latent ideology is robust as long as the number of influencers used is larger than 200 accounts [38].
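The influencer restriction amounts to a top-N selection by received interactions. The sketch below assumes interactions are available as `(source_user, target_user)` pairs covering comments, shares and likes alike, and that `candidates` is the set of banned and matched accounts left after the geographic filtering; both representations are illustrative.

```python
from collections import Counter


def top_influencers(interactions, candidates, n=500):
    """Return up to n candidate accounts ranked by interactions received.

    interactions -- iterable of (source_user, target_user) pairs
    candidates   -- set of banned and matched accounts eligible as influencers
    """
    counts = Counter(
        target
        for source, target in interactions
        # Count only interactions from *other* users towards a candidate.
        if target in candidates and source != target
    )
    return [user for user, _count in counts.most_common(n)]


# Toy interaction log for illustration.
toy_interactions = [("r1", "a"), ("r2", "a"), ("r1", "b"), ("a", "a"), ("r3", "c")]
selected = top_influencers(toy_interactions, {"a", "b"}, n=2)
```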

To assess the modality of the ideology distributions, we use Hartigan’s dip test. This approach is used to identify polarized social media conversations and echo-chambers [37, 38]. Hartigan’s dip test compares a test distribution against a matched unimodal distribution to assess distribution modality [39].

The test computes the distance, D, between the cumulative density of the test distribution and the cumulative density of the matched unimodal distribution. The D-statistic is accompanied by a p-value which quantifies whether the test distribution is significantly different from a matched unimodal distribution. A p-value of less than 0.01 indicates a multimodal distribution.

Toxicity analysis

The toxicity of Gettr and Twitter content is computed using the Google Perspective API [42], which has been used in several social media studies to assess platform toxicity [78–80].

Given a text input, the API returns a score between 0 and 1, indicating how likely a human moderator is to flag the text as being toxic. For our analysis, we used the flagship attribute “toxicity”, which is defined as “[a] rude, disrespectful, or unreasonable comment that is likely to make people leave a discussion” [81].

When computing statistics for the toxicity of each user cohort, we apply a bootstrapping procedure to avoid erroneous results from variable cohort sizes. This is important since the distribution of post toxicity is fat-tailed; there are far more posts with low toxicity than high toxicity. Therefore, a smaller set of posts from a user cohort may have a lower median toxicity, purely due to sampling effects. To avoid this confounding effect, bootstrapping is employed where equally sized samples are drawn from each cohort (usually 100 posts), and the median toxicity is computed for each sample. Then, the sampling procedure is repeated 100 times to compute the median and inter-quartile range for the sampled post toxicity.
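A minimal sketch of this bootstrapping procedure, using only the Python standard library, is given below. Sampling with replacement and the fixed random seed are assumptions; the text only specifies the sample size (usually 100 posts) and the number of repetitions (100).

```python
import random
import statistics


def bootstrap_median(scores, sample_size=100, repeats=100, seed=0):
    """Return (median, (q1, q3)) of the per-sample median toxicity.

    scores is a list of toxicity scores in [0, 1] for one cohort.
    """
    rng = random.Random(seed)
    medians = [
        # Draw an equally sized sample (with replacement, by assumption).
        statistics.median(rng.choices(scores, k=sample_size))
        for _ in range(repeats)
    ]
    q1, _q2, q3 = statistics.quantiles(medians, n=4)
    return statistics.median(medians), (q1, q3)


# Toy fat-tailed cohort: mostly low-toxicity posts, a few highly toxic ones.
toy_scores = [0.05] * 900 + [0.4] * 80 + [0.95] * 20
median_toxicity, (q1, q3) = bootstrap_median(toy_scores)
```

Because every cohort is reduced to samples of the same size, the resulting medians can be compared across cohorts of very different sizes.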

News media classification using Media Bias / Fact Check

For the quote-ratio analysis in Fig. 5, we identify the Twitter handles of news media outlets and classify their political leaning using Media Bias / Fact Check (MBFC; see https://mediabiasfactcheck.com/). Ratings provided by MBFC are largely similar to other reputable media rating datasets [60].

MBFC classifies news outlets under seven leaning categories: extreme left, left, center-left, least (media considered unbiased), center-right, right, extreme right. To ensure that we have enough news media outlets to enable the quote-ratio analysis, we group these classifications into four larger groups: Left – (extreme left, left, center-left), Least – (least), Right – (center-right, right), Far-right – (extreme right). Note that we have chosen to use the terminology “far-right” instead of “extreme-right” since the former is more common in the academic literature.
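The grouping above is a fixed lookup, sketched here as a dictionary. The lowercase label strings are an assumed normalisation of the MBFC category names listed in the text, not the exact strings used by MBFC.

```python
# Mapping from the seven MBFC leaning categories to the four analysis groups.
MBFC_GROUPS = {
    "extreme left": "Left",
    "left": "Left",
    "center-left": "Left",
    "least": "Least",
    "center-right": "Right",
    "right": "Right",
    "extreme right": "Far-right",
}


def group_leaning(mbfc_label):
    """Map an MBFC leaning label to its analysis group (case-insensitive)."""
    return MBFC_GROUPS[mbfc_label.strip().lower()]
```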
