2024-8-16 17:33:46 Author: hackernoon.com

It may seem that every project must be covered with unit tests, and writing them is often viewed as an obligation rather than a beneficial practice. However, many projects avoid unit tests altogether. When you suggest adding them, sponsors often nod and promise to consider it but rarely agree to implement them. I understand their hesitation. Essentially, you are proposing something they do not fully understand and whose benefits you have not clearly explained. Why should anyone invest time and money into something that might not seem immediately useful? You might say that unit tests help programmers ensure the code works correctly and prevent bugs. However, that explanation sounds too abstract and impractical for real-world scenarios.

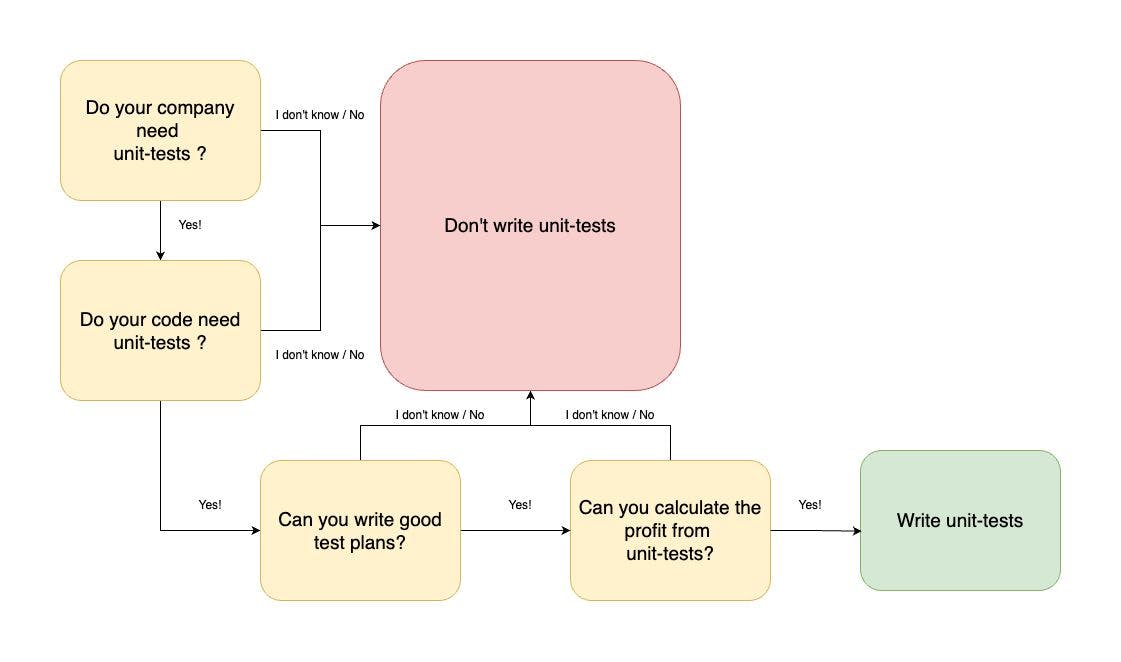

In this article, I aim to explain when unit tests are necessary, which unit tests should be written, and how to effectively communicate the value of unit tests to business stakeholders. Unfortunately, many companies that already have unit tests do not use them properly and are unaware of the benefits they could bring.

Before understanding the key points for writing unit tests, let’s highlight when they might not be necessary.

First, you need to know your company’s priorities. Writing unit tests is time-consuming: preparing test plans can take days, and implementing them can take weeks. It’s always about weighing the pros and cons. In some cases, unit tests may not be necessary.

For pre-seed startups or even growing startups, it might be more beneficial to invest in feature development and rely on manual testing due to limited resources.

Some companies have good integration test coverage and run these tests before release to ensure that changes can safely go to production. Your product may already have a comprehensive suite of integration tests. Before adding unit tests, you will need to review existing test plans, identify what has not been tested, and find gaps. This process can be time-consuming. I’m not saying you won’t need unit tests in this scenario, but it will be harder to justify their value to the business, and writing proper unit tests will also take considerable time.

Your code changes might not contain many bugs, and manual testing with structured test suites may already work very well. Such companies are usually well-established and have gained significant respect from their customers. The developers know the codebase, and the number of bugs is minimal. It would be really hard to persuade the business to adopt unit testing in this scenario, and it may not actually be worth it.

One signal that you should delay unit testing is when you know your code will soon undergo significant changes. For example, if you are planning to rewrite a complex module of a monolithic system, or if you are updating legacy code to integrate with a modern library or database, adding unit tests now would be redundant. In these scenarios, the code is highly coupled to the current implementation and will be refactored soon.

If you write unit tests now, you’ll have to align them with the new changes later. That is manageable for small updates, but in the cases mentioned above you would need to revise entire test suites and the underlying logic, which is not a quick task.

Your code might not be ready for unit tests, or it might not need them. Consider a legacy system that was written 20 years ago. The architecture of this module was designed without anticipating that future developers would want to cover the code with unit tests. Additionally, if the system has been integrated for a long time, it means the code was developed long ago, successfully tested by your company, and has survived numerous releases.

Another reason to postpone unit tests is that your code changes very seldom, say, not more than once every three months. Given the time required to develop the test suites, the benefits of test coverage might only become apparent after years. This is especially true for legacy systems that are infrequently updated. In such cases, writing unit tests should be considered a low priority. Instead, it might be more beneficial to include some use cases from the legacy system in your integration tests.

Prepare your codebase

Unit test coverage only pays off if the system is ready for it, so you need to prepare your code before covering it with unit tests. This means each unit must be well isolated and perform a clear role, so that anyone in the company can easily explain its purpose and communication protocols. Establishing strict boundaries is essential before writing unit tests.

Consider a service that only communicates with others through APIs. If your changes do not affect the API but only the internal logic, you don’t need to retest the entire codebase, just your service. This is known as separation of concerns. In such cases, manual feature testing and partial regression tests covering communication with your service might be enough.
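As a rough illustration of such a boundary, the sketch below keeps the dependency on another service behind a small interface, so the internal logic can be unit tested with a stand-in. All names here (`RateProvider`, `InvoiceService`, `FixedRates`) are hypothetical and not taken from any real system.

```python
from typing import Protocol


class RateProvider(Protocol):
    """Hypothetical boundary to another service; our code only sees this interface."""

    def rate_for(self, currency: str) -> float: ...


class InvoiceService:
    """Internal logic under test; it depends only on the boundary above."""

    def __init__(self, rates: RateProvider) -> None:
        self._rates = rates

    def total_in(self, currency: str, amount_usd: float) -> float:
        # Convert a USD amount using whatever rate the provider returns.
        return round(amount_usd * self._rates.rate_for(currency), 2)


class FixedRates:
    """Test double standing in for the remote service."""

    def __init__(self, table: dict[str, float]) -> None:
        self._table = table

    def rate_for(self, currency: str) -> float:
        return self._table[currency]


def test_total_is_converted_with_provider_rate() -> None:
    service = InvoiceService(FixedRates({"EUR": 0.5}))
    assert service.total_in("EUR", 100.0) == 50.0
```

Because the test never touches the real provider, a change to the internal conversion logic can be verified without retesting the services around it.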

From my perspective, if unit tests are written professionally, even regression testing can become redundant for minor releases. Regression testing can sometimes take hours, so by relying on well-written unit tests, you can save a lot of time and significantly increase the number of releases. The more services you have that are isolated in this way, the faster you can release.

Don’t rely only on code coverage percentage

It is necessary to write proper unit tests and not rely solely on test coverage metrics. First, you need to build a test plan outlining what you are going to test and how. You don’t have to test every line of code; instead, try to divide your code according to functional requirements. Each function was written with a specific purpose and use case, so focus on testing those.

Even if you achieve 100% test coverage, it doesn’t mean you have covered everything. Test coverage metrics only show how many lines of code were executed by tests, not how well the code has been tested. For example, if you have a function with 100 lines of code and you write 100 separate tests, each checking a single line, you may achieve 100% coverage. However, these tests will be almost useless because they don’t test the actual use cases for which the code was written.
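A small sketch can make the difference concrete. The `shipping_cost` function and its pricing rule are invented for illustration. The first test executes every line, so a line-coverage tool would report 100%, yet it verifies nothing. The remaining tests check actual use cases.

```python
def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical rule: 5.0 flat up to 1 kg, 2.0 per extra kg, express doubles the price."""
    cost = 5.0
    if weight_kg > 1:
        cost += (weight_kg - 1) * 2.0
    if express:
        cost *= 2
    return cost


def test_coverage_only() -> None:
    # Runs every line of shipping_cost, but asserts nothing:
    # a wrong formula would still pass this test.
    shipping_cost(3, True)


def test_light_parcel_pays_flat_fee() -> None:
    assert shipping_cost(0.5, False) == 5.0


def test_heavy_parcel_pays_per_extra_kg() -> None:
    # 5.0 base + 2 extra kg * 2.0
    assert shipping_cost(3, False) == 9.0


def test_express_doubles_the_price() -> None:
    assert shipping_cost(3, True) == 18.0
```

If someone changed the per-kilogram rate by mistake, only the use-case tests would fail, while the coverage figure would stay the same.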

When our code is clear, it is easier to focus on business scenarios and test functions separately. It is crucial to write tests based on feature use cases, not just the code itself. This approach is relevant to all types of tests, whether unit, functional, or integration tests – the difference lies only in the scope.

Include tests in the build system

It is important to have a build system and to integrate your unit tests into it. Tests that only run locally are largely wasted effort, so never rely on manual processes. Developers will not fix tests unless they are part of the build system and block the release. Similarly, developers will not add tests for new features unless it is part of the policy.

Include test coverage checks in your build system. While high coverage alone does not guarantee safety, having 80–90 percent coverage with a well-planned test suite is a good target. This policy ensures developers write unit tests, as their changes must meet the coverage requirements to pass.
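The logic of such a gate is simple, as the sketch below shows. In practice the coverage tool usually enforces the threshold itself (coverage.py, for example, has a `fail_under` setting), so the function names and the 80% default here are only illustrative assumptions.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Share of executable lines that were run by the test suite, in percent."""
    if total_lines == 0:
        return 100.0  # nothing to cover, so nothing can be uncovered
    return 100.0 * covered_lines / total_lines


def passes_gate(covered_lines: int, total_lines: int, threshold: float = 80.0) -> bool:
    """Return True when the build may proceed; the threshold is a policy choice."""
    return coverage_percent(covered_lines, total_lines) >= threshold
```

A build step would call `passes_gate` with the numbers reported by the coverage tool and fail the pipeline when it returns `False`.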

Assuming you really need unit tests and your code is ready for them, how do you convince businesses? The simplest way is to show them numbers. Explain how unit tests will improve development quality and increase release frequency in numbers.

Let’s elaborate with an example.

You have a service “A” that has been under active development for 6 months. You review each commit to see how many features and bugs were addressed during that time. Suppose there were 20 commits, each corresponding to a feature request, and 5 bugs that arose after these commits. We’ll focus on these 5 bugs because each was related to a specific commit; unrelated bugs can be excluded from the statistics.

Imagine that each task took 2 days for development and 1 day for testing. That one day of testing covers full regression and manual testing. Each bug took 2 days to find and fix, plus the same 1 day of testing before the release. For the 20 tasks, we have the following:

Development: 20 * 2 = 40 days.

Testing: 20 * 1 = 20 days.

Bugs: 5 * (2 + 1) = 15 days (development and testing).

In total: 40 + 20 + 15 = 75 days over 6 months.

Now, consider what would happen with unit tests. The ongoing development time remains the same at 40 days, while regression testing time could be reduced or eliminated. Manual testing of each task remains, and writing unit tests could take about 4 hours per task. In total we have 40 days for development and the same 20 days for testing (20 tasks, each with about 4 hours of manual testing plus 4 hours of unit tests). However, you save the time spent on bugs, which is 15 days. In total, you would spend 40 + 20 = 60 days. Thus, you save 15 days over 6 months and avoid 5 potential bugs, if the unit tests are effective. Here, I allocate 25% of development time to writing unit tests but drop the full regression, which is no longer necessary. If you don’t run full regression, you could instead emphasize the number of bugs found after release.
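The comparison can be written out as a short calculation. The figures are the ones assumed in the example above, and the constant and function names are only illustrative. The second total assumes the unit tests catch all 5 bugs, which is the optimistic case.

```python
TASKS = 20
BUGS = 5
DEV_DAYS_PER_TASK = 2
TEST_DAYS_PER_TASK = 1   # regression + manual today; manual + unit tests later
BUG_FIX_DAYS = 2
BUG_TEST_DAYS = 1


def days_without_unit_tests() -> int:
    development = TASKS * DEV_DAYS_PER_TASK        # 40 days
    testing = TASKS * TEST_DAYS_PER_TASK           # 20 days
    bugs = BUGS * (BUG_FIX_DAYS + BUG_TEST_DAYS)   # 15 days
    return development + testing + bugs


def days_with_unit_tests() -> int:
    development = TASKS * DEV_DAYS_PER_TASK        # 40 days, unchanged
    testing = TASKS * TEST_DAYS_PER_TASK           # 20 days of manual + unit testing
    return development + testing                   # no bug-fixing time in the optimistic case


def days_saved() -> int:
    return days_without_unit_tests() - days_with_unit_tests()
```

With these inputs the totals are 75 and 60 days, so the saving equals the 15 days previously spent on bugs.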

Furthermore, good unit tests can speed up code reviews, providing long-term benefits. You must also mention that writing unit tests requires an initial investment of time and that the payoff may come only within a year or even later. Highlight the potential time savings and the reduction in bugs, which positively impacts the product and client satisfaction.

Again, if we scale our example to 10 services, saving 15 days per service every 6 months, we could save 150 days in total (10 services * 15 days) and avoid around 50 bugs (5 bugs/service * 10 services). These numbers can be quite convincing, but to keep them realistic I would divide them by two.
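The same scaling, including the halving used as a safety margin, can be sketched as follows. The function name and the `safety_divisor` parameter are hypothetical.

```python
def scaled_estimate(services: int, days_saved_per_service: int,
                    bugs_per_service: int, safety_divisor: int = 2) -> tuple[int, int]:
    """Return (days saved, bugs avoided) across all services, reduced by a safety divisor."""
    days = services * days_saved_per_service   # optimistic total days saved
    bugs = services * bugs_per_service         # optimistic total bugs avoided
    return days // safety_divisor, bugs // safety_divisor
```

For 10 services, 15 days, and 5 bugs per service, the optimistic totals of 150 days and 50 bugs become a more conservative 75 days and 25 bugs per 6 months.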

Even for companies already using integration tests, unit tests can be a useful addition. You will have to carefully analyze existing coverage and revise existing tests if necessary to avoid duplication.

Unit tests may not always be necessary, but they can significantly enhance the quality and speed of software delivery. They represent an essential yet straightforward aspect of development that can yield substantial benefits. I find it disheartening when tests are merely written out of obligation, without serious consideration for test plans. Unfortunately, this lack of dedication can extend to integration tests as well. It’s crucial that integration tests do not serve as a safety net for unit tests. If they do, unit tests may not be taken seriously. This issue becomes particularly relevant in companies that employ various types of tests – functional, regression, acceptance, load, unit, and others – where overlaps and gaps in test coverage can arise.
