2024-08-16

Cloudflare Stream is an end-to-end solution for video encoding, storage, delivery, and playback. Our focus has always been on simplifying all aspects of video for developers. This goal continues to motivate us as we introduce first-class portrait (vertical) video support today. Newly uploaded or ingested portrait videos will now automatically be processed in full HD quality.

Why portrait video

Over the past few years, portrait video has surged in popularity, driven by short-form video applications such as TikTok and YouTube Shorts. However, Cloudflare customers have been confused as to why their portrait videos look lower quality when viewed on portrait-first devices such as smartphones. The cause was our video encoding pipeline, which previously did not support high-quality portrait video, so portrait videos came out grainy. The introduction of high-definition portrait video addresses this pain point.

The current Stream pipeline

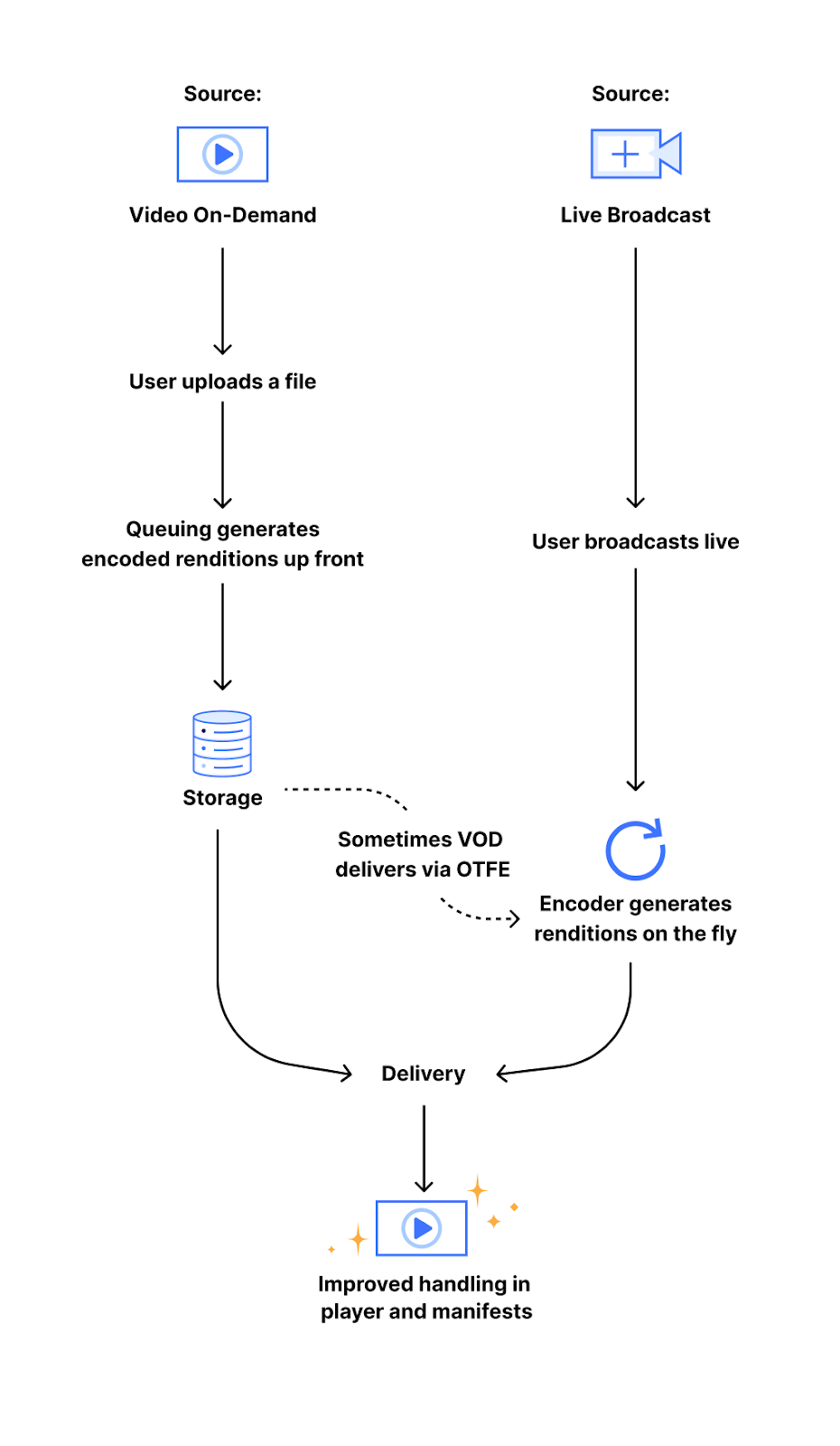

When you upload a video to Stream, it is first encoded into several different “renditions” (sizes or resolutions) before delivery. This enables playback across a wide variety of network conditions and standardizes the way a video is experienced. With these adaptive bitrate renditions, we can offer viewers the highest-quality stream that fits their network bandwidth: someone watching on a slow mobile connection would be served a 240p video (a resolution of 426x240 pixels), and would receive the 1080p version (a resolution of 1920x1080 pixels) when watching at home on a fiber Internet connection. This encoding pipeline follows one of two different paths:

The first path is our video on-demand (VOD) encoding pipeline, which generates and stores a set of encoded video segments at each of our standard video resolutions. The other path is our on-the-fly encoding (OTFE) pipeline, which uses the same process as Stream Live to generate resolutions upon user request. Both pipelines work with the set of standard resolutions, which are identified through a constrained target (output) height. This means that we encode every rendition to heights of 240 pixels, 360 pixels, etc. up to 1080 pixels.
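To make the adaptive bitrate idea concrete, here is a minimal sketch of how a player might choose among height-named renditions. It is not Stream's actual selection logic: the intermediate heights (480 and 720), the bitrates, and the names `Rendition`, `LADDER`, and `pick_rendition` are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int        # constrained target height, e.g. 240 for "240p"
    bitrate_kbps: int  # hypothetical average bitrate, illustrative only


# Hypothetical ladder. Only 240, 360, and 1080 are named in the text;
# 480, 720, and every bitrate below are assumptions.
LADDER = [
    Rendition(240, 400),
    Rendition(360, 800),
    Rendition(480, 1400),
    Rendition(720, 2800),
    Rendition(1080, 5000),
]


def pick_rendition(bandwidth_kbps: float, ladder=LADDER) -> Rendition:
    """Return the highest-bitrate rendition that fits the measured bandwidth.

    Falls back to the lowest rendition when nothing fits, so playback
    can still start on a very slow connection.
    """
    ordered = sorted(ladder, key=lambda r: r.bitrate_kbps)
    fitting = [r for r in ordered if r.bitrate_kbps <= bandwidth_kbps]
    return fitting[-1] if fitting else ordered[0]
```

With this ladder, a viewer measured at 600 kbps would get the 240p rendition, while a viewer on a fast fiber connection would get 1080p.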

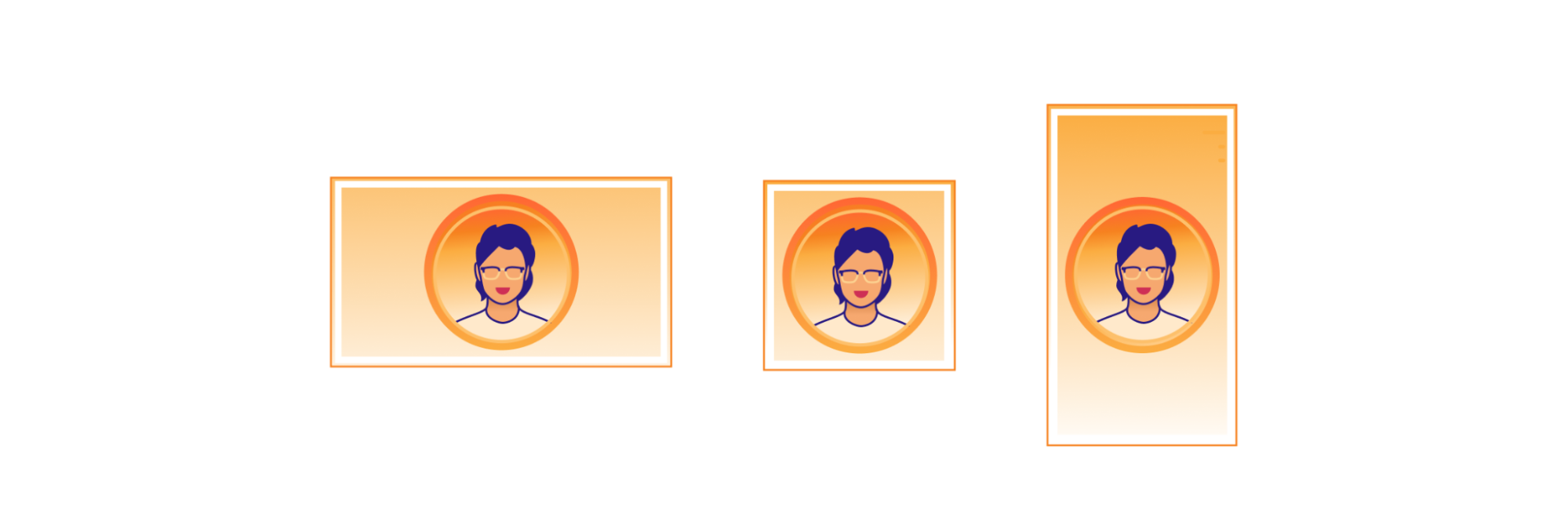

When originally conceived, this encoding pipeline was not designed with portrait video in mind because portrait video was less common. As a result, portrait videos were encoded at lower-quality dimensions that were consistent with landscape video encoding. For example, a portrait HD video has the dimensions 1080x1920; scaling that down to the height of a landscape HD video (1080 pixels) results in the much smaller output of 608x1080. However, current smartphones all have HD displays, which makes the discrepancy obvious when a portrait video is viewed in portrait mode on a phone. With this change to our encoding pipeline, all newly uploaded portrait videos are now automatically encoded with a constrained target width, using a new set of standard resolutions for portrait video. These resolutions are the same as the current set of landscape resolutions, but with the dimensions reversed: 240x426 up to 1080x1920.
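The arithmetic behind these numbers can be sketched as follows. This is an illustration under stated assumptions, not the pipeline's code: the function names are hypothetical, the intermediate sizes 480 and 720 are assumed, dimensions are rounded to even numbers (a common encoder requirement, assumed here), and renditions larger than the source are skipped. Sources smaller than the smallest rendition are not handled.

```python
# Only 240, 360, and 1080 appear in the text; 480 and 720 are assumed.
STANDARD_SIZES = [240, 360, 480, 720, 1080]


def _even(value: float) -> int:
    """Round to the nearest even integer, with a floor of 2."""
    return max(2, 2 * round(value / 2))


def height_constrained(src_w: int, src_h: int, sizes=STANDARD_SIZES):
    """Old behaviour: pin every rendition to a target height."""
    return [(_even(src_w * h / src_h), h) for h in sizes if h <= src_h]


def orientation_aware(src_w: int, src_h: int, sizes=STANDARD_SIZES):
    """New behaviour: pin the width for portrait, the height for landscape."""
    if src_h > src_w:  # portrait source
        return [(w, _even(src_h * w / src_w)) for w in sizes if w <= src_w]
    return height_constrained(src_w, src_h, sizes)


if __name__ == "__main__":
    # Largest rendition of a 1080x1920 portrait source under each scheme.
    print(height_constrained(1080, 1920)[-1])  # (608, 1080)
    print(orientation_aware(1080, 1920)[-1])   # (1080, 1920)
```

For a 1080x1920 source, constraining the height caps the output at 608x1080, while constraining the width keeps the full 1080x1920.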

Technical details

As the Stream intern this summer, I was tasked with this project, with the expectation of shipping this long-requested change by the end of my internship. The first step was to familiarize myself with the complex architecture of Stream’s internal systems. After that, I began brainstorming implementation options, such as how to consistently track orientation through the various stages of the pipeline. Following a group discussion to decide which choices would be the most scalable, least complex, and best for users, it was time to write the technical specification.

Due to the implementation method we chose, making this change involved tracing the life of a video from upload to delivery through both of our encoding pipelines and applying different logic for portrait videos. Previously, all video renditions were identified by their height at each stage of the pipeline, making certain parts of the pipeline completely agnostic to the orientation of a video. With the proposed changes, we would now be using the constraining dimension and orientation to identify a video rendition. Therefore, much of the work involved modifying the different portions of the pipeline to use these new parameters.
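One way to picture the new identifier is as a small value type that pairs the constraining dimension with the orientation. The sketch below is hypothetical (the names `Orientation`, `RenditionKey`, and `key_for` are not from Stream's codebase), and treating square video as landscape is an arbitrary choice made here.

```python
from dataclasses import dataclass
from enum import Enum


class Orientation(Enum):
    LANDSCAPE = "landscape"
    PORTRAIT = "portrait"


@dataclass(frozen=True)
class RenditionKey:
    """Identifies a rendition by its constraining dimension and orientation."""
    constraining_dimension: int
    orientation: Orientation


def key_for(width: int, height: int) -> RenditionKey:
    """Derive the key from output dimensions.

    Portrait renditions are constrained by width, landscape by height.
    Square video is treated as landscape (an assumption of this sketch).
    """
    if height > width:
        return RenditionKey(width, Orientation.PORTRAIT)
    return RenditionKey(height, Orientation.LANDSCAPE)
```

Under this scheme, 1920x1080 and 1080x1920 share the constraining dimension 1080 but remain distinct keys, which a height-only identifier could not express.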

The first area of the pipeline to be modified was the Stream API service, the process that handles all Stream API calls. The API service enqueues the rendition encoding jobs for a video after it is uploaded, so it was necessary to introduce a new set of renditions designed for portrait videos and enqueue the corresponding encoding jobs. Queuing is handled by our in-house queue management system, which treats jobs generically and therefore did not require any changes.

Following this, I tackled the on-the-fly encoding pipeline. The area of interest here was the delivery portion of our pipeline, which generated the set of encoding resolutions to pass on to our on-the-fly encoder. Here I also introduced a new set of portrait renditions and the corresponding logic to encode them for portrait videos. This part of the backend is written and hosted on Cloudflare Workers, which made it very easy and quick to deploy and test changes.

Finally, we wanted to change how we presented these quality levels to users in the Stream built-in player and thought that using the new constrained dimension rather than always showing the height would feel more familiar. For portrait videos, we now display the size of the constraining dimension, which also means quality selection for portrait videos encoded under our old system now more accurately reflects their quality, too. As an example, a 9:16 portrait video would have been encoded to a maximum size of 608x1080 by the previous pipeline. Now, such a rendition will be marked as 608p rather than the full-quality 1080p, which would be a 1080x1920 rendition.
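The labeling rule described above can be sketched in a few lines. The function name `quality_label` is hypothetical, and this is only an illustration of the rule, not the player's implementation.

```python
def quality_label(width: int, height: int) -> str:
    """Label a rendition by its constraining dimension.

    Portrait renditions (taller than wide) are labeled by width,
    everything else by height.
    """
    constraining = width if height > width else height
    return f"{constraining}p"


if __name__ == "__main__":
    print(quality_label(608, 1080))   # old-pipeline portrait rendition: 608p
    print(quality_label(1080, 1920))  # new portrait rendition: 1080p
    print(quality_label(1920, 1080))  # landscape rendition: 1080p
```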

Stream as a whole is built on many of our own Developer Platform products, such as Workers for handling delivery, R2 for rendition storage, Workers AI for automatic captioning, and Durable Objects for bitrate observation, all of which enhance our ability to deploy and ship new updates quickly. Throughout my work on this project, I was able to see all of these pieces in action, as well as gain a new understanding of the powerful tools Cloudflare offers for developers.

Results and findings

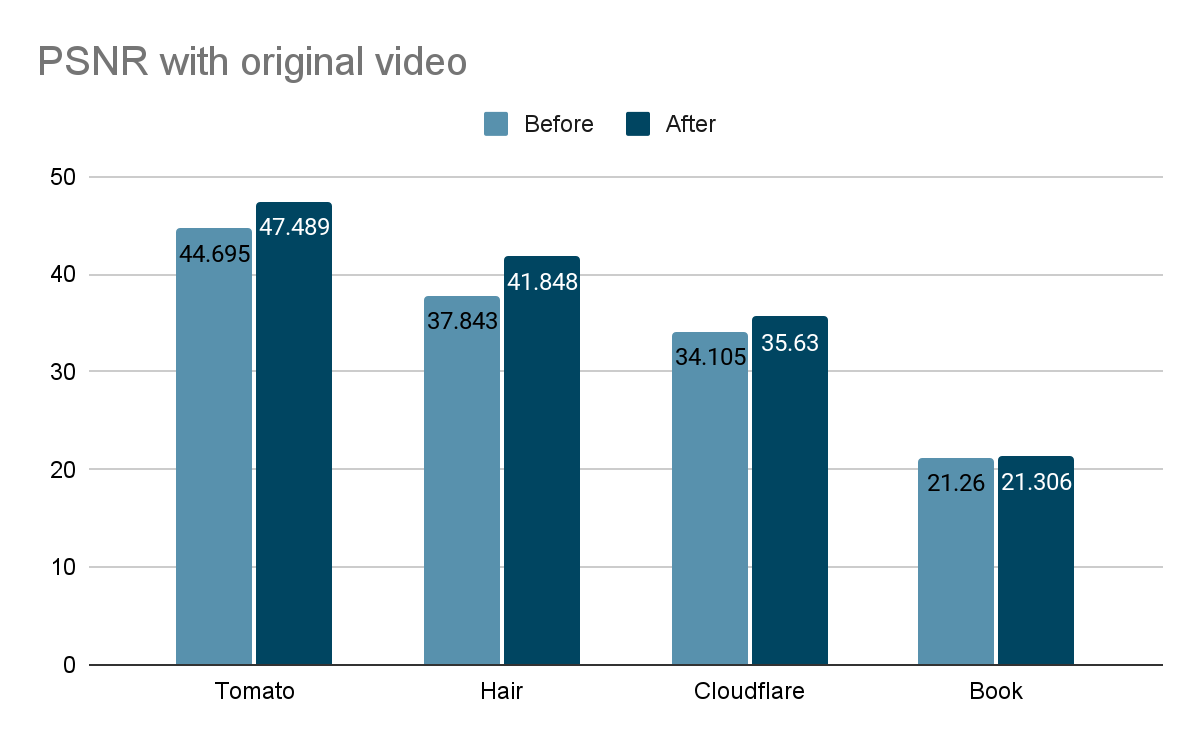

After the change, portrait videos are encoded to higher resolutions and look visibly sharper. To confirm these differences, I analyzed the effect of the pipeline change on four different sample videos using the peak signal-to-noise ratio (PSNR, a mathematical measure of image quality). Since the old pipeline produced lower-resolution videos, the comparison here is between an upscaled version of the old pipeline rendition and the current pipeline rendition. In the graph below, higher values reflect higher quality relative to the unencoded original video.

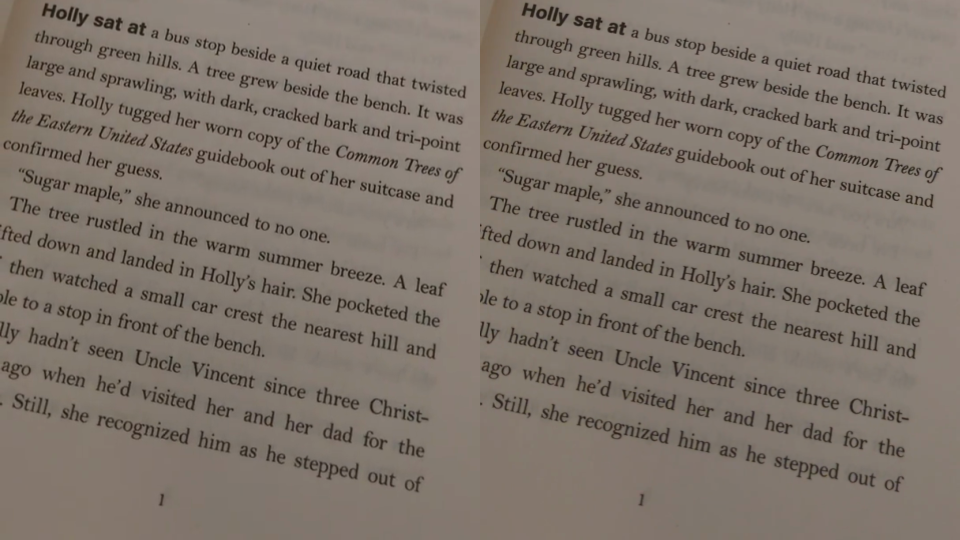
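For readers unfamiliar with PSNR, the standard definition is 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and test pixels and MAX is the largest possible pixel value. The sketch below applies that formula to flat sequences of 8-bit pixel values. It is not the analysis script used for the graph, and the function name and input format are assumptions. Because both inputs must have the same number of pixels, a lower-resolution rendition has to be upscaled before it can be compared against the original.

```python
import math


def psnr(reference, test, max_value: int = 255) -> float:
    """PSNR in decibels between two equal-length sequences of pixel values."""
    if len(reference) != len(test) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)
```

A per-video score is usually obtained by averaging this value over frames, though the exact aggregation used for the graph is not stated here.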

According to this metric, we see an increase in quality from the pipeline changes as high as 8%. However, the quality increase is most noticeable to the human eye in videos that feature fine details or a high amount of movement, which is not always captured in the PSNR. For example, compare a side-by-side of a frame from the book sample video encoded both ways:

The difference between the old and new encodings is most clear when zoomed in:

Here’s another example (sourced from Mixkit):

Magnified:

Of course, watching these example clips is the clearest way to see:

Maximize the Stream player and look at the quality selector (in the gear menu) to see the new quality level labels – select the highest option to compare. Note the improved sharpness of the text in the book sample as well as the improved detail in the hair and eye shadow of the hair and makeup sample.

Implementation challenges

Due to the complex nature of our encoding pipelines, there were several technical challenges in making a large change like this. Beyond basic uploads, many of the features we offer, like downloads or clipping, required tweaking to produce the correct video renditions. This involved modifying many parts of the encoding pipeline to ensure that portrait video logic was handled.

There were also some edge cases which were not caught until after release. One release of this feature contained a bug in the on-the-fly encoding logic which caused a subset of new portrait livestream renditions to have negative bitrates, making them unusable. This was due to an internal representation of video renditions’ constraining dimensions being mistakenly used for bitrate observation. We remedied this by increasing the scope of our end-to-end testing to include more portrait video tests and live recording interaction tests.

Another small bug caused downloads of very small videos to sometimes fail. For videos under 240p, our smallest encoding resolution, the non-constraining dimension was not being properly scaled to match the aspect ratio of the video, so some specific combinations of dimensions were interpreted as portrait when they should have been landscape, and vice versa. This bug was fixed quickly, but it was not caught right away after the release because it required a very specific set of conditions to trigger. After this experience, I added a few more unit tests involving small videos.

That’s a wrap

As my internship comes to a close, reflecting on the experience makes me grateful for all the team members who have helped me throughout this time. I am very glad to have shipped this project which addresses a long-standing concern and will have real-world customer impact. Support for high-definition portrait video is now available, and we will continue to make improvements to our video solutions suite. You can see the difference yourself by uploading a portrait video to Stream! Or, perhaps you’d like to help build a better Internet, too — our internship and early talent programs are a great way to jumpstart your own journey.

Sample video acknowledgements: The sample video of the book was created by the Stream Product Manager and shows the opening page of The Strange Wonder of Roots by Evan Griffith (HarperCollins). The hair and makeup fashion video was sourced from Mixkit, a great source of free media for video projects.
