About The Project

Hack Fortress (HF) is a combination of a first-person shooter (Team Fortress 2) and a Jeopardy-style CTF. Teams of ten, made up of six gamers and four hackers, compete in a single-elimination bracket. Hackers solve challenges and unlock points to buy in-game items for gamers. Each round is thirty minutes long, except for the finals, which run for forty-five minutes. I’ve previously blogged about Hack Fortress challenges, but this blog post is going to cover how each round of challenges has been deployed for the last 7-ish years at DEF CON and Shmoocon. If you’re going to the last Shmoocon this coming January, be sure to swing by the room and say hello (we have stickers)! Even better, follow the official HF accounts to stay up to date with the latest happenings!

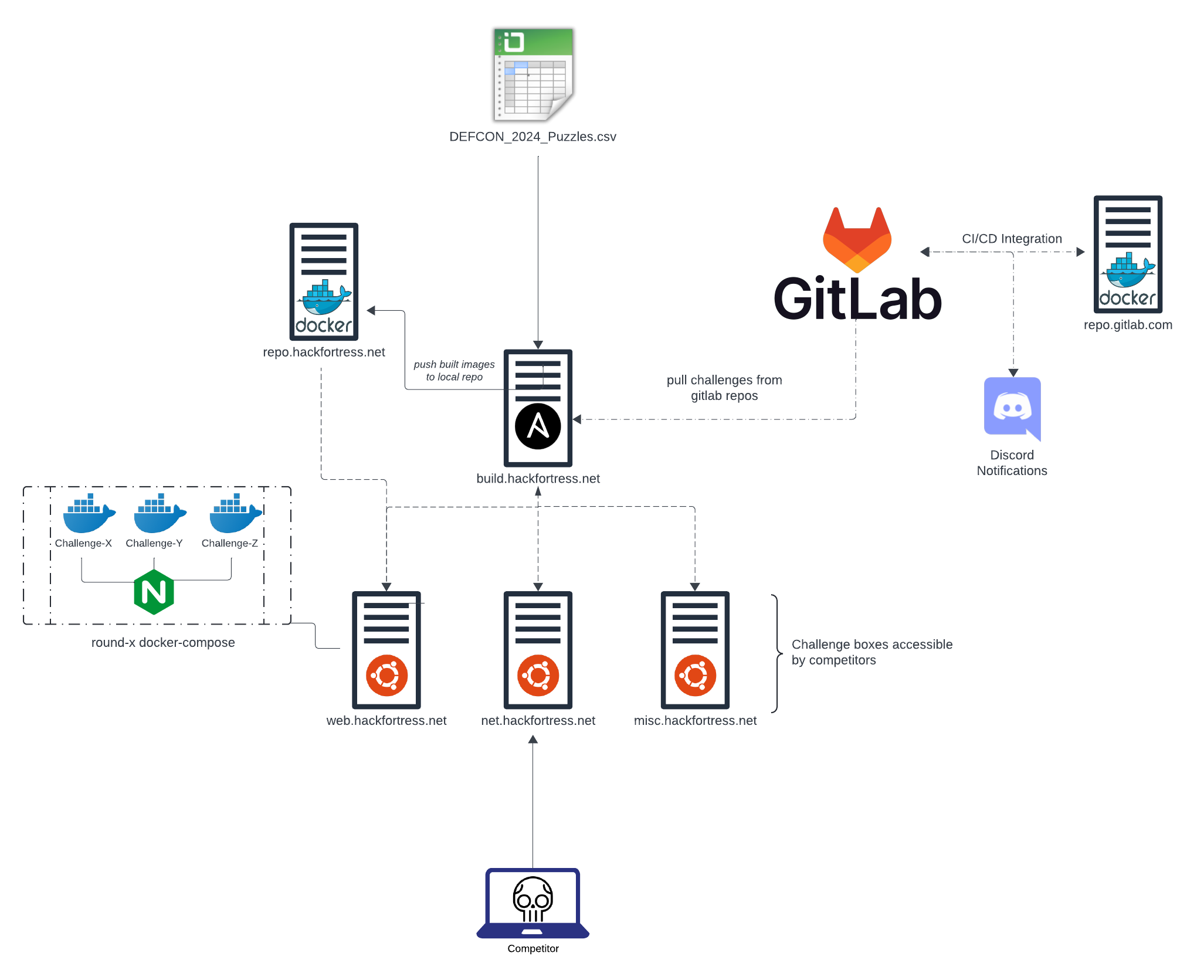

The complete diagram of the challenge deployment workflow can be seen below.

Hack Fortress Team Organization: Hack Side & Gaming Side

Hack Fortress is an entirely volunteer-run competition. The team is composed of a “Hack” side and a “Gaming” side. Both sides serve critical roles in the success of the competition and bring their own expertise to make this unique competition run. The Hack side develops automation, maintains infrastructure, and creates challenges based on historical vulnerabilities, new happenings in the InfoSec industry, and fun hacker trivia to include in each event.

The gaming side has to battle with Valve updates leading up to the event as well as the critical and often poorly timed Team Fortress 2 update. Often by the time hardware is shipped to Vegas for DEF CON, something needs to be updated on site which leads to further troubleshooting and battling with a conference area’s network connectivity while bringing up other aspects of the challenge. Additionally, they handle all the streaming responsibilities for our Twitch.tv presence.

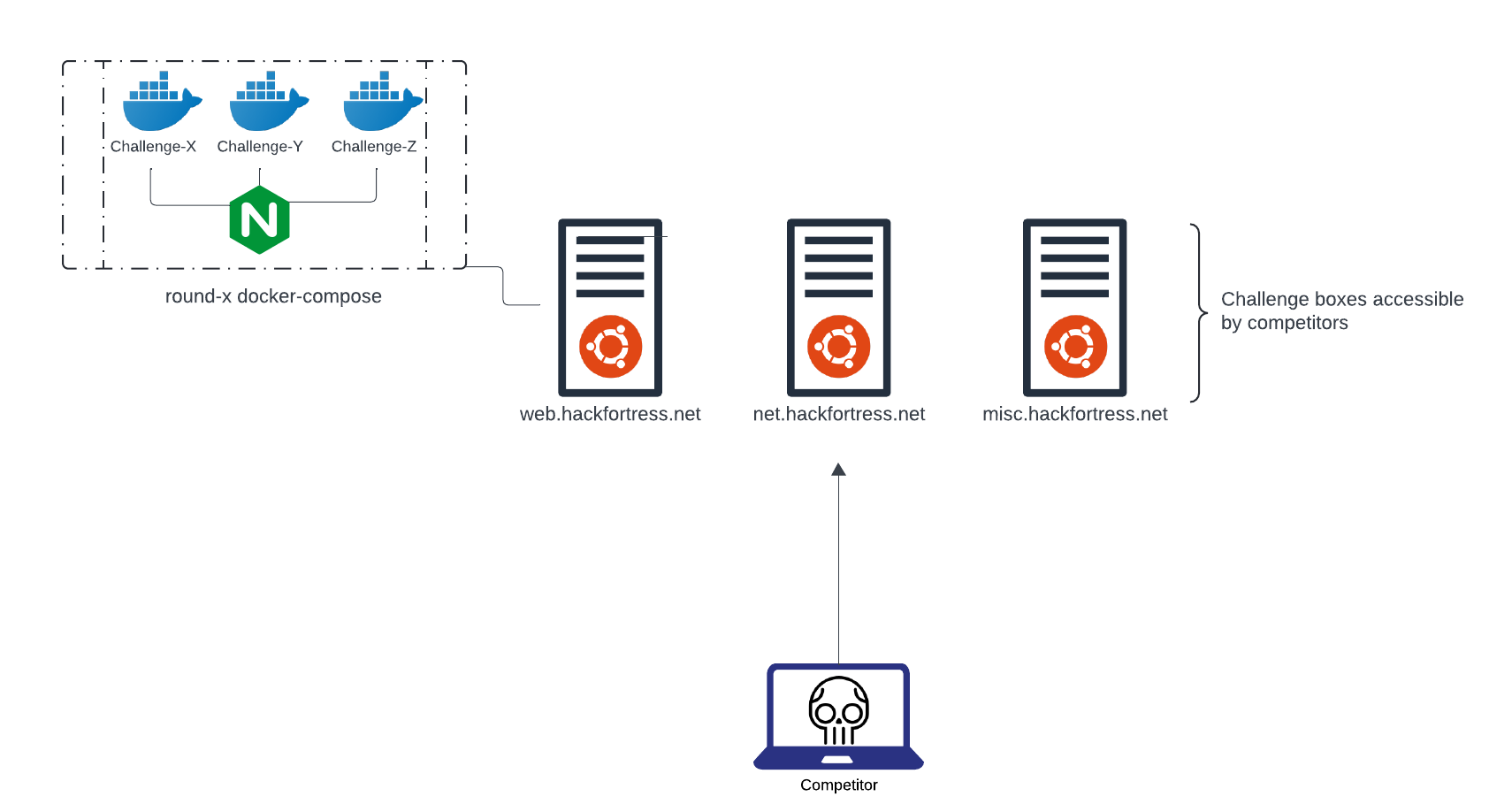

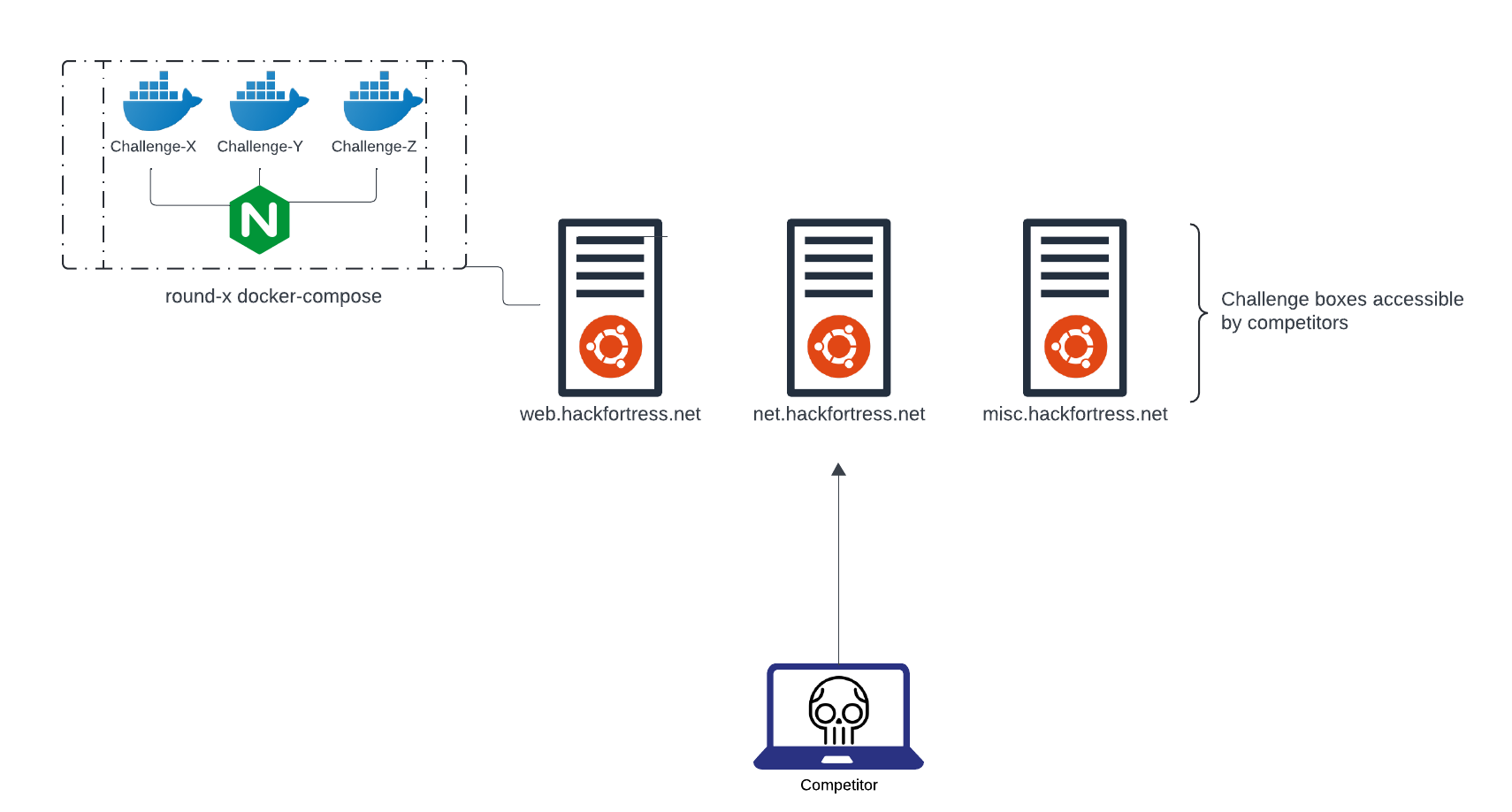

Competitor facing Infrastructure

Hack Fortress challenges break down into either static binaries, delivered to the competitor via a file share through the scoreboard, or Docker challenges. Docker challenges end up on one of the “challenge hosts” depending on their category: web challenges intuitively go on the web host, net is for challenges that may require special networking modifications, and misc is our catchall for special deployments.

Our challenge hosts are patched pre-competition and expose limited services to guard against accidental compromise by a rogue competitor. Wherever possible, container deployment best practices are followed. For a deep dive on building secure CTF environments, be sure to check out Golfing with Dragons: Building Secure CTF Environments, which provides a nice overview of threat modeling your CTF and of how to build in safeguards that prevent accidental misconfigurations that could lead to an unintended compromise. With a secure base, the insecure challenges created by our talented team of challenge developers can thrive!

From Challenge Spreadsheet to Deployed Challenge

The entire Hack Fortress team is geographically dispersed. When Hack Fortress members develop challenges, they create a unique git repository per challenge, and update a challenge spreadsheet with appropriate challenge information to include point value, virtual host information (if applicable in the case of a web challenge), which round the challenge should be deployed in, the challenge author, etc… This spreadsheet is ingested by a custom build and deployment tool “Raspberry Ferret”.

Raspberry Ferret, aka “rasfer”, is a Python utility that wraps Docker and Ansible commands to perform challenge building and deployment tasks for each round. The project name came from a random project name generator found online one evening. Rasfer performs sanity checks, similar to a linter, on each challenge in the spreadsheet. This ensures that all appropriate fields are filled out before building challenges, which reduces the chance of errors. If a field is incomplete, an error is thrown along with the challenge name and author to help with remediation efforts.

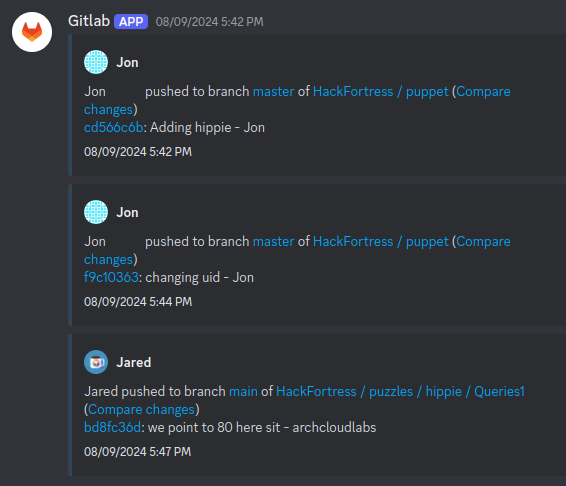
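To make the linter idea concrete, here is a minimal sketch of this kind of check. It is not rasfer's actual code: the column names (`name`, `author`, `points`, `round`, `host`, `vhost`), the CSV export, and the rule that web challenges need a virtual host are all assumptions made for illustration.

```python
import csv
import io

# Hypothetical column names; the real spreadsheet layout is not shown in this post.
REQUIRED_FIELDS = ("name", "author", "points", "round", "host")
KNOWN_HOSTS = {"web", "net", "misc"}


def _cell(row, field):
    """Return a stripped cell value, treating missing cells as empty."""
    return (row.get(field) or "").strip()


def lint_challenges(rows):
    """Return one human-readable problem per incomplete or inconsistent field."""
    problems = []
    for row in rows:
        name = _cell(row, "name") or "<unnamed>"
        author = _cell(row, "author") or "<unknown author>"
        for field in REQUIRED_FIELDS:
            if not _cell(row, field):
                problems.append(f"{name} ({author}): missing '{field}'")
        host = _cell(row, "host")
        if host and host not in KNOWN_HOSTS:
            problems.append(f"{name} ({author}): unknown host '{host}'")
        # Assumed rule: web challenges are routed by virtual host, so they need one.
        if host == "web" and not _cell(row, "vhost"):
            problems.append(f"{name} ({author}): web challenge needs a 'vhost'")
    return problems


def load_rows(csv_text):
    """Parse a CSV export of the challenge spreadsheet into a list of dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))
```

Reporting the challenge name and author with every problem mirrors the behavior described above: whoever runs the build knows exactly who to ping before anything gets built.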

Upon passing sanity checks, Docker challenges are built and pushed to a local container registry for each round. During development, GitLab’s CI/CD is leveraged to build and store challenge containers in a private container repository. This enables challenge authors to test each other’s challenges and make appropriate updates prior to the competition.

During the actual competition, every challenge host (misc, web, and net) pulls from the local container registry to avoid potential bandwidth issues at a given venue. At conference venues, limited bandwidth during peak hours often makes pulling in new content, such as a container image, a real problem. Having a local container registry has helped with last-minute challenge patches, compared with having to pull challenges from an upstream container repo (e.g., GitLab).

After successfully building and pushing the docker images for each challenge, a single docker-compose.yml file is generated for each round.
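As a rough illustration of that generation step, the sketch below assembles one Compose document per round and host from the spreadsheet rows. The registry address, image naming scheme, and row keys are hypothetical, and it serializes to JSON only to stay within the standard library (Compose files are YAML, and JSON is valid YAML); a real tool would more likely use a YAML library.

```python
import json

# Hypothetical address of the on-site container registry.
REGISTRY = "registry.hf.example:5000"


def build_compose(challenges, round_name, host):
    """Build one Compose document for every challenge on `host` in `round_name`."""
    services = {}
    for chal in challenges:
        if chal["round"] != round_name or chal["host"] != host:
            continue
        service = {
            # Assumed image naming scheme: <registry>/<round>/<challenge>.
            "image": f"{REGISTRY}/{round_name}/{chal['name']}:latest",
            "restart": "unless-stopped",
        }
        if chal.get("port"):
            # Publish the same port on the host that the container listens on.
            service["ports"] = [f"{chal['port']}:{chal['port']}"]
        services[chal["name"]] = service
    return {"services": services}


def render_compose(compose):
    """Serialize the Compose document as JSON, which is also valid YAML."""
    return json.dumps(compose, indent=2, sort_keys=True)
```

Keeping the document as a plain dictionary until the last moment makes it easy to post-process, for example to add extra services before writing the file.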

This file is nested under a challenge directory named after the host it should be deployed on (misc, net, or web). For web challenges, rasfer injects an Nginx reverse proxy into each docker-compose file at build time in order to direct each incoming request to the upstream HTTP service based on the Host header. A few years ago we looked at replacing this with Traefik, but ultimately decided to keep Nginx because of the team’s general familiarity with debugging it. When in doubt, don’t fix what’s not broken :).

At this point, all that’s left to do is execute docker-compose up -d for each round on each host. This is where Ansible comes into play. Abstracting out the directory creation, file copies, and command execution with Ansible variables has made the process repeatable and easy for other team members to use. The Ansible deployment playbook can be seen below.
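Before getting to the playbook, here is a minimal sketch of the Host-header routing described above. It is an assumption about how such an injection could work, not rasfer's implementation: the function names, the `proxy` service name, and the config file path are invented for the example.

```python
def render_nginx_conf(vhosts):
    """Render one nginx server block per virtual host.

    `vhosts` maps a Host header value to an upstream "service:port" string.
    """
    blocks = []
    for server_name, upstream in sorted(vhosts.items()):
        blocks.append(
            "server {\n"
            "    listen 80;\n"
            f"    server_name {server_name};\n"
            "    location / {\n"
            f"        proxy_pass http://{upstream};\n"
            "        proxy_set_header Host $host;\n"
            "    }\n"
            "}\n"
        )
    return "\n".join(blocks)


def inject_proxy(compose, conf_path="./nginx.conf"):
    """Return a copy of a Compose dict with a reverse-proxy service added."""
    challenge_services = compose.get("services", {})
    services = dict(challenge_services)
    services["proxy"] = {  # hypothetical service name
        "image": "nginx:stable",
        "ports": ["80:80"],
        # Mount the generated config over the image's default site config.
        "volumes": [f"{conf_path}:/etc/nginx/conf.d/default.conf:ro"],
        # Start the proxy only after the challenge containers exist.
        "depends_on": sorted(challenge_services),
    }
    return {**compose, "services": services}
```

With this layout only the proxy publishes a port on the web host, and each challenge is reached by the name competitors put in the Host header.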

```yaml
---
- hosts: "{{ target }}"
  tasks:
    # Check on the control machine whether this round's archive was built.
    - name: "Looking for {{ round }}-{{ target }}..."
      local_action: stat path='files/{{ round }}-{{ target }}.tar.gz'
      register: package

    - name: Make Challenge Directory
      file:
        path: "/opt/challenges/{{ round }}-{{ target }}"
        state: directory
      become: yes

    - name: "Copy Docker {{ target }} challenges"
      copy:
        src: "{{ item.src }}"
        dest: "{{ item.dest }}"
      with_items:
        - { src: 'files/{{ round }}-{{ target }}.tar.gz',
            dest: '/opt/challenges/{{ round }}-{{ target }}.tar.gz' }
      become: yes
      when: package.stat.exists

    # Unpack the archive that was just copied to the challenge host.
    - name: "Untar {{ target }} challenges"
      unarchive:
        src: "/opt/challenges/{{ round }}-{{ target }}.tar.gz"
        dest: "/opt/challenges/{{ round }}-{{ target }}/"
        remote_src: yes
      become: yes
      register: pkgtar
      when: package.stat.exists

    - name: Copy launch & destroy scripts
      copy:
        src: '{{ item }}'
        dest: '/opt/challenges/{{ round }}-{{ target }}/{{ round }}/{{ target }}/'
        mode: '0740'
      with_items:
        - 'files/launch-compose.sh'
        - 'files/destroy-compose.sh'
      become: yes

    # Bring the round up on this host.
    - name: Run docker-compose-launch
      raw: "/opt/challenges/{{ round }}-{{ target }}/{{ round }}/{{ target }}/launch-compose.sh"
      become: yes
```

With each round of challenges built, the last thing to do is to deploy them with rasfer!

$> rasfer deploy -t all <ROUND_HERE>
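How rasfer turns that command into Ansible runs isn't shown in this post. As a sketch under stated assumptions, a wrapper could expand `all` into the three challenge hosts and pass `target` and `round` to the playbook as extra variables. The playbook and inventory file names here are hypothetical, and each `target` is assumed to match a group in the inventory.

```python
import shlex

TARGETS = ("misc", "net", "web")


def ansible_commands(round_name, target="all",
                     playbook="deploy.yml", inventory="hosts.ini"):
    """Build one ansible-playbook command line per challenge host."""
    if target == "all":
        targets = TARGETS
    elif target in TARGETS:
        targets = (target,)
    else:
        raise ValueError(f"unknown target: {target!r}")
    return [
        [
            "ansible-playbook", "-i", inventory, playbook,
            # These fill the {{ target }} and {{ round }} variables in the playbook.
            "-e", f"target={name}",
            "-e", f"round={round_name}",
        ]
        for name in targets
    ]


if __name__ == "__main__":
    # Print the commands instead of running them; a real wrapper could pass
    # each list to subprocess.run(cmd, check=True).
    for cmd in ansible_commands("round-one"):
        print(shlex.join(cmd))
```

Building the argument lists separately from executing them keeps the wrapper easy to dry-run, which is handy when debugging a deployment minutes before a round starts.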

Ultimately, limited issues occurred at DEF CON and the exception handling built into the rasfer utility made it easy to troubleshoot and resolve the issues quickly.

For those that participated, thank you for spending your conference with us! We hope you enjoyed playing as much as we do building.

Beyond The Project

This, in a nutshell, is how Hack Fortress is built and deployed every year. If you’ve ever joined us at any conference and heard one of us yell “five more minutes”, there’s a good chance that either one of the components in this deployment pipeline was being debugged or we were waiting on teams to show up! For all those that have competed, thank you for making Hack Fortress what it is today. I hope to see you in a few months at Shmoocon for the final Hack Fortress, aka Final Fortress!