2024-8-23 06:36:59 Author: hackernoon.com

Collecting NYC code coverage from a Node.js application running in a Docker container comes with its own difficulties. This post walks through what it takes to run Node.js clusters in Docker and still produce reliable code coverage reports.

How to Get Coverage Natively?

Running NYC locally is straightforward. Prefix the start script in package.json with nyc:

"start": "nyc node ./dist/main.js",

However, getting the same result inside Docker takes a few more steps. Let’s explore the nuances.

What Are the Challenges With Signal Handling in Docker Containers?

Sending an interrupt signal to a Docker container turns out to be tricky in our case. The reason? Instead of running a binary directly, I’m running a shell script (more on that later).

The result is that my Docker container doesn’t stop when asked to. Why? It comes down to how signals are handled in Docker and how the shell behaves.

- PID 1 in Containers: In a Docker container, the CMD or ENTRYPOINT process runs as PID 1. This process is responsible for handling system signals. When sh or bash is used to run a script, the shell becomes the PID 1 process inside the container.

- Signal Forwarding: The shell (sh) does not automatically forward signals to its child processes. It handles the signals itself and often does not propagate them to the processes it spawned. When you press Ctrl+C, Docker sends a SIGINT signal to the PID 1 process. If sh is PID 1, the signal might never reach the actual application process (e.g., node, yarn start).

- Zombie Processes: If the shell does not forward the signal, the child processes keep running. This can lead to orphaned or zombie processes and an unresponsive container.
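The orphaning described above can be reproduced outside Docker with plain shell. The following is a minimal sketch, not the setup used in this post: the wrapper stands in for a script started with sh, sleep 30 is a hypothetical stand-in for the application, and SIGTERM is used because a non-interactive POSIX shell starts background jobs with SIGINT ignored. Outside a container the wrapper is not PID 1, so it simply dies on the signal; the point is only that the signal stops at the shell and the child keeps running.

```shell
#!/bin/sh
# Sketch: a wrapper shell without a trap dies alone; its child survives.
pidfile=$(mktemp)

# The wrapper starts a long-running child and waits for it, like run.sh would.
sh -c 'sleep 30 & echo $! > "$1"; wait' wrapper "$pidfile" &
wrapper=$!

sleep 1                          # give the wrapper time to start its child
child=$(cat "$pidfile")

kill -TERM "$wrapper"            # signal only the wrapper, as Docker signals PID 1
wait "$wrapper" 2>/dev/null

# kill -0 sends no signal; it only checks whether the process still exists.
if kill -0 "$child" 2>/dev/null; then
  child_survived=yes
else
  child_survived=no
fi
echo "child still running after wrapper died: $child_survived"

kill -KILL "$child" 2>/dev/null  # clean up the orphan
rm -f "$pidfile"
```

The child outlives the wrapper because nothing passed the signal on, which is the gap that the trap in the script and dumb-init are meant to close.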

How do we solve this? A simple trap that catches the signal and passes it on to the child process looks like it should work:

    #!/bin/sh

    # Export everything defined in the environment file
    set -a
    . ".env.$ENVIRONMENT_NAME"
    set +a

    # Function to handle the signal: forward it to the child process
    handle_int() {
        echo "SIGINT received, forwarding to child process..."
        kill -INT "$child" 2>/dev/null
        exit 0
    }

    # Trap SIGINT and SIGTERM
    trap 'handle_int' INT TERM

    sleep 10 # Ensure services like DB are up

    # Start yarn and get its PID
    yarn start &
    child=$!
    echo "Started yarn with PID $child"

    # Wait for child process to finish
    wait "$child"

    # Copy nyc output to host directory (optional, if you need it)

From within the container, this is what all processes look like:

But is this trap-and-forward approach enough? No, it is not.

It does stop the container, but not gracefully. The handler exits PID 1 right away and moves on without giving the child processes time to wrap up, which is exactly what we need here.

Reasons for this issue:

- Shell Limitations: The default shell (sh) might not forward signals to child processes correctly. Instead, it might terminate itself, leaving child processes orphaned.

- Signal Propagation: The shell script (run.sh) receives the signal directly and may not propagate it to the yarn start process effectively.

So how do we get the signal to yarn start, the process that produces our coverage?

What Are Effective Solutions for Handling Signals?

We need to make sure that the container's default stop signal (SIGTERM) is replaced with an interrupt signal (SIGINT), and that the signal is passed on to the child processes. Finally, we also want to copy the coverage data from the Docker container to the host machine. The following steps handle these signals and make sure the interrupt reaches the whole process group:

- Using dumb-init for Better Signal Handling

- Handling Signal Traps in Shell Scripts

- Copying NYC Coverage Data from Docker Container

Using dumb-init for Better Signal Handling

dumb-init is a simple process supervisor designed to run as PID 1 inside Docker containers. It reaps zombie processes and forwards the signals it receives to the process group of the command it launches.

Why dumb-init helps:

- Signal Forwarding: When the Docker container receives a SIGINT signal, dumb-init forwards it to the shell script and all child processes.

- Process Management: dumb-init ensures proper reaping of zombie processes, which the default shell might not handle well.

    <STAGE-1-BUILD>
    ...
    <STAGE-2-BUILD>
    ...
    # Run dumb-init as PID 1 so signals reach the script and its children
    ENTRYPOINT ["dumb-init", "--"]

    # Stop the container with SIGINT instead of the default SIGTERM
    STOPSIGNAL SIGINT

    # Command that dumb-init runs
    CMD [ "sh","./scripts/migrate-and-run.sh"]

    # Expose port 9000
    EXPOSE 9000

With dumb-init in place, yarn start and the workers it spawns are shut down gracefully.

Ensuring NYC Coverage Data is Written Correctly

One key mistake I made was spreading the NYC workflow across different locations: building in one place, copying the result to another for execution, and reading the report in yet another. This inconsistency caused problems.

How to Handle Signal Traps in Shell Scripts?

As you saw earlier, I run the build file, which includes build functions and maps them to my code. When running a multi-stage build, the directory may differ, yet the build still works.

In the example below, the application is built in the app-build directory. In the second stage of the Docker build, it is executed via the script. For building purposes, the exact location doesn't matter much, but for NYC, it does.

NYC relies on consistent paths to correctly map coverage data to the source files. Therefore, ensuring that the context of the build, execution, and reading paths remain the same is crucial for accurate NYC coverage reporting.

So when I try to collect the coverage, the report shows nothing. The reason? NYC doesn’t have the context of the code in the second stage of the build, so I get no line coverage.

So our Dockerfile needs to use the same path before and after the build, something like this:

    <STAGE-1-BUILD>
    ...
    <STAGE-2-BUILD>
    ...
    # Set working directory (the same path the app was built in)
    WORKDIR /app-build

    # Set entrypoint, stop signal, and command
    ENTRYPOINT ["dumb-init", "--"]
    STOPSIGNAL SIGINT
    CMD [ "sh","./scripts/migrate-and-run.sh"]

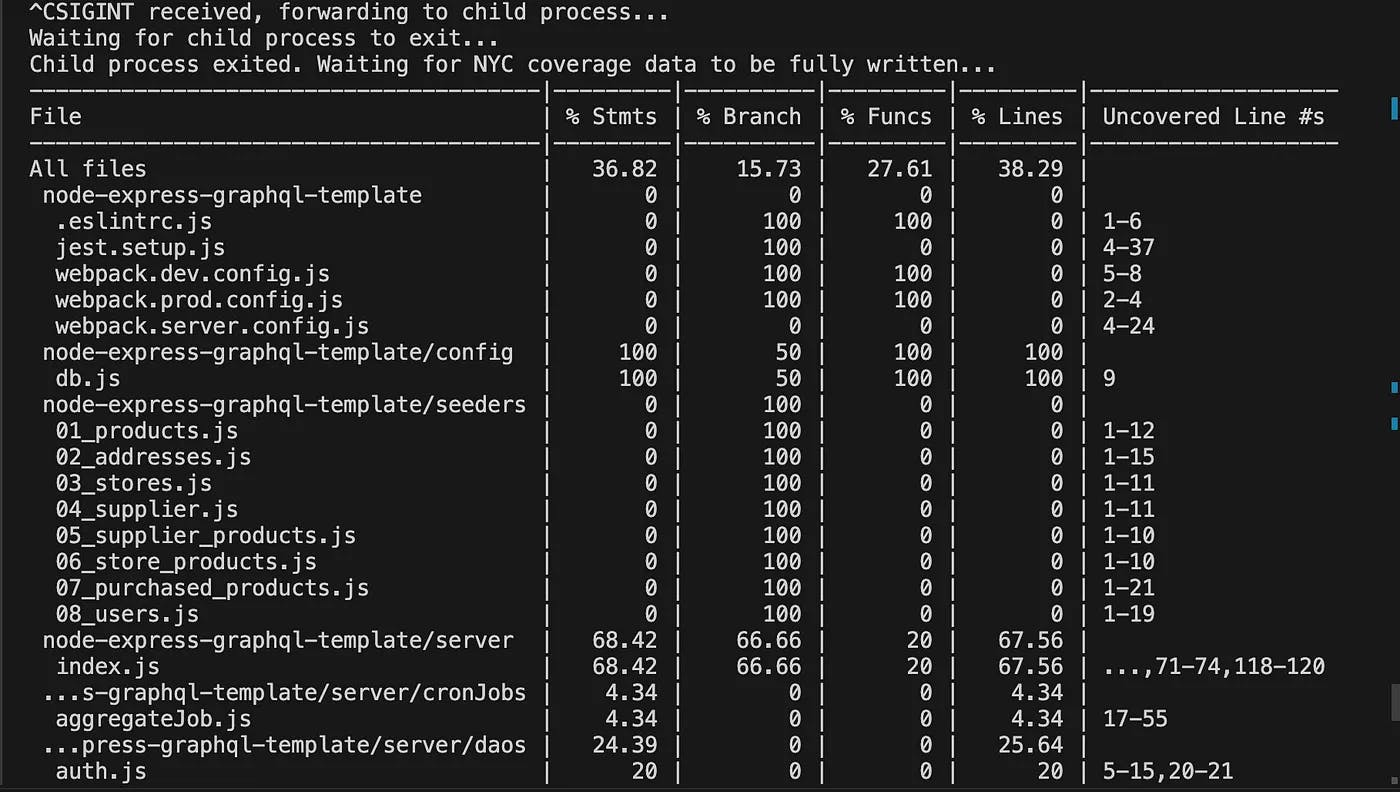

And our coverage in the docker container looks like this:

Let’s copy this data to the local machine and see what happens:

But this isn’t what I saw in the screenshot above. Why is the coverage zero?

The build and working directory inside the container isn’t the same as the path on my local machine, so NYC can’t map the data back to my source files and reports zero coverage. How do we solve it?

Keep the repository path, the build path, and the working directory the same. As you can see, I pass the path in as a build argument and build on top of it, so the context is always the same and NYC outputs correct data.

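    <STAGE-1-BUILD>
    # Declare the build argument so that --build-arg APP_PATH=... is visible here
    ARG APP_PATH
    ENV APP_PATH=${APP_PATH:-/default/path}
    ...
    <STAGE-2-BUILD>
    ...
    # Set working directory to the same path that was used for the build
    WORKDIR ${APP_PATH}

    # Set entrypoint, stop signal, and command
    ENTRYPOINT ["dumb-init", "--"]
    STOPSIGNAL SIGINT
    CMD [ "sh","./scripts/migrate-and-run.sh"]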

The command to build the docker image:

sudo -E docker build --build-arg ENVIRONMENT_NAME=docker --build-arg BUILD_NAME=docker --build-arg APP_PATH=$(pwd) -t custom_app .

As a result, when I copy the coverage data to my local machine, it gives me the desired output.
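One way to check for a path mismatch before generating a report is to look at the paths NYC recorded. The sketch below assumes that the raw files in .nyc_output are JSON objects whose entries carry a "path" field holding the absolute source path (the Istanbul coverage format). paths_outside_root is a hypothetical helper name, and the sample file and the /home/me/project root are made up for the demonstration.

```shell
#!/bin/sh
# Sketch: list recorded source paths that do not live under an expected root.
# usage: paths_outside_root <nyc_output_dir> <expected_root>
paths_outside_root() {
  cat "$1"/*.json 2>/dev/null |
    grep -o '"path": *"[^"]*"' |
    sed 's/^"path": *"//; s/"$//' |
    sort -u |
    while IFS= read -r p; do
      case "$p" in
        "$2"/*) ;;                  # recorded under the expected root
        *) printf '%s\n' "$p" ;;    # recorded somewhere else
      esac
    done
}

# Demonstration with a made-up coverage file recorded under /app-build.
demo=$(mktemp -d)
mkdir -p "$demo/.nyc_output"
printf '%s\n' '{"/app-build/dist/main.js":{"path":"/app-build/dist/main.js","s":{"0":1}}}' \
  > "$demo/.nyc_output/sample.json"

mismatched=$(paths_outside_root "$demo/.nyc_output" "/home/me/project")
matched=$(paths_outside_root "$demo/.nyc_output" "/app-build")

echo "outside /home/me/project: ${mismatched:-none}"
echo "outside /app-build: ${matched:-none}"
rm -rf "$demo"
```

On a real checkout this could be run as paths_outside_root .nyc_output "$(pwd)" after copying the data out of the container. Any path it prints was recorded at a location that does not exist under the local repository, which is the situation that produced the zero-coverage report.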

The Importance of the Script

In the script, we catch the signal and handle it. If that isn't done properly, we see something like this ~

What just happened here? When the program is stopped, NYC needs some time to write the final JSON file from memory to a local folder. The script below does not wait for this short delay, and that causes the error.

    #!/bin/sh

    # Function to handle the signal: forward it, then exit right away
    handle_int() {
        echo "SIGINT received, forwarding to child process..."
        kill -INT "$child" 2>/dev/null
        echo "Exiting after delay..."
        exit 0
    }

    # Trap SIGINT and SIGTERM
    trap 'handle_int' INT TERM

    sleep 10 # Ensure services like DB are up

    # Start yarn and get its PID
    yarn start &
    child=$!
    echo "Started yarn with PID $child"

    # Wait for child process to finish
    wait "$child"

    # Copy nyc output to host directory (optional, if you need it)

To fix this, update the handler function so that it waits for the child process to exit:

    #!/bin/sh

    # Function to handle the signal: forward it, wait for the child, then wait for NYC
    handle_int() {
        echo "SIGINT received, forwarding to child process..."
        kill -INT "$child" 2>/dev/null
        echo "Waiting for child process to exit..."
        wait "$child"
        echo "Child process exited. Waiting for NYC coverage data to be fully written..."
        sleep 10 # Give NYC 10 seconds to finish writing
        echo "Exiting after delay..."
        exit 0
    }

    # Trap SIGINT and SIGTERM
    trap 'handle_int' INT TERM

    sleep 10 # Ensure services like DB are up

    # Start yarn and get its PID
    yarn start &
    child=$!
    echo "Started yarn with PID $child"

    # Wait for child process to finish
    wait "$child"

    # Copy nyc output to host directory (optional, if you need it)
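The difference between the two handlers can be seen without Docker or NYC. The following is a rough sketch under stated assumptions: worker.sh is a hypothetical stand-in for yarn start that needs about a second after the signal to flush its data, wrapper.sh mimics the script above with a switch between the two handler styles, and SIGTERM is used instead of SIGINT because a non-interactive POSIX shell starts background jobs with SIGINT ignored.

```shell
#!/bin/sh
# Sketch: compare a handler that exits at once with one that waits for the child.
dir=$(mktemp -d)

# Hypothetical stand-in for `yarn start`: slow to flush its data after SIGTERM.
cat > "$dir/worker.sh" <<'EOF'
trap 'sleep 1; echo flushed > "$1"; exit 0' TERM
while :; do sleep 1; done
EOF

# Wrapper in the style of the script above; the second argument picks the handler.
cat > "$dir/wrapper.sh" <<'EOF'
out=$1 mode=$2
handle_term() {
  kill -TERM "$child" 2>/dev/null
  if [ "$mode" = wait ]; then wait "$child"; fi   # the step the first handler skips
  exit 0
}
trap handle_term TERM
sh "$(dirname "$0")/worker.sh" "$out" &
child=$!
wait "$child"
EOF

# Start the wrapper, stop it, and report whether the worker's file exists
# at the moment the wrapper exits.
run_case() {
  sh "$dir/wrapper.sh" "$dir/$1.out" "$1" >/dev/null 2>&1 &
  wrapper=$!
  sleep 1                       # let wrapper and worker start
  kill -TERM "$wrapper"         # stand-in for the container stop signal
  wait "$wrapper"
  if [ -s "$dir/$1.out" ]; then echo written; else echo lost; fi
}

naive_result=$(run_case naive)
wait_result=$(run_case wait)
echo "exit immediately: $naive_result"
echo "wait for child:   $wait_result"

sleep 3                         # let the orphaned worker from the first case finish
rm -rf "$dir"
```

In this sketch the orphaned worker still finishes a moment later because the host keeps it alive. Inside a container, the exit of PID 1 ends every remaining process, so the late write never happens and the coverage file is lost.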

How to Copy NYC Coverage Data from Docker Container?

Would a normal volume mount work? No, it won’t, because when you mount the directory, NYC clears the old files, and then you will find logs like this.

So, you can turn the cleaning off, like this:

"start": "nyc --clean=false --max-old-space-size=4096 --reporter=lcov --reporter=text node ./dist/main.js",

Alternatively, you can copy the files out manually with the docker cp command:

docker cp custom_apps:$(pwd)/.nyc_output $(pwd)/.nyc_output

Conclusion

- Passing SIGINT to Container and Its Sub-Processes: When running Node.js applications in Docker containers, it’s crucial to handle SIGINT signals correctly. Using a process manager like dumb-init ensures that SIGINT signals are forwarded to all child processes. This allows for a clean shutdown of the application, ensuring that all necessary cleanup tasks are performed.

- Setting the Correct Context of Location for NYC to Calculate Coverage: NYC, the code coverage tool for Node.js, relies on having a consistent file path for both the build and execution phases. When using multi-stage Docker builds, make sure that the paths used in each stage are identical. This prevents issues with NYC being unable to locate source files, which can result in inaccurate coverage reports.

- Adding Delay in Docker Stop to Ensure Data is Copied Out: Adding a delay when stopping a Docker container ensures that all data, such as NYC coverage reports, is fully written and can be copied out. This can be achieved by trapping SIGINT signals in the container’s entry script, forwarding the signal to child processes, and waiting for a short period before exiting. Keep the total shutdown time within Docker's stop grace period (10 seconds by default for docker stop, adjustable with -t), because Docker sends SIGKILL once it expires.

- If docker-compose manages the containers, make sure the Dockerfile includes the following line:

STOPSIGNAL SIGINT

This line makes Docker stop the container with an interrupt signal (SIGINT) instead of the default SIGTERM, so the container is interrupted rather than terminated.

Our final dockerfile looks something like this:

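    FROM node:14-alpine

    # Install dumb-init (not part of the base image; assumes the Alpine package)
    RUN apk add --no-cache dumb-init

    # Set working directory from the APP_PATH build argument
    ARG APP_PATH=/default/path
    WORKDIR ${APP_PATH}

    COPY . .

    # Set entrypoint, stop signal, and command
    ENTRYPOINT ["dumb-init", "--"]
    STOPSIGNAL SIGINT
    CMD [ "sh","./scripts/migrate-and-run.sh"]

    # Expose port 9000
    EXPOSE 9000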

FAQs

Why doesn’t my Docker container stop when I press Ctrl+C?

This often happens because the shell script running as PID 1 in the container doesn’t propagate the signal to child processes. The parent process terminates but leaves the child processes running, leading to orphaned or zombie processes. Using a process manager like dumb-init ensures proper signal forwarding and process management.

How do I ensure that NYC code coverage reports are generated correctly in Docker?

Ensure that the file paths in the Docker container are consistent between the build and runtime stages. NYC relies on file paths to map coverage data to source files. By keeping the paths consistent, NYC can accurately calculate coverage.

What is dumb-init, and why should I use it?

dumb-init is a simple process manager designed to run as PID 1 inside containers. It forwards signals to all child processes and reaps zombie processes. This ensures clean shutdowns for Node.js applications in Docker and that all cleanup tasks, including NYC coverage report generation, are completed before the container stops.

How do I prevent NYC from clearing coverage data when I stop a Docker container?

You can set the --clean=false flag in the NYC command to prevent it from clearing coverage data. Alternatively, you can manually copy the coverage files using Docker commands, ensuring that the coverage data is preserved.

What is the correct way to handle signals in Docker when using a shell script?

You should trap signals like SIGINT in your shell script and forward them to child processes. This can be done by using a signal handler that sends the signal to child processes and waits for them to finish before exiting. This ensures proper shutdown and coverage report generation.

How do I ensure that NYC coverage data is saved before a Docker container exits?

Add a small delay in your signal handler before the container shuts down to ensure all NYC coverage data is fully written. This delay gives NYC the time it needs to save data from memory to disk, preventing incomplete coverage reports.
