Explore my blog series on building a NUC cluster with Proxmox! Learn about connecting hosts, setting up tools, and avoiding pitfalls from my own build mistakes. Perfect for anyone keen on creating a home lab for testing attack paths and security tools. Dive in for practical insights!

I've written a lot before about building vulnerable environments to lab out attack paths, learn how tooling and techniques work and how to fix them up. I've also talked about the different attacks and tooling I've built in the past; however, I have been running labs on a single machine for the longest time.

Recently, I have invested time in building a NUC cluster with Proxmox. This multi-part blog series explores the different phases: connecting the hosts, installing Proxmox, and setting up various options and tooling. It's nothing ground-breaking, but it might give you some insight if you decide to do this yourself. It's also titled rebuilding because the initial build failed, and the main lesson I learned from that is to use the same SSD brand and model in every node when building a cluster, as mismatched drives run at different speeds.

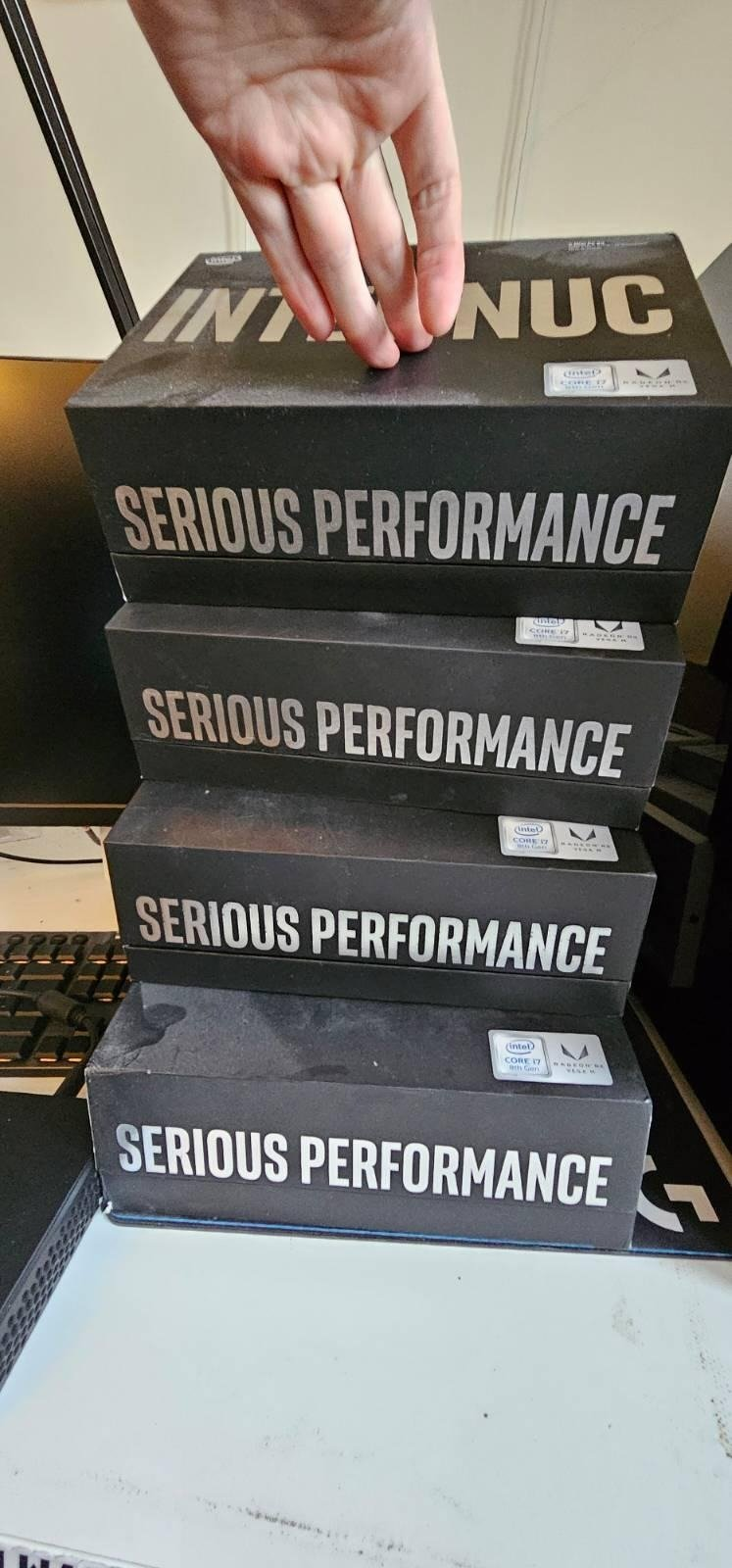

The Hardware

Before diving into the configuration, it is essential to highlight the hardware used here. I opted for Hades Canyon Intel NUCs for this build purely because I love the form factor and the ability to spec them up to strike a nice balance between performance and portability.

Each NUC is about the same size as a VHS tape (if you were born after the year 2000 and are reading this, this is what we used to watch movies and other content on before Netflix/Prime/Disney+/Plex/insert streaming service here). The specs of each are as follows:

- Intel Core i7-8705G, 4 cores / 8 threads (Hyper-Threading): decent for virtualisation and power

- 64GB RAM (https://amzn.to/4bpLskU): cheap enough to upgrade to, and maxes out the RAM

- 4TB SSD (2x2TB https://amzn.to/3W2vWqS): decent deals usually come in at around 110-125 per SSD. You could go 2x4TB if you wanted to, but these get expensive quickly.

- While not used in this build, the built-in 4GB Radeon RX Vega M GL can be helpful if you want to run a local Plex server, for example.

You can pick up a barebones Hades Canyon (search for the model NUC8i7HNK3) on eBay for about £200-300 these days and build it out to a decent spec, i.e. 64GB RAM and 4TB of SSDs, for about £400, so for ~£600 you can have yourself a decent system for a home lab.

Due to their size, the NUCs stack pretty nicely, too. I finally found a legitimate use for my label printer: labelling the node IDs on the stack (next, I will add the IPs so I know which is which).

In addition, I have 3x Thunderbolt 3 cables for connecting the stack together:

- https://amzn.to/3xFbd2O - Available here; any will do, but this one is short and easy for cable management.

I'm not going to walk you through how to set up the hardware as it's pretty straightforward with a few screws on the top panel, and then you have access to the RAM and SSDs.

Side Note: If you want to run all of this on one host, a friend of mine recently bought an MS-01 and runs it with 96GB RAM and decent SSDs. They're a little more expensive, but still worth a look if you want something more modern; if you can stretch to it, I recommend the 13th-gen i9 for maximum CPU capacity and 96GB RAM support, as it's a well-rounded package.

(Optional) Before we dive into the Proxmox setup, if you want to cluster three NUCs together in a setup similar to mine above, you can use the following cluster setup with Thunderbolt 3 cables:

- Connect all your NUCs to power in the standard way

- Disable Secure Boot in the BIOS on all nodes; you can access the BIOS by pressing the Del key on boot.

- Connect all three TB3 cables in a ring topology. Using the port numbers printed on the NUC cases, connect the cables as follows (this is important):

- node 1 port 1 > node 2 port 2

- node 2 port 1 > node 3 port 2

- node 3 port 1 > node 1 port 2

- Prepare a bootable USB key by downloading the Proxmox ISO and writing it with a tool such as Rufus. The latest version of Proxmox can be found here: https://www.proxmox.com/en/downloads/proxmox-virtual-environment/iso. At the time of writing, I'm running Proxmox VE 8.2.4.
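The ring rule above (port 1 of each node into port 2 of the next, with the last node wrapping back round to node 1) generalises to any number of nodes. As a quick sketch, the bash function below prints the cabling plan, which is handy when labelling cables; the name ring_plan is my own, and it assumes each node has two Thunderbolt ports numbered 1 and 2 as above.

```bash
#!/usr/bin/env bash
# Print the Thunderbolt ring cabling plan for an N-node cluster.
# Port 1 of node i goes to port 2 of the next node; the last node
# wraps around to node 1, which closes the ring.
ring_plan() {
  local nodes="$1" i next
  for ((i = 1; i <= nodes; i++)); do
    next=$(( i % nodes + 1 ))
    printf 'node %d port 1 > node %d port 2\n' "$i" "$next"
  done
}
```

Running ring_plan 3 prints the same three connections as the list above.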

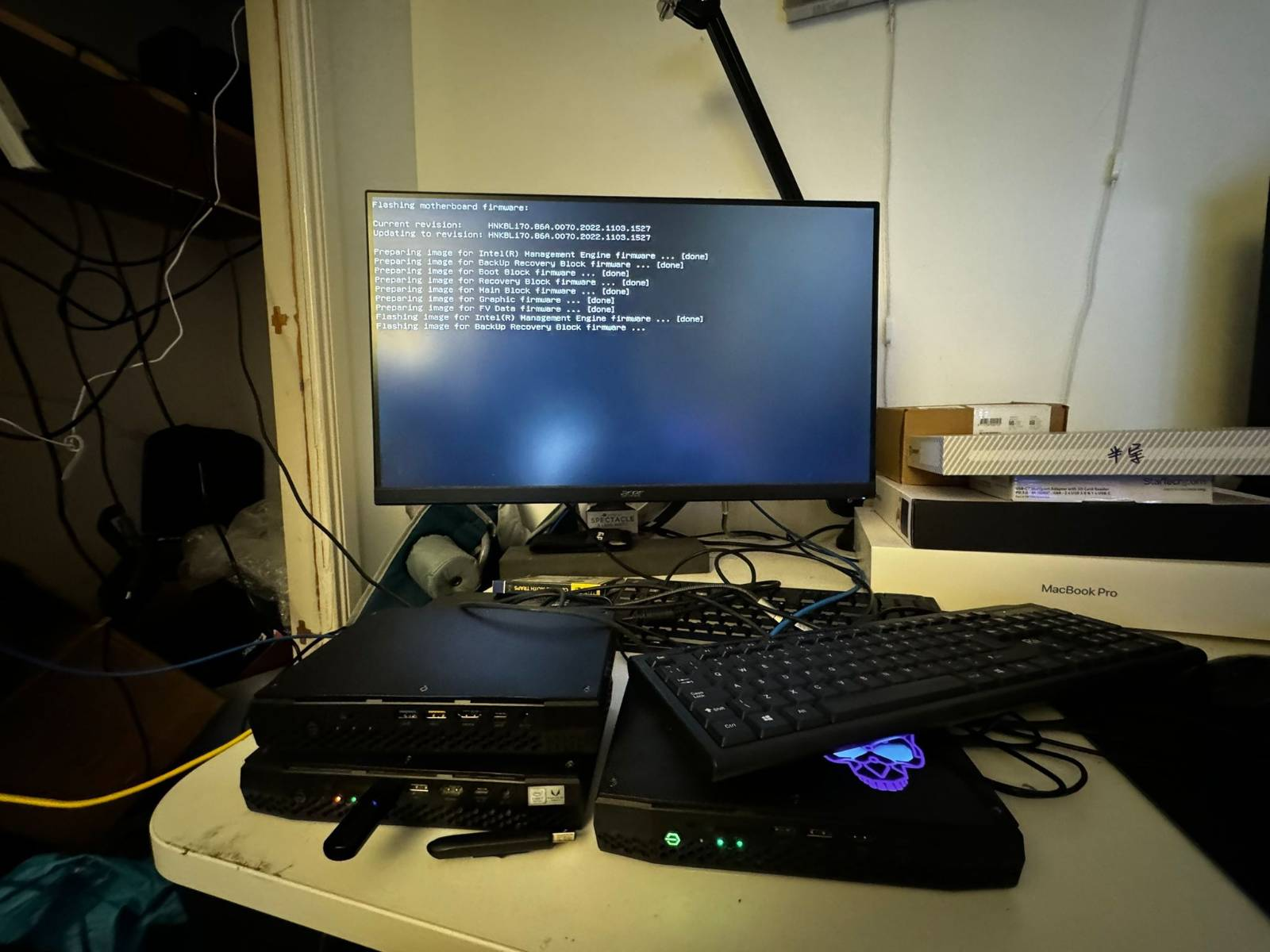

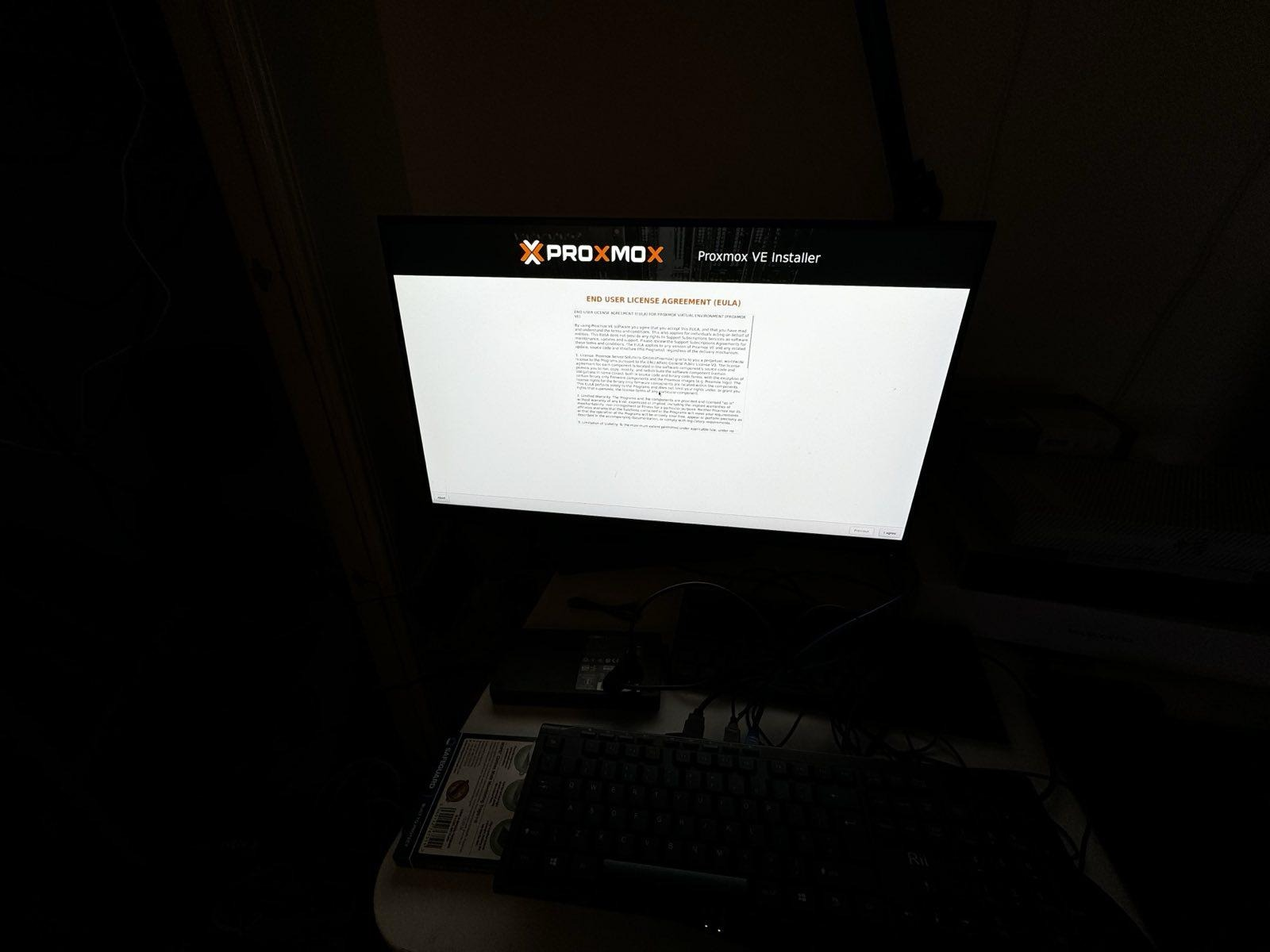
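Before writing the ISO to a USB key, it's worth checking it against the SHA256 checksum listed on the Proxmox download page. A minimal bash sketch, assuming a Linux machine with sha256sum available (verify_iso is a hypothetical helper name); the commented dd line is one alternative to Rufus on Linux, with /dev/sdX as a placeholder for your USB device.

```bash
#!/usr/bin/env bash
# Compare a downloaded ISO's SHA256 with the value published by Proxmox.
# Usage: verify_iso <path-to-iso> <expected-sha256>
# Returns 0 on match, 1 on mismatch, 2 if the file is missing.
verify_iso() {
  local iso="$1" expected="$2" actual
  [ -f "$iso" ] || { echo "missing file: $iso" >&2; return 2; }
  actual=$(sha256sum "$iso" | awk '{print $1}')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $iso"
  else
    echo "MISMATCH: $iso (got $actual)" >&2
    return 1
  fi
}

# Writing the ISO on Linux instead of Rufus. This ERASES the target device,
# so confirm the right one with lsblk first; /dev/sdX is a placeholder.
# sudo dd if=proxmox-ve.iso of=/dev/sdX bs=1M conv=fdatasync status=progress
```

For example, verify_iso ~/Downloads/proxmox-ve.iso followed by the hash from the download page; the filename here is illustrative.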

Installing Proxmox

Before booting into your USB installer, I recommend keeping an Ethernet cable connected. If you are building a cluster, leave Thunderbolt out of it for the moment.

- Boot from the USB you created and set up Proxmox just as you would any other Linux install.

- Key things to note are the password you create and the IP range for the management IP, as you'll need both of these to access your system once it's set up.

- Set country, keyboard, and general settings

- Choose enp86s0 as the management interface (the interface name may differ on your hardware; on Proxmox 8 you will also see the Thunderbolt network if the cables are connected). My advice is to set up each NUC without Thunderbolt first, then add it later.

- Set a name for each node. I used pveX, where X is the node number I wanted in this setup, but you can use whatever hostnames you want. Just note them down, as you'll need them to tell the nodes apart when/if you cluster them.

Once you have set up your hostnames, the next step is to set a fixed IPv4 address for the management network. Again, this can be whatever you want, but something easy to remember helps:

- I opted for 10.10.53.x as my home network uses several sub-subnets within the 10.x range :)

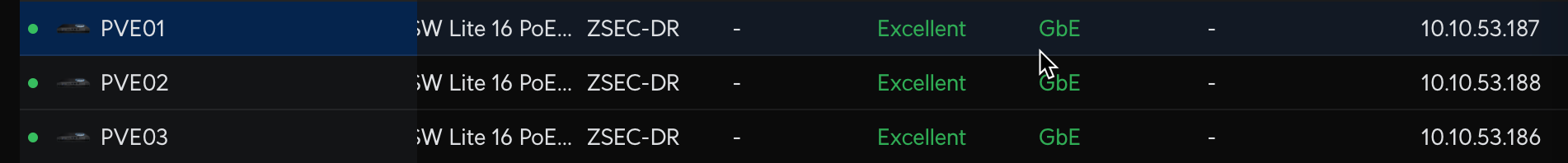

- 10.10.53.186/24 for node 1

- 10.10.53.187/24 for node 2

- 10.10.53.188/24 for node 3

- Set an external DNS server and gateway; if you already have a pi-hole or AdGuard set up, feel free to use these, too.

- Stick with the default partitioning unless you want to get fancy, and let the installer partition the disk as it sees fit.

- Finally, review the summary page, make sure you are happy with everything, and remove the USB and reboot when prompted.
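If you want the nodes to resolve each other by name, you can generate /etc/hosts entries from the naming scheme above. This is a sketch assuming the pveX hostnames and the 10.10.53.186-188 addresses from this build; the function names are hypothetical, and the entries carry no domain suffix, so add your FQDN if you set one during install.

```bash
#!/usr/bin/env bash
# Build /etc/hosts lines for the cluster nodes.
# Assumes node N is named pveN and lives at 10.10.53.(185 + N).
BASE_NET="10.10.53"
FIRST_HOST_OCTET=186   # last octet of node 1

node_hosts_entry() {
  local n="$1"
  printf '%s.%d pve%d\n' "$BASE_NET" $(( FIRST_HOST_OCTET + n - 1 )) "$n"
}

# Print entries for nodes 1..count (defaults to 3).
cluster_hosts() {
  local count="${1:-3}" n
  for ((n = 1; n <= count; n++)); do
    node_hosts_entry "$n"
  done
}
```

The installer already writes each node's own entry, so on each node you'd append only the others, e.g. as root: cluster_hosts | grep -v " $(hostname)$" >> /etc/hosts.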

Across my three hosts, the above took around 15 minutes per node. A faster or slower SSD will change that, but 15 minutes is a good benchmark.

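Once installed and booted, you should be able to access the web interface over HTTPS on port 8006 at each node's IP address, e.g. https://10.10.53.186:8006 for node 1. Your browser will warn about the self-signed certificate, which is expected on a fresh install.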

This will prompt you to log in. Your username will be root, and your password will be whatever you set on install.
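To confirm all three web UIs are up without opening three browser tabs, here's a small bash sketch (pve_url and check_pve are hypothetical names) that requests each node's login page with curl. The -k flag skips certificate verification because of the self-signed certificate, and it assumes a healthy UI answers with HTTP 200.

```bash
#!/usr/bin/env bash
# Build the web UI URL for a node (Proxmox serves HTTPS on port 8006).
pve_url() {
  printf 'https://%s:8006/\n' "$1"
}

# Request the login page; -k accepts the self-signed certificate,
# --max-time stops a dead node from hanging the check.
check_pve() {
  local ip="$1" code
  code=$(curl -ks -o /dev/null -w '%{http_code}' --max-time 5 "$(pve_url "$ip")")
  if [ "$code" = "200" ]; then
    echo "$ip: web UI up"
  else
    echo "$ip: no response (HTTP ${code:-none})" >&2
    return 1
  fi
}
```

Usage: for ip in 10.10.53.186 10.10.53.187 10.10.53.188; do check_pve "$ip"; done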

Configure Proxmox

Now that you've set up your host(s), the next step is to configure Proxmox. You can do this through the GUI or over SSH; I prefer SSH as it's easier to tweak files and configure paths.

From an SSH terminal, we will tweak the sources.list file so that apt uses the no-subscription repository for updates. To do this, open /etc/apt/sources.list in your favourite text editor (for me, it's nano, because it is the best text editor) and edit it to match the following:

```
deb http://ftp.debian.org/debian bookworm main contrib
deb http://ftp.debian.org/debian bookworm-updates main contrib

# Proxmox VE pve-no-subscription repository provided by proxmox.com,
# NOT recommended for production use
deb http://download.proxmox.com/debian/pve bookworm pve-no-subscription

# security updates
deb http://security.debian.org/debian-security bookworm-security main contrib
```

Once done, we also need to tweak the other two files in /etc/apt/sources.list.d.

Starting with ceph.list, comment out the existing entry and replace it with:

```
deb http://download.proxmox.com/debian/ceph-reef bookworm no-subscription
```

Finally, remove or comment out the entry in /etc/apt/sources.list.d/pve-enterprise.list.

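Then, as the root user, run apt update && apt full-upgrade and press Y to accept the packages that need updating. Proxmox's documentation recommends full-upgrade (or dist-upgrade) over plain apt upgrade, since the latter won't install new dependencies or remove obsolete ones that a Proxmox update may require.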
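If you have several nodes to configure, the repository edits above can be scripted. A sketch of that, assuming Proxmox VE 8 on Debian bookworm and GNU sed; configure_no_sub_repos is a hypothetical name, it takes an optional root directory so you can preview the result in a scratch folder first, and unlike the manual steps it overwrites ceph.list rather than leaving the old line commented out.

```bash
#!/usr/bin/env bash
# Point a Proxmox VE 8 (bookworm) node at the no-subscription repositories.
# Optional $1 is a root directory (default /) so the result can be previewed.
configure_no_sub_repos() {
  local root="${1:-/}"
  local apt_dir="${root%/}/etc/apt"
  mkdir -p "$apt_dir/sources.list.d"

  # Debian base, updates, security, plus the Proxmox no-subscription repo.
  cat > "$apt_dir/sources.list" <<'EOF'
deb http://ftp.debian.org/debian bookworm main contrib
deb http://ftp.debian.org/debian bookworm-updates main contrib

# Proxmox VE pve-no-subscription repository provided by proxmox.com,
# NOT recommended for production use
deb http://download.proxmox.com/debian/pve bookworm pve-no-subscription

# security updates
deb http://security.debian.org/debian-security bookworm-security main contrib
EOF

  # Ceph Reef without a subscription (replaces the file's contents).
  echo 'deb http://download.proxmox.com/debian/ceph-reef bookworm no-subscription' \
    > "$apt_dir/sources.list.d/ceph.list"

  # Comment out active lines in the enterprise list; safe to re-run.
  local ent="$apt_dir/sources.list.d/pve-enterprise.list"
  if [ -f "$ent" ]; then
    sed -i 's/^deb /# deb /' "$ent"
  fi
}
```

On a node, run configure_no_sub_repos as root, followed by the apt update and upgrade step above. To preview first, run configure_no_sub_repos /tmp/preview and inspect /tmp/preview/etc/apt.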

(Optional) Configuring and Building A Cluster

While this post covers getting set up with Proxmox and building a cluster, you might not have multiple hosts; if you'd rather get straight to the details of setting up the hosts, feel free to skip this section.

To save re-inventing the whole wheel, I followed a very detailed guide that can be found here:

https://gist.github.com/scyto/76e94832927a89d977ea989da157e9dc

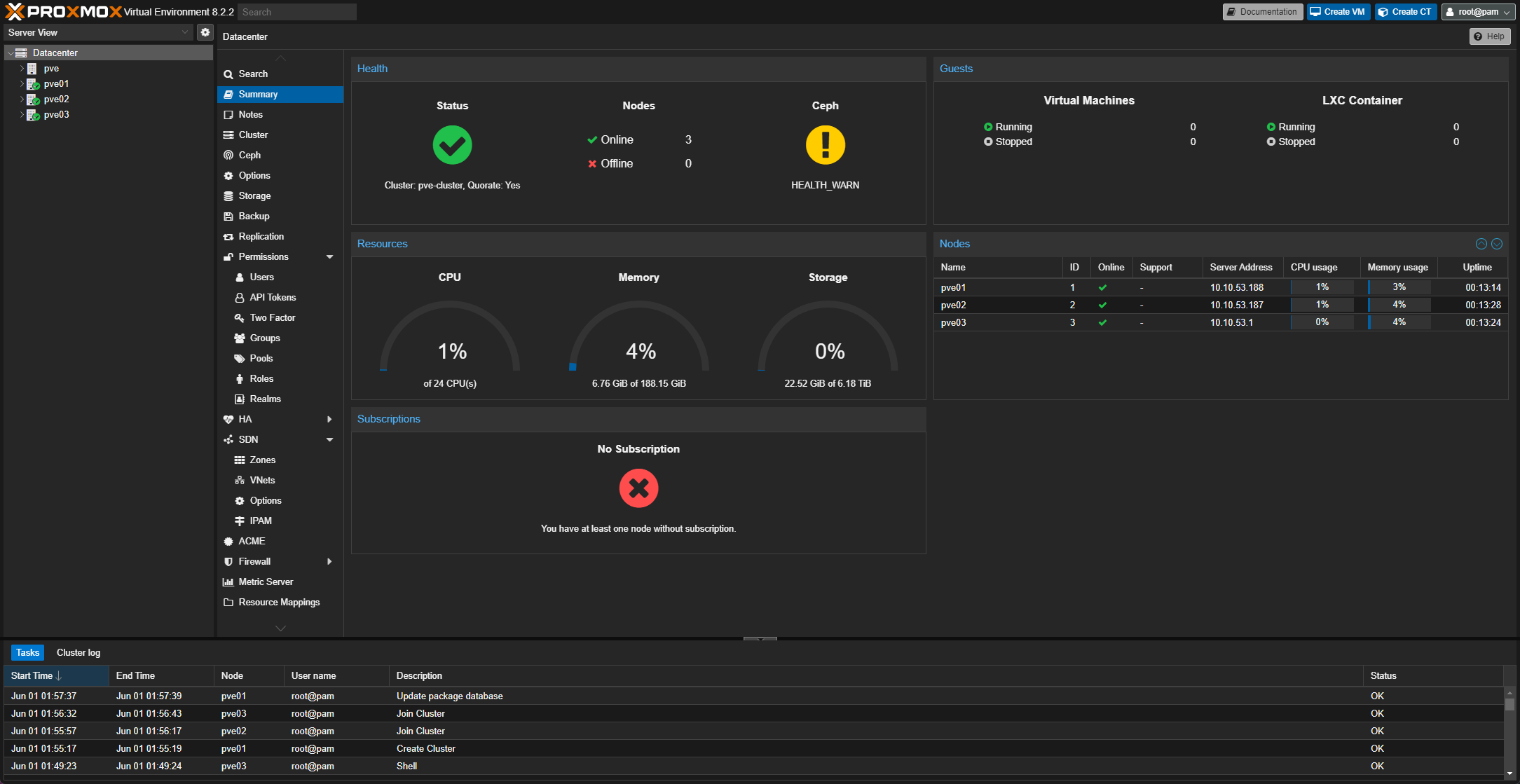

The gist walks through every essential step of configuring Thunderbolt networking across a cluster. Once it's built, you have a high-availability cluster with shared resources, like mine below:
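If you prefer the CLI for the clustering step itself, Proxmox's pvecm tool handles it (the web UI equivalent is under Datacenter -> Cluster). A hedged sketch, assuming node 1 at 10.10.53.186 creates the cluster and the other two join it over the management network; the cluster name homelab is hypothetical, and setting DRY_RUN=1 prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Run on pve1: create the cluster ("homelab" is a placeholder name).
create_cluster() {
  run pvecm create "${1:-homelab}"
}

# Run on pve2 and pve3: join the cluster via node 1's address.
join_cluster() {
  run pvecm add "${1:-10.10.53.186}"
}
```

Run create_cluster on pve1, then join_cluster on pve2 and pve3, and check membership with pvecm status.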

Onward to setting up the different nodes and their functions:

- PVE01 = DockerHost with various images, including Gitea, AdGuard, Portainer, Jenkins and other tools.

- PVE02 = http://Ludus.cloud

- PVE03 = Media Stack with Plex

Setting up Docker VM Host

I chose Ubuntu Server as my baseline for my Docker host, but you can run whatever you fancy. The setup instructions below detail how to get Docker working on Ubuntu (as taken from Docker's site).

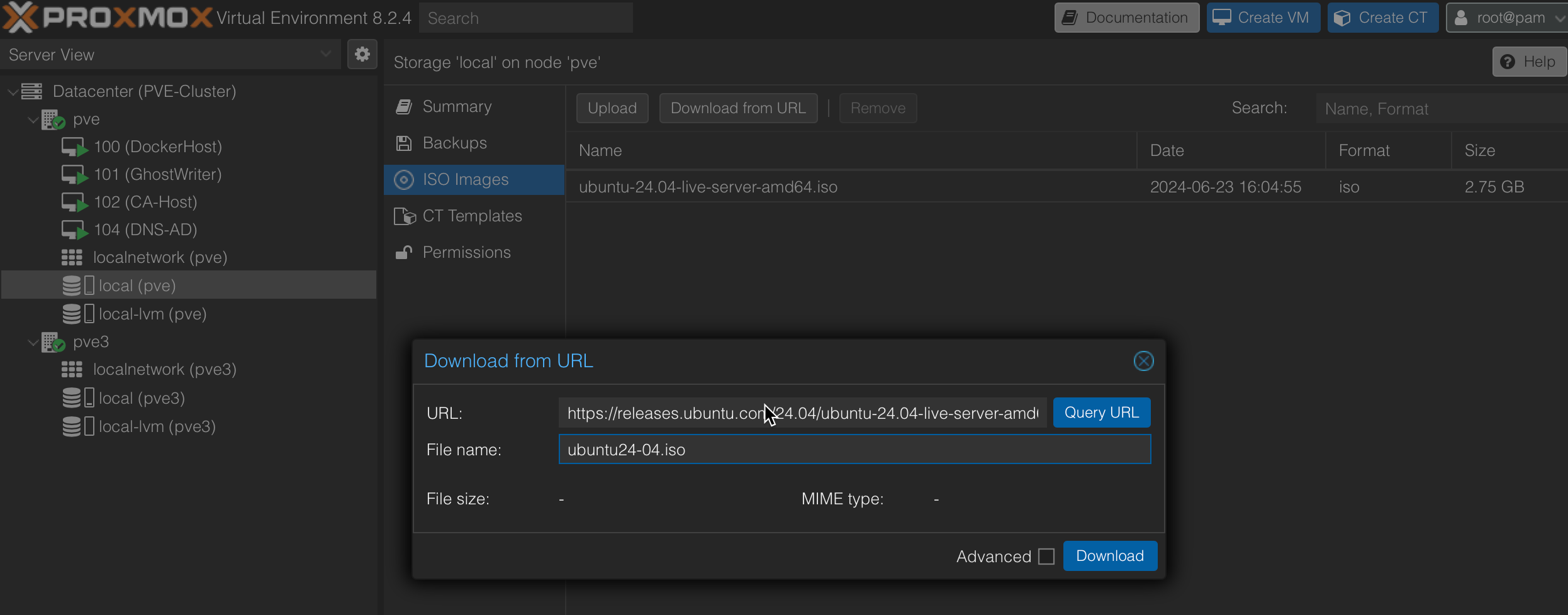

First things first, we need to get the ISO onto our Proxmox host. You can upload the ISO, or download it directly to the host by navigating to local -> ISO Images -> Download from URL. Note that local may be named differently in your setup.

Supply the URL; in this case, the direct download link for Ubuntu 24.04 can be found here (https://ubuntu.com/download/server). Copy the direct link into the URL box, name the ISO whatever you want in the 'File Name' box, and click Download; this downloads the ISO directly to your host.

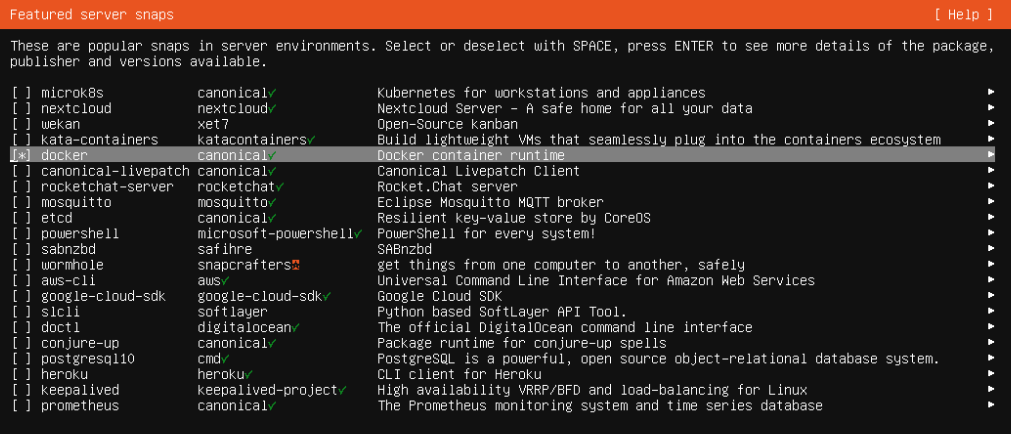
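This post creates the Docker VM through the web UI. If you'd rather do it from the Proxmox shell, qm create can do the same. A sketch under some assumptions: VM ID 100, the name docker01, the CPU/RAM/disk sizing and the ISO filename are all hypothetical, and local-lvm is the default VM storage on an ext4/LVM install (it may be local-zfs or something else on yours). DRY_RUN=1 prints the command instead of running it.

```bash
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Create an Ubuntu Server VM for the Docker host.
# $1 = VM ID (default 100), $2 = ISO filename in the "local" storage.
create_docker_vm() {
  local vmid="${1:-100}"
  local iso="${2:-ubuntu-24.04-live-server-amd64.iso}"
  run qm create "$vmid" \
    --name docker01 \
    --ostype l26 \
    --cores 4 --memory 8192 \
    --scsihw virtio-scsi-pci --scsi0 local-lvm:64 \
    --ide2 "local:iso/$iso,media=cdrom" \
    --net0 virtio,bridge=vmbr0 \
    --boot 'order=scsi0;ide2'
}
```

Here --memory is in MiB and local-lvm:64 allocates a new 64GiB disk. Once created, start it with qm start 100 and open the console from the web UI to run the installer.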

Install Ubuntu and follow the various steps until you reach package selection; make sure you select Install OpenSSH server, pick docker from the packages list, then choose install. The process takes a few minutes, so make a pot of tea or whatever else you fancy. Once it's done, SSH into the host and run the following to get Docker up and running on Ubuntu Server:

```bash
for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done

# Add Docker's official GPG key:
sudo apt-get update
sudo apt-get install ca-certificates curl -y
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y
```

The script above can be copied and pasted into your terminal or saved to a .sh file. Or, if you're feeling lazy, you can run this one-liner to pull the script and execute it in your shell; it will prompt for your sudo password because of the commands above:

```bash
curl https://gist.githubusercontent.com/ZephrFish/e313e9465a0445b7e4df6f842adda788/raw/2328a828b97b7eadecdd456cb10f48730711e785/DockerSetupUbunut.sh | sh
```

Once finished, the script should have installed Docker on your host (hopefully!). In the next few subsections, you can move on to adding Docker images.
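Before moving on, it's worth confirming the install actually worked. A minimal sketch (verify_docker is a hypothetical helper) that checks the docker CLI and the compose plugin are present, then runs Docker's hello-world test image, which needs internet access to pull.

```bash
#!/usr/bin/env bash
# Post-install sanity checks for the Docker host.
verify_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found" >&2
    return 1
  fi
  # docker-compose-plugin provides the "docker compose" subcommand.
  if ! docker compose version >/dev/null 2>&1; then
    echo "docker compose plugin not found" >&2
    return 1
  fi
  # Pull and run the test image; --rm deletes the container afterwards.
  sudo docker run --rm hello-world
}
```

If you'd rather not prefix every command with sudo, Docker's post-install docs describe adding your user to the docker group (sudo usermod -aG docker $USER, then log out and back in); bear in mind that membership is effectively root-equivalent on the host.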

Portainer

Portainer is a lightweight management UI that allows you to easily manage Docker environments (hosts or Swarm clusters). It provides a decent web interface for managing containers, images, networks, and volumes, making it much easier for those who prefer not to manage Docker solely via CLI.

The command below deploys the Portainer image, maps its ports and mounts the Docker socket:

```bash
docker run -d --name=portainer \
  -p 8000:8000 \
  -p 9000:9000 \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v /volume1/docker/portainer:/data \
  --restart=always \
  portainer/portainer-ce
```

- The -p flags map the container ports to your host, allowing you to access the Portainer UI on http://<your-host-ip>:9000 (port 8000 is used by Portainer's Edge Agent tunnel).

- The -v flags are used for volume mapping:
  - The first, -v /var/run/docker.sock:/var/run/docker.sock, allows Portainer to manage Docker on the host by connecting to the Docker socket.
  - The second, -v /volume1/docker/portainer:/data, is where Portainer stores its data. You can change /volume1/docker/portainer to any directory on your local system where you'd prefer to store Portainer's data, keeping it persistent across container restarts.

After logging in, Portainer will ask you to add a Docker environment. We can select the local environment option as we are running it locally. You can use an agent or specify remote Docker endpoints for remote environments.
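One gotcha with docker run: it won't start a second container with the same --name, so re-running the command above to change an option or upgrade fails until the old container is removed. A sketch of a repeatable redeploy using the same options; deploy_portainer is a hypothetical name, DRY_RUN=1 prints the commands instead of running them, and the data directory may need sudo to create.

```bash
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# (Re)deploy Portainer. Pull first so a failed download leaves the old
# container running; then remove it (ignoring "no such container").
deploy_portainer() {
  local data_dir="${1:-/volume1/docker/portainer}"
  run mkdir -p "$data_dir"
  run docker pull portainer/portainer-ce
  run docker rm -f portainer 2>/dev/null || true
  run docker run -d --name=portainer \
    -p 8000:8000 -p 9000:9000 \
    -v /var/run/docker.sock:/var/run/docker.sock \
    -v "$data_dir":/data \
    --restart=always \
    portainer/portainer-ce
}
```

Because Portainer's data lives in the bind-mounted directory on the host, removing and recreating the container keeps your settings.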

Watchtower

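This has to be my favourite application in the Docker ecosystem. Watchtower keeps your containers up to date: through the Docker socket, it watches your running containers, pulls newer versions of their images when available, and restarts each container with the updated image.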

```bash
docker run -d --name=watchtower \
  -v /var/run/docker.sock:/var/run/docker.sock \
  --restart=always \
  containrrr/watchtower --cleanup
```

- The -v /var/run/docker.sock:/var/run/docker.sock flag mounts the Docker socket, allowing Watchtower to interact with and manage your containers.

- The --restart=always option ensures that Watchtower restarts automatically if the container stops or the host reboots.

- The --cleanup flag removes old images after updates, freeing up disk space.

Once deployed, Watchtower begins monitoring your running containers based on the configuration provided. It checks for updates once every 24 hours by default, but you can adjust this with the WATCHTOWER_POLL_INTERVAL environment variable (in seconds).
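Since WATCHTOWER_POLL_INTERVAL is given in seconds, it's easy to slip up on the arithmetic. A sketch that takes hours instead and passes the converted value as an environment variable; deploy_watchtower is a hypothetical name, and DRY_RUN=1 prints the command rather than running it.

```bash
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Run Watchtower, checking for updates every $1 hours (default 24,
# which matches Watchtower's own default of 86400 seconds).
deploy_watchtower() {
  local hours="${1:-24}"
  local seconds=$(( hours * 3600 ))
  run docker run -d --name=watchtower \
    -v /var/run/docker.sock:/var/run/docker.sock \
    -e WATCHTOWER_POLL_INTERVAL="$seconds" \
    --restart=always \
    containrrr/watchtower --cleanup
}
```

For example, deploy_watchtower 12 checks twice a day (43200 seconds). Remove any existing watchtower container first, as docker run won't reuse a name.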

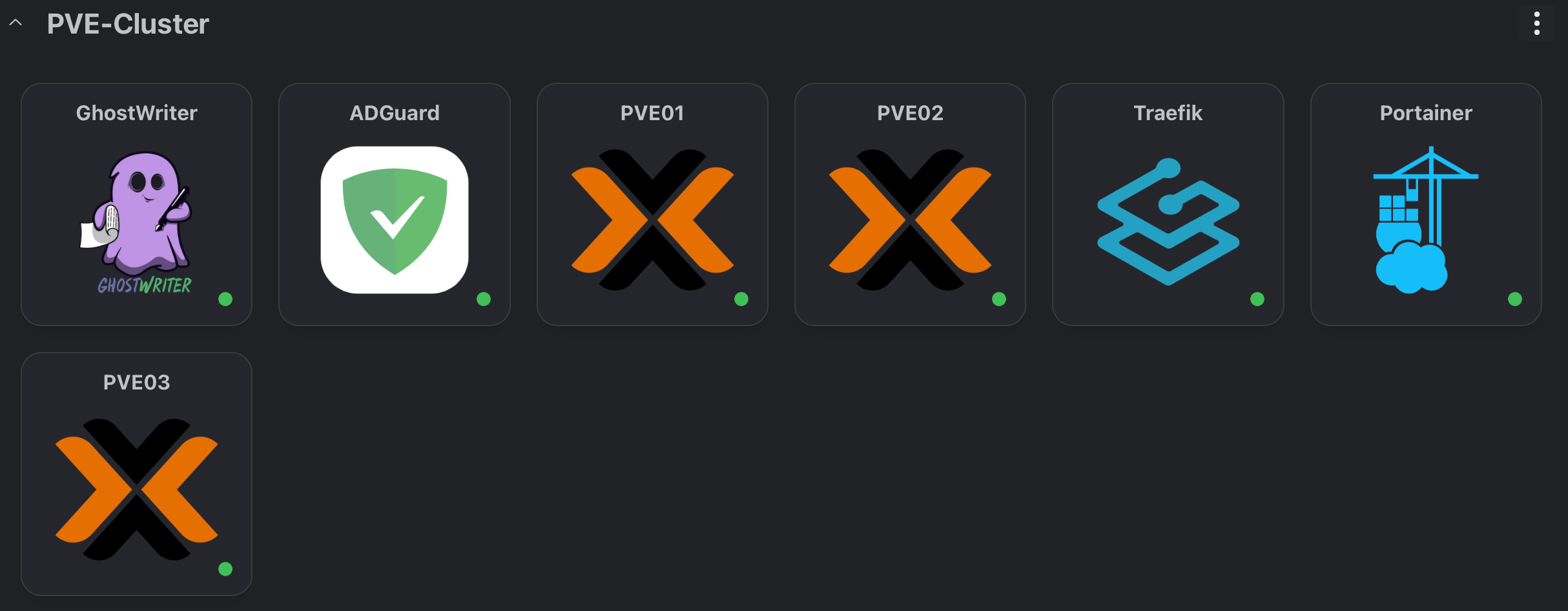

Homarr

Homarr is a central dashboard aggregating and managing your Docker applications, especially those running on different web ports. It provides a clean, custom interface to monitor and access various services from a single pane of glass, making it easier to manage your Docker environment.

```bash
docker run -d --name=homarr \
  -p 80:7575 \
  -v /volume1/docker/homarr:/app/data/configs \
  -v /volume1/docker/homarr/icons:/app/public/icons \
  -v /volume1/docker/homarr/data:/data \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -e TZ=Europe/London \
  --restart always \
  ghcr.io/ajnart/homarr:latest
```

- Ports: The -p 80:7575 flag maps port 7575 (the port Homarr listens on inside the container) to port 80 on your host, allowing you to access the dashboard via http://<your-host-ip>:80.

- Volumes: The -v flags map local directories to container paths:
  - /volume1/docker/homarr:/app/data/configs is where Homarr stores its configuration files.
  - /volume1/docker/homarr/icons:/app/public/icons stores custom icons for your applications.
  - /volume1/docker/homarr/data:/data is used for additional data.

- Docker Socket: The -v /var/run/docker.sock:/var/run/docker.sock flag allows Homarr to communicate with the Docker daemon, so it can monitor and manage your containers directly from the dashboard.

- Timezone: Set your local timezone with the -e TZ=Europe/London environment variable so that timestamps are correct.

- Configuring Homarr:
  - Adding Services: Homarr lets you specify service names, URLs, and ports.
  - Custom Icons and Shortcuts: Customise each service with an icon for easy identification, either uploading your own or using those from built-in libraries, and map service shortcuts to quickly jump between commonly used apps.
  - Widgets: Homarr supports widgets for added functionality, such as weather updates, news feeds, and system stats. Widgets can be placed on the dashboard for at-a-glance information relevant to your environment.

- Managing Docker Containers: Homarr integrates with Docker through the mounted Docker socket, providing a GUI to:
  - Start, Stop, and Restart Containers: manage your containers directly from the Homarr interface without CLI commands.
  - Monitor Container Health: get real-time status updates and resource usage metrics (CPU, memory, etc.) for each container.
  - View Logs: access container logs to monitor application performance and troubleshoot issues directly from the dashboard.

- Customising the Dashboard:
  - Layouts: Homarr offers flexible layout options, allowing you to arrange services and widgets as needed.
  - Themes: choose from various themes to match your aesthetic preferences or your dark/light mode settings.

- Access Controls: Implement basic access controls to secure your dashboard, ensuring only authorised users can make changes.

- Webhooks and API Integrations: Homarr can integrate with various APIs, allowing you to extend its functionality further with custom integrations or automate tasks via webhooks.
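With three containers deployed via separate docker run commands, it can be tidier to capture the stack in a single Compose file. A sketch that writes one, mirroring the options above; write_stack_compose is a hypothetical name, and note the Homarr mapping uses 7575 on the container side, which is the port the Homarr image listens on by default.

```bash
#!/usr/bin/env bash
# Write a docker-compose.yml equivalent to the Portainer, Watchtower and
# Homarr "docker run" commands. $1 is the target directory (default .).
write_stack_compose() {
  local dir="${1:-.}"
  mkdir -p "$dir"
  cat > "$dir/docker-compose.yml" <<'EOF'
services:
  portainer:
    image: portainer/portainer-ce
    container_name: portainer
    ports:
      - "8000:8000"
      - "9000:9000"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /volume1/docker/portainer:/data
    restart: always

  watchtower:
    image: containrrr/watchtower
    container_name: watchtower
    command: --cleanup
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    restart: always

  homarr:
    image: ghcr.io/ajnart/homarr:latest
    container_name: homarr
    ports:
      - "80:7575"
    volumes:
      - /volume1/docker/homarr:/app/data/configs
      - /volume1/docker/homarr/icons:/app/public/icons
      - /volume1/docker/homarr/data:/data
      - /var/run/docker.sock:/var/run/docker.sock
    environment:
      - TZ=Europe/London
    restart: always
EOF
}
```

Usage: write_stack_compose ~/stack && cd ~/stack, then docker compose config to validate the file and docker compose up -d to start everything. Remove the containers you started with docker run first, otherwise the container names will clash.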

Next Up

To wrap up this first part of the series, stay tuned for Part 2, where we'll dive into setting up Ludus, installing SCCM and AD labs, and exploring how to distribute the workload across various nodes in the cluster. In Part 3, we'll focus on building a home media stack using Plex, Overseerr, and other goodies.